A checksum is a unique value generated from a data set using algorithms like CRC or MD5 to ensure its integrity during transmission or storage. It detects errors by comparing the checksum before and after data transfer, safeguarding your information against corruption. Explore the rest of this article to understand how checksums enhance data security and their practical applications.

Table of Comparison

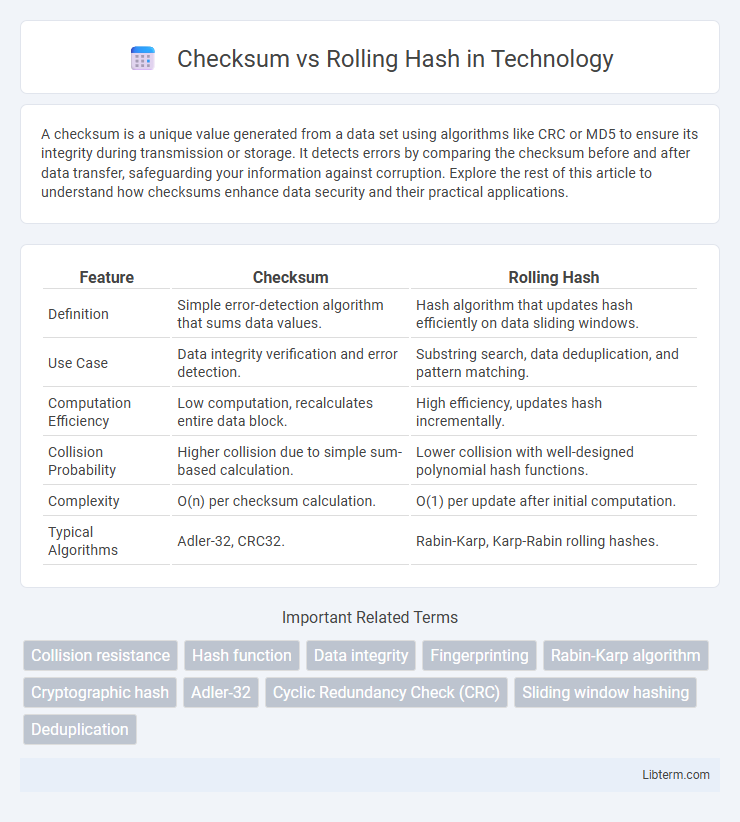

| Feature | Checksum | Rolling Hash |

|---|---|---|

| Definition | Simple error-detection algorithm that sums data values. | Hash algorithm that updates hash efficiently on data sliding windows. |

| Use Case | Data integrity verification and error detection. | Substring search, data deduplication, and pattern matching. |

| Computation Efficiency | Low computation, recalculates entire data block. | High efficiency, updates hash incrementally. |

| Collision Probability | Higher collision due to simple sum-based calculation. | Lower collision with well-designed polynomial hash functions. |

| Complexity | O(n) per checksum calculation. | O(1) per update after initial computation. |

| Typical Algorithms | Adler-32, CRC32. | Rabin-Karp, Karp-Rabin rolling hashes. |

Understanding Checksums: Definition and Purpose

Checksums are simple data verification tools that compute a fixed-size string of bits from variable data, primarily used to detect errors in data storage or transmission. They serve the essential purpose of ensuring data integrity by allowing systems to verify that the original data has not been altered or corrupted. Unlike rolling hashes, which are designed for efficient incremental updates in sliding windows of data, checksums provide a straightforward, static verification mechanism.

What is a Rolling Hash? Core Concept Explained

A rolling hash is a specialized hash function designed to efficiently compute hash values of substrings within a sliding window, enabling rapid updates by removing the leading character and adding the trailing character. Unlike traditional checksums that compute a fixed hash for entire data blocks, rolling hashes support constant-time hash recalculations, crucial for algorithms like Rabin-Karp in string matching and data deduplication. This core concept leverages modular arithmetic and polynomial accumulation to maintain hash consistency while enabling swift incremental updates across dynamic data segments.

Key Differences Between Checksum and Rolling Hash

Checksums generate a fixed-size value from a data set primarily to detect errors, using straightforward algorithms like CRC or Adler-32, while rolling hashes efficiently compute hash values for substrings within sliding windows, enabling quick updates as data shifts. Checksum methods are ideal for data integrity verification, whereas rolling hashes are optimized for dynamic pattern matching and substring searches in applications such as Rabin-Karp. Key differences include computational complexity, with rolling hashes supporting incremental updates in O(1) time, contrasting with checksums which typically require recalculation of the entire data block.

Common Use Cases for Checksums

Checksums are widely used for error detection in data transmission and storage, ensuring data integrity by verifying that data has not been altered or corrupted. Common use cases include file integrity verification, network packet error checking, and data consistency validation in backup systems. Rolling hashes, on the other hand, optimize performance in applications such as string searching and file deduplication by efficiently recalculating hash values on sliding windows of data.

Typical Applications of Rolling Hash

Rolling hash algorithms excel in applications such as substring search, data deduplication, and network packet integrity verification due to their efficient incremental hash computation. Unlike traditional checksums that compute hash values over entire data blocks, rolling hashes allow quick updates by adding or removing characters, making them ideal for algorithms like Rabin-Karp in plagiarism detection and string matching. This efficiency reduces computational overhead in large-scale text analysis, real-time monitoring, and streaming data integrity checks.

Performance Comparison: Speed and Efficiency

Checksum algorithms typically offer faster performance due to their simple arithmetic operations, making them ideal for quick data integrity verification on smaller datasets. Rolling hash functions, while slightly slower because of more complex computation, excel in efficiency for large-scale data processing by enabling incremental updates without rehashing entire data blocks. The choice between checksum and rolling hash hinges on the balance between speed requirements and the need for efficient handling of dynamic or streaming data.

Data Integrity: Checksum vs Rolling Hash

Checksums provide a simple, fixed-size value derived from data to detect accidental errors, offering basic data integrity verification but limited error localization. Rolling hashes enable efficient recalculation over sliding windows, enhancing performance in scenarios like data synchronization or deduplication while maintaining integrity checks in dynamic data streams. Rolling hashes support more granular integrity validation compared to traditional checksums, making them suitable for real-time and incremental data verification.

Security Considerations: Which is More Robust?

Rolling hash algorithms offer enhanced security compared to simple checksums by generating hash values that are sensitive to data order and content changes, making them more resistant to collisions and tampering. Checksums, such as CRC or simple additive sums, primarily detect accidental errors and are vulnerable to intentional manipulation due to their lower cryptographic complexity. In cryptographic contexts, rolling hashes integrated with strong cryptographic primitives provide robust data integrity verification, whereas checksums alone are insufficient for security-critical applications.

Implementation Challenges and Best Practices

Implementing checksums involves straightforward arithmetic operations but can face limitations in error detection capabilities, whereas rolling hash functions require careful management of window size and hash value updates for efficient substring comparison. Best practices recommend selecting appropriate polynomial bases and modulus values in rolling hashes to minimize collisions and ensure computational efficiency. Robust implementation also includes consistent handling of character encoding and boundary conditions to prevent hash mismatches or checksum inaccuracies.

Choosing the Right Method: Factors to Consider

Choosing between a checksum and a rolling hash depends on factors such as the need for incremental updates, collision resistance, and computational efficiency. Checksums like CRC are faster and suitable for simple error detection, whereas rolling hashes excel in applications requiring quick recalculations over sliding windows, like in data deduplication or plagiarism detection. Evaluate the expected input size, performance constraints, and security requirements to select the optimal method.

Checksum Infographic

libterm.com

libterm.com