Dynamic state refers to a condition in which a system or object changes continuously over time, driven by external forces or internal processes. Understanding dynamic states is important in fields such as physics, engineering, and computer science for predicting behavior and improving performance. The sections below contrast dynamic states with steady states and show where each matters in practice.

Comparison Table

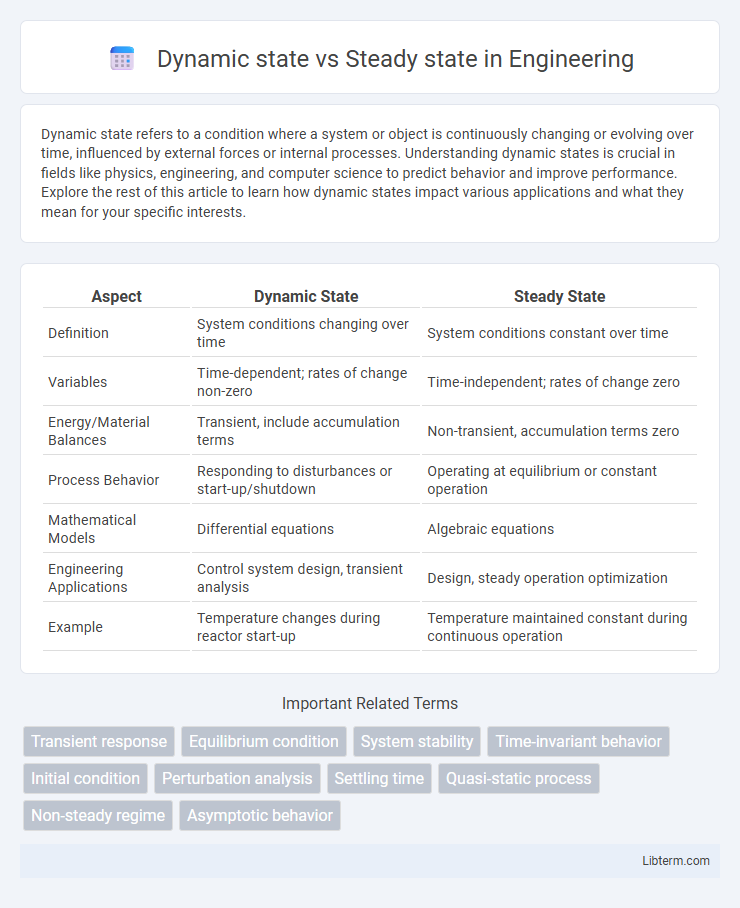

| Aspect | Dynamic State | Steady State |
|---|---|---|
| Definition | System conditions changing over time | System conditions constant over time |
| Variables | Time-dependent; rates of change non-zero | Time-independent; rates of change zero |
| Energy/Material Balances | Transient, include accumulation terms | Non-transient, accumulation terms zero |
| Process Behavior | Responding to disturbances or start-up/shutdown | Operating at equilibrium or constant operation |
| Mathematical Models | Differential equations | Algebraic equations |
| Engineering Applications | Control system design, transient analysis | Design, steady operation optimization |
| Example | Temperature changes during reactor start-up | Temperature maintained constant during continuous operation |

Understanding Dynamic State and Steady State

Dynamic state refers to a condition in which system variables change over time in response to external or internal influences, reflecting transient behavior and fluctuations. Steady state is reached when these variables stop changing with time, or fluctuate only within a stable range, even though processes such as flows of energy or material continue. A steady state is therefore not necessarily thermodynamic equilibrium: a rod held between a hot and a cold reservoir can reach a steady temperature profile while heat keeps flowing through it. Understanding the distinction between the two is essential for analyzing system performance, predicting future behavior, and designing effective control strategies in engineering and science.

Key Differences Between Dynamic and Steady States

Dynamic state is characterized by continuous change in system variables, whereas in a steady state these variables remain constant over time even though processes continue. In a dynamic state, imbalances between inflows and outflows of energy or matter cause system conditions to change; in a steady state, input and output rates balance, so nothing accumulates. Key differences include temporal variability, stability, and response to disturbances, with dynamic states exhibiting transient behavior and steady states reflecting balanced, time-invariant conditions.
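To make the input/output balance concrete, consider a hypothetical tank with a constant inflow `q_in` and an outflow assumed proportional to the liquid level `h` (outflow = `k * h`, a simplified linear-outlet model). The dynamic balance is `area * dh/dt = q_in - k * h`; setting the accumulation term to zero turns it into the algebraic steady-state result `h_ss = q_in / k`. The sketch below integrates the dynamic equation with forward Euler and compares the result with that steady-state value. All names and numbers are illustrative assumptions.

```python
def simulate_tank(q_in, k, area, h0, dt, steps):
    """Integrate area * dh/dt = q_in - k * h with forward Euler.

    Returns the list of levels h at each time step, including h0.
    """
    levels = [h0]
    h = h0
    for _ in range(steps):
        accumulation = q_in - k * h       # net inflow: the accumulation term
        h += dt * accumulation / area     # dynamic state: h changes over time
        levels.append(h)
    return levels


def steady_state_level(q_in, k):
    """Steady state: accumulation = 0, so q_in = k * h_ss (an algebraic equation)."""
    return q_in / k


if __name__ == "__main__":
    q_in, k, area = 2.0, 0.5, 1.0         # illustrative values (assumed)
    levels = simulate_tank(q_in, k, area, h0=0.0, dt=0.1, steps=200)
    print(f"level after 20 time units: {levels[-1]:.4f}")
    print(f"steady-state level:        {steady_state_level(q_in, k):.4f}")
```

The time constant here is `area / k`, so after about five time constants the dynamic solution is already within roughly 1% of the steady-state level.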

Importance of State Analysis in Systems

State analysis is crucial for understanding dynamic systems by revealing how system variables evolve over time and respond to changing inputs. It helps differentiate between transient dynamic states, where variables fluctuate, and steady states, where system behavior stabilizes, ensuring accurate modeling and control. This understanding enables engineers and scientists to design more reliable and efficient systems in fields such as electronics, thermodynamics, and control theory.
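One common way to carry out state analysis is a linear state-space model, `dx/dt = A x + B u`. For a constant input `u`, the steady state satisfies `0 = A x + B u`, an algebraic system, while the dynamic path toward it comes from integrating the differential equation. The sketch below does both for a two-state model in plain Python; the matrix `A`, the vector `B`, and the helper names are hypothetical, chosen only for illustration.

```python
def steady_state_2x2(A, B, u):
    """Solve 0 = A x + B u for x using Cramer's rule (A must be invertible)."""
    (a11, a12), (a21, a22) = A
    det = a11 * a22 - a12 * a21
    if det == 0:
        raise ValueError("A is singular: no unique steady state")
    r1, r2 = -B[0] * u, -B[1] * u          # right-hand side of A x = -B u
    x1 = (r1 * a22 - a12 * r2) / det
    x2 = (a11 * r2 - a21 * r1) / det
    return [x1, x2]


def simulate(A, B, u, x0, dt, steps):
    """Forward-Euler integration of dx/dt = A x + B u; returns the final state."""
    x = list(x0)
    for _ in range(steps):
        dx = [A[i][0] * x[0] + A[i][1] * x[1] + B[i] * u for i in range(2)]
        x = [x[i] + dt * dx[i] for i in range(2)]
    return x


if __name__ == "__main__":
    A = [[0.0, 1.0], [-2.0, -3.0]]         # hypothetical system matrix
    B = [0.0, 1.0]                         # hypothetical input vector
    print("steady state (algebraic):", steady_state_2x2(A, B, 1.0))
    print("state after 20 s (dynamic):",
          simulate(A, B, 1.0, [0.0, 0.0], dt=0.01, steps=2000))
```

For this particular `A` both modes decay, so the simulated state converges to the algebraic solution; if `A` had an eigenvalue with a positive real part, the steady state would still exist but the trajectory would move away from it.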

Real-World Examples of Dynamic and Steady States

Dynamic states are observed in ecosystems such as coral reefs, where species populations and nutrient cycles change continually in response to environmental fluctuations. A steady state can be seen in engineered systems such as a thermostat-regulated heating system, which keeps room temperature close to its setpoint despite changing outdoor conditions. Urban traffic flow is a typical dynamic state, with congestion varying through the day, while large-scale industrial processes operated under controlled parameters run at steady state to deliver consistent output.
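The thermostat example can be sketched numerically. A simple on/off thermostat does not hold one exact temperature: it switches the heater on below a lower threshold and off above an upper one (hysteresis), so after a warm-up transient the temperature cycles within a narrow band around the setpoint. That is steady in the looser sense of fluctuating within a stable range. The room model below (linear heat loss to the outdoors plus a constant heater input) and every parameter value are assumptions for illustration.

```python
def simulate_thermostat(temp0=10.0, outside=5.0, setpoint=20.0, band=1.0,
                        loss_rate=0.1, heat_rate=3.0, dt=0.01, steps=20000):
    """On/off thermostat with hysteresis controlling a simple room model.

    Assumed room model: dT/dt = -loss_rate * (T - outside) + heat_rate * heater_on,
    with time in minutes and temperatures in degrees Celsius.
    Returns the list of room temperatures, one per time step.
    """
    low, high = setpoint - band / 2, setpoint + band / 2
    temp, heater_on = temp0, False
    temps = [temp]
    for _ in range(steps):
        # Hysteresis: switch on at or below the band, off at or above it, else keep state.
        if temp <= low:
            heater_on = True
        elif temp >= high:
            heater_on = False
        rate = -loss_rate * (temp - outside) + (heat_rate if heater_on else 0.0)
        temp += dt * rate                  # forward-Euler step
        temps.append(temp)
    return temps


if __name__ == "__main__":
    temps = simulate_thermostat()
    settled = temps[3000:]                 # skip the first 30 minutes of warm-up
    print(f"after warm-up: min {min(settled):.2f} C, max {max(settled):.2f} C")
```

The warm-up from 10 °C is the dynamic phase; afterwards the temperature stays inside the 19.5 to 20.5 °C band, overshooting it only by about one integration step.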

Dynamic State: Definition and Characteristics

Dynamic state refers to a condition in which a system is continuously changing over time, exhibiting fluctuations in variables such as energy, pressure, or concentration. Unlike steady state, where system variables remain constant, dynamic state is characterized by transient behavior, non-equilibrium processes, and time-dependent changes. Understanding dynamic state is crucial for analyzing systems in chemical reactions, fluid mechanics, and biological processes where ongoing adjustments determine system performance.
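Transient behavior is easiest to see in a second-order system such as a mass-spring-damper. The sketch below assumes the standard normalized form `x'' + 2*zeta*wn*x' + wn**2 * x = wn**2 * u` driven by a unit step `u = 1`, with hypothetical values `zeta = 0.2` and `wn = 1`. It integrates the response numerically and compares the peak overshoot with the textbook formula `exp(-zeta*pi/sqrt(1 - zeta**2))` for an underdamped system (`zeta < 1`).

```python
import math


def step_response(zeta, wn, dt, t_end):
    """Integrate x'' + 2*zeta*wn*x' + wn**2 * x = wn**2 * u for a unit step u = 1.

    Uses semi-implicit (symplectic) Euler: update velocity first, then position.
    Returns the list of positions sampled every dt, starting from rest at x = 0.
    """
    x, v = 0.0, 0.0
    xs = [x]
    for _ in range(int(round(t_end / dt))):
        a = wn**2 * (1.0 - x) - 2.0 * zeta * wn * v   # acceleration for u = 1
        v += dt * a
        x += dt * v
        xs.append(x)
    return xs


def overshoot_formula(zeta):
    """Peak overshoot (fraction of the final value) of an underdamped 2nd-order system."""
    return math.exp(-zeta * math.pi / math.sqrt(1.0 - zeta**2))


if __name__ == "__main__":
    zeta, wn = 0.2, 1.0                    # illustrative, underdamped
    xs = step_response(zeta, wn, dt=0.001, t_end=40.0)
    print(f"simulated overshoot: {max(xs) - 1.0:.3f}")   # final value is 1
    print(f"formula overshoot:   {overshoot_formula(zeta):.3f}")
    print(f"value at t = 40:     {xs[-1]:.3f}")
```

The oscillation is the dynamic state; its envelope decays as `exp(-zeta*wn*t)`, so by `t = 40` the response has essentially reached its steady-state value of 1.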

Steady State: Definition and Attributes

Steady state refers to a condition in which all system variables remain constant over time even though processes such as flows, reactions, or transfers continue within the system. It is characterized by a balance in which input rates equal output rates, so there is no net accumulation or depletion of system resources. Key attributes include temporal stability, balanced fluxes, and constant system variables, which make the system predictable and easier to control in engineering, biological, and economic systems.

Applications in Engineering and Science

Dynamic state analysis is essential in engineering disciplines such as control systems, mechanical vibrations, and chemical reactor engineering, where time-dependent processes and transient responses must be understood and managed. Steady-state analysis is central to electrical circuit design, thermodynamics, and fluid mechanics: setting all time derivatives to zero turns differential equations into algebraic ones, which greatly simplifies design and efficiency calculations. Engineers and scientists use dynamic models to predict system behavior during changes, and steady-state models for optimization and long-term performance evaluation.
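A series RC circuit shows how the two kinds of analysis relate. When a step voltage `V_s` is applied to an initially uncharged capacitor, the dynamic solution is `v_C(t) = V_s * (1 - exp(-t / (R*C)))`, while a steady-state (DC) analysis simply treats the capacitor as an open circuit and gives `v_C = V_s` directly. The sketch below evaluates both; the component values and function names are hypothetical.

```python
import math


def capacitor_voltage(v_source, r_ohms, c_farads, t_seconds):
    """Dynamic (transient) solution for a charging series RC circuit with v_C(0) = 0."""
    tau = r_ohms * c_farads                # time constant R*C
    return v_source * (1.0 - math.exp(-t_seconds / tau))


def time_to_within(tolerance, r_ohms, c_farads):
    """Time after which v_C is within `tolerance` (a fraction) of its steady-state value.

    Solves exp(-t / (R*C)) = tolerance for t.
    """
    return -r_ohms * c_farads * math.log(tolerance)


if __name__ == "__main__":
    v_s, r, c = 5.0, 10e3, 100e-6          # 5 V, 10 kOhm, 100 uF (illustrative)
    tau = r * c                            # about 1 s
    for n in (1, 3, 5):
        print(f"t = {n} tau: v_C = {capacitor_voltage(v_s, r, c, n * tau):.3f} V")
    print(f"steady state (capacitor as open circuit): v_C = {v_s:.3f} V")
    print(f"within 1% of steady state after {time_to_within(0.01, r, c):.2f} s")
```

Because the steady-state answer needs no time integration at all, circuit designers typically use it for operating points and reserve transient analysis for switching events.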

Measuring Transitions Between States

Measuring the transition from a dynamic state to a steady state involves tracking time-dependent variables such as concentration, temperature, or velocity to characterize how quickly and how smoothly the system settles. Techniques such as time-resolved spectroscopy, transient analysis, and state-space modeling quantify the change as transient dynamics give way to steady conditions, typically through metrics such as the time constant, rise time, overshoot, and settling time. Accurate assessment of these transitions informs system control, optimization, and real-time monitoring in engineering and scientific applications.
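Settling time is one common way to quantify when a transient has effectively ended: it is the time after which the response stays within a fixed band around its final value. The function below is a minimal sketch that assumes a sampled response and a known final value; the ±2% band is a common convention rather than a universal rule, and the function name is hypothetical.

```python
import math


def settling_time(times, values, final_value, band=0.02):
    """Return the first sample time after which the response stays within
    +/- band * |final_value| of final_value, or None if it never settles
    within the recorded data.
    """
    tolerance = band * abs(final_value)
    # Scan backwards for the last sample that lies outside the band.
    for i in range(len(values) - 1, -1, -1):
        if abs(values[i] - final_value) > tolerance:
            return times[i + 1] if i + 1 < len(times) else None
    return times[0]                        # every sample was already inside the band


if __name__ == "__main__":
    # Illustrative first-order response y(t) = 1 - exp(-t / tau) with tau = 1.
    times = [k * 0.001 for k in range(10001)]
    values = [1.0 - math.exp(-t) for t in times]
    print(f"2% settling time: {settling_time(times, values, final_value=1.0):.3f}")
```

For a first-order response the 2% settling time is `tau * ln(50)`, roughly four time constants, which is why "about 4 tau" is often quoted as a rule of thumb.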

The Role of Feedback in Sustaining States

Feedback mechanisms sustain both dynamic and steady states by continuously adjusting system variables, either to hold them near a target or to drive change. Positive feedback amplifies deviations and pushes the system away from its current state, while negative feedback counteracts deviations and preserves the stability of a steady state. Well-designed feedback loops let systems respond adaptively to internal and external perturbations, making them resilient and keeping their behavior coherent.
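A deliberately minimal model shows the difference in sign. Let `e` be a variable's deviation from a reference value and assume its rate of change is proportional to the deviation, `de/dt = g * e`, where `g` is a hypothetical loop gain. A negative `g` represents negative feedback (the correction opposes the deviation); a positive `g` represents positive feedback. The function name and values below are illustrative assumptions, not a model of any particular system.

```python
def simulate_deviation(loop_gain, e0, dt, steps):
    """Integrate de/dt = loop_gain * e with forward Euler.

    loop_gain < 0 models negative feedback (deviations shrink);
    loop_gain > 0 models positive feedback (deviations grow).
    Returns the deviation at every time step, including e0.
    """
    e = e0
    history = [e]
    for _ in range(steps):
        e += dt * loop_gain * e
        history.append(e)
    return history


if __name__ == "__main__":
    negative = simulate_deviation(loop_gain=-0.5, e0=1.0, dt=0.1, steps=100)
    positive = simulate_deviation(loop_gain=+0.5, e0=1.0, dt=0.1, steps=100)
    print(f"negative feedback, deviation after 10 s: {negative[-1]:.4f}")
    print(f"positive feedback, deviation after 10 s: {positive[-1]:.4f}")
```

Under negative feedback the same initial disturbance decays toward zero, restoring the steady state; under positive feedback it grows without bound in this linear model, although real systems eventually hit physical limits.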

Implications for System Optimization and Stability

A dynamic state involves continual change in system variables and calls for adaptive control strategies to maintain stability and optimize performance under fluctuating conditions. A steady state, in which system variables remain constant, allows predictable resource allocation and simpler optimization models. Understanding the transitions between the two improves system resilience, helps suppress unwanted oscillations, and enhances long-term efficiency in complex engineering and economic systems.
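For a linear model, or a nonlinear model linearized around an operating point, both questions raised here (is the steady state stable, and does the approach to it oscillate?) can be read from the eigenvalues of the system matrix. For two state variables this reduces to trace and determinant tests: the steady state is stable when the trace is negative and the determinant positive, and the approach oscillates when the eigenvalues are complex (`trace**2 < 4 * det`). The function name and the example matrices are hypothetical.

```python
def classify_2x2(a11, a12, a21, a22):
    """Classify the steady state x = 0 of dx/dt = A x for A = [[a11, a12], [a21, a22]].

    Stable: both eigenvalues have negative real parts (trace < 0 and det > 0).
    Oscillatory: the eigenvalues are complex (trace**2 < 4 * det).
    Returns the pair (stable, oscillatory).
    """
    trace = a11 + a22
    det = a11 * a22 - a12 * a21
    stable = trace < 0 and det > 0
    oscillatory = trace**2 < 4 * det
    return stable, oscillatory


if __name__ == "__main__":
    # Hypothetical mass-spring-damper with m = 1, k = 4: A = [[0, 1], [-4, -c]].
    for c in (0.4, 4.0, 5.0):
        stable, oscillatory = classify_2x2(0.0, 1.0, -4.0, -c)
        print(f"damping c = {c}: stable={stable}, oscillatory={oscillatory}")
```

Raising the damping `c` in this example moves the system from an oscillatory approach to a non-oscillatory one without changing its steady state, which is one concrete way design choices "minimize oscillations".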

libterm.com