Principal components simplify complex data sets by transforming correlated variables into a smaller set of uncorrelated variables, ordered by how much of the data's variance each one captures. This makes data analysis and visualization easier and helps reveal underlying patterns and trends. The rest of this article explains how principal components relate to eigenvectors and how they can support your data-driven decisions.

Table of Comparison

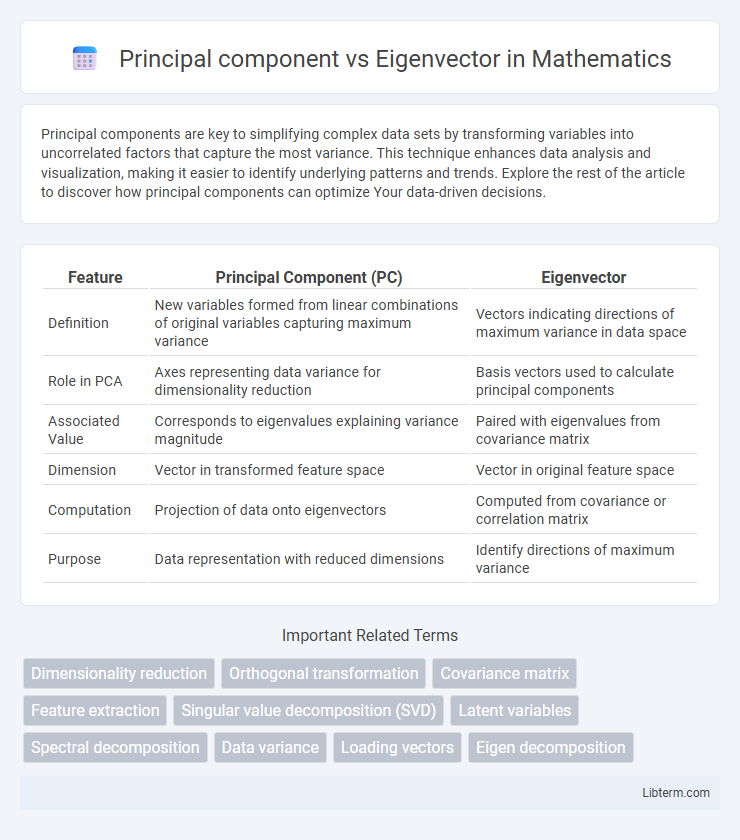

| Aspect | Principal Component (PC) | Eigenvector |
|---|---|---|
| Definition | New variable formed as a linear combination of the original variables, capturing as much variance as possible | Direction in the data space along which variance is maximized (an eigenvector of the covariance matrix) |
| Role in PCA | Transformed variable (score) used for dimensionality reduction | Basis vector used to calculate principal components |
| Associated value | Its variance equals the corresponding eigenvalue | Paired with an eigenvalue of the covariance (or correlation) matrix |
| Size | One value per observation, in the transformed coordinate system | One weight per original variable, in the original feature space |
| Computation | Projection of the centered data onto an eigenvector | Computed from the covariance or correlation matrix |
| Purpose | Represent the data with fewer dimensions | Identify directions of maximum variance |

Introduction to Principal Components and Eigenvectors

Principal components are the directions in a dataset along which the variance is maximized, providing a reduced-dimensional representation while preserving essential information. Eigenvectors are the mathematical vectors corresponding to the directions of these principal components, derived from the covariance matrix of the data. The eigenvalues associated with each eigenvector quantify the amount of variance captured by that principal component.
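A minimal NumPy sketch of this relationship, assuming a small synthetic dataset (the data and variable names are illustrative, not from the article). It eigendecomposes the covariance matrix and checks that the variance of the data projected onto each eigenvector equals that eigenvector's eigenvalue.

```python
import numpy as np

# Illustrative data (an assumption): 200 samples of 3 correlated variables.
rng = np.random.default_rng(0)
X = rng.normal(size=(200, 3)) @ np.array([[2.0, 0.0, 0.0],
                                          [1.2, 0.5, 0.0],
                                          [0.3, 0.2, 0.1]])

# Center each variable, then form the sample covariance matrix (variables in columns).
Xc = X - X.mean(axis=0)
cov = np.cov(Xc, rowvar=False)

# eigh is meant for symmetric matrices; it returns eigenvalues in ascending order,
# so reverse the order to put the largest-variance direction first.
eigenvalues, eigenvectors = np.linalg.eigh(cov)
order = np.argsort(eigenvalues)[::-1]
eigenvalues = eigenvalues[order]
eigenvectors = eigenvectors[:, order]   # column k is the k-th eigenvector

# The variance of the data projected onto each eigenvector equals its eigenvalue.
projected_var = np.var(Xc @ eigenvectors, axis=0, ddof=1)
print(np.round(eigenvalues, 4))
print(np.round(projected_var, 4))
```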

Definition of Principal Component

Principal components are the directions in a dataset that capture the maximum variance, serving as new axes formed by linear combinations of the original variables. Each principal component corresponds to an eigenvector of the covariance matrix, with the eigenvector indicating the direction and its associated eigenvalue giving the variance along that direction. Projecting the data onto only the first few components reduces its dimensionality while retaining as much of the total variance as possible for that number of dimensions.
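The dimensionality-reduction step can be sketched as follows, assuming NumPy and synthetic data in which five variables are driven by two hidden factors. `pca_project` is a hypothetical helper written for this example; it returns the projected data and the fraction of total variance the kept components retain.

```python
import numpy as np

def pca_project(X, k):
    """Hypothetical helper: project X (n samples x p variables) onto its first k
    principal components. Returns the n x k scores and the retained variance fraction."""
    Xc = X - X.mean(axis=0)                       # center each variable
    eigenvalues, eigenvectors = np.linalg.eigh(np.cov(Xc, rowvar=False))
    order = np.argsort(eigenvalues)[::-1]         # largest variance first
    eigenvalues, eigenvectors = eigenvalues[order], eigenvectors[:, order]
    scores = Xc @ eigenvectors[:, :k]             # new variables: linear combinations of the originals
    retained = eigenvalues[:k].sum() / eigenvalues.sum()
    return scores, retained

# Illustrative data: 5 variables, but most variation lies along 2 hidden factors.
rng = np.random.default_rng(1)
factors = rng.normal(size=(300, 2))
mixing = rng.normal(size=(2, 5))
X = factors @ mixing + 0.05 * rng.normal(size=(300, 5))

scores, retained = pca_project(X, k=2)
print(scores.shape)          # (300, 2)
print(round(retained, 3))    # close to 1 for this nearly rank-2 data
```

Here five columns are compressed to two with very little variance lost, because the synthetic data was built to be almost two-dimensional; real data will usually retain less.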

Understanding Eigenvectors

Eigenvectors are fundamental to principal component analysis (PCA): the eigenvectors of the covariance matrix give the directions in a multidimensional space along which the data varies. The first eigenvector (the one with the largest eigenvalue) is the direction of maximum variance; each subsequent eigenvector maximizes the variance of the projected data subject to being orthogonal to all previous ones. Understanding eigenvectors therefore makes it possible to identify patterns and reduce dimensionality by transforming correlated variables into uncorrelated components.
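A quick numerical check of the "maximum variance" property, as a sketch on synthetic, randomly rotated data (all names and values are illustrative). It compares the variance along the leading eigenvector with the variance along 1000 random unit directions, and confirms the eigenvectors are mutually orthogonal.

```python
import numpy as np

rng = np.random.default_rng(2)
X = rng.normal(size=(500, 3)) * np.array([3.0, 1.0, 0.5])   # unequal spread per axis (illustrative)
X = X @ np.linalg.qr(rng.normal(size=(3, 3)))[0]            # random rotation so axes are not aligned
Xc = X - X.mean(axis=0)
cov = np.cov(Xc, rowvar=False)

eigenvalues, eigenvectors = np.linalg.eigh(cov)
first = eigenvectors[:, np.argmax(eigenvalues)]   # direction of maximum variance

def projected_variance(direction):
    u = direction / np.linalg.norm(direction)       # variances are only comparable for unit vectors
    return u @ cov @ u                              # equals np.var(Xc @ u, ddof=1)

# No random unit direction beats the leading eigenvector.
trials = rng.normal(size=(1000, 3))
best_random = max(projected_variance(d) for d in trials)
print(projected_variance(first) >= best_random)    # True

# The eigenvectors are orthonormal, so the new axes are mutually perpendicular.
print(np.allclose(eigenvectors.T @ eigenvectors, np.eye(3)))   # True
```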

Mathematical Relationship between Principal Components and Eigenvectors

Principal components are linear combinations of the original variables, obtained by projecting the data onto eigenvectors of the covariance or correlation matrix. The eigenvectors define the directions of maximum variance in the data, while the corresponding eigenvalues quantify the variance captured by each principal component. Mathematically, the principal components equal the mean-centered data matrix (also standardized when the correlation matrix is used) multiplied by the matrix whose columns are the eigenvectors; this maps the data into uncorrelated coordinates aligned with the eigenvector directions.
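The same relationship written out as equations, as a sketch using notation chosen here rather than taken from the article: $X$ is the $n \times p$ data matrix with observations in rows, $\bar{x}$ its vector of column means, $w_k$ the eigenvectors collected as columns of $W$, and $T$ the matrix of principal component scores. If the correlation matrix is used instead, replace $X_c$ by the standardized data (each centered column divided by its standard deviation).

```latex
X_c = X - \mathbf{1}\,\bar{x}^{\top}, \qquad
\Sigma = \frac{1}{n-1}\, X_c^{\top} X_c

\Sigma\, w_k = \lambda_k\, w_k, \qquad \lambda_1 \ge \lambda_2 \ge \dots \ge \lambda_p \ge 0

T = X_c W, \qquad W = [\, w_1 \; w_2 \; \cdots \; w_p \,], \qquad W^{\top} W = I

\operatorname{Cov}(T) = \frac{1}{n-1}\, T^{\top} T = W^{\top} \Sigma W = \operatorname{diag}(\lambda_1, \dots, \lambda_p)
```

The last line is why the principal components are uncorrelated: their covariance matrix is diagonal, and its diagonal entries are the eigenvalues.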

Principal Components Analysis (PCA) Explained

Principal Components Analysis (PCA) transforms correlated variables into a set of linearly uncorrelated principal components, which represent directions of maximum variance in the data. Each principal component corresponds to an eigenvector of the data covariance matrix, with the associated eigenvalue indicating the amount of variance captured. Understanding the distinction between principal components and eigenvectors is essential, as principal components are the projections of data onto eigenvectors that summarize key data patterns in a lower-dimensional space.
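In practice PCA is often computed with the singular value decomposition (SVD) of the centered data instead of an explicit eigendecomposition of the covariance matrix. The sketch below, on illustrative random data, shows that the two routes agree: the SVD yields the same eigenvectors (possibly with flipped signs), eigenvalues equal to the squared singular values divided by $n - 1$, and the same projections.

```python
import numpy as np

rng = np.random.default_rng(3)
X = rng.normal(size=(100, 4)) @ rng.normal(size=(4, 4))   # illustrative correlated data
Xc = X - X.mean(axis=0)
n = Xc.shape[0]

# Route 1: eigendecomposition of the covariance matrix, largest eigenvalue first.
eigenvalues, eigenvectors = np.linalg.eigh(np.cov(Xc, rowvar=False))
order = np.argsort(eigenvalues)[::-1]
eigenvalues, eigenvectors = eigenvalues[order], eigenvectors[:, order]

# Route 2: SVD of the centered data, Xc = U S Vt (singular values sorted, largest first).
U, S, Vt = np.linalg.svd(Xc, full_matrices=False)

# The rows of Vt are the same eigenvectors (each possibly with flipped sign),
# and the eigenvalues are the squared singular values divided by n - 1.
print(np.allclose(eigenvalues, S**2 / (n - 1)))              # True
print(np.allclose(np.abs(Vt), np.abs(eigenvectors.T)))      # True

# The principal components (projections Xc @ W) also equal U * S, up to sign.
scores = Xc @ eigenvectors
print(np.allclose(np.abs(scores), np.abs(U * S)))           # True
```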

Role of Eigenvectors in PCA

Eigenvectors in Principal Component Analysis (PCA) represent the directions of maximum variance within the data and serve as the principal axes (often called loading vectors) of the principal components. Each eigenvector defines an axis onto which the data is projected to reveal the most significant patterns and reduce dimensionality. The associated eigenvalue quantifies the variance captured by that principal component, which guides how many components to keep for an effective data representation.
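One common way to use the eigenvalues for component selection is a cumulative explained-variance threshold. This is a sketch: `components_for_threshold` is a hypothetical helper, the eigenvalues are made up, and thresholds such as 0.80 or 0.95 are rules of thumb rather than fixed rules.

```python
import numpy as np

def components_for_threshold(eigenvalues, threshold=0.95):
    """Hypothetical helper: smallest number of leading components whose
    eigenvalues account for at least `threshold` of the total variance."""
    lam = np.sort(np.asarray(eigenvalues, dtype=float))[::-1]   # largest first
    cumulative = np.cumsum(lam) / lam.sum()                     # explained-variance share so far
    # index of the first cumulative share >= threshold, converted to a count
    k = int(np.searchsorted(cumulative, threshold) + 1)
    return min(k, len(lam))                                     # guard against rounding near 1.0

# Illustrative eigenvalues (made up for the example); cumulative shares are
# 0.5, 0.8125, 0.9375, 0.975, 1.0.
eigenvalues = [4.0, 2.5, 1.0, 0.3, 0.2]
print(components_for_threshold(eigenvalues, 0.80))   # 2
print(components_for_threshold(eigenvalues, 0.95))   # 4
```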

Differences between Principal Components and Eigenvectors

Principal components are linear combinations of the original variables that maximize variance in the data, derived from eigenvectors of the covariance matrix. Eigenvectors represent directions in the feature space along which data variance is measured, serving as the basis vectors for principal components. The key difference lies in eigenvectors being mathematical directions, while principal components are the projections of the data onto these eigenvector directions, reflecting actual transformed variables.
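The distinction is easy to see in the array shapes. In this sketch (synthetic data with 50 observations of 3 variables, names chosen for illustration), the eigenvector holds one weight per original variable, while the principal component holds one new value per observation.

```python
import numpy as np

rng = np.random.default_rng(4)
n, p = 50, 3
X = rng.normal(size=(n, p)) @ np.array([[1.0, 0.8, 0.0],
                                        [0.0, 1.0, 0.5],
                                        [0.0, 0.0, 1.0]])
Xc = X - X.mean(axis=0)

eigenvalues, eigenvectors = np.linalg.eigh(np.cov(Xc, rowvar=False))
w1 = eigenvectors[:, np.argmax(eigenvalues)]   # eigenvector: a direction, one weight per variable
t1 = Xc @ w1                                   # principal component: the data projected onto it

print(w1.shape)   # (3,)  one entry per original variable
print(t1.shape)   # (50,) one entry per observation
```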

Applications in Data Science and Machine Learning

Principal components represent the directions of maximum variance in a dataset and are derived from the eigenvectors of the covariance matrix. Eigenvectors serve as the foundational vectors that define these principal components, enabling dimensionality reduction techniques like Principal Component Analysis (PCA). In machine learning, principal components are used for feature extraction and noise reduction, which can improve model performance and make high-dimensional data easier to inspect.
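A feature-extraction workflow can be sketched as fit, transform, and map back. The helpers `fit_pca`, `transform`, and `inverse_transform` are hypothetical functions written for this example (not a specific library's API), and the data is synthetic: three variables lying exactly on a plane, so two components reconstruct it without loss. With noisy data, the same rank-k reconstruction discards the variance in the dropped components, which is the idea behind PCA-based noise reduction.

```python
import numpy as np

def fit_pca(X, k):
    """Hypothetical helper: return the training mean and the top-k eigenvectors (p x k)."""
    mean = X.mean(axis=0)
    eigenvalues, eigenvectors = np.linalg.eigh(np.cov(X - mean, rowvar=False))
    order = np.argsort(eigenvalues)[::-1][:k]
    return mean, eigenvectors[:, order]

def transform(X, mean, W):
    """Extract k features per row by projecting the centered data onto the components."""
    return (X - mean) @ W

def inverse_transform(T, mean, W):
    """Map k features back to the original p variables (a rank-k approximation)."""
    return T @ W.T + mean

# Illustrative data lying on a 2-D plane: third column = first column + second column.
rng = np.random.default_rng(5)
base = rng.normal(size=(200, 2))
X = np.column_stack([base, base[:, 0] + base[:, 1]])

mean, W = fit_pca(X, k=2)
features = transform(X, mean, W)               # 200 x 2 extracted features
X_back = inverse_transform(features, mean, W)
print(features.shape)                           # (200, 2)
print(np.allclose(X, X_back))                   # True: two components capture this data exactly
```

New observations would be passed through `transform` with the mean and eigenvectors fitted on the training data, so that training and test features share the same axes.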

Advantages and Limitations

Principal components are linear combinations of the original variables that maximize variance, offering dimensionality reduction while preserving as much of the information (variance) as possible. Eigenvectors, fundamental in linear algebra, define the directions of maximum variance but may not correspond to interpretable features in complex datasets. Principal components are easy to prioritize because they are ordered by the variance they explain, though each one mixes many original variables and can be hard to interpret; an eigenvector on its own, by contrast, is a mathematical direction with no ranking until it is paired with its eigenvalue.
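Two practical consequences can be shown on a small, made-up 2 × 2 covariance matrix. First, NumPy's `eigh` returns eigenvalues in ascending order, so any ranking comes from sorting by eigenvalue, not from the eigenvectors themselves. Second, an eigenvector is defined only up to sign, so the sign of a loading should not be over-interpreted. The sign-fixing rule at the end is one common convention, shown here as an assumption rather than a standard.

```python
import numpy as np

cov = np.array([[4.0, 1.5],
                [1.5, 1.0]])          # illustrative 2 x 2 covariance matrix

eigenvalues, eigenvectors = np.linalg.eigh(cov)
print(eigenvalues)                     # ascending order: the ranking is not built into the vectors

v = eigenvectors[:, -1]                # eigenvector with the largest eigenvalue
lam = eigenvalues[-1]

# Both v and -v satisfy cov @ x = lam * x, so the sign of a loading is arbitrary.
print(np.allclose(cov @ v, lam * v))      # True
print(np.allclose(cov @ -v, lam * -v))    # True

# Assumed convention: flip each eigenvector so its largest-magnitude entry is positive,
# which makes signs reproducible across runs and libraries.
cols = np.arange(eigenvectors.shape[1])
flip = np.sign(eigenvectors[np.argmax(np.abs(eigenvectors), axis=0), cols])
eigenvectors_fixed = eigenvectors * flip
```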

Conclusion: Choosing Principal Components or Eigenvectors

Choosing between principal components and eigenvectors depends on the analysis goal. Principal components provide uncorrelated variables that maximize variance, which makes them the natural choice for dimensionality reduction in PCA. Eigenvectors represent directions in the feature space associated with eigenvalues; they are crucial for understanding the variance structure but are less intuitive as a direct representation of the data. Prioritize principal components for data compression and feature extraction, and treat eigenvectors as the mathematical foundation of these transformations.

Principal component Infographic

libterm.com
