Information and entropy are two closely related quantities at the heart of information theory: one measures how much a single message reduces uncertainty, the other measures how uncertain a source is on average. Understanding both clarifies how data is encoded, compressed, and transmitted. The rest of this article compares the two concepts and surveys their main applications.

Table of Comparison

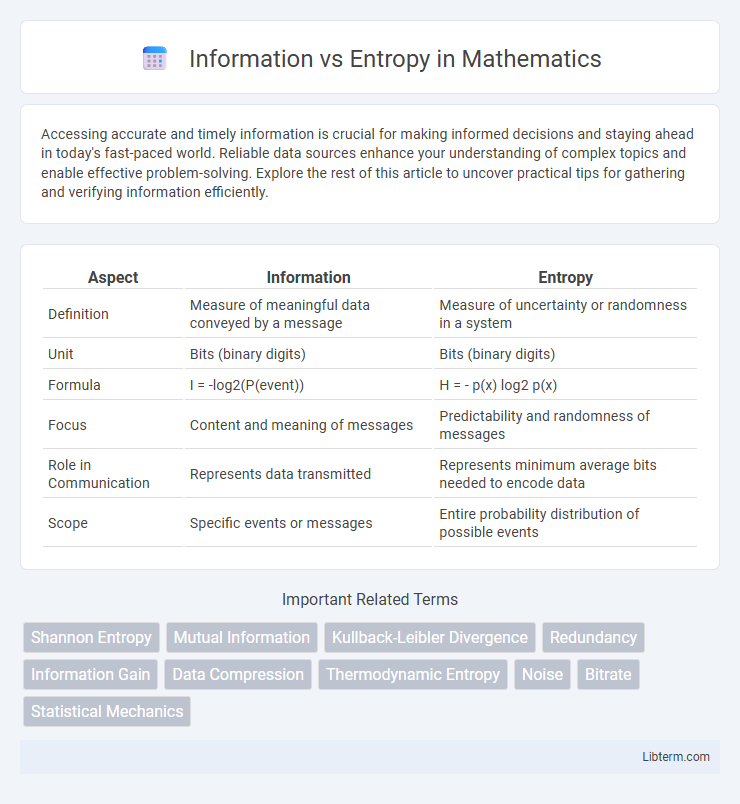

| Aspect | Information | Entropy |
|---|---|---|
| Definition | Measure of how much a specific message or event reduces uncertainty | Measure of uncertainty or randomness in a system |
| Unit | Bits (binary digits) | Bits (binary digits) |
| Formula | I = -log2(P(event)) | H = -Σ p(x) log2 p(x) |
| Focus | Surprise carried by an individual message | Predictability and randomness of messages |
| Role in Communication | Represents data transmitted | Represents minimum average bits needed to encode data |
| Scope | Specific events or messages | Entire probability distribution of possible events |

Understanding Information: A Fundamental Concept

Information quantifies the reduction of uncertainty in a system, serving as the fundamental measure in communication theory. Entropy represents the average amount of uncertainty or randomness inherent in a source, defining the limits of information compression and transmission. Understanding the relationship between information and entropy enables efficient encoding and accurate data interpretation in various technological applications.

Defining Entropy in Information Theory

Entropy in information theory quantifies the uncertainty or unpredictability of a random variable's possible outcomes. It is mathematically defined by Shannon as the average amount of information produced by a stochastic source of data, measured in bits. Higher entropy indicates greater randomness and less predictability, while lower entropy reflects more structured and predictable information.
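
The definition above can be sketched in a few lines of Python. This is a minimal illustration rather than a reference implementation; the function name `shannon_entropy` and the example distributions are assumptions chosen for this sketch.

```python
import math

def shannon_entropy(probs):
    """Return the Shannon entropy, in bits, of a discrete distribution."""
    if not math.isclose(sum(probs), 1.0, abs_tol=1e-9):
        raise ValueError("probabilities must sum to 1")
    # Outcomes with zero probability contribute nothing (0 * log 0 is taken as 0).
    return -sum(p * math.log2(p) for p in probs if p > 0)

# Illustrative distributions (assumed for this example).
fair_coin = shannon_entropy([0.5, 0.5])      # maximally uncertain two-way choice
biased_coin = shannon_entropy([0.9, 0.1])    # more predictable, so lower entropy
fair_die = shannon_entropy([1 / 6] * 6)      # six equally likely outcomes
```

The fair coin comes out at exactly 1 bit, the biased coin at less than 1 bit, and the fair die at log2(6) bits, matching the idea that more evenly spread outcomes are less predictable.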

The Mathematical Relationship Between Information and Entropy

Information quantifies the reduction of uncertainty and is mathematically defined as the negative logarithm of the probability of an event, \( I(x) = -\log_2 p(x) \), while entropy measures the expected value of this information across all possible events in a distribution. The Shannon entropy \( H(X) = -\sum_{x} p(x) \log_2 p(x) \) represents the average information content or uncertainty inherent in a random variable \( X \). The greater the entropy, the higher the uncertainty, and the more information is required to fully describe the system's state.
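
As a worked example, assume a hypothetical biased coin that lands heads with probability 0.9 and tails with probability 0.1 (the numbers are chosen purely for illustration). The information carried by each outcome is

\[
I(\text{heads}) = -\log_2 0.9 \approx 0.152 \text{ bits}, \qquad I(\text{tails}) = -\log_2 0.1 \approx 3.322 \text{ bits}
\]

and the entropy is the probability-weighted average of those two values:

\[
H(X) = 0.9 \times 0.152 + 0.1 \times 3.322 \approx 0.469 \text{ bits}
\]

The rare outcome is far more informative when it occurs, but because it occurs seldom, the average uncertainty stays well below the 1 bit of a fair coin.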

Historical Origins: From Shannon to Modern Theories

Claude Shannon pioneered the concept of information theory in 1948, introducing entropy as a measure of uncertainty in communication systems. His work quantified information, laying the foundation for modern digital communication, coding theory, and data compression. Contemporary advancements extend Shannon's entropy to quantum information, machine learning, and complex systems analysis, reflecting the evolving understanding of information and entropy in technology and science.

Measuring Information: Bits, Bytes, and Beyond

Measuring information involves quantifying uncertainty reduction, commonly using bits as the standard unit, where one bit represents a binary choice between two equally likely outcomes. Bytes, consisting of eight bits, serve as a fundamental data measurement unit in computing for storage and transmission. The unit depends on the base of the logarithm: base 2 gives bits (also called shannons when referring to information content rather than storage), the natural logarithm gives nats, and base 10 gives hartleys. These units differ only by a constant factor, so a quantity expressed in one can always be converted to another.
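
A short sketch can make the role of the logarithm base concrete. The helper name `entropy` and its `base` parameter are assumptions for this example; it uses only Python's standard `math.log(x, base)`.

```python
import math

def entropy(probs, base=2.0):
    """Entropy of a discrete distribution; the log base sets the unit."""
    return -sum(p * math.log(p, base) for p in probs if p > 0)

# Illustrative source: eight equally likely symbols.
uniform_8 = [1 / 8] * 8

bits = entropy(uniform_8, base=2)          # base 2: bits (shannons)
nats = entropy(uniform_8, base=math.e)     # base e: nats
hartleys = entropy(uniform_8, base=10)     # base 10: hartleys

# Changing unit is a constant rescaling: 1 bit equals ln(2) nats.
bits_from_nats = nats / math.log(2)
```

Here `bits` is 3 (up to floating-point rounding), since three binary choices single out one of eight symbols, and `bits_from_nats` recovers the same value from the nat measurement.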

Entropy as Uncertainty: What Does It Really Mean?

Entropy measures the uncertainty or unpredictability in a set of data, quantifying the average amount of information produced by a stochastic source. It reflects how much information is needed to describe the state of a system or the outcome of a random variable. High entropy indicates greater uncertainty and less predictability, meaning more information is required to specify the exact state or message.
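
When the underlying probabilities are unknown, entropy is often estimated from observed data. The sketch below does this from symbol frequencies in a string; the function name `empirical_entropy` and the sample messages are assumptions for illustration.

```python
import math
from collections import Counter

def empirical_entropy(message):
    """Estimate entropy in bits per symbol from observed symbol frequencies."""
    counts = Counter(message)
    total = len(message)
    return -sum((c / total) * math.log2(c / total) for c in counts.values())

predictable = empirical_entropy("aaaaaaaa")   # a single symbol: no uncertainty
mixed = empirical_entropy("aabbaabb")         # two equally frequent symbols
varied = empirical_entropy("abcdefgh")        # eight equally frequent symbols
```

The three messages give 0, 1, and 3 bits per symbol respectively. Note that this estimate looks only at how often each symbol appears, not at the order of symbols, so a message with an obvious repeating pattern can still score as uncertain.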

Information Gain: Reducing Entropy in Communication

Information gain quantifies the reduction in entropy of one variable achieved by learning the value of another, \( IG(Y, X) = H(Y) - H(Y \mid X) \). In communication systems, a received signal with high information gain removes most of the receiver's uncertainty about the message that was sent, while a signal with zero gain leaves the receiver no better informed than before. Designing a system so that each transmitted symbol yields as much information gain as possible improves the efficiency and reliability of data transmission.
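
Information gain can be computed as the entropy of a variable minus its remaining entropy once another variable is known. The following is a minimal sketch under that definition; the function names and the toy data (`weather`, `played`, `coin`) are hypothetical and exist only for this example.

```python
import math
from collections import Counter, defaultdict

def entropy_of(labels):
    """Entropy in bits of the empirical distribution of a list of labels."""
    counts = Counter(labels)
    total = len(labels)
    return -sum((c / total) * math.log2(c / total) for c in counts.values())

def information_gain(features, labels):
    """Entropy of labels minus entropy of labels given the feature, in bits."""
    groups = defaultdict(list)
    for feature_value, label in zip(features, labels):
        groups[feature_value].append(label)
    total = len(labels)
    # Conditional entropy: weighted average entropy within each feature group.
    conditional = sum(len(g) / total * entropy_of(g) for g in groups.values())
    return entropy_of(labels) - conditional

# Hypothetical toy data.
weather = ["sunny", "sunny", "rainy", "rainy"]
played = ["yes", "yes", "no", "no"]
coin = ["h", "t", "h", "t"]

gain_weather = information_gain(weather, played)   # weather fully determines the label
gain_coin = information_gain(coin, played)         # coin flips are unrelated to the label
```

Knowing the weather removes the full 1 bit of uncertainty about the label, whereas the unrelated coin flip removes none.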

Practical Applications: Coding, Compression, and Cryptography

Information theory underpins coding techniques by quantifying the minimum number of bits required to represent data efficiently, directly influencing lossless compression algorithms such as Huffman and arithmetic coding. Entropy measures the uncertainty or randomness in data sources, guiding optimal compression rates and ensuring maximum reduction without information loss. In cryptography, entropy quantifies key randomness and unpredictability, which is critical for secure encryption schemes and minimizing vulnerability to attacks.
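
To illustrate how entropy bounds lossless compression, the sketch below builds Huffman code lengths for a small source and compares the average code length with the source entropy. It is a simplified version that tracks only code lengths, not the codewords themselves; the function name and the symbol probabilities are assumptions for this example.

```python
import heapq
import math

def huffman_code_lengths(probs):
    """Return {symbol: code length in bits} for a Huffman code."""
    if len(probs) == 1:
        return {next(iter(probs)): 1}
    # Heap entries: (probability, unique tie-breaker, symbols in this subtree).
    heap = [(p, i, [s]) for i, (s, p) in enumerate(probs.items())]
    heapq.heapify(heap)
    lengths = {s: 0 for s in probs}
    counter = len(heap)
    while len(heap) > 1:
        p1, _, syms1 = heapq.heappop(heap)
        p2, _, syms2 = heapq.heappop(heap)
        # Merging two subtrees adds one bit to every symbol beneath them.
        for s in syms1 + syms2:
            lengths[s] += 1
        heapq.heappush(heap, (p1 + p2, counter, syms1 + syms2))
        counter += 1
    return lengths

# Illustrative source whose probabilities are all powers of 1/2.
probs = {"a": 0.5, "b": 0.25, "c": 0.125, "d": 0.125}
lengths = huffman_code_lengths(probs)
average_length = sum(probs[s] * lengths[s] for s in probs)
entropy_bits = -sum(p * math.log2(p) for p in probs.values())
```

For this source the code lengths are 1, 2, 3, and 3 bits, and the average length of 1.75 bits per symbol equals the entropy exactly. That equality holds because every probability is a power of 1/2; for other distributions the Huffman average is at least the entropy and less than one bit above it.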

Information vs Entropy in Artificial Intelligence

In artificial intelligence, information quantifies the reduction of uncertainty about a given dataset or model, while entropy measures the degree of unpredictability or disorder within that data. High entropy indicates more randomness, so a model has less regularity to exploit, whereas lower entropy suggests more structured data that is easier to learn from. Both quantities appear directly in common algorithms: decision trees choose the split with the highest information gain, and classifiers are typically trained by minimizing a cross-entropy loss between predicted and observed labels.
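
One common place entropy enters machine learning is the cross-entropy loss, which measures how many bits a model's predicted distribution needs, on average, to encode outcomes drawn from the true distribution. The sketch below is a plain-Python illustration, not any particular library's implementation; the function name and all three distributions are hypothetical.

```python
import math

def cross_entropy(true_dist, predicted_dist):
    """Cross-entropy in bits between a true and a predicted distribution.

    Assumes the prediction gives nonzero probability to every outcome
    that the true distribution can produce.
    """
    return -sum(
        p * math.log2(q)
        for p, q in zip(true_dist, predicted_dist)
        if p > 0
    )

# Hypothetical class frequencies and two hypothetical model predictions.
true_dist = [0.7, 0.2, 0.1]
good_model = [0.6, 0.3, 0.1]
poor_model = [0.1, 0.2, 0.7]

entropy_bits = cross_entropy(true_dist, true_dist)   # reduces to the entropy of true_dist
good_loss = cross_entropy(true_dist, good_model)
poor_loss = cross_entropy(true_dist, poor_model)
```

The loss is smallest when the prediction matches the true distribution, where it equals the entropy, and it grows as the prediction drifts away, which is why minimizing it pushes a model toward the observed label frequencies.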

Key Differences and Interdependence: Summing Up

Information quantifies the reduction of uncertainty, while entropy measures the inherent uncertainty or randomness within a system. High entropy means less predictable data, so each message carries more information on average; a fully predictable source has zero entropy and its messages convey nothing new. Their interdependence comes down to this: the information a receiver gains from a message equals the amount by which that message reduces the receiver's uncertainty about the source.

libterm.com
