Kolmogorov Complexity measures the shortest possible description length of an object, such as a string, in a fixed computational language, reflecting its algorithmic randomness and information content. This concept helps in understanding data compression, randomness tests, and the limits of computability. Explore the article to see how Kolmogorov Complexity impacts computer science and your understanding of information theory.

Table of Comparison

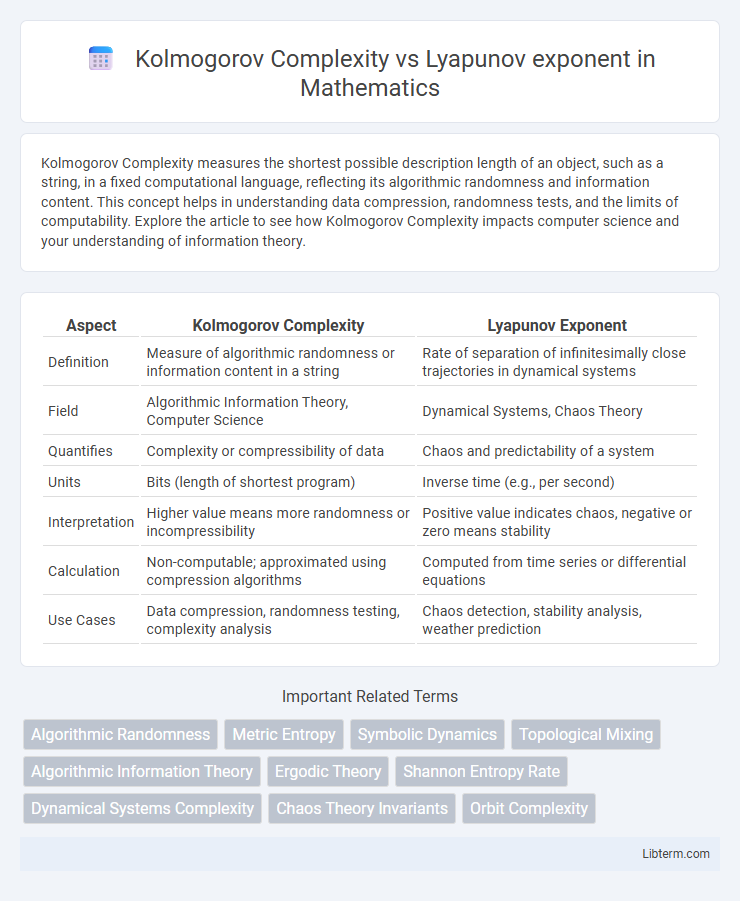

| Aspect | Kolmogorov Complexity | Lyapunov Exponent |

|---|---|---|

| Definition | Measure of algorithmic randomness or information content in a string | Rate of separation of infinitesimally close trajectories in dynamical systems |

| Field | Algorithmic Information Theory, Computer Science | Dynamical Systems, Chaos Theory |

| Quantifies | Complexity or compressibility of data | Chaos and predictability of a system |

| Units | Bits (length of shortest program) | Inverse time (e.g., per second) |

| Interpretation | Higher value means more randomness or incompressibility | Positive value indicates chaos, negative or zero means stability |

| Calculation | Non-computable; approximated using compression algorithms | Computed from time series or differential equations |

| Use Cases | Data compression, randomness testing, complexity analysis | Chaos detection, stability analysis, weather prediction |

Introduction to Complexity and Chaos

Kolmogorov Complexity measures the shortest algorithmic description length of a data sequence, revealing the intrinsic computational complexity of a system. Lyapunov exponent quantifies the average exponential rate of divergence of nearby trajectories in a dynamical system, indicating sensitivity to initial conditions and chaotic behavior. Both concepts provide distinct yet complementary frameworks for analyzing complexity and chaos in mathematical and physical systems.

Defining Kolmogorov Complexity

Kolmogorov Complexity quantifies the shortest algorithmic description length of a string, reflecting its intrinsic randomness or information content. Unlike the Lyapunov exponent, which measures the rate of divergence in dynamical systems to characterize chaos, Kolmogorov Complexity evaluates the compressibility and predictability of data sequences. This metric is crucial in theoretical computer science and information theory for assessing system complexity independent of probabilistic assumptions.

Understanding Lyapunov Exponents

Lyapunov exponents measure the rate of separation of infinitesimally close trajectories in dynamic systems, quantifying chaos and predictability. A positive Lyapunov exponent indicates sensitive dependence on initial conditions, signifying chaos, while a zero or negative value implies periodic or stable behavior. Understanding Lyapunov exponents is crucial for distinguishing between chaotic and regular dynamics, complementing Kolmogorov complexity which assesses the algorithmic randomness of system outputs.

Mathematical Foundations of Both Concepts

Kolmogorov complexity quantifies the shortest algorithmic description length of a string, formalizing the notion of randomness and compressibility through Turing machines and algorithmic information theory. The Lyapunov exponent measures the average exponential rate of divergence of nearby trajectories in dynamical systems, defined using limits of logarithmic separation growth over time. Both rely on rigorous mathematical frameworks: Kolmogorov complexity anchors in computability theory and information theory, while the Lyapunov exponent emerges from ergodic theory and differential equations.

Algorithmic Information Theory and Dynamical Systems

Kolmogorov Complexity measures the shortest algorithmic description length of a string, quantifying its inherent randomness and information content within Algorithmic Information Theory. Lyapunov exponent characterizes the average rate of exponential divergence of nearby trajectories in Dynamical Systems, indicating sensitivity to initial conditions and chaos. Both concepts intersect by linking computational complexity and predictability, where high Kolmogorov Complexity corresponds to systems with positive Lyapunov exponents and unpredictable dynamic behavior.

Applications in Physics and Computer Science

Kolmogorov Complexity measures the minimal description length of a data sequence, making it crucial for data compression and algorithmic information theory in computer science. Lyapunov exponents quantify the rate of separation of infinitesimally close trajectories in dynamical systems, providing insight into chaos theory and stability analysis in physics. Combining these concepts enhances the understanding of chaotic systems by linking computational complexity with system predictability and entropy.

Comparing Predictability and Randomness

Kolmogorov Complexity quantifies the randomness of a sequence by measuring the shortest algorithmic description needed to reproduce it, providing a metric for intrinsic unpredictability. The Lyapunov exponent evaluates the sensitivity of a dynamical system to initial conditions, indicating the rate at which nearby trajectories diverge, thus assessing predictability in chaotic systems. While Kolmogorov Complexity addresses randomness in data patterns, the Lyapunov exponent focuses on instability and the predictability horizon in time-evolving systems.

Measuring Disorder: Kolmogorov vs Lyapunov

Kolmogorov Complexity quantifies disorder by measuring the shortest algorithmic description of a dataset, reflecting its inherent randomness and informational content. Lyapunov exponent measures disorder by evaluating the sensitivity to initial conditions in dynamical systems, quantifying the rate of divergence of nearby trajectories. While Kolmogorov complexity addresses computational randomness, Lyapunov exponent captures dynamic instability and chaotic behavior in physical processes.

Limitations and Challenges in Interpretation

Kolmogorov Complexity faces challenges in practical computation due to its uncomputability and dependence on the choice of universal Turing machine, limiting its direct application in quantifying system randomness. Lyapunov exponent, while effective in measuring local exponential divergence in dynamical systems, struggles with noise sensitivity and difficulties in interpreting values in non-stationary or high-dimensional systems. Both metrics require careful consideration of context, with Kolmogorov Complexity providing theoretical bounds on randomness and Lyapunov exponent offering measurable insights into chaos, yet neither alone fully characterizes system complexity or predictability.

Future Directions and Interdisciplinary Insights

Exploring the intersection of Kolmogorov Complexity and Lyapunov exponent offers promising future directions in quantifying system unpredictability by integrating algorithmic information theory with dynamical systems analysis. Interdisciplinary insights from fields such as computational neuroscience, climate modeling, and financial engineering can advance predictive modeling by combining complexity measures with chaos indicators. Developing hybrid frameworks that leverage both metrics could enhance understanding of emergent behaviors in complex adaptive systems across physics and biology.

Kolmogorov Complexity Infographic

libterm.com

libterm.com