Recurrence refers to a system's return to a state it occupied earlier, or to states arbitrarily close to it, after a period of evolving away. In probability theory and dynamical systems it is usually contrasted with mixing, a stronger property under which the system gradually forgets its initial state. Explore this article to understand how the two notions differ, how they are formalized, and where each matters in practice.

Table of Comparison

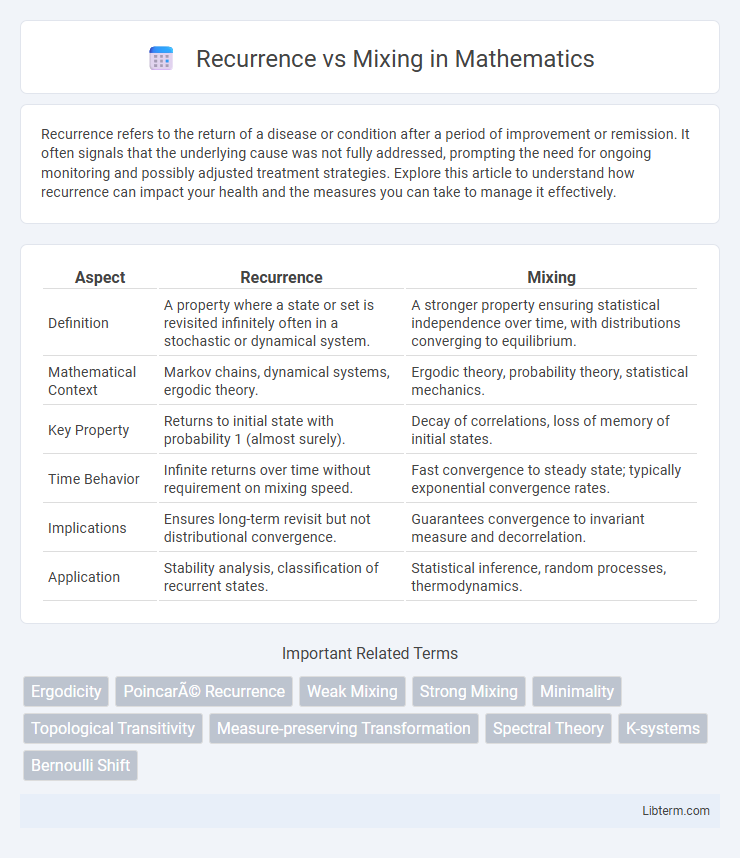

| Aspect | Recurrence | Mixing |
|---|---|---|
| Definition | A property where a state or set is revisited infinitely often in a stochastic or dynamical system. | A stronger property under which events at distant times become asymptotically independent and distributions converge to equilibrium. |
| Mathematical context | Markov chains, dynamical systems, ergodic theory. | Ergodic theory, probability theory, statistical mechanics. |
| Key property | Returns to the initial state (or set) with probability 1, i.e., almost surely. | Decay of correlations; loss of memory of the initial state. |
| Time behavior | Infinitely many returns, with no requirement on how fast the system mixes. | Correlations decay to zero; mixing alone implies no particular rate, and exponential rates require stronger conditions such as a spectral gap. |
| Implications | Guarantees long-term revisits but not distributional convergence. | Guarantees convergence to the invariant measure and decorrelation. |
| Applications | Stability analysis, classification of recurrent states. | Statistical inference, random processes, thermodynamics. |

Introduction to Recurrence and Mixing

A state of a Markov chain is recurrent if the chain, started from that state, returns to it with probability 1; otherwise it is transient. Recurrence therefore describes long-run revisiting, not how the chain's distribution behaves. Mixing describes how a system loses memory of its initial state: for an irreducible, aperiodic chain on a finite state space, the distribution at time \( n \) converges to the stationary distribution, and the mixing time measures how many steps this takes. Understanding recurrence and mixing is essential for analyzing ergodic properties and convergence rates in probabilistic models.
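
The difference can be seen numerically on two small chains. The sketch below is a minimal illustration, assuming a two-state chain that always flips state and a hypothetical "sticky" chain that stays put with probability 0.9 or 0.8; both are finite and irreducible, so every state is recurrent. It tracks the total variation distance between the time-\( n \) distribution and the stationary distribution and reports the mixing time using the common threshold of 1/4. The helper names are illustrative, not from any library.

```python
def step(dist, P):
    """Advance a distribution one step: returns the row vector dist @ P."""
    n = len(P)
    return [sum(dist[i] * P[i][j] for i in range(n)) for j in range(n)]


def tv_distance(p, q):
    """Total variation distance between two distributions on the same finite set."""
    return 0.5 * sum(abs(a - b) for a, b in zip(p, q))


def tv_trace(P, pi, start, steps):
    """TV distance to pi of the chain's distribution at times 0..steps, from `start`."""
    dist = [1.0 if i == start else 0.0 for i in range(len(P))]
    trace = [tv_distance(dist, pi)]
    for _ in range(steps):
        dist = step(dist, P)
        trace.append(tv_distance(dist, pi))
    return trace


def mixing_time(P, pi, eps=0.25, max_steps=1000):
    """Smallest n at which every starting state is within eps of pi in TV distance,
    or None if that does not happen within max_steps."""
    traces = [tv_trace(P, pi, s, max_steps) for s in range(len(P))]
    for n in range(max_steps + 1):
        if max(trace[n] for trace in traces) <= eps:
            return n
    return None


# Periodic chain: always switch state, so each state recurs every 2 steps.
flip = [[0.0, 1.0],
        [1.0, 0.0]]
# Aperiodic "sticky" chain; its stationary distribution is (2/3, 1/3).
sticky = [[0.9, 0.1],
          [0.2, 0.8]]
```

Under these assumptions `mixing_time(sticky, [2/3, 1/3])` returns 3. The flip chain, by contrast, stays at distance 1/2 from its stationary distribution \( (1/2, 1/2) \) forever, so `mixing_time(flip, [0.5, 0.5])` returns `None`: recurrence without mixing.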

Defining Recurrence in Dynamical Systems

Recurrence in dynamical systems refers to the property that a system's state returns arbitrarily close to its initial condition after some evolution time. This concept is central to understanding long-term behavior in phase space and is formalized by the Poincaré recurrence theorem: for a measure-preserving map on a space of finite measure, almost every point of any set of positive measure returns to that set infinitely often. Unlike mixing, which implies a thorough statistical blending of states over time, recurrence only requires repeated approximate returns to the original state; it does not require the system to spread out over the whole space.
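
An irrational rotation of the circle is a standard example: it preserves length (Lebesgue measure), every orbit comes back arbitrarily close to its start, yet the system is not mixing. The minimal sketch below assumes the golden-ratio conjugate as the rotation number (an illustrative choice) and measures how long an orbit takes to re-enter a neighbourhood of its starting point; the function names are hypothetical.

```python
import math

# Illustrative rotation number: the golden-ratio conjugate, an irrational number.
ALPHA = (math.sqrt(5) - 1) / 2


def circle_dist(x, y):
    """Distance between two points of the circle [0, 1) with 0 and 1 identified."""
    d = abs(x - y) % 1.0
    return min(d, 1.0 - d)


def first_return(x0, eps, alpha=ALPHA, max_steps=10**6):
    """First n >= 1 for which x0 + n*alpha (mod 1) lies within eps of x0,
    or None if no such n is found within max_steps."""
    x = x0
    for n in range(1, max_steps + 1):
        x = (x + alpha) % 1.0
        if circle_dist(x, x0) < eps:
            return n
    return None


def return_times(x0, eps, n_steps, alpha=ALPHA):
    """All n in 1..n_steps at which the orbit is back within eps of x0."""
    x, times = x0, []
    for n in range(1, n_steps + 1):
        x = (x + alpha) % 1.0
        if circle_dist(x, x0) < eps:
            times.append(n)
    return times
```

With these definitions `first_return(0.3, 0.1)` is 5 and `first_return(0.3, 0.01)` is 55: shrinking the neighbourhood lengthens the wait, but returns keep occurring, as `return_times` shows. For this particular rotation number the record return times are Fibonacci numbers.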

Understanding Mixing: Concept and Significance

Mixing refers to the property of a dynamical system in which any two measurable subsets of the space become increasingly intertwined over time, so that the system evolves toward statistical uniformity with respect to its invariant measure. This matters because it guarantees that initial conditions lose their influence: long-term behavior becomes predictable in a statistical sense even when individual trajectories are chaotic. Understanding mixing gives insight into the ergodic properties of a system and distinguishes it from mere recurrence, in which points return close to their starting positions without the whole space necessarily being blended.

Key Differences Between Recurrence and Mixing

In machine learning the two terms name architectural ideas rather than properties of dynamical systems. Recurrence in neural networks means feedback loops that let information persist, enabling models such as RNNs to capture sequential and temporal dependencies in data. Mixing, as in the MLP-Mixer architecture, alternates token-mixing MLPs (which combine information across positions, separately for each channel) with channel-mixing MLPs (which combine features within each position), with no recurrent connections, so all positions can be processed in parallel. The key difference is that recurrence processes a sequence step by step while carrying a hidden state, whereas mixing operates on a fixed-length input all at once, combining spatial and feature information with dense layers.
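
The structural difference can be sketched in a few lines of plain Python. This is a minimal illustration under stated assumptions: the weights used with it are hypothetical toy values, the recurrent cell is a simple Elman-style tanh RNN, and the mixing layer keeps MLP-Mixer's alternation of token mixing and channel mixing with residual connections but replaces its layer normalization and two-layer GELU MLPs with a single linear map followed by ReLU.

```python
import math


def matvec(W, v):
    """Multiply a matrix W (list of rows) by a vector v."""
    return [sum(w * x for w, x in zip(row, v)) for row in W]


def transpose(M):
    """Swap rows and columns of a list-of-lists matrix."""
    return [list(col) for col in zip(*M)]


def relu(v):
    return [max(0.0, a) for a in v]


def rnn_forward(xs, W_h, W_x):
    """Elman-style recurrence h_t = tanh(W_h h_{t-1} + W_x x_t), starting from h_0 = 0.
    Each step needs the previous hidden state, so the loop is inherently sequential."""
    h = [0.0] * len(W_h)
    states = []
    for x in xs:
        pre = [a + b for a, b in zip(matvec(W_h, h), matvec(W_x, x))]
        h = [math.tanh(p) for p in pre]
        states.append(h)
    return states


def mixer_layer(X, W_tok, W_ch):
    """Simplified Mixer-style layer on a tokens x channels table X (no recurrence).
    Token mixing applies W_tok along the token axis of every channel column;
    channel mixing applies W_ch along the channel axis of every token row.
    Columns (then rows) are processed independently, so each stage is parallelizable."""
    mixed_cols = [relu(matvec(W_tok, col)) for col in transpose(X)]
    Y = [[x + m for x, m in zip(x_row, m_row)]  # residual connection
         for x_row, m_row in zip(X, transpose(mixed_cols))]
    return [[y + m for y, m in zip(y_row, relu(matvec(W_ch, y_row)))]  # residual
            for y_row in Y]
```

The token-mixing matrix `W_tok` is square in the number of tokens, which is why a Mixer-style layer expects a fixed input length. Changing the last token alters the Mixer's output at the first position but leaves the RNN's first hidden state untouched, since the RNN only looks backward.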

Mathematical Formulation of Recurrence

Recurrence relations define a sequence by expressing each term as a function of preceding terms, typically written \( a_{n} = f(a_{n-1}, a_{n-2}, \ldots) \). A first-order relation \( a_{n} = f(a_{n-1}) \) is exactly the iteration of a map \( f \), so these formulations describe the stepwise evolution of discrete dynamical systems and let one study their stability; for example, a fixed point \( a^{*} = f(a^{*}) \) of a differentiable \( f \) is attracting when \( |f'(a^{*})| < 1 \). Despite the shared name, recurrence relations are a different notion from the recurrence of states; they contrast with mixing processes, which concern the statistical blending and convergence of states over time.
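
The general form \( a_{n} = f(a_{n-1}, a_{n-2}, \ldots) \) translates directly into a loop that keeps a sliding window of the most recent terms. The helper below (`iterate_recurrence`, a hypothetical name) is a minimal sketch of that idea, applied to the Fibonacci relation and to a first-order affine map whose fixed point at 2 attracts every orbit because the map contracts distances by a factor of 0.5.

```python
from collections import deque


def iterate_recurrence(f, initial, n):
    """First n terms a_0, ..., a_{n-1} of a k-th order recurrence
    a_m = f(a_{m-1}, a_{m-2}, ..., a_{m-k}), where `initial` = [a_0, ..., a_{k-1}]."""
    k = len(initial)
    terms = list(initial)
    # window[0] is the most recent term, window[k-1] the oldest one still needed.
    window = deque(reversed(initial), maxlen=k)
    while len(terms) < n:
        nxt = f(*window)
        terms.append(nxt)
        window.appendleft(nxt)  # maxlen drops the oldest term from the right end
    return terms[:n]


# Second order: Fibonacci numbers, a_n = a_{n-1} + a_{n-2}.
fib = iterate_recurrence(lambda a1, a2: a1 + a2, [0, 1], 10)

# First order: a_n = 0.5 * a_{n-1} + 1 has the attracting fixed point a* = 2.
affine = iterate_recurrence(lambda a1: 0.5 * a1 + 1, [10.0], 60)
```

Here `fib` is `[0, 1, 1, 2, 3, 5, 8, 13, 21, 34]` and `affine` settles at 2.0, whereas replacing the factor 0.5 by 1.5 makes the iterates grow without bound.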

Mathematical Formulation of Mixing

Mixing in dynamical systems is formally described using measure theory. A measure-preserving transformation \( T \) of a probability space with measure \( \mu \) is mixing if, for any two measurable sets \( A \) and \( B \), the measure of \( B \) intersected with the \( n \)-step preimage of \( A \) converges to the product of the individual measures: \(\lim_{n \to \infty} \mu(T^{-n}A \cap B) = \mu(A) \mu(B)\). In words, the events "start in \( B \)" and "be in \( A \) after \( n \) steps" become asymptotically independent, which captures the system's loss of memory; recurrence, by contrast, only guarantees that states return close to their initial positions. Mixing implies ergodicity and strengthens it: ergodicity requires this independence only on average over time, that is, \(\lim_{N \to \infty} \frac{1}{N}\sum_{n=0}^{N-1} \mu(T^{-n}A \cap B) = \mu(A)\mu(B)\), whereas mixing requires it term by term. This provides a rigorous framework for understanding chaotic behavior in measure-preserving transformations.
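
The limit can be checked exactly for two textbook examples on the unit interval with Lebesgue measure: the doubling map \( T(x) = 2x \bmod 1 \), which is mixing, and an irrational rotation \( R(x) = x + \alpha \bmod 1 \), which is ergodic but not mixing. In this minimal sketch the sets are restricted to half-open intervals, the rotation number is assumed to be the golden-ratio conjugate, and the helper names are illustrative. The doubling map is handled with exact fractions, because floating-point iteration of it discards one bit per step and soon collapses to 0.

```python
import math
from fractions import Fraction

ALPHA = (math.sqrt(5) - 1) / 2  # illustrative irrational rotation number


def overlap(a0, a1, b0, b1):
    """Length of the intersection of the intervals [a0, a1) and [b0, b1)."""
    return max(0, min(a1, b1) - max(a0, b0))


def doubling_correlation(A, B, n):
    """mu(T^{-n}A ∩ B) for T(x) = 2x mod 1, with A and B intervals given as pairs.
    T^{-n}[a0, a1) is the union of [(k + a0) / 2**n, (k + a1) / 2**n) for k < 2**n."""
    (a0, a1), (b0, b1) = A, B
    m = 2 ** n
    return sum(overlap((k + a0) / m, (k + a1) / m, b0, b1) for k in range(m))


def rotation_correlation(A, B, n, alpha=ALPHA):
    """mu(R^{-n}A ∩ B) for R(x) = x + alpha mod 1. R^{-n}A is A shifted by -n*alpha,
    possibly wrapping past 0, so it is handled as at most two pieces."""
    (a0, a1), (b0, b1) = A, B
    start, length = (a0 - n * alpha) % 1.0, a1 - a0
    if start + length <= 1.0:
        return overlap(start, start + length, b0, b1)
    return (overlap(start, 1.0, b0, b1)
            + overlap(0.0, start + length - 1.0, b0, b1))
```

With \( A = B = [0, 1/2) \) given as `Fraction` endpoints, the doubling map yields exactly `Fraction(1, 4)`, which equals \( \mu(A)\mu(B) \), for every \( n \ge 1 \). The rotation's values keep swinging (about 0.41 at \( n = 5 \) and above 0.49 at \( n = 55 \)) and never settle at 1/4.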

Real-World Examples Illustrating Recurrence

Recurrent neural networks (RNNs) are well suited to sequential data, as in time-series forecasting and natural language processing, where earlier inputs influence later predictions through the hidden state. Real-world examples include speech recognition systems that process audio signals over time and financial models that forecast price movements from historical data. These use cases rely on recurrence's ability to carry information forward across time steps, which a fixed-length mixing model can do only within its input window.

Applications and Implications of Mixing

Mixing ensures that a process explores its state space thoroughly, which is crucial for sampling algorithms in machine learning and statistical physics. In Markov chain Monte Carlo (MCMC), for example, the sampler is a Markov chain designed to have the target distribution as its stationary distribution, and the chain's mixing time determines how many steps must pass before its samples reliably represent that target. Strong mixing properties also make long-run statistical predictions (averages and frequencies, rather than individual trajectories) reliable in the analysis of complex systems, in fields such as economics and climate science.
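
To make the MCMC connection concrete, here is a minimal random-walk Metropolis sampler on a small discrete state space. It assumes a hypothetical target with unnormalized weights 1, 2, 3, 4 on states arranged in a cycle; because the proposal is symmetric, a move from \( x \) to \( y \) is accepted with probability \( \min(1, \pi(y)/\pi(x)) \), which makes the target stationary. The long-run visit frequencies approach the target only because the chain mixes; the seeded generator just makes runs reproducible.

```python
import random


def metropolis_frequencies(weights, n_samples, x0=0, seed=0):
    """Run random-walk Metropolis on states 0..K-1 arranged in a cycle and return
    the fraction of steps spent in each state. `weights` are unnormalized target
    probabilities; proposals move one step left or right with probability 1/2 each."""
    rng = random.Random(seed)
    K = len(weights)
    x = x0
    counts = [0] * K
    for _ in range(n_samples):
        y = (x + rng.choice((-1, 1))) % K
        # Symmetric proposal, so accept with probability min(1, w[y] / w[x]).
        if rng.random() < weights[y] / weights[x]:
            x = y
        counts[x] += 1
    return [c / n_samples for c in counts]
```

A call such as `metropolis_frequencies([1, 2, 3, 4], 200_000)` should return frequencies close to `[0.1, 0.2, 0.3, 0.4]`; comparing short and long runs is a crude way to watch mixing at work, and practical diagnostics such as effective sample size are beyond this sketch.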

Recurrence and Mixing in Ergodic Theory

In ergodic theory, recurrence is the property that almost every point of a measure-preserving dynamical system returns arbitrarily close to its initial position infinitely often, as formalized by the Poincaré recurrence theorem. Mixing is a much stronger notion: the system's future states become statistically independent of its initial states over time, so that \( \mu(T^{-n}A \cap B) \) converges to \( \mu(A)\mu(B) \). Between the two lies ergodicity, under which time averages along almost every orbit equal space averages. These notions form a hierarchy (mixing implies ergodicity, while recurrence holds for every measure-preserving system on a finite measure space) that reflects increasing levels of randomness, with mixing providing the most thorough statistical homogenization of the three.
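
Ergodicity can be observed directly through Birkhoff time averages. The sketch below is a minimal illustration assuming the golden-ratio rotation of the circle and the set \( A = [0, 0.3) \), both illustrative choices: it counts how often a single orbit visits \( A \). For this ergodic system the fraction approaches \( \mu(A) = 0.3 \), even though the rotation is not mixing.

```python
import math

ALPHA = (math.sqrt(5) - 1) / 2  # illustrative irrational rotation number


def in_A(x):
    """Indicator of the set A = [0, 0.3), whose Lebesgue measure is 0.3."""
    return 0.0 <= x < 0.3


def birkhoff_average(indicator, x0, n, alpha=ALPHA):
    """Fraction of the orbit points x0, x0 + alpha, ..., x0 + (n-1)*alpha (mod 1)
    for which `indicator` is true."""
    x, hits = x0, 0
    for _ in range(n):
        hits += indicator(x)
        x = (x + alpha) % 1.0
    return hits / n
```

Different starting points, for example `birkhoff_average(in_A, 0.1, 100_000)` and `birkhoff_average(in_A, 0.77, 100_000)`, give nearly the same value, as ergodicity predicts; what the rotation lacks is the decay of correlations that mixing adds.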

Conclusion: Recurrence vs Mixing in Practice

Recurrence offers structured temporal dependencies suited to sequential data, while mixing architectures process all positions in parallel and can relate distant positions within a single layer. In practice, this parallelism lets mixing models use modern hardware more efficiently and scale to larger datasets, at the cost of requiring a fixed input length. The choice between recurrence and mixing therefore hinges on the application's latency requirements and sequence structure, with mixing often favored for large-scale, high-throughput tasks on fixed-size inputs and recurrence for streaming or variable-length sequences.

Recurrence Infographic

libterm.com
