In statistics, association describes any relationship between variables, while collinearity is a specific problem that arises when predictor variables in a regression model are highly correlated with one another. Knowing the difference helps you choose the right diagnostics and avoid misreading regression results. Explore the rest of the article to see how the two concepts compare and how to detect and address each.

Table of Comparison

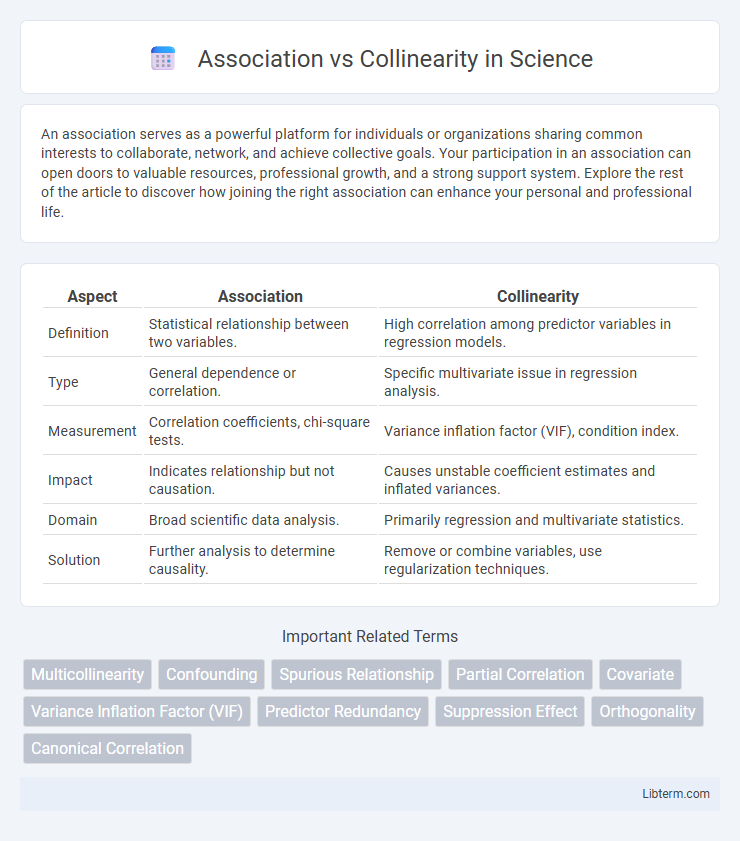

| Aspect | Association | Collinearity |
|---|---|---|
| Definition | Statistical relationship between two variables. | High correlation among predictor variables in regression models. |
| Type | General dependence or correlation. | Specific multivariate issue in regression analysis. |
| Measurement | Correlation coefficients, chi-square tests. | Variance inflation factor (VIF), condition index. |
| Impact | Indicates relationship but not causation. | Causes unstable coefficient estimates and inflated variances. |
| Domain | Broad scientific data analysis. | Primarily regression and multivariate statistics. |
| Solution | Further analysis to determine causality. | Remove or combine variables, use regularization techniques. |

Understanding Association in Data Analysis

Association in data analysis measures the relationship between variables, indicating how changes in one variable correspond with changes in another without implying causation. It is crucial for identifying patterns, trends, and dependencies in datasets, guiding hypothesis generation and decision-making processes. Unlike collinearity, which specifically addresses linear relationships among predictor variables in regression models, association encompasses a broader range of relationships, including linear and non-linear connections.
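To make the linear versus non-linear distinction concrete, here is a minimal sketch using NumPy on synthetic, noise-free data (the helper names `pearson` and `spearman_no_ties` are hypothetical). Pearson's coefficient captures only linear association, so it falls short of 1 for a curved but monotonic relationship and is essentially zero for a U-shaped one, even though y is fully determined by x.

```python
import numpy as np

def pearson(x, y):
    """Pearson correlation: strength and direction of a *linear* association."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    return float(np.corrcoef(x, y)[0, 1])

def spearman_no_ties(x, y):
    """Spearman correlation as Pearson on ranks (assumes no tied values)."""
    rank_x = np.argsort(np.argsort(x))
    rank_y = np.argsort(np.argsort(y))
    return pearson(rank_x, rank_y)

x = np.linspace(-3, 3, 61)

linear = 2 * x + 1      # linear, positive association
monotone = np.exp(x)    # non-linear but monotonic association
u_shape = x ** 2        # non-linear, non-monotonic association

print(round(pearson(x, linear), 3))             # 1.0
print(round(pearson(x, monotone), 3))           # below 1: the curve is not a straight line
print(round(spearman_no_ties(x, monotone), 3))  # 1.0: the ranks agree perfectly
print(round(pearson(x, u_shape), 3))            # about 0, although y depends entirely on x
```

Spearman's rank correlation recovers the monotonic relationship; neither coefficient detects the U shape, which is why plotting the data remains a useful check.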

Defining Collinearity and Its Implications

Collinearity refers to a statistical phenomenon where two or more predictor variables in a regression model exhibit a high degree of correlation, making it difficult to isolate their individual effects on the response variable. This strong linear relationship among predictors can lead to inflated standard errors, unstable coefficient estimates, and reduced model interpretability. Understanding and addressing collinearity is crucial for ensuring reliable regression analysis outcomes and robust inference.
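A small simulation can illustrate this instability. The sketch assumes NumPy and a made-up data-generating process in which `x2` nearly duplicates `x1` (true coefficients 2 and 3); it refits ordinary least squares on 200 fresh synthetic samples and compares how much the individual coefficients and their sum vary.

```python
import numpy as np

rng = np.random.default_rng(0)

def simulate_fit(n, noise_sd_x2):
    """Draw one synthetic dataset and return OLS coefficients (intercept, b1, b2)."""
    x1 = rng.normal(size=n)
    x2 = x1 + rng.normal(scale=noise_sd_x2, size=n)  # x2 nearly duplicates x1
    y = 1.0 + 2.0 * x1 + 3.0 * x2 + rng.normal(size=n)
    X = np.column_stack([np.ones(n), x1, x2])
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    return coef

fits = np.array([simulate_fit(n=100, noise_sd_x2=0.05) for _ in range(200)])
b1, b2 = fits[:, 1], fits[:, 2]

print("spread of b1:     ", b1.std().round(2))
print("spread of b2:     ", b2.std().round(2))
print("spread of b1 + b2:", (b1 + b2).std().round(2))
```

The individual estimates swing widely from sample to sample, while their sum stays close to the true combined effect of 5: the data pin down the shared effect but cannot say how to split it between the two predictors.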

Key Differences Between Association and Collinearity

Association describes a general relationship between two variables, indicating how one variable changes as the other changes without implying causation. Collinearity specifically refers to a high linear correlation between two or more predictor variables in a regression model, which can cause instability in coefficient estimates. Key differences include that association applies broadly to any relationship type, while collinearity pertains to multivariate linear dependencies affecting model accuracy and interpretability.

Types of Associations in Statistical Modeling

Association in statistical modeling refers to any relationship between two variables, which can be classified into types such as positive, negative, linear, and nonlinear associations, reflecting the direction and form of their connection. Collinearity specifically describes a linear association between predictor variables where one can be nearly expressed as a linear combination of others, leading to redundancy and potential instability in regression coefficients. Understanding the types of associations, including the presence of collinearity, is crucial for model selection, interpretation, and improving the accuracy of inferential statistics.
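The phrase "nearly expressed as a linear combination of others" matters because such a dependency can hide behind modest pairwise correlations. In this sketch (synthetic data, NumPy assumed), `x4` is built as roughly `x1 + x2 + x3`: no pairwise correlation reaches 0.8, yet regressing `x4` on the other three explains almost all of its variance.

```python
import numpy as np

rng = np.random.default_rng(1)
n = 500
x1, x2, x3 = rng.normal(size=(3, n))
x4 = x1 + x2 + x3 + rng.normal(scale=0.1, size=n)  # nearly a linear combination of the others

X = np.column_stack([x1, x2, x3, x4])
corr = np.corrcoef(X, rowvar=False)
max_pairwise = np.max(np.abs(corr[np.triu_indices(4, k=1)]))

# R^2 from regressing x4 on x1, x2, x3 (with an intercept)
A = np.column_stack([np.ones(n), x1, x2, x3])
coef, *_ = np.linalg.lstsq(A, x4, rcond=None)
resid = x4 - A @ coef
r_squared = 1 - resid.var() / x4.var()

print("largest pairwise |r|:", round(max_pairwise, 2))  # roughly 0.6
print("R^2 of x4 on others: ", round(r_squared, 4))     # very close to 1
```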

Causes and Sources of Collinearity

Collinearity arises when predictor variables in a regression model exhibit strong linear relationships, often due to shared underlying factors such as similar data collection methods, inherent correlations in natural phenomena, or redundant variables measuring the same concept. It differs from mere association because collinearity specifically impairs the estimation of individual regression coefficients, leading to inflated standard errors and unstable estimates. Common sources include highly correlated demographic variables, multicollinear survey items, and economic indicators influenced by common macroeconomic trends.
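Redundant variables measuring the same concept are the extreme case. As a sketch, assuming NumPy and synthetic readings, recording temperature in both Celsius and Fahrenheit makes one column an exact linear function of the intercept and the other, so the design matrix loses a rank and least squares cannot separate their coefficients.

```python
import numpy as np

rng = np.random.default_rng(2)
celsius = rng.uniform(-10, 35, size=50)
fahrenheit = celsius * 9 / 5 + 32         # same concept, different unit: exact linear relation
humidity = rng.uniform(20, 90, size=50)   # a genuinely distinct measurement

X_redundant = np.column_stack([np.ones(50), celsius, fahrenheit, humidity])
X_clean = np.column_stack([np.ones(50), celsius, humidity])

print(np.linalg.matrix_rank(X_redundant))  # 3, not 4: one column adds no information
print(np.linalg.matrix_rank(X_clean))      # 3: full column rank
```

Dropping one of the two temperature columns restores full rank without losing any information.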

Detecting Association in Data Sets

Detecting association in data sets involves evaluating the relationship between variables without implying causation. Pearson's correlation coefficient quantifies the strength and direction of a linear association between two numeric variables, while the chi-square test of independence checks whether two categorical variables are associated; the strength of a categorical association is often summarized with Cramér's V. Understanding association helps identify potential patterns and dependencies, whereas collinearity specifically refers to high intercorrelation among predictor variables in regression models, which can distort coefficient estimates.
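For the categorical case, the chi-square statistic compares observed counts with the counts expected under independence. The function below is a minimal NumPy sketch (the name `chi_square_independence` and the example tables are hypothetical); it returns the statistic and Cramér's V but no p-value. In practice a library routine such as SciPy's `scipy.stats.chi2_contingency` supplies the p-value; note that it applies Yates' continuity correction to 2x2 tables by default, so its statistic can differ from this uncorrected one.

```python
import numpy as np

def chi_square_independence(table):
    """Pearson chi-square statistic and Cramér's V for a contingency table of counts."""
    observed = np.asarray(table, dtype=float)
    total = observed.sum()
    # Expected counts if the row and column variables were independent
    expected = np.outer(observed.sum(axis=1), observed.sum(axis=0)) / total
    chi2 = float(((observed - expected) ** 2 / expected).sum())
    k = min(observed.shape) - 1
    cramers_v = float(np.sqrt(chi2 / (total * k)))
    return chi2, cramers_v

# Hypothetical counts: rows = group A/B, columns = outcome yes/no
print(chi_square_independence([[30, 10], [10, 30]]))  # (20.0, 0.5)
print(chi_square_independence([[10, 20], [20, 40]]))  # (0.0, 0.0): proportional rows, no association
```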

Identifying Collinearity with Statistical Techniques

Collinearity occurs when two or more predictor variables in a regression model are highly correlated, which makes it difficult to estimate individual regression coefficients accurately. Common diagnostics include the Variance Inflation Factor (VIF), where values above 5 or 10 are widely used rules of thumb for problematic collinearity, and the correlation matrix, where pairwise correlations above about 0.8 are a warning sign. Pairwise correlations can miss a predictor that is nearly a linear combination of several others, so eigenvalue decomposition and condition index analysis are useful complements for revealing linear dependencies among the predictors.
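Both diagnostics can be sketched directly in NumPy. The helpers `vif` and `condition_indices` below are hypothetical, not a library API (statsmodels, for example, provides its own `variance_inflation_factor`). This version centers and scales the columns before computing condition indices; Belsley's original formulation scales without centering, so reported values can differ between tools.

```python
import numpy as np

def vif(X):
    """VIF for each column of X (predictors only, no intercept column).

    VIF_j = 1 / (1 - R_j^2), where R_j^2 comes from regressing column j
    on the other columns plus an intercept.
    """
    X = np.asarray(X, dtype=float)
    n, p = X.shape
    out = np.empty(p)
    for j in range(p):
        target = X[:, j]
        others = np.column_stack([np.ones(n), np.delete(X, j, axis=1)])
        coef, *_ = np.linalg.lstsq(others, target, rcond=None)
        resid = target - others @ coef
        r2 = 1 - resid.var() / target.var()
        out[j] = 1 / (1 - r2)
    return out

def condition_indices(X):
    """Largest singular value divided by each singular value,
    after centering each column and scaling it to unit length."""
    X = np.asarray(X, dtype=float)
    Z = X - X.mean(axis=0)
    Z = Z / np.linalg.norm(Z, axis=0)
    s = np.linalg.svd(Z, compute_uv=False)
    return s.max() / s

# Synthetic predictors: b is strongly related to a, c is unrelated
rng = np.random.default_rng(3)
n = 200
a = rng.normal(size=n)
b = a + rng.normal(scale=0.2, size=n)
c = rng.normal(size=n)
X = np.column_stack([a, b, c])

print(vif(X).round(1))
print(condition_indices(X).round(1))
```

Here the VIFs of `a` and `b` land well above 10 while `c` stays near 1. For condition indices, values above about 30 are commonly read as signalling strong dependence.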

Impact of Collinearity on Regression Models

Collinearity occurs when two or more predictor variables in a regression model are highly correlated, leading to unreliable coefficient estimates and inflated standard errors. It undermines the interpretability of individual predictors, making it difficult to determine each one's distinct impact on the dependent variable. Predictions within the range of the observed data are often less affected, because the combined effect of the correlated predictors is still well estimated. Detecting and addressing collinearity, using methods such as variance inflation factor (VIF) analysis or principal component regression, is essential for stable, interpretable coefficients.
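To see the standard-error inflation numerically, this sketch (NumPy, synthetic data, hypothetical helper `ols_standard_errors`) fits the same model twice: once with an independent second predictor and once with a second predictor that nearly copies the first.

```python
import numpy as np

def ols_standard_errors(X, y):
    """Classical OLS coefficient standard errors: sqrt(diag(s^2 (X'X)^-1))."""
    n, p = X.shape
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    resid = y - X @ coef
    s2 = resid @ resid / (n - p)            # unbiased residual variance
    cov = s2 * np.linalg.inv(X.T @ X)       # coefficient covariance matrix
    return np.sqrt(np.diag(cov))

rng = np.random.default_rng(4)
n = 200
x1 = rng.normal(size=n)
noise = rng.normal(size=n)

scenarios = {
    "independent x2": rng.normal(size=n),
    "x2 close to x1": x1 + rng.normal(scale=0.1, size=n),
}

results = {}
for label, x2 in scenarios.items():
    X = np.column_stack([np.ones(n), x1, x2])  # intercept, x1, x2
    y = 1.0 + 2.0 * x1 + 3.0 * x2 + noise
    results[label] = ols_standard_errors(X, y)
    print(f"{label:15s} SE(b1)={results[label][1]:.3f}  SE(b2)={results[label][2]:.3f}")
```

The standard errors in the collinear fit come out several times larger, in line with the VIF: relative to uncorrelated predictors, the variance of each coefficient estimate is scaled up by roughly its VIF.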

Practical Examples: Association vs Collinearity

Association refers to any relationship between two variables where changes in one variable relate to changes in another, such as the positive correlation between exercise frequency and cardiovascular health. Collinearity, a specific type of association, occurs when two or more predictor variables in a regression model are highly correlated, reducing the model's ability to isolate individual variable effects; for example, height and weight often exhibit collinearity when used together to predict body mass index (BMI). In practice, collinearity is detected by calculating variance inflation factors (VIF), which show whether overlap among predictors may distort regression results; a simple measure of association such as Pearson's correlation coefficient describes only one pair of variables at a time.
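For exactly two predictors, such as height and weight, the VIF follows directly from their sample correlation r: VIF = 1 / (1 - r^2). A short sketch (the function name `vif_two_predictors` is hypothetical) shows why a pairwise correlation of 0.8 gives a VIF of only about 2.8; under the usual thresholds, a VIF of 5 corresponds to |r| of about 0.89 and a VIF of 10 to about 0.95.

```python
def vif_two_predictors(r):
    """With exactly two predictors, each has VIF = 1 / (1 - r^2), r = their correlation."""
    return 1.0 / (1.0 - r ** 2)

for r in (0.5, 0.8, 0.9, 0.95):
    print(r, round(vif_two_predictors(r), 2))
# prints one pair per line: 0.5 1.33, 0.8 2.78, 0.9 5.26, 0.95 10.26
```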

Best Practices to Address Collinearity in Analysis

Addressing collinearity starts with identifying highly correlated predictors using the variance inflation factor (VIF) or a correlation matrix, since collinearity inflates standard errors. Techniques such as principal component analysis (PCA), ridge regression, or removing redundant variables can then yield more reliable estimates; note that PCA replaces the original predictors with components, and ridge regression accepts a small bias in exchange for lower variance. Validating models with cross-validation helps confirm that the chosen remedy generalizes beyond the training data.
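Ridge regression can be sketched in a few lines with the closed form (X'X + λI)^-1 X'y on centered data. This is a minimal NumPy illustration under stated assumptions: synthetic data where `x2` nearly duplicates `x1`, a hypothetical helper `ridge`, and an arbitrary penalty `lam=10.0` (in practice the penalty is usually chosen by cross-validation). Setting `lam=0.0` reduces the same formula to ordinary least squares.

```python
import numpy as np

def ridge(X, y, lam):
    """Ridge coefficients (X'X + lam*I)^-1 X'y on centered data; intercept not penalized."""
    Xc = X - X.mean(axis=0)
    yc = y - y.mean()
    p = X.shape[1]
    return np.linalg.solve(Xc.T @ Xc + lam * np.eye(p), Xc.T @ yc)

rng = np.random.default_rng(5)
ols_b1, ridge_b1 = [], []
for _ in range(200):
    n = 100
    x1 = rng.normal(size=n)
    x2 = x1 + rng.normal(scale=0.05, size=n)   # nearly collinear with x1
    y = 2.0 * x1 + 3.0 * x2 + rng.normal(size=n)
    X = np.column_stack([x1, x2])
    ols_b1.append(ridge(X, y, lam=0.0)[0])     # lam = 0 reduces to OLS
    ridge_b1.append(ridge(X, y, lam=10.0)[0])

print("OLS   b1 spread:", np.std(ols_b1).round(2))
print("ridge b1 spread:", np.std(ridge_b1).round(2))
```

Across 200 synthetic samples, the ridge estimate of `b1` varies far less than the OLS estimate. The price is bias: ridge shrinks the poorly determined difference between the two coefficients, so both land near a similar value instead of the true 2 and 3.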

libterm.com
