Double descent describes the counterintuitive phenomenon in machine learning where increasing model complexity beyond the traditional overfitting threshold leads to improved performance instead of deterioration. This challenges classic bias-variance tradeoff theory by showing that highly overparameterized models, such as deep neural networks, can generalize well on unseen data. Explore the rest of the article to understand how double descent reshapes your approach to model training and evaluation.

Table of Comparison

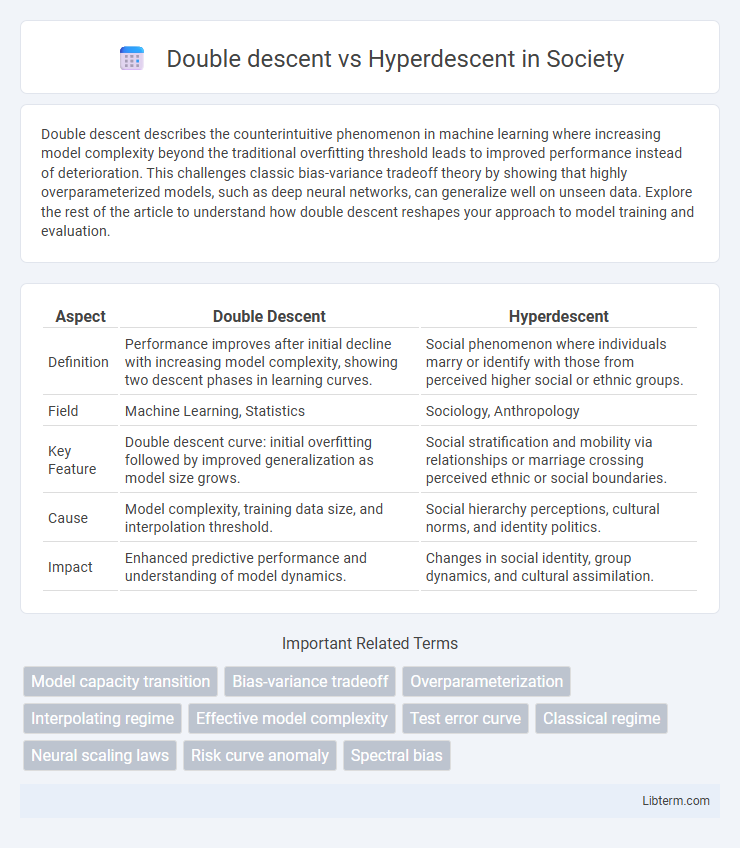

| Aspect | Double Descent | Hyperdescent |

|---|---|---|

| Definition | Performance improves after initial decline with increasing model complexity, showing two descent phases in learning curves. | Social phenomenon where individuals marry or identify with those from perceived higher social or ethnic groups. |

| Field | Machine Learning, Statistics | Sociology, Anthropology |

| Key Feature | Double descent curve: initial overfitting followed by improved generalization as model size grows. | Social stratification and mobility via relationships or marriage crossing perceived ethnic or social boundaries. |

| Cause | Model complexity, training data size, and interpolation threshold. | Social hierarchy perceptions, cultural norms, and identity politics. |

| Impact | Enhanced predictive performance and understanding of model dynamics. | Changes in social identity, group dynamics, and cultural assimilation. |

Introduction to Model Complexity: Double Descent vs Hyperdescent

Model complexity plays a critical role in understanding the phenomena of double descent and hyperdescent in machine learning. Double descent describes the non-monotonic risk curve where test error decreases, then increases, and decreases again as model complexity grows, often linked to overparameterized models like deep neural networks. Hyperdescent extends this concept by exploring even more intricate patterns of generalization error, emphasizing the interplay between model capacity, training dynamics, and data structure in highly complex models.

Traditional Bias-Variance Tradeoff Revisited

Double descent challenges the traditional bias-variance tradeoff by demonstrating that model performance can improve beyond the interpolation threshold, contradicting the conventional U-shaped risk curve. Hyperdescent further extends this phenomenon, showing a second descent phase where overparameterized models continue to generalize well despite extreme complexity. Re-examining the bias-variance tradeoff requires incorporating these behaviors to better understand generalization in modern machine learning models with high capacity.

What is Double Descent in Machine Learning?

Double descent in machine learning refers to a phenomenon where the model's test error initially decreases, then increases around the interpolation threshold, and finally decreases again as model complexity or training data increases. This contrasts with traditional U-shaped bias-variance tradeoff curves by showing a second descent phase where overparameterized models generalize well despite fitting training data perfectly. Understanding double descent aids in optimizing model capacity and avoiding overfitting in deep learning architectures.

Defining Hyperdescent: Beyond Double Descent

Hyperdescent extends the concept of double descent by describing a more complex risk curve where error rates initially decrease, then increase, and decrease again at higher model complexities, followed by yet another rise and fall. This phenomenon highlights multiple peaks and valleys in test error beyond the traditional double descent's single peak, emphasizing the nuanced behavior of model generalization in overparameterized regimes. Understanding hyperdescent is crucial for optimizing deep neural networks, as it reveals hidden performance gains achievable through extreme overparameterization and iterative model scaling.

Key Differences Between Double Descent and Hyperdescent

Double descent describes a phenomenon where model performance improves after initially deteriorating as model complexity or training data size increases, while hyperdescent refers to an exaggerated form with multiple performance dips and peaks beyond the initial double descent curve. Key differences include the complexity of the error curve, with double descent showing a simpler U-shaped pattern followed by improvement, whereas hyperdescent exhibits several oscillations in error rates. Understanding these distinctions aids in optimizing model capacity and training regimes for neural networks and complex machine learning systems.

Mathematical Foundations of Double Descent and Hyperdescent

Double descent and hyperdescent phenomena are rooted in the mathematical analysis of model complexity and generalization error within overparameterized regimes. Double descent is characterized by a risk curve exhibiting two phases of error decrease separated by a peak at the interpolation threshold, mathematically explained using eigenvalue spectra of empirical covariance matrices and implicit regularization effects. Hyperdescent extends this framework by revealing further descents in risk beyond the classical double descent peak, supported by high-dimensional probability theory and refined bias-variance decompositions in deep and kernelized models.

Empirical Evidence: Real-world Examples and Benchmarks

Empirical evidence for double descent phenomena appears prominently in deep neural network training, where test error initially decreases, then increases near interpolation thresholds, and decreases again as model complexity grows, demonstrated in benchmarks like CIFAR-10 and ImageNet classification tasks. Hyperdescent, a less documented concept, suggests continual improvement beyond typical overfitting regimes, yet lacks widespread empirical validation across standard datasets and requires more rigorous exploration with real-world benchmarks. Comparative analyses highlight that double descent effects consistently emerge across different architectures and data settings, confirming the phenomenon's robustness in practical scenarios.

Implications for Neural Network Architecture Design

Double descent illustrates how increasing model capacity beyond the interpolation threshold can improve neural network generalization, challenging traditional bias-variance trade-offs. Hyperdescent extends this concept, showing continuous performance gains with even larger, overparameterized models, suggesting architectures benefit from extreme depth and width. Designing neural networks must consider these phenomena by optimizing capacity and regularization strategies to leverage improved learning without overfitting.

Practical Guidelines for Navigating Double Descent and Hyperdescent

Navigating double descent and hyperdescent requires careful tuning of model complexity and training data size to balance underfitting and overfitting patterns. Employ cross-validation techniques alongside monitoring validation errors to detect unique risk curves associated with double descent, ensuring models do not enter the hyperdescent region where performance degrades sharply. Leveraging early stopping and regularization methods can mitigate hyperdescent effects, improving generalization in high-capacity models like deep neural networks and ensemble methods.

Future Directions and Open Questions in Model Capacity Research

Future directions in model capacity research emphasize understanding the interplay between double descent and hyperdescent phenomena across varying neural network architectures and training regimes. Open questions remain about how model capacity scaling influences generalization errors beyond classical bias-variance tradeoffs, particularly under different regularization methods and data distributions. Investigating these mechanisms could lead to novel training paradigms that optimize performance while preventing overfitting in increasingly complex models.

Double descent Infographic

libterm.com

libterm.com