Deviation refers to the amount by which a measurement differs from a standard or expected value, often used in quality control and data analysis to identify errors or variations. Understanding deviation can help you spot trends and improve accuracy in various fields such as manufacturing, finance, or statistics. Explore the full article to learn how managing deviation enhances precision and decision-making.

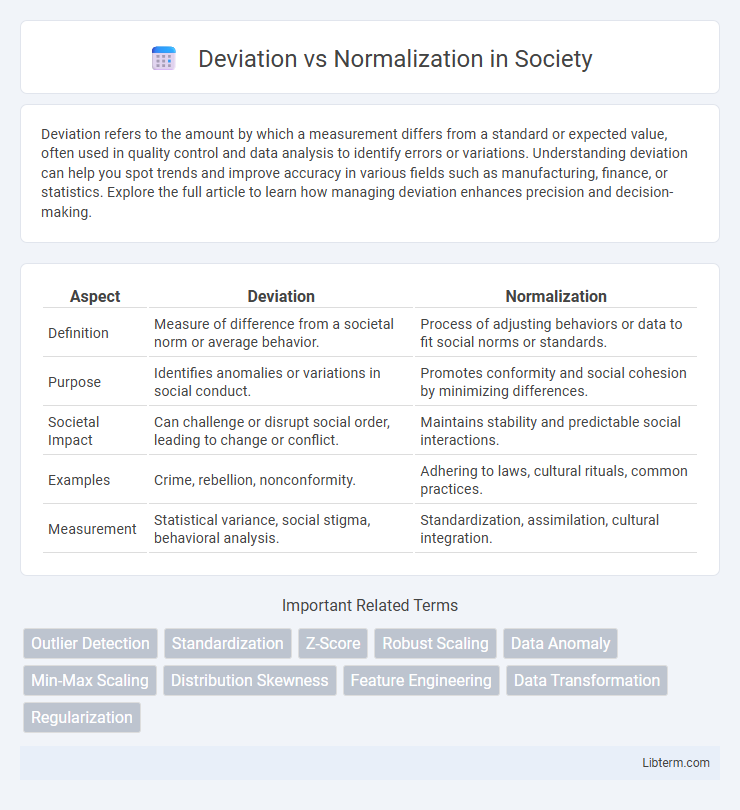

Table of Comparison

| Aspect | Deviation | Normalization |

|---|---|---|

| Definition | Measure of difference from a societal norm or average behavior. | Process of adjusting behaviors or data to fit social norms or standards. |

| Purpose | Identifies anomalies or variations in social conduct. | Promotes conformity and social cohesion by minimizing differences. |

| Societal Impact | Can challenge or disrupt social order, leading to change or conflict. | Maintains stability and predictable social interactions. |

| Examples | Crime, rebellion, nonconformity. | Adhering to laws, cultural rituals, common practices. |

| Measurement | Statistical variance, social stigma, behavioral analysis. | Standardization, assimilation, cultural integration. |

Understanding Deviation and Normalization

Understanding deviation involves measuring how much individual data points differ from the mean, highlighting variability within a dataset. Normalization scales data to a specific range, typically 0 to 1, enabling comparison across different units or magnitudes. Both deviation and normalization are essential techniques in data preprocessing for enhancing model accuracy and interpretability.

Key Definitions: Deviation vs. Normalization

Deviation measures the difference between an individual data point and a reference value, usually the mean, highlighting how far the data varies from the average. Normalization transforms data into a standard scale, typically between 0 and 1, to ensure comparability and reduce bias in analysis. Understanding deviation helps identify data variability, while normalization prepares data for machine learning models by scaling features uniformly.

Historical Context of Data Deviation

Historical data deviation reveals significant fluctuations and anomalies in datasets over time, often caused by changes in data collection methods, external events, or measurement errors. Understanding these deviations is crucial for accurately interpreting trends and making informed decisions, emphasizing the need for normalization techniques to standardize data. Normalization adjusts data ranges and scales, mitigating the impact of historical deviations and enabling consistent comparison across different time periods.

The Importance of Normalization in Data Analysis

Normalization standardizes data ranges, enabling accurate comparisons and improving algorithm performance in data analysis. It reduces bias caused by feature scale disparities, ensuring models such as k-nearest neighbors and neural networks interpret input features uniformly. This process enhances convergence speed and stability, making normalized datasets crucial for reliable statistical analysis and machine learning outcomes.

Core Differences Between Deviation and Normalization

Deviation measures the difference between observed and expected values, quantifying variability within a dataset, while normalization transforms data into a standard scale, enabling direct comparison across different units or magnitudes. Deviation focuses on assessing dispersion or anomalies in data points, whereas normalization adjusts the range or distribution of data to improve consistency. Core differences include their objectives: deviation detects variance and outliers, and normalization ensures uniformity and comparability for analytical processes.

Applications of Deviation in Real-World Scenarios

Deviation plays a crucial role in quality control processes by measuring variations from target values to identify defects or inconsistencies in manufacturing. In finance, deviation metrics such as standard deviation help assess risk by quantifying the volatility of asset returns, guiding investment decisions. Environmental monitoring leverages deviation to detect anomalies in climate data, enabling early identification of trends like unusual temperature changes or pollution spikes.

When Should You Normalize Data?

Normalization should be applied when data features have different scales, which can negatively impact the performance of machine learning algorithms such as gradient descent, k-nearest neighbors, and support vector machines. It is especially important when the model is sensitive to the magnitude of input values and requires features to contribute proportionally during training. Normalizing data helps achieve faster convergence and improved accuracy by rescaling features to a consistent range, typically between 0 and 1 or using z-score standardization.

Pros and Cons: Deviation vs. Normalization

Deviation measures the distance between data points and the mean, making it useful for identifying variability but sensitive to outliers which can distort results. Normalization scales data into a specific range, improving comparability and algorithm performance, yet it may suppress meaningful variance and distort data distribution. Choosing between deviation and normalization depends on the dataset characteristics and the specific requirements of the analysis or machine learning model.

Common Pitfalls and Misconceptions

Deviation and normalization often face misconceptions related to their intended use and interpretation; deviation measures the spread of data from the mean, while normalization rescales data to a common range, typically 0 to 1. A common pitfall is confusing the two processes, leading to inappropriate application in data preprocessing or statistical analysis, which can skew results or impair model performance. Misunderstanding their roles can undermine efforts in data standardization, model training, and comparison across datasets, emphasizing the importance of clear conceptual distinction.

Best Practices for Choosing Between Deviation and Normalization

Choosing between deviation and normalization depends on data distribution and analysis goals, with deviation best suited for identifying variability and outliers while normalization is optimal for scaling features to a uniform range for machine learning algorithms. Deviations highlight anomalies and measure spread in datasets with non-uniform distributions, whereas normalization ensures equal weight for features, improving model convergence and accuracy. Evaluating the context of the data, such as the presence of outliers or the need for feature scaling, is essential for selecting the appropriate method in data preprocessing workflows.

Deviation Infographic

libterm.com

libterm.com