A load balancer distributes incoming network traffic across multiple servers so that no single server is overwhelmed, which improves the reliability, availability, and responsiveness of applications. The rest of this article compares load balancers with API gateways and explains where each one fits in a scalable, resilient system.

Table of Comparison

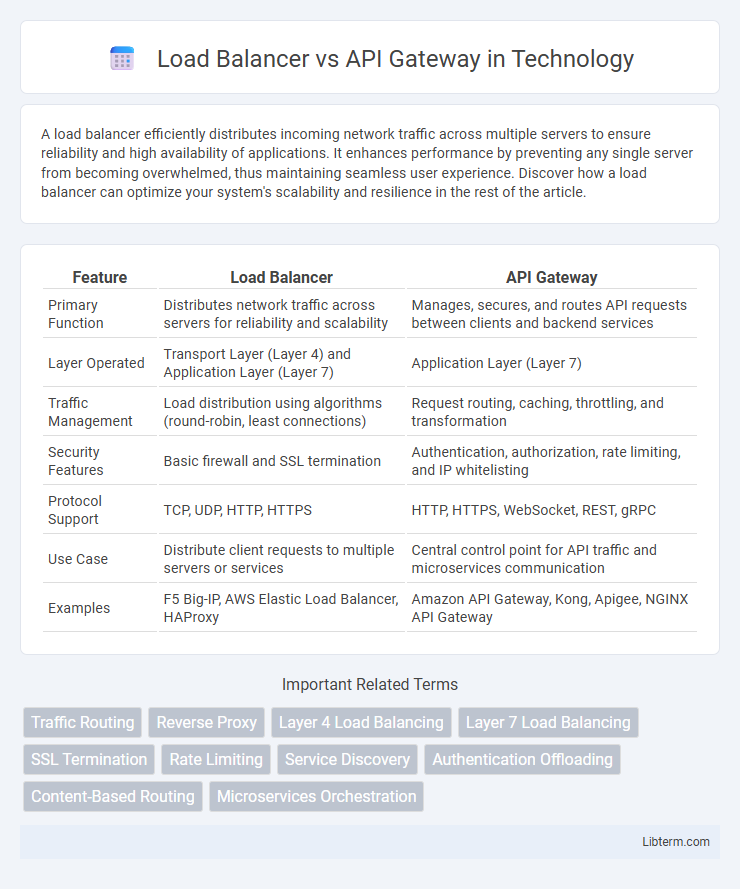

| Feature | Load Balancer | API Gateway |
|---|---|---|
| Primary Function | Distributes network traffic across servers for reliability and scalability | Manages, secures, and routes API requests between clients and backend services |
| Layer Operated | Transport Layer (Layer 4) and Application Layer (Layer 7) | Application Layer (Layer 7) |
| Traffic Management | Load distribution using algorithms (round-robin, least connections) | Request routing, caching, throttling, and transformation |
| Security Features | Basic firewall and SSL termination | Authentication, authorization, rate limiting, and IP whitelisting |
| Protocol Support | TCP, UDP, HTTP, HTTPS | HTTP, HTTPS, WebSocket, REST, gRPC |
| Use Case | Distribute client requests to multiple servers or services | Central control point for API traffic and microservices communication |
| Examples | F5 Big-IP, AWS Elastic Load Balancer, HAProxy | Amazon API Gateway, Kong, Apigee, NGINX API Gateway |

Introduction to Load Balancer vs API Gateway

Load balancers distribute incoming network traffic across multiple servers to ensure high availability and reliability of applications, optimizing resource utilization and minimizing response time. API gateways manage and secure API requests, providing capabilities such as authentication, rate limiting, and request routing, and centralizing access to microservices. Load balancers can operate at the transport layer (Layer 4) or the application layer (Layer 7), whereas API gateways operate only at the application layer, which gives them more granular control over API traffic.

Core Functions: Load Balancer Explained

Load balancers distribute incoming network traffic evenly across multiple servers to ensure high availability and reliability by preventing any single server from becoming overwhelmed. They operate at the transport layer (Layer 4) or the application layer (Layer 7) to manage connections and optimize resource utilization. Core functions include health checks, session persistence, and SSL termination to maintain seamless and secure user experiences.
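To make the distribution and health-check ideas concrete, here is a minimal sketch of round-robin selection that skips unhealthy servers. The class name, method names, and backend labels are hypothetical and chosen for illustration; a real load balancer would run health probes itself rather than rely on manual `mark_down` calls.

```python
from itertools import cycle


class RoundRobinBalancer:
    """Rotate through backends, skipping any that are marked unhealthy."""

    def __init__(self, backends):
        self.backends = list(backends)
        self.healthy = set(self.backends)
        self._ring = cycle(self.backends)

    def mark_down(self, backend):
        # Assumption: something external (e.g. a failed probe) reports failures.
        self.healthy.discard(backend)

    def mark_up(self, backend):
        if backend in self.backends:
            self.healthy.add(backend)

    def next_backend(self):
        # Inspect each backend at most once per call.
        for _ in range(len(self.backends)):
            candidate = next(self._ring)
            if candidate in self.healthy:
                return candidate
        raise RuntimeError("no healthy backends available")


lb = RoundRobinBalancer(["app-1", "app-2", "app-3"])
lb.mark_down("app-2")
picks = [lb.next_backend() for _ in range(4)]
# picks == ["app-1", "app-3", "app-1", "app-3"]
```

Traffic keeps flowing to the remaining servers while `app-2` is down, and the server rejoins the rotation once `mark_up` is called.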

Core Functions: API Gateway Explained

An API Gateway manages API requests by handling routing, rate limiting, authentication, and protocol translation, ensuring secure and efficient communication between clients and backend services. Unlike a Load Balancer, which primarily distributes network traffic across multiple servers to optimize resource use and availability, an API Gateway also provides request validation, API composition, and analytics. This makes API Gateways well suited to microservices architectures, where complex service interactions call for centralized control and consistent security enforcement.
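The authenticate-then-route flow described above can be sketched in a few lines. This is an illustrative model only: the API key, the route table, and the service names are hypothetical, and a real gateway would forward the request over the network instead of returning a service name.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical values for illustration only.
VALID_API_KEYS = {"demo-key"}
ROUTES = {
    "/orders": "orders-service",
    "/users": "users-service",
}


@dataclass
class Request:
    path: str
    api_key: Optional[str] = None


def handle(request: Request) -> tuple:
    """Return (status_code, detail) the way a gateway might decide it."""
    # 1. Authenticate before touching any backend.
    if request.api_key not in VALID_API_KEYS:
        return 401, "unauthorized"
    # 2. Route on the longest matching path prefix.
    for prefix in sorted(ROUTES, key=len, reverse=True):
        if request.path == prefix or request.path.startswith(prefix + "/"):
            return 200, ROUTES[prefix]
    return 404, "no route"
```

For example, `handle(Request("/orders/42", "demo-key"))` resolves to the hypothetical `orders-service`, while the same request without a key is rejected before any routing happens.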

Key Differences Between Load Balancers and API Gateways

Load balancers distribute network traffic evenly across multiple servers to ensure high availability and reliability, primarily working at the transport (Layer 4) or application layer (Layer 7) of the OSI model. API gateways provide a unified entry point for API requests, handling authentication, rate limiting, request routing, and transformations, often incorporating advanced features like API analytics and security policies. Unlike load balancers that focus on traffic distribution and fault tolerance, API gateways manage API lifecycle and enforce policies, making them essential in microservices architectures for comprehensive API management.
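Rate limiting is one of the policies that separates a gateway from a plain traffic distributor. One common way to implement it is a token bucket, sketched below; the article does not say which algorithm any particular gateway uses, so treat this as one possible approach. The `clock` parameter is an assumption added so the behavior can be demonstrated without waiting.

```python
import time


class TokenBucket:
    def __init__(self, rate, capacity, clock=time.monotonic):
        self.rate = rate            # tokens added per second
        self.capacity = capacity    # maximum burst size
        self.tokens = float(capacity)
        self.clock = clock
        self.last = clock()

    def allow(self):
        now = self.clock()
        # Refill in proportion to elapsed time, capped at capacity.
        elapsed = now - self.last
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False
```

A gateway would typically keep one bucket per client or per API key and answer with HTTP 429 when `allow()` returns `False`.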

Architectural Placement in Modern Applications

Load balancers operate at the transport layer (Layer 4) or the application layer (Layer 7) to distribute incoming traffic across multiple servers, ensuring high availability and scalability, while API gateways function at the application layer, managing and routing API requests, enforcing policies, and handling authentication. In modern microservices architectures, load balancers sit in front of a server cluster to spread network traffic across its instances, whereas API gateways serve as a centralized entry point for clients, orchestrating API calls and aggregating responses from multiple backend services. The two are often deployed together: the gateway decides which service a request belongs to, and a load balancer decides which instance of that service handles it. This placement lets load balancers optimize resource utilization and lets API gateways provide fine-grained control over API behavior and security.
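The sketch below models both roles in one place: a gateway function fans a single client call out to two services, and each service call picks an instance from a pool in round-robin order. All service and instance names are hypothetical, and the network call is replaced by a dictionary so the example stays self-contained.

```python
from itertools import cycle

# Hypothetical pools; each one stands in for a load-balanced cluster.
POOLS = {
    "users": cycle(["users-a", "users-b"]),
    "orders": cycle(["orders-a", "orders-b"]),
}


def call_service(service, user_id):
    # Load-balancing step: pick the next instance in the pool.
    instance = next(POOLS[service])
    # Stand-in for a network call; a real system would send a request here.
    return {"service": service, "instance": instance, "user_id": user_id}


def get_profile(user_id):
    # Gateway step: one client call fans out to several services
    # and the results are merged into a single response.
    return {
        "user": call_service("users", user_id),
        "orders": call_service("orders", user_id),
    }
```

Calling `get_profile` twice shows the division of labor: the response shape stays the same for the client, while the instance serving each part alternates within its pool.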

Performance Impacts and Scalability Considerations

Load balancers distribute network traffic evenly across multiple servers, enhancing performance by preventing any single server from becoming a bottleneck and enabling horizontal scalability. API gateways perform additional work on each request, such as routing, authentication, rate limiting, and protocol translation, which can introduce latency but provides the API management and security features that scalable microservices architectures need. For simple traffic distribution in high-throughput scenarios, a load balancer is usually the better fit; an API gateway supports more complex service orchestration at the cost of some added per-request overhead.

Security Features: Comparing Protections

Load balancers focus on traffic distribution and offer a narrower set of protections, typically SSL termination, basic firewall rules, and in some products DDoS protection and IP whitelisting. API gateways add API-aware controls such as OAuth, JWT authentication, authorization, rate limiting, and threat detection to safeguard APIs from unauthorized access and attacks. Because a gateway inspects each request at the application layer, it can validate request contents and enforce security policies per client or per endpoint.

Use Cases: When to Use Load Balancers

Load balancers are ideal for distributing incoming network traffic across multiple servers to ensure high availability and reliability of applications, especially in scenarios that require handling large volumes of HTTP, TCP, or UDP requests. They excel in use cases involving horizontal scaling of web servers, optimizing resource utilization, and preventing server overload by dynamically routing traffic based on factors like server health and load. Deploying load balancers is crucial for applications demanding fault tolerance and seamless failover without the need for complex API management or request transformation features typical of API gateways.
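Routing based on current load can be sketched with the least-connections algorithm named in the comparison table. The class below is a simplified model with hypothetical names; it tracks connection counts in memory and leaves out health checks and concurrency control.

```python
class LeastConnectionsBalancer:
    def __init__(self, backends):
        # Active connection count per backend.
        self.active = {backend: 0 for backend in backends}

    def acquire(self):
        # Pick the backend with the fewest in-flight connections;
        # ties go to the backend listed first.
        backend = min(self.active, key=self.active.get)
        self.active[backend] += 1
        return backend

    def release(self, backend):
        # Call when a connection closes so the count stays accurate.
        if self.active[backend] > 0:
            self.active[backend] -= 1
```

Compared with round-robin, this approach adapts when some requests hold connections much longer than others, because a busy server stops receiving new work until its count drops.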

Use Cases: When to Use API Gateways

API Gateways are essential in microservices architectures for managing client requests through routing, authentication, and rate limiting, functions that Load Balancers do not typically provide. They also enable request transformation, caching, and detailed monitoring, making them well suited to securing and controlling API traffic in complex applications. Use an API Gateway when you need centralized API management, stronger security controls, and fine-grained control over API requests in distributed systems.
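Response caching at the gateway can be modeled as a small time-to-live cache, as in the sketch below. The class and parameter names are hypothetical, the `fetch` callable stands in for a backend call, and the injectable `clock` is an assumption added to make expiry easy to demonstrate.

```python
import time


class TTLCache:
    def __init__(self, ttl_seconds, clock=time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock
        self._store = {}  # key -> (expires_at, value)

    def get_or_fetch(self, key, fetch):
        now = self.clock()
        entry = self._store.get(key)
        if entry is not None and entry[0] > now:
            return entry[1]      # cache hit: the backend is not called
        value = fetch()          # cache miss or expired entry
        self._store[key] = (now + self.ttl, value)
        return value
```

Repeated requests for the same key within the TTL are answered from memory, which reduces backend load at the cost of possibly serving slightly stale data.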

Choosing the Right Solution for Your Application

Load balancers distribute incoming network traffic across multiple servers to enhance availability and reliability, making them ideal for applications requiring high scalability and fault tolerance. API gateways provide advanced functionalities such as request routing, rate limiting, authentication, and API monitoring, which are essential for managing microservices and securing APIs. Selecting the right solution depends on whether the primary need is efficient traffic distribution or comprehensive API management within your application's architecture.

libterm.com