Micro-batching enhances data processing efficiency by grouping small tasks into batches, reducing overhead and improving throughput. This technique balances latency and resource utilization, making it ideal for real-time data streaming and machine learning workflows. Discover how micro-batching can optimize Your data pipelines in the following article.

Table of Comparison

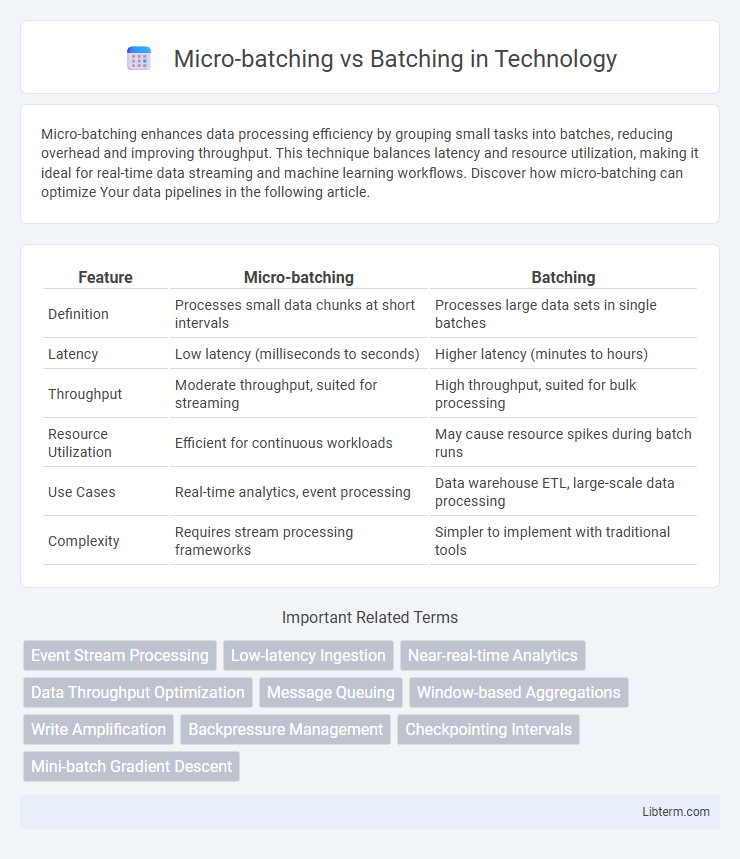

| Feature | Micro-batching | Batching |

|---|---|---|

| Definition | Processes small data chunks at short intervals | Processes large data sets in single batches |

| Latency | Low latency (milliseconds to seconds) | Higher latency (minutes to hours) |

| Throughput | Moderate throughput, suited for streaming | High throughput, suited for bulk processing |

| Resource Utilization | Efficient for continuous workloads | May cause resource spikes during batch runs |

| Use Cases | Real-time analytics, event processing | Data warehouse ETL, large-scale data processing |

| Complexity | Requires stream processing frameworks | Simpler to implement with traditional tools |

Introduction to Micro-batching and Batching

Batching processes data in large groups to improve throughput and reduce overhead, commonly used in data processing and machine learning workflows. Micro-batching breaks data into smaller, more manageable chunks, balancing latency and computation efficiency for near-real-time processing. This approach is essential for systems requiring timely responses while still benefiting from the advantages of batch processing.

Defining Micro-batching: Key Characteristics

Micro-batching processes data in small, discrete chunks, typically ranging from milliseconds to a few seconds, enabling near real-time analytics and reduced latency compared to traditional batching methods. This approach combines the efficiency of batch processing with the responsiveness of streaming, allowing systems to handle high-velocity data streams while maintaining fault tolerance and resource optimization. Key characteristics of micro-batching include minimal delay, incremental data handling, and improved throughput, making it ideal for applications requiring timely insights without sacrificing processing accuracy.

Understanding Traditional Batching

Traditional batching processes large volumes of data by grouping them into substantial, fixed-size batches for efficient handling and resource optimization in systems like ETL pipelines or database transactions. This method reduces overhead by minimizing the number of read/write operations but can introduce latency due to waiting for batch completion before processing. Understanding traditional batching highlights its suitability for stable workloads where throughput outweighs the need for real-time response.

Core Differences between Micro-batching and Batching

Micro-batching processes data streams in small, discrete chunks at fixed time intervals, optimizing latency and resource utilization for near real-time applications. Traditional batching aggregates large volumes of data before processing, emphasizing throughput and efficiency over immediacy. Core differences include latency sensitivity, with micro-batching enabling faster data insights, while batching prioritizes bulk processing for higher computational efficiency.

Performance Comparison: Micro-batching vs Batching

Micro-batching processes small batches of data at high frequency, reducing latency and enabling near real-time performance, whereas traditional batching handles large volumes of data less frequently, often resulting in higher throughput but increased delay. Micro-batching offers improved resource utilization and faster response times for streaming applications, while standard batching excels in scenarios where maximizing overall throughput is more critical than immediate processing speed. Performance comparison reveals micro-batching is optimal for use cases demanding low latency and agility, whereas batching suits bulk processing tasks prioritizing efficiency over speed.

Latency and Throughput Implications

Micro-batching processes smaller batches of data at high frequency, significantly reducing latency compared to traditional batching, which groups larger datasets to improve throughput but often increases processing delay. Batching maximizes resource utilization and throughput by processing bulk data together, suitable for workloads where latency is less critical. Micro-batching strikes a balance by enhancing real-time processing capabilities with moderate throughput, making it ideal for applications requiring low-latency insights and scalable data handling.

Use Cases Best Suited for Micro-batching

Micro-batching excels in use cases requiring near-real-time data processing with reduced latency, such as streaming analytics, fraud detection, and real-time recommendation systems. It balances throughput and latency by processing small batches of data at short intervals, making it ideal for scenarios where rapid response is critical but event-at-a-time processing is too costly. Industries like finance, e-commerce, and IoT often leverage micro-batching to handle continuous data streams efficiently while enabling timely decision-making.

When to Choose Traditional Batching

Traditional batching is ideal when processing large volumes of data where latency is less critical, allowing for efficient resource utilization and simplified system design. It suits workflows with predictable workloads and fixed processing windows, such as end-of-day reporting or monthly data aggregation. Choosing traditional batching can enhance throughput and reduce overhead in scenarios where real-time or near-real-time processing is unnecessary.

Challenges and Trade-offs in Each Approach

Micro-batching offers lower latency and finer-grained processing but introduces complexity in system design and higher overhead due to frequent context switching. Batching optimizes throughput and resource utilization by processing large data chunks but increases latency and risks data staleness in time-sensitive applications. Choosing between micro-batching and batching requires balancing trade-offs in latency, throughput, system complexity, and real-time data requirements.

Future Trends in Data Processing Strategies

Future trends in data processing emphasize micro-batching for enhanced real-time analytics, reducing latency compared to traditional batching methods. Advances in stream processing platforms like Apache Flink and Spark Structured Streaming optimize micro-batching to balance throughput and latency effectively. Emerging hybrid approaches combine micro-batching and batch processing to handle diverse workload requirements and improve scalability in distributed systems.

Micro-batching Infographic

libterm.com

libterm.com