On-premises AI gives companies full control over their data and infrastructure, which can strengthen security and simplify compliance with industry regulations. Because AI workloads run inside the local network, it also avoids the round trip to a remote data center, reducing latency for real-time processing. This article compares on-premises and cloud AI across security, performance, scalability, cost, and maintenance, and outlines when each approach fits.

Table of Comparison

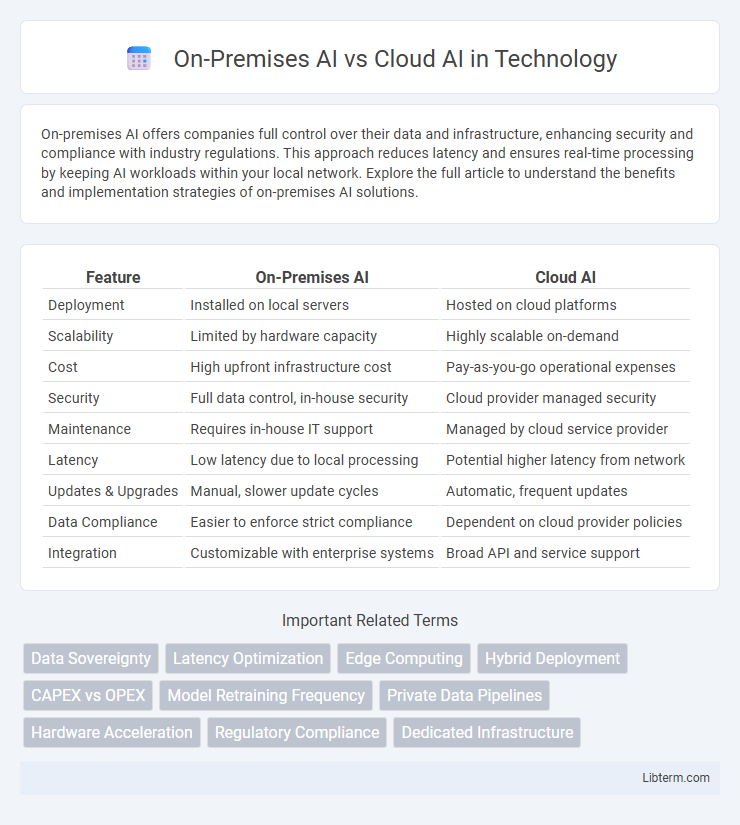

| Feature | On-Premises AI | Cloud AI |
|---|---|---|
| Deployment | Installed on local servers | Hosted on a provider's cloud platform |
| Scalability | Limited by installed hardware capacity | Elastic, on-demand scaling (within provider quotas) |
| Cost | High upfront infrastructure cost (capital expenditure) | Pay-as-you-go operating expense |
| Security | Full data control; security handled in-house | Shared responsibility: provider secures the infrastructure, customer secures data and access |
| Maintenance | Requires in-house IT support | Infrastructure managed by the cloud provider |
| Latency | Low latency from local processing | Added network latency, which can vary |
| Updates & Upgrades | Manual, slower update cycles | Automatic, frequent updates |
| Data Compliance | Easier to enforce strict compliance | Depends on provider certifications and data-residency options |
| Integration | Customizable with existing enterprise systems | Broad API and managed-service support |

Introduction to On-Premises AI and Cloud AI

On-premises AI means deploying artificial intelligence systems within a company's own infrastructure, giving the organization full control over its data and making it well suited to latency-sensitive applications. Cloud AI runs on servers operated by a third-party provider and accessed over the internet, offering scalable resources, rapid deployment, and lower upfront hardware costs. Understanding these differences helps organizations choose the option that best matches their data privacy requirements, computational needs, and budget.

Core Differences Between On-Premises and Cloud AI

On-premises AI keeps the AI infrastructure inside the organization's own environment, which provides full data control and low latency and can simplify compliance with strict data privacy regulations. Cloud AI provides scalable resources and easy integration with other cloud services, enabling rapid deployment without heavy upfront investment. The core differences come down to data sovereignty, scalability, who manages the infrastructure, and cost structure.

Security and Data Privacy Considerations

On-premises AI can strengthen security and data privacy by keeping sensitive information within the organization's own infrastructure, which reduces exposure to third parties; in return, the organization is fully responsible for securing that infrastructure. Cloud AI offers scalability and accessibility but relies on strong encryption in transit and at rest, robust identity and access management, and provider safeguards that support compliance with regulations such as GDPR or HIPAA. Organizations should weigh their data sensitivity, regulatory obligations, and desired level of control to find the right balance between security and operational flexibility.

Performance and Latency Comparisons

On-premises AI systems can deliver lower and more predictable latency because data is processed locally, avoiding the round trip to a remote data center. Cloud AI benefits from scalable and often more powerful computing resources, but end-to-end latency can vary with network congestion and distance from the data center. Enterprises that need real-time analytics and rapid decision-making therefore often prefer on-premises deployments for consistent response times.
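To make the latency trade-off concrete, the sketch below adds up a simple per-request latency budget (network round trip, queueing, and inference time) and checks it against a response-time target. All numbers are hypothetical placeholders, not measurements; the cloud case is deliberately given faster inference to show how the network round trip can still dominate.

```python
from dataclasses import dataclass


@dataclass
class LatencyBudget:
    """Per-request latency components in milliseconds (hypothetical values)."""

    network_rtt_ms: float  # round trip between client and inference server
    queue_ms: float  # time spent waiting for a free accelerator
    inference_ms: float  # model forward pass

    def total_ms(self) -> float:
        return self.network_rtt_ms + self.queue_ms + self.inference_ms


def meets_slo(budget: LatencyBudget, slo_ms: float) -> bool:
    """True if the end-to-end latency fits within the response-time target."""
    return budget.total_ms() <= slo_ms


# Hypothetical figures for illustration only, not benchmarks.
on_prem = LatencyBudget(network_rtt_ms=1.0, queue_ms=5.0, inference_ms=20.0)
cloud = LatencyBudget(network_rtt_ms=60.0, queue_ms=2.0, inference_ms=12.0)
SLO_MS = 50.0

for name, budget in [("on-prem", on_prem), ("cloud", cloud)]:
    print(f"{name}: {budget.total_ms():.1f} ms, meets {SLO_MS:.0f} ms target: {meets_slo(budget, SLO_MS)}")
```

With these assumed values, the on-premises path totals 26 ms and meets the 50 ms target, while the cloud path totals 74 ms and does not. Real budgets should use measured percentiles, since network variability affects tail latency most.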

Scalability and Flexibility Factors

On-premises AI scales only as far as its installed hardware, and expanding capacity typically requires significant investment and lead time. Cloud AI, by contrast, offers elastic scalability through on-demand resource allocation, limited mainly by provider quotas and budget. Cloud platforms also make it easier to deploy and update models across distributed environments, whereas on-premises deployments are constrained by infrastructure and maintenance requirements. Enterprises with fluctuating workloads or a need to scale quickly therefore often prefer cloud AI.
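The difference between fixed and elastic capacity can be sketched in a few lines of Python. The hourly demand figures, the 1,000-requests-per-hour on-premises capacity, and the 250-requests-per-hour per-instance figure below are all hypothetical, and the autoscaling rule is a simplified proportional policy rather than any specific provider's algorithm.

```python
import math


def unserved_requests(demand: list[int], fixed_capacity: int) -> list[int]:
    """Requests per period that exceed a fixed on-premises capacity."""
    return [max(0, d - fixed_capacity) for d in demand]


def instances_needed(demand: list[int], per_instance_capacity: int, min_instances: int = 1) -> list[int]:
    """Instances a simple proportional autoscaler would run per period to serve all demand."""
    return [max(min_instances, math.ceil(d / per_instance_capacity)) for d in demand]


# Hypothetical requests per hour over a six-hour window.
hourly_demand = [200, 350, 900, 1500, 800, 300]

print(unserved_requests(hourly_demand, fixed_capacity=1000))  # on-premises shortfall per hour
print(instances_needed(hourly_demand, per_instance_capacity=250))  # cloud instances per hour
```

Under these assumptions, the on-premises deployment cannot serve 500 requests during the peak hour, while the simplified autoscaler provisions between 1 and 6 instances as demand rises and falls.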

Cost Implications: Upfront and Ongoing

On-premises AI requires a significant upfront investment in hardware and facilities (capital expenditure), followed by ongoing costs for power, cooling, staff, and maintenance. Cloud AI uses a pay-as-you-go model with low initial costs, but usage-based charges for compute, data storage, and data transfer can become substantial for sustained workloads. Organizations should compare total cost of ownership over the expected lifetime of the system, weighing on-premises fixed costs against variable cloud fees.
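One simple way to compare the two cost structures is to find the month in which cumulative on-premises spending falls to or below cumulative cloud spending. The sketch below assumes constant monthly costs and ignores hardware refresh, depreciation, discounts, and financing; all dollar figures are hypothetical.

```python
from typing import Optional


def cumulative_cost(upfront: int, monthly: int, months: int) -> int:
    """Total spend after a given number of months."""
    return upfront + monthly * months


def break_even_month(onprem_upfront: int, onprem_monthly: int, cloud_monthly: int,
                     horizon_months: int = 60) -> Optional[int]:
    """First month in which cumulative on-premises cost is at or below cumulative cloud cost.

    Returns None if that does not happen within the horizon.
    """
    for month in range(1, horizon_months + 1):
        onprem = cumulative_cost(onprem_upfront, onprem_monthly, month)
        cloud = cumulative_cost(0, cloud_monthly, month)
        if onprem <= cloud:
            return month
    return None


# Hypothetical inputs: servers and facilities up front, then power, staff, and maintenance.
ONPREM_UPFRONT = 240_000
ONPREM_MONTHLY = 6_000
# Hypothetical cloud bill: 720 GPU-hours per month at $25 per GPU-hour.
CLOUD_MONTHLY = 720 * 25

print(break_even_month(ONPREM_UPFRONT, ONPREM_MONTHLY, CLOUD_MONTHLY))
```

With these assumptions, 240,000 + 6,000m ≤ 18,000m first holds at m = 20, so the on-premises option breaks even after 20 months; if the monthly cloud bill stays below on-premises running costs, it never does.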

Control, Customization, and Compliance

On-premises AI gives organizations greater control over data and infrastructure, letting them tailor hardware, software, and models to specific business needs and making it easier to demonstrate compliance with industry regulations. Cloud AI provides scalable resources and faster deployment, but its multi-tenant environments can complicate data security and compliance management. Enterprises that prioritize data sovereignty and tailored solutions typically choose on-premises deployments, while those seeking agility and lower upfront cost lean toward cloud AI services.

Maintenance, Updates, and Technical Support

On-premises AI requires in-house teams to maintain hardware, apply software updates, and troubleshoot problems, which adds staffing costs and can require planned downtime for upgrades. Cloud AI platforms typically provide managed updates, continuous monitoring, and vendor technical support, reducing disruption and the operational burden on internal teams. Enterprises choosing cloud AI therefore trade the resource-intensive upkeep of on-premises systems for dependence on the provider's maintenance and support.

Use Cases: When to Choose On-Premises or Cloud AI

On-premises AI suits organizations that need stringent data security, low-latency processing, and complete control over infrastructure, as is common in healthcare, finance, and government. Cloud AI suits businesses that need scalable resources, rapid deployment, and low upfront cost, for applications such as customer service chatbots, predictive analytics, and large-scale data processing. The right choice depends on the specific use case, regulatory requirements, budget, and performance needs.

Future Trends in AI Deployment Models

AI deployment is trending toward hybrid models that combine on-premises and cloud AI, keeping sensitive data local while drawing on cloud capacity for scale. Advances in edge computing extend the local-processing model by running inference close to where data is generated, reducing latency for industries such as healthcare and manufacturing. Cloud AI platforms are also likely to keep improving their orchestration and model management tools, making it easier to integrate local infrastructure with cloud resources.
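In practice, a hybrid deployment often comes down to a per-request routing policy. The sketch below is a hypothetical policy, not a standard or any product's behavior: regulated data always stays on-premises, requests whose latency budget is shorter than an assumed 60 ms cloud round trip stay local, and other requests burst to the cloud only when local capacity is saturated.

```python
from dataclasses import dataclass
from enum import Enum


class Target(Enum):
    ON_PREM = "on-prem"
    CLOUD = "cloud"


@dataclass
class Request:
    contains_regulated_data: bool  # hypothetical flag, e.g. set for patient or payment records
    latency_budget_ms: float  # end-to-end response-time target for this request


def route(req: Request, on_prem_busy: bool, cloud_rtt_ms: float = 60.0) -> Target:
    """Hypothetical hybrid routing policy; cloud_rtt_ms is an assumed round-trip time."""
    # Regulated data always stays in the local environment.
    if req.contains_regulated_data:
        return Target.ON_PREM
    # If the network round trip alone would exceed the budget, stay local.
    if req.latency_budget_ms < cloud_rtt_ms:
        return Target.ON_PREM
    # Otherwise burst to the cloud only when local capacity is saturated.
    return Target.CLOUD if on_prem_busy else Target.ON_PREM


print(route(Request(contains_regulated_data=False, latency_budget_ms=500.0), on_prem_busy=True))
```

Keeping regulated requests local even when on-premises capacity is busy means they queue rather than leave the network; a production policy would also need to watch queue depth and cloud spend.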

On-Premises AI Infographic

libterm.com
