Concept drift occurs when the statistical properties of target variables change over time, affecting the performance of machine learning models. Detecting and adapting to concept drift ensures your models remain accurate and reliable in dynamic environments. Explore the rest of the article to discover effective strategies for managing concept drift in your AI systems.

Table of Comparison

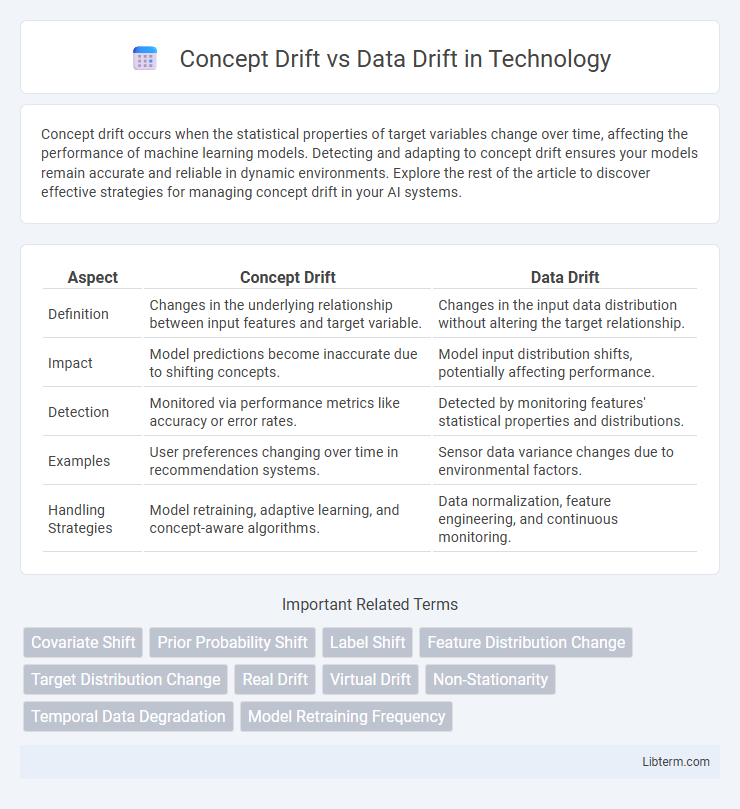

| Aspect | Concept Drift | Data Drift |

|---|---|---|

| Definition | Changes in the underlying relationship between input features and target variable. | Changes in the input data distribution without altering the target relationship. |

| Impact | Model predictions become inaccurate due to shifting concepts. | Model input distribution shifts, potentially affecting performance. |

| Detection | Monitored via performance metrics like accuracy or error rates. | Detected by monitoring features' statistical properties and distributions. |

| Examples | User preferences changing over time in recommendation systems. | Sensor data variance changes due to environmental factors. |

| Handling Strategies | Model retraining, adaptive learning, and concept-aware algorithms. | Data normalization, feature engineering, and continuous monitoring. |

Understanding Concept Drift and Data Drift

Concept Drift refers to changes in the underlying relationship between input data and target variables, typically impacting model accuracy over time. Data Drift involves shifts in the input data distribution without necessarily affecting the target variable associations, signaling potential input feature changes. Detecting and differentiating Concept Drift and Data Drift is crucial for maintaining robust machine learning model performance in dynamic environments.

Key Differences Between Concept Drift and Data Drift

Concept Drift refers to changes in the underlying relationship between input features and the target variable, whereas Data Drift involves shifts in the distribution of input features over time. Concept Drift directly impacts model accuracy by altering the predictive patterns, while Data Drift affects input data characteristics that may or may not influence model performance. Detecting Concept Drift typically requires monitoring model predictions and error rates, whereas Data Drift detection focuses on statistical changes in feature distributions.

Causes of Concept Drift in Machine Learning

Concept drift in machine learning occurs when the statistical properties of the target variable change over time, causing models trained on historical data to become less accurate. Key causes include evolving user behavior, changes in external environment or market conditions, and shifts in the underlying data distribution that impact the relationship between input features and target labels. Unlike data drift, which involves changes in input feature distribution, concept drift specifically refers to changes in the target concept or output relationship, necessitating continuous model monitoring and adaptation.

Causes of Data Drift in Machine Learning

Data drift in machine learning occurs when the statistical properties of input data change over time, altering the distribution and leading to decreased model accuracy. Common causes include changes in user behavior, seasonal trends, sensor degradation, and evolving external environments. Continuous monitoring and adaptive retraining are essential to address these shifts and maintain model performance.

Detecting Concept Drift: Methods and Tools

Detecting concept drift involves monitoring changes in the underlying relationships between input features and target variables, using methods like statistical hypothesis tests, drift detection algorithms such as DDM (Drift Detection Method) and ADWIN (Adaptive Windowing), or machine learning techniques based on error rate analysis and model retraining triggers. Tools like River, Alibi Detect, and NannyML provide robust frameworks for real-time drift detection by leveraging these algorithms to identify shifts in predictive model performance and data distributions. Effective concept drift detection ensures timely adaptation of models to evolving data patterns, maintaining accuracy and reliability in dynamic environments.

Detecting Data Drift: Techniques and Strategies

Detecting data drift involves monitoring changes in the input data distribution using statistical tests like the Kolmogorov-Smirnov test, Chi-square test, and Population Stability Index (PSI). Advanced techniques incorporate unsupervised learning algorithms such as clustering and dimensionality reduction to identify subtle shifts in feature space. Implementing real-time drift detection tools and continuous monitoring pipelines enhances the ability to promptly respond to evolving data environments and maintain model reliability.

Impact of Concept Drift on Model Performance

Concept drift causes the underlying relationships between input features and target variables to change over time, leading to decreased model accuracy and unreliable predictions. Unlike data drift, which involves changes in input data distribution, concept drift directly affects the predictive function learned by the model, necessitating frequent retraining or adaptation. Ignoring concept drift results in model degradation, increased error rates, and compromised decision-making in dynamic environments.

Impact of Data Drift on Model Accuracy

Data drift refers to changes in the input data distribution over time, which directly impacts model accuracy by causing the model to make incorrect predictions based on outdated patterns. Unlike concept drift, which involves changes in the relationship between input features and the target variable, data drift primarily affects the feature distribution, leading to reduced model performance if not detected and addressed. Continuous monitoring of data drift through statistical tests and adaptation techniques is essential to maintain robust predictive accuracy in machine learning models.

Best Practices for Managing Concept and Data Drift

Effective management of concept drift and data drift requires continuous monitoring of model performance and data distributions using statistical tests such as Population Stability Index (PSI) and Kolmogorov-Smirnov (KS) test. Implementing adaptive models with online learning or periodic retraining ensures responsiveness to evolving patterns in data and target relationships. Maintaining a robust data pipeline with automated alerts and version control supports early detection and swift correction of drift impacts, preserving model accuracy and reliability.

Future Trends in Drift Detection and Adaptation

Future trends in drift detection and adaptation emphasize the integration of real-time monitoring systems leveraging advanced machine learning models that dynamically adjust to evolving data patterns. The use of adaptive algorithms incorporating deep learning and reinforcement learning enables proactive identification and correction of both concept drift, affecting model accuracy, and data drift, impacting input distribution. Emerging approaches also prioritize explainability and interpretability to enhance trust in automated drift management across diverse applications such as finance, healthcare, and autonomous systems.

Concept Drift Infographic

libterm.com

libterm.com