Model drift occurs when the performance of a predictive model degrades over time due to changes in data patterns or external factors. Detecting and addressing model drift is essential to maintain accuracy and reliability in machine learning applications. Explore the rest of this article to learn how you can identify and mitigate model drift effectively.

Table of Comparison

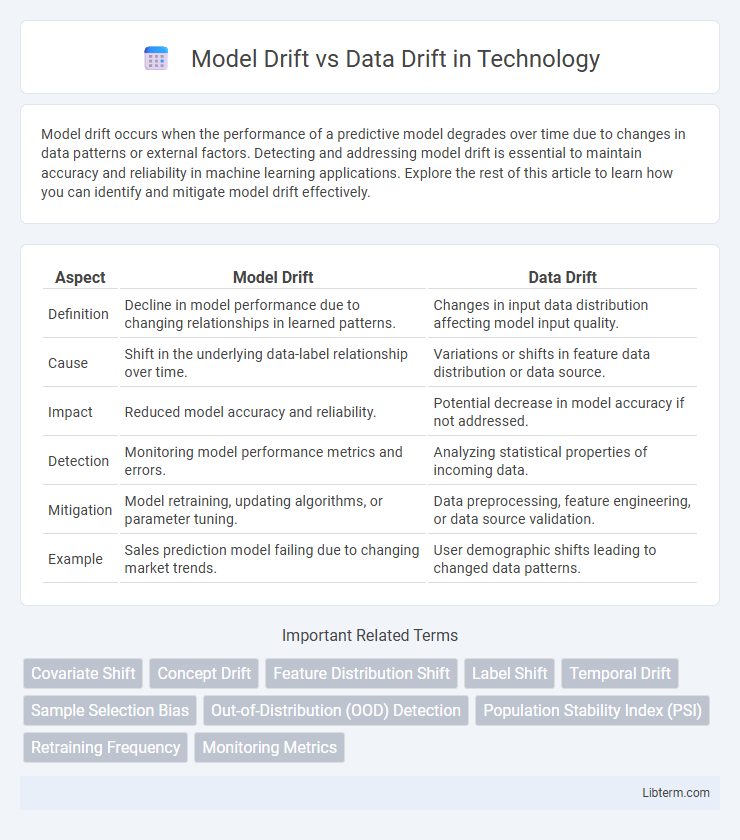

| Aspect | Model Drift | Data Drift |

|---|---|---|

| Definition | Decline in model performance due to changing relationships in learned patterns. | Changes in input data distribution affecting model input quality. |

| Cause | Shift in the underlying data-label relationship over time. | Variations or shifts in feature data distribution or data source. |

| Impact | Reduced model accuracy and reliability. | Potential decrease in model accuracy if not addressed. |

| Detection | Monitoring model performance metrics and errors. | Analyzing statistical properties of incoming data. |

| Mitigation | Model retraining, updating algorithms, or parameter tuning. | Data preprocessing, feature engineering, or data source validation. |

| Example | Sales prediction model failing due to changing market trends. | User demographic shifts leading to changed data patterns. |

Understanding Model Drift: Definition and Causes

Model drift refers to the gradual degradation of a machine learning model's performance over time due to changes in the relationship between input features and target variables. This phenomenon occurs when the statistical properties that the model learned during training no longer hold true in the production environment, often caused by evolving data patterns, seasonal trends, or external factors. Identifying model drift involves monitoring prediction accuracy, error rates, and feature importance shifts to ensure the model remains reliable and relevant.

What is Data Drift? Key Concepts Explained

Data drift refers to changes in the input data's statistical properties over time, which can affect the performance of machine learning models. It involves shifts in feature distributions, such as mean, variance, or correlation structures, that differ from the training data, leading to degraded prediction accuracy. Monitoring data drift is crucial for maintaining model reliability and ensuring timely retraining or recalibration.

Model Drift vs Data Drift: Core Differences

Model drift refers to the degradation in a machine learning model's performance over time due to changes in the underlying relationships between input features and target variables. Data drift, or covariate shift, involves shifts in the statistical properties of input data distributions without necessarily impacting the predictive relationship. The core difference lies in model drift affecting the model's accuracy and decision boundaries, while data drift primarily concerns changes in input data patterns that may lead to model drift if unaddressed.

Common Signs of Model Drift in Machine Learning

Common signs of model drift in machine learning include a sudden decline in prediction accuracy, increased error rates, and inconsistent performance on new data compared to the training set. Detection often involves monitoring key performance indicators such as precision, recall, and F1 score over time to identify deviations from expected behavior. Changes in feature importance or shifts in input data distribution without corresponding model adjustments also indicate underlying model drift.

Indicators of Data Drift in Production Systems

Indicators of data drift in production systems include significant shifts in feature distributions, where statistical measures like mean, variance, or correlation deviate from the training data patterns. Monitoring performance metrics such as prediction accuracy, precision, or recall can also reveal data drift when they degrade without changes in the model architecture. Changes in data quality, missing values, or unusual input patterns serve as practical signals of data drift affecting model reliability.

Real-World Examples of Model and Data Drift

Model drift occurs when a predictive model's performance degrades over time due to changes in the underlying relationships within the data, such as a credit scoring model becoming less accurate as borrower behavior evolves post-pandemic. Data drift involves shifts in the input data distribution without changes in the target relationship, exemplified by seasonal changes in customer purchase patterns affecting retail demand forecasts. Real-world cases include fraud detection systems that face model drift as fraud tactics adapt, and sensor data in manufacturing where data drift emerges from changes in equipment behavior or environmental conditions.

Impact of Drift on Model Performance and Accuracy

Model drift and data drift both degrade model performance and accuracy by introducing discrepancies between training and production environments. Model drift occurs when the relationship between input features and target variables changes, leading to incorrect predictions despite stable input data distribution. Data drift, involving shifts in input feature distributions, can cause models to misinterpret new data patterns, reducing prediction accuracy and increasing error rates over time.

Techniques to Detect Model and Data Drift

Techniques to detect model drift include monitoring performance metrics such as accuracy, precision, recall, and F1 score over time to identify significant drops indicating model degradation. Data drift detection methods rely on statistical tests like the Kolmogorov-Smirnov test, Population Stability Index (PSI), and Kullback-Leibler divergence to compare current input data distributions against historical data. Leveraging tools such as Explainable AI (XAI) models and feature attribution methods can also help identify changes in feature importance that signal model or data drift.

Strategies for Managing and Mitigating Drift

Effective strategies for managing model drift include continuous monitoring of model performance metrics and implementing adaptive algorithms that retrain on new data to maintain accuracy. Addressing data drift involves real-time detection techniques, such as statistical tests and feature distribution analysis, to identify changes in input data patterns promptly. Employing robust data pipelines with automated alerts and feedback loops ensures timely intervention, reducing the risk of degraded model outcomes caused by evolving data environments.

Best Practices to Future-Proof Machine Learning Models

Model drift occurs when the relationship between input features and the target variable changes, whereas data drift refers to shifts in the input data distribution over time. To future-proof machine learning models, continuous monitoring of both model performance metrics and input feature distributions is essential, alongside implementing automated retraining pipelines triggered by detected drifts. Employing robust feature engineering, adaptive algorithms, and version control for data and models further enhances resilience against evolving data environments.

Model Drift Infographic

libterm.com

libterm.com