Data aggregation consolidates information from multiple sources to create a comprehensive dataset, enhancing analysis accuracy and decision-making. This process optimizes big data handling, enabling businesses to uncover trends, improve customer insights, and streamline operations. Discover how effective data aggregation can transform Your data strategy by reading the full article.

Table of Comparison

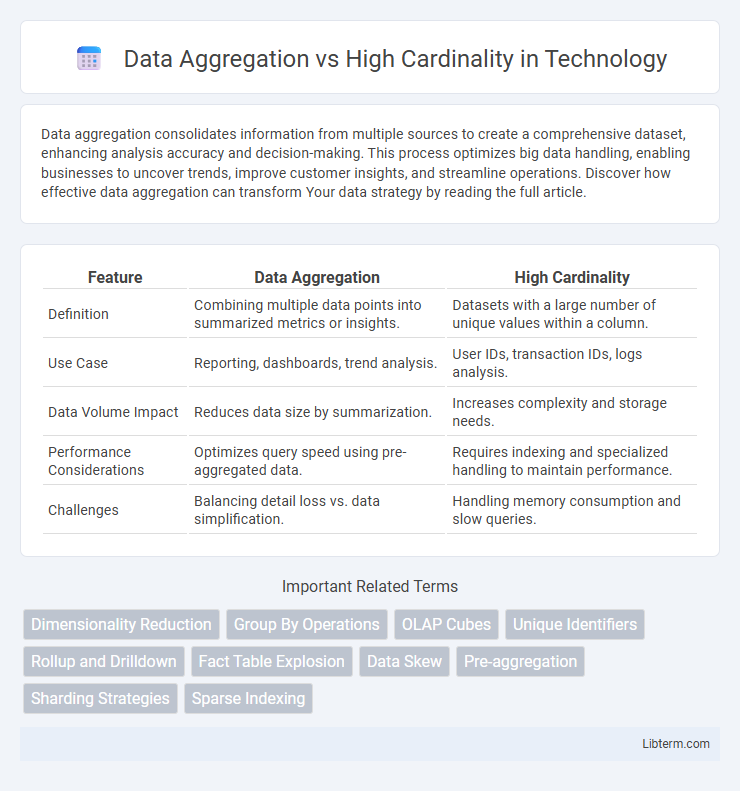

| Feature | Data Aggregation | High Cardinality |

|---|---|---|

| Definition | Combining multiple data points into summarized metrics or insights. | Datasets with a large number of unique values within a column. |

| Use Case | Reporting, dashboards, trend analysis. | User IDs, transaction IDs, logs analysis. |

| Data Volume Impact | Reduces data size by summarization. | Increases complexity and storage needs. |

| Performance Considerations | Optimizes query speed using pre-aggregated data. | Requires indexing and specialized handling to maintain performance. |

| Challenges | Balancing detail loss vs. data simplification. | Handling memory consumption and slow queries. |

Understanding Data Aggregation

Data aggregation involves collecting and summarizing detailed data into a more manageable form, facilitating efficient analysis and reporting. It reduces data complexity by grouping values based on common attributes, which is essential when handling large datasets with high cardinality, where unique values are numerous and detailed queries can become resource-intensive. Understanding data aggregation enables better decision-making by transforming raw data into meaningful insights without overwhelming analytical systems.

What Is High Cardinality?

High cardinality refers to a dataset attribute containing a vast number of unique values, such as user IDs, email addresses, or transaction numbers. Managing high cardinality is crucial in data aggregation processes because it affects storage efficiency and query performance, often requiring specialized indexing or summarization techniques. Understanding high cardinality enables better data modeling and optimization in databases, ensuring faster analytics and more insightful reporting.

Key Differences: Data Aggregation vs High Cardinality

Data aggregation involves summarizing large datasets into concise metrics, reducing granularity for easier analysis and reporting, while high cardinality refers to a dataset characteristic with a vast number of unique values in a column, often complicating data processing. High cardinality impacts database performance and indexing, requiring specialized handling techniques, whereas data aggregation improves query efficiency by decreasing dataset size. Understanding these key differences is essential for optimizing data storage, query execution, and analytics workflows in big data environments.

Benefits of Data Aggregation in Analytics

Data aggregation streamlines analytics by consolidating vast datasets into summarized, manageable insights, enhancing computational efficiency and reducing storage requirements. It improves data clarity, enabling quicker trend identification and informed decision-making across business intelligence platforms. By minimizing noise from high cardinality data, aggregation optimizes query performance and supports scalable analytics infrastructure.

Challenges Posed by High Cardinality Data

High cardinality data presents significant challenges in data aggregation due to the sheer volume of unique values, leading to increased memory consumption and slower query performance. Managing and analyzing datasets with millions of distinct identifiers can overwhelm aggregation algorithms, causing inefficient indexing and complex query optimization. Effective handling requires specialized techniques like dimensionality reduction or advanced hashing methods to maintain performance and accuracy.

Impact on Data Storage and Performance

Data aggregation reduces high cardinality by summarizing detailed records into fewer, meaningful groups, which significantly decreases data storage requirements and improves query performance. High cardinality datasets with numerous unique values increase storage demands and slow down indexing and retrieval processes, negatively affecting system efficiency. Optimizing data aggregation strategies can balance storage utilization and enhance performance in large-scale databases dealing with complex, high-cardinality data.

Use Cases: When to Aggregate, When to Preserve Cardinality

Data aggregation is ideal for use cases requiring summary statistics or trend analysis across large datasets, such as monthly sales reports or user engagement metrics, where reducing data volume enhances performance and clarity. High cardinality preservation is crucial when detailed, individual-level insights or granular segmentation are needed, for example, in fraud detection, personalized marketing, or identity resolution scenarios. Choosing between aggregation and preserving high cardinality depends on the balance between analytical objectives demanding broad trends versus those requiring fine-grained, unique record distinctions.

Best Practices for Handling High Cardinality

Handling high cardinality requires efficient data aggregation techniques that minimize memory usage and improve query performance. Using methods such as bucketing, hashing, or encoding categorical variables can effectively reduce the dimensionality and optimize storage. Employing approximate algorithms like HyperLogLog further enhances scalability when aggregating large datasets with numerous unique values.

Tools and Technologies Supporting Aggregation and Cardinality

Data aggregation and high cardinality challenges are addressed by specialized tools like Apache Spark, Druid, and ClickHouse, which offer efficient data summarization and real-time analytics capabilities. Technologies such as Elasticsearch and Apache Pinot excel in handling high cardinality by optimizing index structures and enabling fast queries on large, unique datasets. Cloud platforms like AWS Redshift and Google BigQuery provide scalable solutions combining data aggregation functions and high cardinality support with advanced SQL engines and distributed architectures.

Future Trends in Data Aggregation and High Cardinality Management

Future trends in data aggregation emphasize advanced machine learning algorithms that optimize processing of high cardinality datasets, enabling more accurate real-time analytics. Scalable cloud-based platforms and edge computing architectures are designed to efficiently handle vast, complex data sources with numerous unique identifiers, reducing latency and enhancing data integration. Enhanced semantic models and automated feature engineering tools are revolutionizing high cardinality management by improving data quality and enabling richer predictive insights.

Data Aggregation Infographic

libterm.com

libterm.com