Bandwidth is the maximum amount of data a network connection can carry in a given time, and it strongly shapes how fast your internet connection feels. Higher bandwidth supports smoother video streaming and faster downloads. Online gaming also benefits, although it depends even more on latency. The rest of this article explains how bandwidth and latency differ and how to optimize each for the best digital experience.

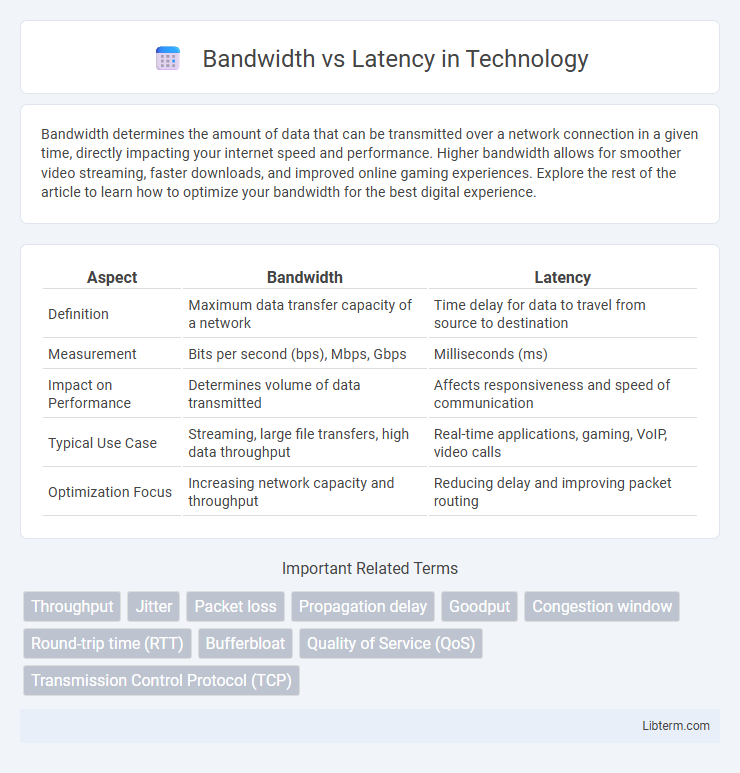

Table of Comparison

| Aspect | Bandwidth | Latency |
|---|---|---|
| Definition | Maximum data transfer capacity of a network | Time delay for data to travel from source to destination |
| Measurement | Bits per second (bps), Mbps, Gbps | Milliseconds (ms) |
| Impact on Performance | Determines volume of data transmitted | Affects responsiveness and speed of communication |
| Typical Use Case | Streaming, large file transfers, high data throughput | Real-time applications, gaming, VoIP, video calls |
| Optimization Focus | Increasing network capacity and throughput | Reducing delay and improving packet routing |

Understanding Bandwidth: Definition and Importance

Bandwidth is the maximum data transfer rate of a network connection. It is measured in bits per second (bps) and determines how much information can be transmitted in a given time. High bandwidth matters most for data-intensive activities such as video streaming and large file downloads, including game downloads and updates, because it keeps these transfers smooth and fast. Knowing a connection's capacity helps in planning network infrastructure and managing user demand.
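Bandwidth is quoted in bits per second, but file sizes are usually given in bytes, so a quick estimate of the best-case transfer time needs a factor of 8. The sketch below assumes decimal units (1 MB = 10^6 bytes, 1 Mbps = 10^6 bits per second) and ignores protocol overhead, latency, and congestion, so real transfers will take somewhat longer. `transfer_seconds` is a hypothetical helper name used for illustration.

```python
def transfer_seconds(size_bytes: float, bandwidth_bps: float) -> float:
    """Best-case time to move size_bytes over a link of bandwidth_bps.

    Assumes the full link rate is available and ignores protocol
    overhead, latency, and congestion.
    """
    if bandwidth_bps <= 0:
        raise ValueError("bandwidth must be positive")
    bits = size_bytes * 8  # 1 byte = 8 bits
    return bits / bandwidth_bps


if __name__ == "__main__":
    MB = 10**6    # decimal megabyte (bytes)
    Mbps = 10**6  # megabit per second (bits/s)
    # A 100 MB file on a 100 Mbps link: 800 Mbit / 100 Mbps = 8 seconds.
    print(transfer_seconds(100 * MB, 100 * Mbps))
```

The same file on a 1 Gbps link would take a tenth as long, which is why bandwidth dominates for bulk downloads.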

What is Latency? Explaining the Basics

Latency is the time it takes data to travel across a network, typically measured in milliseconds (ms). It is often reported as round-trip time (RTT), which is the delay between sending a data packet and receiving a response. Lower latency makes a network more responsive, which is crucial for real-time applications such as gaming and video conferencing. Bandwidth measures how much data can be transferred per second. Latency measures something different: how long each piece of data takes to arrive.
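Part of every latency figure is set by physics. Signals in optical fiber travel at roughly two-thirds of the speed of light in a vacuum, about 200,000 km/s. The sketch below turns a fiber path length into a lower bound on round-trip time. The 200,000 km/s figure is an approximation. The distance is the cable path, which is usually longer than the straight-line distance. Real RTTs also include queuing, processing, and routing detours on top of this floor.

```python
# Approximate signal speed in optical fiber (about 2/3 of c), in km/s.
FIBER_KM_PER_S = 200_000


def min_rtt_ms(path_km: float) -> float:
    """Lower bound on round-trip time over a fiber path of path_km.

    Counts propagation only (there and back); queuing, processing,
    and serialization delays are ignored.
    """
    one_way_s = path_km / FIBER_KM_PER_S
    return 2 * one_way_s * 1000  # round trip, converted to milliseconds


if __name__ == "__main__":
    # A 6,000 km fiber path cannot have an RTT below about 60 ms.
    print(f"{min_rtt_ms(6000):.1f} ms")
```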

Bandwidth vs Latency: Key Differences

Bandwidth is the maximum amount of data a network connection can transmit in a given time, typically measured in Mbps or Gbps. Latency is the delay for data to travel from sender to receiver, measured in milliseconds. High bandwidth moves large amounts of data quickly. Low latency shortens response times, which matters most for real-time communication. The right balance between them depends on what each application needs, so understanding the difference is the first step in optimizing network performance.
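A simple first-order model makes the difference concrete. The time to deliver a payload is roughly the one-way latency plus the payload size divided by the bandwidth. The sketch below applies that model to a hypothetical link (50 ms latency, 100 Mbps). It ignores handshakes, TCP slow start, and congestion, so treat the numbers as illustrative.

```python
def delivery_seconds(size_bytes: float, latency_s: float, bandwidth_bps: float) -> float:
    """First-order delivery time: fixed delay plus serialization time."""
    return latency_s + (size_bytes * 8) / bandwidth_bps


if __name__ == "__main__":
    latency = 0.050    # 50 ms one-way latency (hypothetical)
    bandwidth = 100e6  # 100 Mbps (hypothetical)
    for label, size in [("1 KB message", 1_000), ("1 GB file", 1_000_000_000)]:
        total = delivery_seconds(size, latency, bandwidth)
        share = latency / total  # fraction of total time spent on latency
        print(f"{label}: {total:.3f} s, latency share {share:.1%}")
```

For the small message, latency accounts for almost all of the time, so extra bandwidth barely helps. For the large file, the size/bandwidth term dominates and latency is negligible.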

How Bandwidth Affects Network Performance

Bandwidth sets the maximum amount of data a connection can carry in a given time, and therefore how much traffic the network can handle at once. Higher bandwidth allows faster data transfer and reduces congestion, which improves activities such as streaming and large file downloads. Bandwidth does not shorten the propagation delay imposed by distance, so it cannot fix latency on its own. However, extra capacity lets a network support more simultaneous connections and keeps performance steady under heavy load.
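One way to see how extra bandwidth lets a network carry more simultaneous traffic is to count how many constant-rate streams fit on a link. The sketch below is hypothetical. It assumes every stream needs the same steady bitrate, and it reserves part of the link as headroom for bursts and other traffic (80% usable by default, an assumed planning margin rather than a standard value).

```python
import math


def max_streams(link_bps: float, stream_bps: float, usable_fraction: float = 0.8) -> int:
    """How many constant-bitrate streams fit on a link, leaving headroom.

    usable_fraction is an assumed planning margin, not a standard value.
    """
    if stream_bps <= 0:
        raise ValueError("stream bitrate must be positive")
    return math.floor(link_bps * usable_fraction / stream_bps)


if __name__ == "__main__":
    stream = 5e6  # hypothetical 5 Mbps video stream
    for link in (50e6, 100e6, 1e9):
        print(f"{link / 1e6:.0f} Mbps link: {max_streams(link, stream)} streams")
```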

The Impact of Latency on User Experience

Latency strongly affects user experience because it delays the system's response to every user action. This causes noticeable lag in applications such as live video, online gaming, and real-time communication. Bandwidth determines how much data can be transmitted. Low latency is what makes interaction feel immediate, which is essential for smooth performance and user satisfaction. High latency shows up as delayed feedback, awkward pauses in calls, and sluggish game controls, which severely degrade the overall quality of digital services.
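Interactive delays add up because many operations need several sequential round trips before the user sees anything. As a rough sketch, assume a new HTTPS request over TCP with TLS 1.3 needs three round trips: one for the TCP handshake, one for the TLS handshake, and one for the request and response. DNS lookup, server processing, and transfer time are ignored. The resulting wait scales directly with RTT, no matter how much bandwidth is available.

```python
# Sequential round trips before the first response byte arrives on a
# fresh connection (simplified: no DNS lookup, no session resumption).
ROUND_TRIPS = {
    "TCP handshake": 1,
    "TLS 1.3 handshake": 1,
    "HTTP request/response": 1,
}


def time_to_first_byte_ms(rtt_ms: float) -> float:
    """Lower bound on time to first byte from the round-trip count alone."""
    return sum(ROUND_TRIPS.values()) * rtt_ms


if __name__ == "__main__":
    for rtt in (10, 50, 150):
        print(f"RTT {rtt:>3} ms -> at least {time_to_first_byte_ms(rtt):.0f} ms")
```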

Common Causes of High Latency

Common causes of high latency include physical distance between devices, inefficient routing, and network congestion. Each of these increases the time data packets take to reach their destination. Packet loss adds delay because lost packets must be retransmitted. Hardware limitations, such as outdated routers or network interface cards, can also cause latency spikes. Understanding these factors is essential for optimizing network performance alongside bandwidth.
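The causes above map onto the standard components of one-way delay:

- **Propagation**: set by distance.
- **Transmission**: packet size divided by link rate.
- **Queuing**: data waiting ahead of the packet, which grows with congestion.
- **Processing**: time spent at each router.

The sketch below adds these components up for a single path. The hop count, per-hop processing time, and queue size are hypothetical inputs. It also simplifies by treating the whole path as one link of a single rate.

```python
FIBER_KM_PER_S = 200_000  # approximate signal speed in fiber (km/s)


def one_way_delay_ms(
    distance_km: float,
    packet_bytes: int,
    link_bps: float,
    queued_bytes: int = 0,
    hops: int = 1,
    per_hop_processing_ms: float = 0.0,
) -> dict:
    """Break one-way delay into its four classic components, in ms.

    Simplification: all hops share the same link_bps, and transmission
    and queuing are counted once rather than per hop.
    """
    parts = {
        "propagation": distance_km / FIBER_KM_PER_S * 1000,
        "transmission": packet_bytes * 8 / link_bps * 1000,
        "queuing": queued_bytes * 8 / link_bps * 1000,
        "processing": hops * per_hop_processing_ms,
    }
    parts["total"] = sum(parts.values())
    return parts


if __name__ == "__main__":
    # Hypothetical path: 2,000 km, 1,500-byte packet, 100 Mbps link,
    # 150 KB already queued (congestion), 12 hops at 0.05 ms each.
    for name, ms in one_way_delay_ms(2000, 1500, 100e6, 150_000, 12, 0.05).items():
        print(f"{name:>12}: {ms:.3f} ms")
```

In this example, the queue built up by congestion (12 ms) adds more delay than the 2,000 km of fiber (10 ms).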

Bandwidth Limitations: Myths and Facts

Bandwidth limitations are often misunderstood. Many people believe higher bandwidth always guarantees a faster internet experience, but actual performance also depends on network congestion, hardware capabilities, and latency. A common myth is that upgrading bandwidth fixes latency problems. In fact, much of latency comes from distance, routing, and queuing, and a bigger connection does not remove any of these. Bandwidth sets the maximum data transfer capacity, but efficient data transmission depends on both bandwidth and latency.
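One concrete reason extra bandwidth does not always translate into speed comes from how TCP works. A single TCP connection can have at most one receive window of unacknowledged data in flight per round trip, so its throughput is capped at roughly window / RTT. The sketch below assumes a 65,535-byte window (the maximum without the TCP window-scaling option) and a 100 ms RTT. Modern stacks usually scale the window much higher, so the figures illustrate the principle rather than a typical connection. `bdp_bytes` computes the bandwidth-delay product: the amount of data that must be in flight to keep the link full.

```python
def tcp_throughput_bps(link_bps: float, window_bytes: int, rtt_s: float) -> float:
    """Approximate ceiling for one TCP flow: min(link rate, window / RTT).

    Ignores packet loss, slow start, and congestion-control dynamics.
    """
    window_limit = window_bytes * 8 / rtt_s
    return min(link_bps, window_limit)


def bdp_bytes(link_bps: float, rtt_s: float) -> float:
    """Bandwidth-delay product: bytes needed in flight to fill the link."""
    return link_bps * rtt_s / 8


if __name__ == "__main__":
    window, rtt = 65_535, 0.100  # unscaled maximum window, 100 ms RTT
    for link in (100e6, 1e9):
        rate = tcp_throughput_bps(link, window, rtt)
        print(f"{link / 1e6:.0f} Mbps link -> {rate / 1e6:.2f} Mbps per flow "
              f"(needs {bdp_bytes(link, rtt) / 1e6:.2f} MB in flight to fill)")
```

Under these assumptions, the 100 Mbps and 1 Gbps links deliver the same rate of about 5.24 Mbps to this flow. Only a larger window or a shorter RTT would raise it.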

Real-World Examples: Bandwidth and Latency in Action

Streaming 4K ultra-high-definition video requires high bandwidth to move large volumes of data smoothly. Online gaming, by contrast, depends heavily on low latency to minimize lag and stay responsive in real time. Video conferencing platforms such as Zoom need both: enough bandwidth for good video quality, and low latency so conversations flow without awkward delays. Content delivery networks (CDNs) serve content from geographically distributed servers close to users. This cuts latency and spreads the load, so downloads complete faster.
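Much of a CDN's latency benefit comes from distance. An edge server close to the user has a much smaller propagation floor than a distant origin server. The sketch below compares hypothetical server locations by their minimum fiber round-trip time and picks the lowest. It assumes about 200,000 km/s in fiber and ignores routing detours. Real CDNs route requests with DNS, anycast, and live measurements rather than a static distance table.

```python
FIBER_KM_PER_S = 200_000  # approximate signal speed in fiber (km/s)

# Hypothetical fiber path lengths from one user to candidate servers.
SERVERS_KM = {
    "origin (other continent)": 9_000,
    "regional edge": 800,
    "metro edge": 50,
}


def min_rtt_ms(path_km: float) -> float:
    """Propagation-only round-trip floor for a fiber path, in ms."""
    return 2 * path_km / FIBER_KM_PER_S * 1000


def nearest_server(servers_km: dict) -> str:
    """Pick the server with the lowest propagation round-trip floor."""
    return min(servers_km, key=lambda name: min_rtt_ms(servers_km[name]))


if __name__ == "__main__":
    for name, km in SERVERS_KM.items():
        print(f"{name:<26} {min_rtt_ms(km):6.1f} ms")
    print("selected:", nearest_server(SERVERS_KM))
```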

Optimizing Network Performance: Bandwidth or Latency?

Optimizing network performance requires understanding the distinct roles of bandwidth and latency. Bandwidth measures data transfer capacity, while latency measures the delay in data transmission. For applications such as video streaming or large file downloads, high bandwidth is crucial to move large data volumes efficiently. Latency is more critical in real-time applications such as online gaming or VoIP calls, where minimizing delay ensures a seamless user experience.

Choosing the Right Solution: Bandwidth or Latency Improvements

Whether to prioritize bandwidth or latency improvements depends on the application. High bandwidth is crucial for data-intensive tasks such as video streaming and bulk transfers. Low latency is essential for real-time communication and gaming. Measuring current bottlenecks with performance metrics such as throughput and round-trip time shows whether a bandwidth upgrade or a latency reduction will pay off more.
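Performance metrics can feed a simple what-if comparison before committing to an upgrade. The sketch below uses a first-order model, in which delivery time is one-way latency plus size divided by bandwidth. It compares doubling bandwidth against halving latency for two hypothetical workloads. The link figures and payload sizes are assumptions, and real gains also depend on protocol behavior and contention.

```python
def delivery_seconds(size_bytes: float, latency_s: float, bandwidth_bps: float) -> float:
    """First-order model: fixed delay plus serialization time."""
    return latency_s + size_bytes * 8 / bandwidth_bps


def compare_upgrades(size_bytes: float, latency_s: float, bandwidth_bps: float) -> dict:
    """Delivery time now, with 2x bandwidth, and with half the latency."""
    return {
        "baseline": delivery_seconds(size_bytes, latency_s, bandwidth_bps),
        "2x bandwidth": delivery_seconds(size_bytes, latency_s, bandwidth_bps * 2),
        "half latency": delivery_seconds(size_bytes, latency_s / 2, bandwidth_bps),
    }


def better_upgrade(size_bytes: float, latency_s: float, bandwidth_bps: float) -> str:
    """Name whichever single upgrade gives the shorter delivery time."""
    options = compare_upgrades(size_bytes, latency_s, bandwidth_bps)
    del options["baseline"]
    return min(options, key=options.get)


if __name__ == "__main__":
    # Hypothetical link: 40 ms one-way latency, 50 Mbps.
    for label, size in [("game update (200 B)", 200), ("backup (5 GB)", 5 * 10**9)]:
        times = compare_upgrades(size, 0.040, 50e6)
        summary = ", ".join(f"{k} {v:.4f} s" for k, v in times.items())
        print(f"{label}: {summary} -> prefer {better_upgrade(size, 0.040, 50e6)}")
```

For the tiny game update, halving latency nearly halves the delivery time while doubling bandwidth changes almost nothing. For the 5 GB backup, the opposite holds.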

Bandwidth Infographic (libterm.com)