Throughput is the rate at which a network successfully transmits data or a system completes work, and it directly shapes overall performance and efficiency. Higher throughput generally means better use of the available resources, which matters for applications that move large volumes of data quickly. This article explains how throughput differs from latency and how each can be measured and improved.

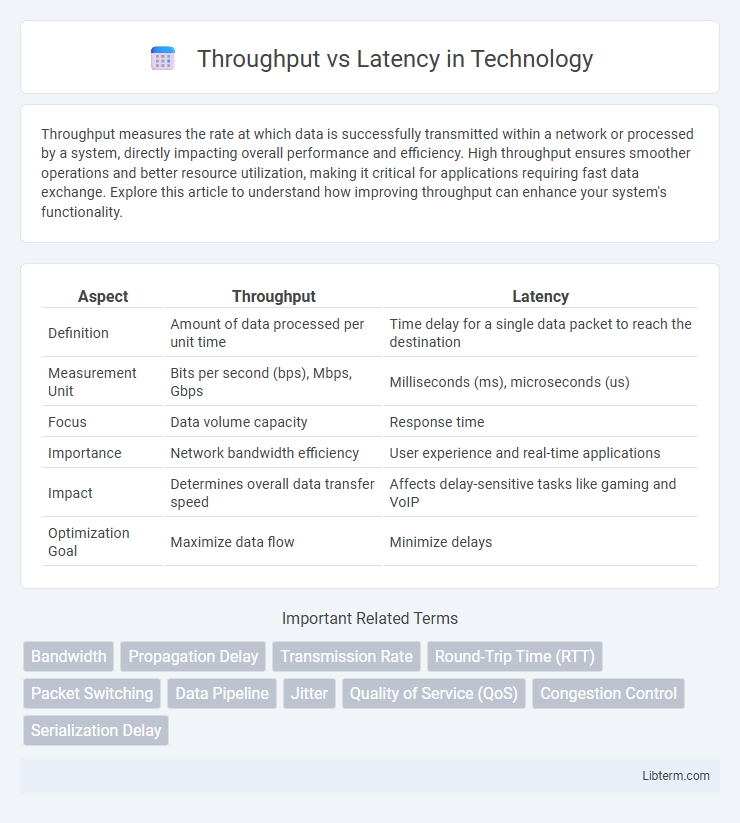

Table of Comparison

| Aspect | Throughput | Latency |
|---|---|---|
| Definition | Amount of data processed per unit time | Time delay for a single data packet to reach the destination |
| Measurement Unit | Bits per second (bps), Mbps, Gbps | Milliseconds (ms), microseconds (µs) |
| Focus | Data volume capacity | Response time |
| Importance | Network bandwidth efficiency | User experience and real-time applications |
| Impact | Determines overall data transfer speed | Affects delay-sensitive tasks like gaming and VoIP |
| Optimization Goal | Maximize data flow | Minimize delays |

Understanding Throughput and Latency: Key Definitions

Throughput measures the amount of data successfully processed or transmitted within a given time frame, typically expressed in bits per second (bps) or transactions per second (TPS). Latency refers to the time delay between initiating a request and receiving a response, often measured in milliseconds (ms), reflecting speed and responsiveness. Understanding throughput and latency is crucial for optimizing network performance, system efficiency, and user experience in applications like streaming, gaming, and data centers.

The Fundamental Differences Between Throughput and Latency

Throughput measures the amount of data processed over a specific time period, while latency represents the delay before data transfer begins or a system responds. High throughput indicates efficient data handling capacity, whereas low latency reflects quick response times crucial for real-time applications. Understanding the fundamental difference helps optimize systems by balancing maximum data flow with minimal delays.

How Throughput and Latency Impact System Performance

Throughput determines how much work a system can complete in a given time frame, so it sets the ceiling on how many transactions the system can handle under load. Latency is the delay between issuing a request and receiving its response, so it governs real-time responsiveness and user experience. Balancing throughput and latency is essential for optimizing system performance in applications ranging from network communications to database management.

Measuring Throughput: Metrics and Methods

Throughput measurement relies on metrics such as bits per second (bps), packets per second (pps), and transactions per second (TPS) to evaluate data transfer rates effectively. Methods like benchmarking tools, network analyzers, and performance monitoring systems provide accurate throughput assessment under varying workloads. Analyzing throughput alongside latency helps optimize system performance by identifying bottlenecks in bandwidth or processing speed.
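As a rough illustration of the benchmarking approach, the sketch below times a repeated operation and reports operations per second, and converts a byte count and elapsed time into Mbps. The function names `measure_throughput` and `to_mbps` are hypothetical helpers chosen for this example, not part of any particular tool, and the short default duration is an assumption; a real benchmark would run longer and repeat the measurement.

```python
import time


def measure_throughput(operation, duration_s=0.2):
    """Call `operation` repeatedly for about `duration_s` seconds.

    Returns completed operations per second (hypothetical helper).
    """
    count = 0
    start = time.perf_counter()
    deadline = start + duration_s
    while time.perf_counter() < deadline:
        operation()
        count += 1
    elapsed = time.perf_counter() - start
    return count / elapsed


def to_mbps(bytes_transferred, elapsed_s):
    """Convert a transfer of `bytes_transferred` bytes in `elapsed_s` seconds to Mbps."""
    return bytes_transferred * 8 / elapsed_s / 1_000_000


if __name__ == "__main__":
    ops_per_s = measure_throughput(lambda: sum(range(1000)))
    print(f"{ops_per_s:.0f} operations per second")
    # 125,000,000 bytes moved in 10 seconds is 100 Mbps.
    print(f"{to_mbps(125_000_000, 10):.1f} Mbps")
```

The same loop can express TPS or pps by making `operation` one transaction or one packet send.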

Measuring Latency: Metrics and Methods

Measuring latency involves key metrics such as round-trip time (RTT), response time, and processing delay to evaluate the speed of data transmission across a network. Common methods include packet timestamping, ping tests, and traceroute analyses to identify latency sources and variations within network paths. Accurate latency measurement enables optimization of system performance, particularly in applications where real-time data processing is critical.
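Latency is usually reported as a distribution rather than a single number, because a few slow responses can dominate user experience. The sketch below timestamps each call to an operation and summarizes the samples with a nearest-rank percentile. The helper names (`measure_latencies_ms`, `percentile`, `summarize`) and the choice of p95 and p99 are assumptions made for this example; it times a local callable, not a network round trip.

```python
import math
import statistics
import time


def measure_latencies_ms(operation, runs=100):
    """Time each call to `operation` and return the samples in milliseconds."""
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        operation()
        samples.append((time.perf_counter() - start) * 1000.0)
    return samples


def percentile(samples, pct):
    """Nearest-rank percentile: the smallest sample with at least pct% of samples at or below it."""
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def summarize(samples):
    """Return min, median, p95, p99, and max for a list of latency samples."""
    return {
        "min": min(samples),
        "median": statistics.median(samples),
        "p95": percentile(samples, 95),
        "p99": percentile(samples, 99),
        "max": max(samples),
    }


if __name__ == "__main__":
    stats = summarize(measure_latencies_ms(lambda: sum(range(1000))))
    for name, value in stats.items():
        print(f"{name}: {value:.4f} ms")
```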

Real-World Examples: Throughput vs Latency in Action

In high-frequency trading, low latency is critical to execute trades within microseconds, minimizing delay to capitalize on market movements before competitors. Streaming services prioritize high throughput to deliver continuous, high-quality video without buffering, ensuring seamless user experience even with multiple concurrent viewers. Autonomous vehicles rely on a balance of low latency and high throughput to process sensor data rapidly and accurately, enabling real-time decision-making for safe navigation.

Factors Affecting Throughput and Latency

Throughput and latency are influenced by network bandwidth, hardware capabilities, and protocol efficiency. High throughput depends on maximizing bandwidth and minimizing packet loss, while low latency requires reducing transmission delays and processing times. Network congestion, signal interference, and packet size also significantly impact both throughput and latency performance.
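Two of these factors can be put into numbers with simple arithmetic. The sketch below computes the transmission (serialization) delay of one packet on a link, and the throughput ceiling of a sender that may keep only one window of data in flight per round trip. Both function names are hypothetical, and the windowed-sender model is a simplifying assumption that ignores loss, congestion control, and protocol overhead.

```python
def transmission_delay_ms(packet_bytes, bandwidth_bps):
    """Time to put one packet on the wire, in milliseconds."""
    return packet_bytes * 8 / bandwidth_bps * 1000.0


def window_limited_throughput_bps(window_bytes, rtt_ms):
    """Upper bound on throughput when one window is sent per round trip."""
    return window_bytes * 8 * 1000.0 / rtt_ms


if __name__ == "__main__":
    # A 1500-byte packet on a 100 Mbps link takes about 0.12 ms to transmit.
    print(f"{transmission_delay_ms(1500, 100_000_000):.3f} ms")
    # A 65,535-byte window over a 100 ms round trip caps out near 5.24 Mbps,
    # regardless of how much bandwidth the link offers.
    print(f"{window_limited_throughput_bps(65_535, 100) / 1_000_000:.2f} Mbps")
```

The second function shows why latency and throughput are linked: under this model, halving the round-trip time doubles the achievable throughput for the same window.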

Optimizing Systems for Throughput and Latency

Optimizing systems for throughput involves maximizing the amount of data processed within a given time frame, crucial for high-demand applications like streaming or batch processing. Reducing latency focuses on minimizing delay between input and response, essential for real-time systems such as online gaming or financial trading platforms. Balancing throughput and latency requires techniques like parallel processing, efficient resource allocation, and low-latency networking to ensure system performance meets specific application needs.
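The effect of parallel processing can be seen in a small experiment. The sketch below simulates I/O-bound requests with `time.sleep` and runs them once sequentially and once on a thread pool. The function names, the 20 ms service time, and the worker count are all assumptions made for illustration. Parallelism here raises throughput (more requests finished per second) while the latency of each individual request stays about the same.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def simulated_request(item, service_time_s=0.02):
    """Stand-in for an I/O-bound request that takes a fixed service time."""
    time.sleep(service_time_s)
    return item * 2


def run_sequential(items):
    """Process items one after another; return (results, elapsed seconds)."""
    start = time.perf_counter()
    results = [simulated_request(i) for i in items]
    return results, time.perf_counter() - start


def run_parallel(items, workers=4):
    """Process items on a thread pool; return (results, elapsed seconds)."""
    start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=workers) as pool:
        results = list(pool.map(simulated_request, items))
    return results, time.perf_counter() - start


if __name__ == "__main__":
    items = list(range(8))
    _, t_seq = run_sequential(items)
    _, t_par = run_parallel(items)
    print(f"sequential: {len(items) / t_seq:.0f} requests/s")
    print(f"parallel:   {len(items) / t_par:.0f} requests/s")
```

Threads suit this example because the simulated work only waits; CPU-bound work in Python would typically need processes instead.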

Trade-offs Between Throughput and Latency

Trade-offs between throughput and latency are central to performance optimization, because increasing throughput often raises latency through added processing and queuing delays. Systems designed for maximum throughput prioritize handling more transactions per second, which can result in slower response times for individual requests. Conversely, low-latency systems emphasize faster response times, sometimes at the cost of reduced throughput. How to balance these metrics depends on application-specific requirements and resource constraints.
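Batching is one common place where this trade-off shows up. The toy model below assumes each batch pays a fixed overhead plus a per-item cost; the function name `batch_metrics` and the 10 ms and 1 ms figures are illustrative assumptions, not measurements. Larger batches amortize the overhead and raise throughput, but every item in the batch waits for the whole batch to finish.

```python
def batch_metrics(batch_size, overhead_ms=10.0, per_item_ms=1.0):
    """Return (throughput in items/s, batch completion time in ms) for a toy batching model."""
    batch_time_ms = overhead_ms + batch_size * per_item_ms
    throughput_per_s = batch_size / (batch_time_ms / 1000.0)
    return throughput_per_s, batch_time_ms


if __name__ == "__main__":
    for size in (1, 10, 100):
        tput, latency = batch_metrics(size)
        print(f"batch={size:>3}  throughput={tput:7.1f} items/s  latency={latency:6.1f} ms")
```

Under these assumptions, a batch size of 1 yields roughly 91 items per second at 11 ms, while a batch size of 100 yields roughly 909 items per second at 110 ms: about ten times the throughput for ten times the latency.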

Choosing the Right Strategy: When to Prioritize Throughput or Latency

Optimizing system performance means prioritizing throughput or latency according to the application's needs. High throughput benefits batch processing and data-intensive tasks, where the goal is to finish the most work in the least total time. Minimizing latency is crucial for real-time applications like video conferencing and online gaming, where each interaction must feel immediate to the user. Understanding workload patterns and performance goals makes it possible to decide whether to balance the two or to emphasize one over the other.

libterm.com