Warm start techniques initialize a new optimization problem with the solution of a previous, related problem, which typically speeds up convergence. Because fewer iterations are needed, these methods reduce computational time and resource consumption, making them well suited to real-time applications and iterative processes.

Table of Comparison

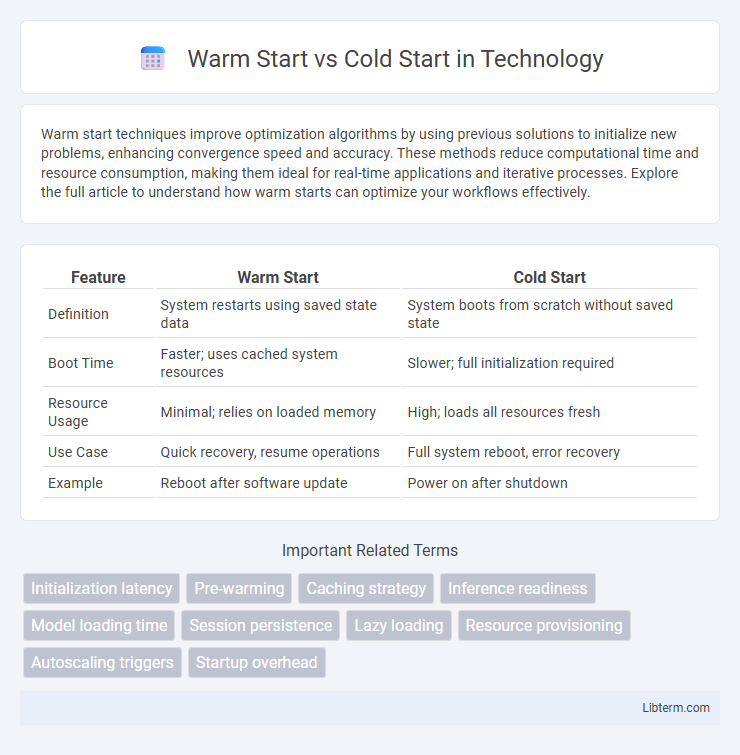

| Feature | Warm Start | Cold Start |
|---|---|---|
| Definition | System restarts using saved state data | System boots from scratch without saved state |
| Boot Time | Faster; uses cached system resources | Slower; full initialization required |
| Resource Usage | Minimal; relies on loaded memory | High; loads all resources fresh |
| Use Case | Quick recovery, resume operations | Full system reboot, error recovery |
| Example | Reboot after software update | Power on after shutdown |

Understanding Warm Start and Cold Start

Warm start refers to initializing a model or system with pre-existing knowledge or parameters from previous training or executions, enabling faster convergence and improved performance. Cold start occurs when a model or system begins from scratch without any prior information, often requiring more data and time to achieve optimal results. Understanding these concepts is crucial for optimizing machine learning workflows and recommendation engines.

Key Differences Between Warm Start and Cold Start

Warm start leverages previous model parameters or solutions to accelerate convergence in machine learning or optimization tasks, while cold start begins training or solving from scratch without any prior information. Warm start typically reduces computational time and improves efficiency by building on existing knowledge, whereas cold start requires more data and resources to achieve optimal performance. The choice between warm and cold start impacts model initialization, training speed, and overall resource utilization in artificial intelligence applications.
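The difference can be illustrated with a minimal sketch, assuming a toy one-dimensional quadratic objective solved by plain gradient descent. The function name `minimize_quadratic`, the step size, and the tolerance are illustrative choices, not taken from any particular library.

```python
def minimize_quadratic(target, x0, lr=0.1, tol=1e-6, max_iter=10_000):
    """Minimize f(x) = 0.5 * (x - target)**2 by gradient descent.

    Returns the approximate minimizer and the number of update steps taken.
    """
    x = x0
    for iteration in range(max_iter):
        grad = x - target          # derivative of the objective at x
        if abs(grad) < tol:        # converged
            return x, iteration
        x -= lr * grad
    return x, max_iter


# Cold start: solve the new problem from an uninformed initial guess.
_, cold_iters = minimize_quadratic(target=10.0, x0=0.0)

# Warm start: a closely related problem (target 9.9) was solved earlier,
# so its solution is reused as the starting point for the new problem.
prev_solution, _ = minimize_quadratic(target=9.9, x0=0.0)
_, warm_iters = minimize_quadratic(target=10.0, x0=prev_solution)

print(f"cold start iterations: {cold_iters}")
print(f"warm start iterations: {warm_iters}")
```

The warm-started run needs fewer iterations only because the two problems are similar; if the new target were far from the old one, the reused solution would offer little or no advantage.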

Importance of Initialization in Systems

Initialization strongly affects system performance because it determines how quickly, and sometimes how accurately, algorithms converge. Warm start uses previous results or prior information to jump-start the process, which improves efficiency and reduces computational cost. Cold start begins without prior data and often requires more iterations and resources, which can slow down learning or optimization tasks, especially in machine learning and recommender systems.

Warm Start: Benefits and Use Cases

Warm start optimizes machine learning model training by utilizing previously learned parameters, significantly reducing training time and computational resources compared to a cold start. This approach enhances performance in transfer learning, iterative model refinement, and real-time adaptation scenarios where rapid convergence is critical. Key use cases include fine-tuning pre-trained neural networks, hyperparameter optimization, and continuous learning systems in dynamic environments.
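As a rough sketch of iterative model refinement, the example below pretrains a tiny linear model on one dataset and then fine-tunes it on slightly shifted data, comparing it with a model trained from zero under the same small epoch budget. The data, the `train` and `mse` helpers, and the hyperparameters are all assumptions made for illustration.

```python
def mse(params, data):
    """Mean squared error of the line y = w * x + b on (x, y) pairs."""
    w, b = params
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)


def train(data, params=(0.0, 0.0), epochs=20, lr=0.05):
    """Run full-batch gradient descent starting from the given parameters."""
    w, b = params
    n = len(data)
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


xs = [0.0, 0.5, 1.0, 1.5, 2.0]
old_data = [(x, 2.0 * x + 1.0) for x in xs]   # original task
new_data = [(x, 2.2 * x + 0.9) for x in xs]   # slightly shifted task

# Pretrain on the original task with a generous budget.
pretrained = train(old_data, epochs=500)

# Same short budget on the new task, with and without the learned parameters.
warm_params = train(new_data, params=pretrained, epochs=20)
cold_params = train(new_data, params=(0.0, 0.0), epochs=20)

warm_loss = mse(warm_params, new_data)
cold_loss = mse(cold_params, new_data)
print(f"warm-start loss after 20 epochs: {warm_loss:.5f}")
print(f"cold-start loss after 20 epochs: {cold_loss:.5f}")
```

Libraries such as scikit-learn expose a similar idea through a `warm_start` option on some estimators; the hand-written loop here only mirrors the concept.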

Cold Start: Challenges and Solutions

Cold start challenges arise from the absence of prior data, which makes it difficult for recommendation systems to provide accurate predictions for new users or items. Solutions include content-based filtering, user demographic data, and transfer learning from related domains to improve initial recommendations. Hybrid models that combine collaborative filtering with side information can mitigate the cold start problem by enriching sparse datasets.
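One way to picture the hybrid approach is a recommender that uses interaction history when it exists and falls back to content-based matching for a new user. The sketch below is deliberately simplified; the catalog, the `HISTORY` data, and the scoring rules are hypothetical and stand in for a real collaborative filtering model.

```python
from collections import Counter

# Hypothetical catalog: item -> descriptive tags (the "side information").
ITEM_TAGS = {
    "laptop": {"electronics", "work"},
    "headphones": {"electronics", "music"},
    "guitar": {"music", "hobby"},
    "desk": {"furniture", "work"},
}

# Hypothetical interaction history: user -> items they interacted with.
HISTORY = {
    "alice": ["laptop", "headphones"],
    "bob": ["laptop", "desk"],
}


def collaborative_scores(user):
    """Score unseen items by overlap with users who share past items."""
    seen = set(HISTORY[user])
    scores = Counter()
    for other, items in HISTORY.items():
        if other == user:
            continue
        overlap = len(seen & set(items))
        if overlap == 0:
            continue  # no shared items, so this user carries no signal
        for item in items:
            if item not in seen:
                scores[item] += overlap
    return scores


def content_scores(interests):
    """Score items by how many tags match the user's stated interests."""
    return Counter({
        item: len(tags & interests)
        for item, tags in ITEM_TAGS.items()
        if tags & interests
    })


def recommend(user, interests=frozenset(), k=2):
    """Use history when available; otherwise fall back to content matching."""
    if HISTORY.get(user):
        scores = collaborative_scores(user)
    else:
        scores = content_scores(set(interests))  # cold start path
    return [item for item, _ in scores.most_common(k)]


print(recommend("alice"))                      # existing user
print(recommend("carol", interests={"music"})) # new user, no history
```

The fallback only works if new users or items arrive with some side information (declared interests, demographics, item tags); without it, a popularity-based default is a common last resort.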

Impact on System Performance

Warm start significantly improves system performance by reducing initialization time and enabling faster response due to retained system states and cached data. Cold start, lacking prior context and cached resources, causes longer latency and increased computational overhead during startup, negatively impacting user experience. Systems optimized for warm starts demonstrate more efficient resource utilization and quicker task execution compared to cold start scenarios.

Application Scenarios: When to Use Warm or Cold Start

Warm start is ideal in machine learning applications where models need frequent updates with new data, such as online recommendation systems or real-time fraud detection, because it allows faster convergence by reusing previous training states. Cold start is necessary in scenarios involving entirely new models or domains without prior data, like launching a new app or introducing novel product categories in e-commerce, where the system lacks historical information. The choice between warm and cold start depends on whether pretrained models are available and on whether rapid adaptation matters more than building from scratch, which avoids carrying over bias from earlier data.

Strategies to Minimize Cold Start Latency

Strategies to minimize cold start latency in serverless computing include using provisioned concurrency, which keeps function instances initialized and ready to respond without start-up delay. Keeping dependencies lightweight and reducing the function package size shortens initialization time when a cold start does occur. Additionally, caching and pre-warming techniques can reduce how often cold starts happen by keeping execution environments active.

Best Practices for Efficient Start-Up Processes

Warm start techniques leverage pre-loaded data and cached states to reduce initialization time, improving system responsiveness compared to cold start methods that require full loading from scratch. Implementing state preservation and incremental loading optimizes resource usage and accelerates performance during restarts. Employing warm start practices in machine learning model deployment and application server restarts enhances operational efficiency and minimizes downtime.
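State preservation can be sketched as a checkpoint that is written during normal operation and restored on the next start. The example below is a minimal illustration using a JSON file; the state layout, the function names, and the file name `state.json` are assumptions, not a prescribed format.

```python
import json
import os
import tempfile


def cold_initialize():
    """Hypothetical full initialization: rebuild all state from scratch."""
    return {"processed": 0, "cache": {}}


def start(checkpoint_path):
    """Return (state, mode): restore saved state if present, else start cold."""
    if os.path.exists(checkpoint_path):
        with open(checkpoint_path, encoding="utf-8") as fh:
            return json.load(fh), "warm"
    return cold_initialize(), "cold"


def save(state, checkpoint_path):
    """Write to a temporary file, then rename, so a crash cannot leave a partial checkpoint."""
    tmp_path = checkpoint_path + ".tmp"
    with open(tmp_path, "w", encoding="utf-8") as fh:
        json.dump(state, fh)
    os.replace(tmp_path, checkpoint_path)


with tempfile.TemporaryDirectory() as workdir:
    path = os.path.join(workdir, "state.json")

    # First run: no checkpoint exists, so the system starts cold.
    state, first_mode = start(path)
    state["processed"] += 3
    state["cache"]["user:1"] = "profile"
    save(state, path)

    # Restart: the saved state is restored instead of being rebuilt.
    restored, second_mode = start(path)

print(first_mode, second_mode, restored["processed"])
```

A real system would also need to decide when a checkpoint is too stale or incompatible to reuse, in which case falling back to a cold start is the safer choice.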

Future Trends in Start-Up Optimization

Future trends in start-up optimization emphasize leveraging advanced machine learning models to mitigate cold start challenges by harnessing cross-domain data and user behavior prediction. Integrating real-time analytics and AI-driven personalization enhances warm start strategies, enabling faster user engagement and retention. Emerging technologies like federated learning and synthetic data generation offer novel solutions to improve recommendation accuracy and scalability in both warm and cold start scenarios.


libterm.com
