Federated Learning enables collaborative model training across multiple devices while preserving data privacy by keeping data localized. This approach reduces the risks associated with data sharing and supports compliance with data protection regulations. The rest of this article compares Federated Learning with Differential Privacy and shows how the two can be combined in your AI projects.

Table of Comparison

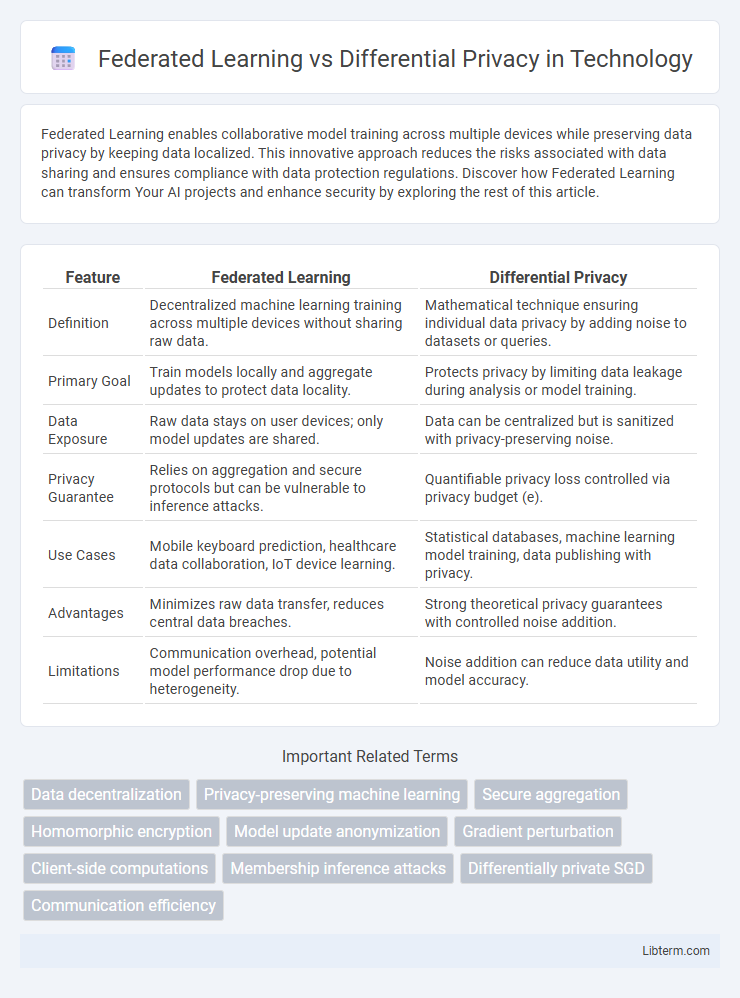

| Feature | Federated Learning | Differential Privacy |
|---|---|---|
| Definition | Decentralized machine learning training across multiple devices without sharing raw data. | Mathematical technique ensuring individual data privacy by adding noise to datasets or queries. |
| Primary Goal | Train models locally and aggregate updates to protect data locality. | Protects privacy by limiting data leakage during analysis or model training. |
| Data Exposure | Raw data stays on user devices; only model updates are shared. | Data can be centralized but is sanitized with privacy-preserving noise. |
| Privacy Guarantee | Relies on aggregation and secure protocols but can be vulnerable to inference attacks. | Quantifiable privacy loss controlled via privacy budget (ε). |
| Use Cases | Mobile keyboard prediction, healthcare data collaboration, IoT device learning. | Statistical databases, machine learning model training, data publishing with privacy. |
| Advantages | Minimizes raw data transfer, reduces central data breaches. | Strong theoretical privacy guarantees with controlled noise addition. |
| Limitations | Communication overhead, potential model performance drop due to heterogeneity. | Noise addition can reduce data utility and model accuracy. |

Introduction to Federated Learning and Differential Privacy

Federated Learning enables decentralized model training by allowing multiple devices to collaboratively learn without sharing raw data, preserving user privacy and reducing data transfer risks. Differential Privacy offers a mathematical framework to quantify and limit privacy leakage during data analysis by introducing calibrated noise, so that the output reveals very little about whether any single individual's data was included. Combining the two strengthens protection: raw data stays on the device, and the model updates that leave it are bounded and noised before they are aggregated.

Key Concepts: Definitions and Principles

Federated learning is a decentralized machine learning approach in which models are trained across multiple devices or servers that each hold local data samples. The data itself is never exchanged; only model updates leave the device. Differential privacy is a mathematical framework that provides strong privacy guarantees by introducing controlled random noise into the data or query results, which bounds how much any output can reveal about an individual record in a dataset. Both concepts prioritize data privacy: federated learning focuses on data locality during model training, while differential privacy works at the algorithmic level by limiting the influence of each individual contribution.
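
To make the training loop concrete, here is a minimal sketch of federated averaging on a toy linear model. It is an illustration under stated assumptions, not a production implementation: the function names (`local_update`, `federated_average`, `run_rounds`), the learning rate, and the toy data are all hypothetical, and the "devices" are simply Python lists in one process.

```python
from typing import List, Tuple

Weights = List[float]            # [slope, intercept] of the model y = w*x + b
Dataset = List[Tuple[float, float]]


def local_update(weights: Weights, data: Dataset,
                 lr: float = 0.1, epochs: int = 5) -> Weights:
    """Train on one client's data only; the raw data never leaves this function."""
    w, b = weights
    for _ in range(epochs):
        # Gradients of the mean squared error, computed before either update.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / len(data)
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / len(data)
        w -= lr * grad_w
        b -= lr * grad_b
    return [w, b]


def federated_average(client_weights: List[Weights],
                      client_sizes: List[int]) -> Weights:
    """Server step: average client weights, weighted by local dataset size."""
    total = sum(client_sizes)
    dim = len(client_weights[0])
    return [
        sum(cw[i] * n for cw, n in zip(client_weights, client_sizes)) / total
        for i in range(dim)
    ]


def run_rounds(clients: List[Dataset], rounds: int = 50) -> Weights:
    """Repeat: broadcast global weights, train locally, aggregate."""
    global_weights = [0.0, 0.0]
    for _ in range(rounds):
        updates = [local_update(global_weights, data) for data in clients]
        global_weights = federated_average(updates, [len(d) for d in clients])
    return global_weights


# Hypothetical toy data: both clients hold points from y = 2x + 1.
clients = [
    [(0.0, 1.0), (1.0, 3.0)],
    [(0.5, 2.0), (1.5, 4.0), (2.0, 5.0)],
]
global_model = run_rounds(clients)
```

Only the weight lists cross the client boundary here. With this toy data the global weights should drift toward the slope and intercept that generated the points, though the exact values depend on the learning rate and number of rounds.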

How Federated Learning Ensures Data Security

Federated Learning enhances data security by processing data locally on users' devices, eliminating the need to transfer raw data to a central server. This decentralized approach reduces the risk of data breaches, as only model updates, not sensitive information, are shared during training. Encrypted communication and secure aggregation techniques further protect these updates from interception or tampering, ensuring robust privacy preservation throughout the learning process.
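
The idea behind secure aggregation can be sketched with pairwise masks that cancel when the server sums the updates. This is a toy illustration only: a single seeded random generator stands in for the secrets that each pair of clients would agree on, and real protocols additionally rely on key exchange, finite-field arithmetic, and handling of clients that drop out. The names `masked_updates` and `aggregate` are hypothetical.

```python
import random
from typing import Dict, List


def masked_updates(updates: Dict[int, List[float]],
                   seed: int = 0) -> Dict[int, List[float]]:
    """Hide each client's update behind pairwise masks that cancel in the sum."""
    ids = sorted(updates)
    dim = len(updates[ids[0]])
    masked = {i: list(updates[i]) for i in ids}
    rng = random.Random(seed)  # assumption: stand-in for pairwise shared secrets
    for pos, a in enumerate(ids):
        for b in ids[pos + 1:]:
            mask = [rng.uniform(-100.0, 100.0) for _ in range(dim)]
            for k in range(dim):
                masked[a][k] += mask[k]   # client a adds the shared mask
                masked[b][k] -= mask[k]   # client b subtracts the same mask
    return masked


def aggregate(masked: Dict[int, List[float]]) -> List[float]:
    """Server step: sum the masked vectors; the masks cancel out."""
    dim = len(next(iter(masked.values())))
    return [sum(vec[k] for vec in masked.values()) for k in range(dim)]


updates = {1: [1.0, 2.0], 2: [3.0, 4.0], 3: [5.0, 6.0]}
masked = masked_updates(updates)
total = aggregate(masked)  # close to [9.0, 12.0], up to floating-point error
```

The server sees only the masked vectors, which individually look like noise, yet their sum matches the sum of the true updates.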

The Role of Differential Privacy in Data Protection

Differential Privacy enhances data protection by adding calibrated random noise to query results, model updates, or datasets, so that individual user information remains confidential during analysis. In federated learning, it is typically applied to the model updates that devices send for aggregation, which limits what those updates can reveal about any one participant's data. This integration reduces privacy risk in decentralized machine learning environments, at a cost in utility that depends on how much noise is added.
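
As a small illustration of noise addition, the sketch below applies the Laplace mechanism to a counting query. A count changes by at most 1 when one record is added or removed, so its sensitivity is 1 and the noise scale is 1/ε. The function names and the sample data are hypothetical, and the sampler uses ordinary floating-point arithmetic, which a hardened implementation would need to treat more carefully.

```python
import random
from typing import Callable, Optional, Sequence


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) as the difference of two exponential draws."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(records: Sequence, predicate: Callable[[object], bool],
                  epsilon: float, rng: Optional[random.Random] = None) -> float:
    """Return a noisy count of records matching the predicate."""
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    rng = rng or random.Random()
    true_count = sum(1 for r in records if predicate(r))
    sensitivity = 1.0  # one record changes a count by at most 1
    return true_count + laplace_noise(sensitivity / epsilon, rng)


# Hypothetical data: ages of five participants.
ages = [34, 51, 29, 62, 45]
noisy = private_count(ages, lambda age: age >= 45, epsilon=1.0)
```

Each call returns a different value scattered around the true count of 3. A smaller ε widens the scatter and strengthens the privacy guarantee.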

Core Differences: Federated Learning vs Differential Privacy

Federated Learning enables decentralized model training by aggregating local updates from multiple devices without sharing raw data, emphasizing data locality and collaboration across edge devices. Differential Privacy provides a mathematical guarantee that the output of an analysis changes very little whether or not any one individual's data is included, which it achieves by adding calibrated noise. The core difference is that Federated Learning is an architecture for where data is processed, whereas Differential Privacy is a quantifiable bound on what an output can leak, enforced through noise injection.

Use Cases: Real-World Applications and Industries

Federated Learning is widely used in healthcare for collaborative disease prediction across hospitals while preserving patient data privacy, and in finance for fraud detection without sharing sensitive client information. Differential Privacy is commonly applied in government statistics to release anonymized census data, and in tech companies to protect user data in personalized recommendations and ad targeting. Both technologies enable data-driven insights in sectors like telecommunications, IoT, and autonomous vehicles, enhancing privacy and regulatory compliance.

Privacy Risks and Threats: Comparing Both Approaches

Federated learning reduces privacy risks by keeping raw data localized on user devices, minimizing centralized data exposure and potential data breaches. Differential privacy introduces noise to datasets or query results, protecting individual data points even when data is aggregated or centralized, mitigating re-identification threats. While federated learning limits data transfer vulnerabilities, differential privacy offers mathematically quantifiable guarantees against inference attacks, highlighting complementary strengths in privacy protection.

Integrating Federated Learning with Differential Privacy

Integrating Federated Learning with Differential Privacy enhances data security by enabling collaborative model training across decentralized devices while keeping individual data points confidential through noise addition and privacy budgets. This combination reduces the risk of data leakage in federated environments by applying rigorous mathematical guarantees to the shared updates, allowing scalable and privacy-preserving machine learning. Practical applications in healthcare and finance aim to retain useful model accuracy without exposing sensitive user information, which makes this integration relevant for compliance with data protection regulations.
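
One common way to combine the two is to clip each client's update to a fixed norm and add Gaussian noise to the sum before averaging. The sketch below shows that pattern under stated assumptions: `clip_norm` and `noise_multiplier` are hypothetical parameter names, and translating a noise multiplier into a concrete (ε, δ) guarantee requires a privacy accounting step that is not shown here.

```python
import math
import random
from typing import List, Optional


def clip_update(update: List[float], clip_norm: float) -> List[float]:
    """Scale the update down so its L2 norm is at most clip_norm."""
    norm = math.sqrt(sum(u * u for u in update))
    scale = min(1.0, clip_norm / norm) if norm > 0 else 1.0
    return [u * scale for u in update]


def private_average(updates: List[List[float]], clip_norm: float,
                    noise_multiplier: float,
                    rng: Optional[random.Random] = None) -> List[float]:
    """Clip every client update, sum, add Gaussian noise, then average."""
    rng = rng or random.Random()
    clipped = [clip_update(u, clip_norm) for u in updates]
    dim = len(updates[0])
    # Clipping bounds each client's influence on the sum by clip_norm,
    # so the noise standard deviation is set relative to that bound.
    sigma = noise_multiplier * clip_norm
    noisy_sum = [
        sum(c[k] for c in clipped) + rng.gauss(0.0, sigma)
        for k in range(dim)
    ]
    return [s / len(updates) for s in noisy_sum]


# Hypothetical client updates (for example, weight deltas from local training).
client_updates = [[3.0, 4.0], [0.3, 0.4], [-0.6, 0.8]]
new_delta = private_average(client_updates, clip_norm=1.0, noise_multiplier=1.1)
```

Clipping is what makes the noise meaningful: without a bound on each update, no finite amount of noise could hide a single client's contribution.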

Challenges and Limitations of Both Techniques

Federated learning faces challenges such as high communication costs, potential model poisoning attacks, and the difficulty of handling heterogeneous data across decentralized devices. Differential privacy involves a trade-off between privacy guarantees and model accuracy: the added noise can degrade utility and limit fine-grained analysis. Both techniques encounter limitations in scalability and robustness, so they must be tuned carefully to protect privacy without compromising machine learning performance.
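
The privacy and accuracy trade-off can be made concrete with a little arithmetic. For the Laplace mechanism, the noise scale is the query's sensitivity divided by ε, and the expected absolute error equals that scale. The helper below is a hypothetical illustration for a query with sensitivity 1, not part of any library.

```python
def laplace_scale(sensitivity: float, epsilon: float) -> float:
    """Noise scale of the Laplace mechanism; also its expected absolute error."""
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    return sensitivity / epsilon


# Smaller epsilon means stronger privacy and a larger typical error.
for epsilon in (0.1, 1.0, 10.0):
    scale = laplace_scale(1.0, epsilon)
    print(f"epsilon={epsilon:>4}: typical error of about {scale:g}")
```

Cutting ε by a factor of ten makes the typical error ten times larger, which is why very strict privacy budgets can make fine-grained statistics unusable.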

Future Trends and Innovations in Data Privacy

Federated learning is evolving to enhance model accuracy while minimizing data exposure by enabling decentralized data processing across multiple devices, significantly reducing privacy risks. Differential privacy innovations focus on refining noise-adding techniques to balance data utility with robust privacy guarantees, enabling safer data sharing and analytics. Future trends include integrating federated learning with differential privacy to create hybrid models that optimize data privacy and machine learning performance for sectors like healthcare and finance.

Federated Learning Infographic

libterm.com