Hadoop is an open-source framework designed for distributed storage and processing of large data sets using simple programming models. It enables scalable, fault-tolerant data management across clusters of commodity hardware, making it essential for big data analytics and enterprise data solutions. Discover how Hadoop can transform your data strategy by exploring the rest of this article.

Table of Comparison

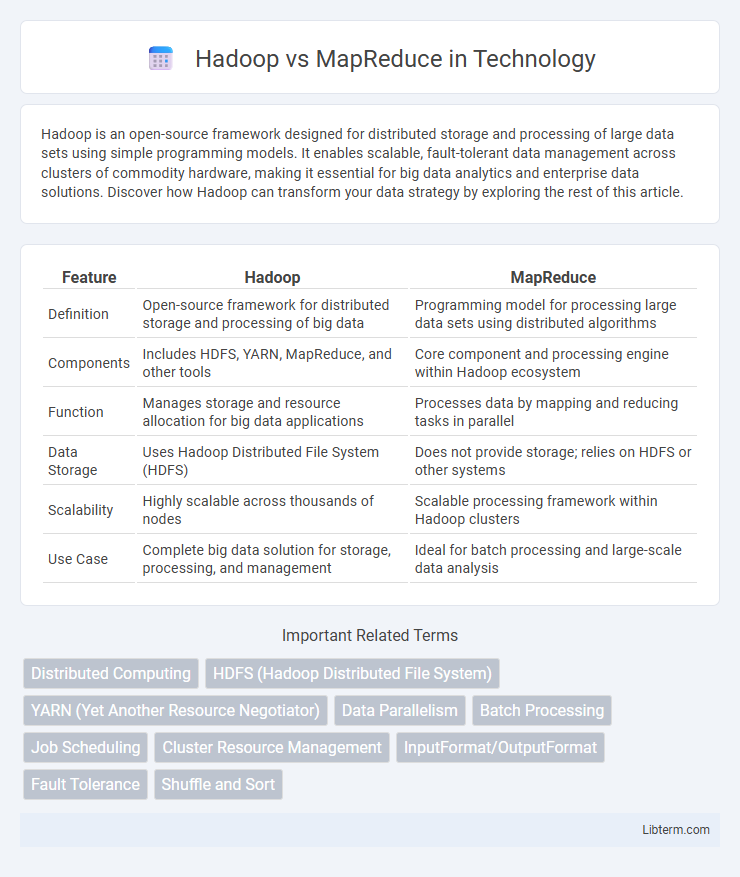

| Feature | Hadoop | MapReduce |

|---|---|---|

| Definition | Open-source framework for distributed storage and processing of big data | Programming model for processing large data sets using distributed algorithms |

| Components | Includes HDFS, YARN, MapReduce, and other tools | Core component and processing engine within Hadoop ecosystem |

| Function | Manages storage and resource allocation for big data applications | Processes data by mapping and reducing tasks in parallel |

| Data Storage | Uses Hadoop Distributed File System (HDFS) | Does not provide storage; relies on HDFS or other systems |

| Scalability | Highly scalable across thousands of nodes | Scalable processing framework within Hadoop clusters |

| Use Case | Complete big data solution for storage, processing, and management | Ideal for batch processing and large-scale data analysis |

Introduction to Hadoop and MapReduce

Hadoop is an open-source framework designed for distributed storage and processing of large datasets across clusters of commodity hardware, leveraging the Hadoop Distributed File System (HDFS) for scalable data storage. MapReduce is a programming model and processing technique within the Hadoop ecosystem that enables parallel computation by dividing tasks into map and reduce functions to efficiently handle big data workloads. Together, Hadoop and MapReduce form a powerful solution for scalable, fault-tolerant data processing in large-scale data analytics.

Core Concepts: Hadoop Explained

Hadoop is an open-source framework designed for distributed storage and processing of large data sets across clusters of computers using simple programming models. It consists of two main components: Hadoop Distributed File System (HDFS) for scalable data storage and MapReduce for parallel processing of data. MapReduce is a programming model within Hadoop that divides tasks into smaller sub-tasks, processes them in parallel, and aggregates the results, enabling efficient handling of big data workloads.

Understanding MapReduce Functionality

MapReduce is a programming model designed for processing large data sets with a distributed algorithm on a Hadoop cluster. It works by dividing tasks into two phases: the Map function processes input data and generates intermediate key-value pairs, while the Reduce function aggregates these pairs to produce the final output. Understanding MapReduce functionality is essential for optimizing data processing workflows within the Hadoop ecosystem, enabling scalable and efficient handling of big data.

Hadoop Architecture Overview

Hadoop architecture consists of the Hadoop Distributed File System (HDFS) for scalable storage and the MapReduce framework for distributed data processing. HDFS splits large datasets into blocks stored across multiple nodes, ensuring fault tolerance and high throughput. MapReduce processes data by dividing tasks into Map and Reduce phases, enabling parallel computation across the cluster for efficient handling of big data workloads.

MapReduce Processing Model

MapReduce processing model divides data into independent chunks processed in parallel by map tasks, which generate intermediate key-value pairs subsequently aggregated by reduce tasks to produce the final output. This model enhances scalability and fault tolerance by distributing workload across multiple nodes in a Hadoop cluster. Efficient handling of large-scale data sets with automatic re-execution of failed tasks makes MapReduce a foundational component of the Hadoop ecosystem.

Key Differences Between Hadoop and MapReduce

Hadoop is an open-source framework designed for distributed storage and processing of large data sets using clusters of commodity hardware, while MapReduce is a programming model and processing technique within the Hadoop ecosystem for parallel data processing. Hadoop comprises two main components: the Hadoop Distributed File System (HDFS) for scalable storage and the MapReduce engine for batch processing, whereas MapReduce specifically handles the execution of map and reduce tasks to transform and summarize data. The key difference lies in Hadoop providing the infrastructure platform including storage and resource management, whereas MapReduce serves as the computational model that runs on that platform to process data efficiently.

Performance Comparison: Hadoop vs MapReduce

Hadoop is an open-source framework that facilitates distributed storage and processing of large datasets across clusters, while MapReduce is a programming model within Hadoop designed for parallel data processing. In performance terms, Hadoop benefits from its ecosystem, including HDFS for data storage and YARN for resource management, optimizing job execution and fault tolerance. MapReduce alone may show limited efficiency without Hadoop's infrastructure, as Hadoop enhances performance through data locality and cluster scalability.

Use Cases and Applications

Hadoop excels in distributed storage and large-scale data processing, making it ideal for handling big data workloads like data warehousing, log processing, and machine learning pipelines. MapReduce, as a programming model within Hadoop, is specifically tailored for batch processing tasks such as indexing web pages, analyzing social media data, and performing ETL (Extract, Transform, Load) operations. Enterprises leverage Hadoop's ecosystem for scalable data storage and real-time analytics, while MapReduce optimizes parallel processing for complex computations across massive datasets.

Advantages and Limitations

Hadoop offers a comprehensive ecosystem for distributed storage and processing of big data, enabling scalability and fault tolerance through the Hadoop Distributed File System (HDFS) and resource management via YARN. MapReduce, a core component of Hadoop, excels in parallel processing of large datasets by breaking tasks into mappable and reducible operations, but it faces limitations with iterative algorithms and real-time processing demands. While Hadoop's architecture supports diverse data processing tools and simplifies cluster management, MapReduce's batch processing model can lead to high latency and is less efficient for complex data workflows compared to newer frameworks like Apache Spark.

Choosing Between Hadoop and MapReduce

Choosing between Hadoop and MapReduce depends on the scope and requirements of your big data project. Hadoop is a comprehensive ecosystem that includes HDFS for distributed storage, YARN for resource management, and supports multiple processing models, making it suitable for complex data workflows. MapReduce, as a programming model specifically for processing large datasets with parallel computation, is ideal for batch processing but limited compared to the broader capabilities of the Hadoop framework.

Hadoop Infographic

libterm.com

libterm.com