Model decay occurs when machine learning models lose accuracy over time due to changes in data patterns or environment. This phenomenon can impact your model's performance and reliability, leading to outdated or biased predictions. Explore the rest of this article to learn effective strategies to detect and prevent model decay.

Table of Comparison

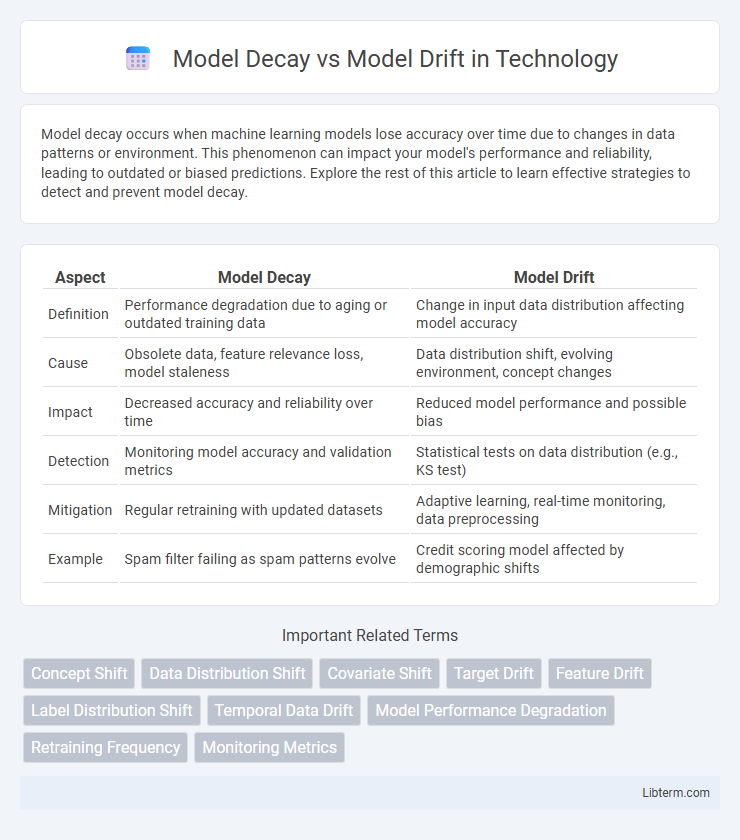

| Aspect | Model Decay | Model Drift |

|---|---|---|

| Definition | Performance degradation due to aging or outdated training data | Change in input data distribution affecting model accuracy |

| Cause | Obsolete data, feature relevance loss, model staleness | Data distribution shift, evolving environment, concept changes |

| Impact | Decreased accuracy and reliability over time | Reduced model performance and possible bias |

| Detection | Monitoring model accuracy and validation metrics | Statistical tests on data distribution (e.g., KS test) |

| Mitigation | Regular retraining with updated datasets | Adaptive learning, real-time monitoring, data preprocessing |

| Example | Spam filter failing as spam patterns evolve | Credit scoring model affected by demographic shifts |

Understanding Model Decay and Model Drift

Model decay refers to the gradual reduction in a machine learning model's predictive performance over time due to changes in underlying data distributions or environmental factors. Model drift specifically describes shifts in the statistical properties of input features (covariate drift) or relationships between inputs and outputs (concept drift) that cause the model to become less accurate. Understanding these phenomena is crucial for implementing effective monitoring, retraining, and adaptation strategies to maintain model reliability in dynamic real-world scenarios.

Key Differences Between Model Decay and Model Drift

Model decay refers to the gradual loss of model performance over time due to outdated data or changing underlying patterns, whereas model drift specifically describes the shift in data distribution that causes model predictions to become less accurate. Model decay encompasses both concept drift (changes in the relationship between input and output variables) and data drift (changes in input data distribution), while model drift mainly focuses on the data distribution changes alone. Understanding these distinctions helps in selecting appropriate monitoring and retraining strategies to maintain model accuracy in production environments.

Causes of Model Decay in Machine Learning

Model decay in machine learning primarily results from changes in data distribution over time, also known as covariate shift, where the input features evolve away from the original training data. External factors such as evolving user behavior, market trends, or sensor degradation lead to discrepancies between historical and current data patterns. Inadequate model retraining and failure to incorporate new data exacerbate performance degradation, distinguishing decay from model drift, which specifically involves changes in the relationship between input features and target variables.

Factors Leading to Model Drift

Model drift occurs when changes in data distribution cause a predictive model's performance to degrade over time, driven by factors such as evolving user behavior, seasonal trends, and external environmental shifts. Unlike model decay, which relates to the natural aging of model relevance, drift specifically results from statistical changes like feature distribution shifts, label distribution changes, or concept drift where the underlying relationship between inputs and targets evolves. Continuous monitoring of data patterns and retraining the model with updated datasets are essential to mitigate model drift and maintain predictive accuracy.

Real-World Examples of Model Decay

Model decay occurs when a machine learning model's performance deteriorates over time due to changes in the underlying data distribution, as seen in credit scoring systems where evolving economic conditions render earlier models less accurate. A prominent real-world example includes fraud detection models that become less effective as fraudsters develop new tactics, causing a gradual decline in detection rates. Retail demand forecasting models also experience decay when shifts in consumer behavior or market trends are not promptly incorporated, leading to inventory mismanagement and lost sales opportunities.

Detecting and Monitoring Model Drift

Detecting and monitoring model drift involves continuously tracking changes in data distribution and model performance metrics to identify deviations from original training conditions. Techniques such as statistical hypothesis testing, data drift detectors, and performance degradation thresholds enable early identification of drift, allowing timely model retraining or adjustment. Leveraging real-time analytics and automated alerts enhances proactive management of model decay, ensuring sustained accuracy and reliability in dynamic environments.

Impact of Model Decay on Predictive Performance

Model decay significantly reduces predictive performance by causing the model to deliver increasingly inaccurate outputs as the underlying data distributions change over time. This degradation occurs because the model's learned patterns become outdated, leading to errors and decreased reliability in predictions. Continuous monitoring and retraining are essential to mitigate the negative impact of model decay on forecast accuracy and decision-making processes.

Strategies to Mitigate Model Drift

Strategies to mitigate model drift include continuous monitoring of model performance using real-time data and automated alerts that detect deviations from expected behavior. Implementing retraining pipelines with up-to-date datasets ensures models adapt to new patterns while leveraging incremental learning techniques to reduce downtime. Leveraging robust feature engineering and validation frameworks also helps maintain model accuracy by addressing changing data distributions and concept drift.

Best Practices for Managing Model Decay and Drift

Regular monitoring of predictive performance metrics and retraining models with updated data are essential best practices for managing model decay and drift. Implementing automated alerts for significant deviations in model outputs enables timely identification of drift, while maintaining version control and documentation ensures transparency in model updates. Leveraging adaptive learning techniques and continuous evaluation frameworks further stabilizes model accuracy and responsiveness to changing data patterns.

Future Trends in Addressing Model Decay and Drift

Future trends in addressing model decay and drift emphasize the integration of continuous learning systems and real-time data monitoring to enhance adaptive capabilities. Advances in automated retraining frameworks and explainable AI enable quicker detection and mitigation of performance degradation in deployed models. The growth of edge computing and federated learning methods further supports decentralized, privacy-preserving updates to combat drift in complex, evolving data environments.

Model Decay Infographic

libterm.com

libterm.com