A load balancer distributes incoming network traffic across multiple servers to improve reliability and make better use of resources. It keeps applications responsive and available by preventing any single server from becoming overwhelmed. This article compares load balancers with service meshes, which manage traffic between the services inside an application, and explains how each one contributes to your system's scalability and resilience.

Table of Comparison

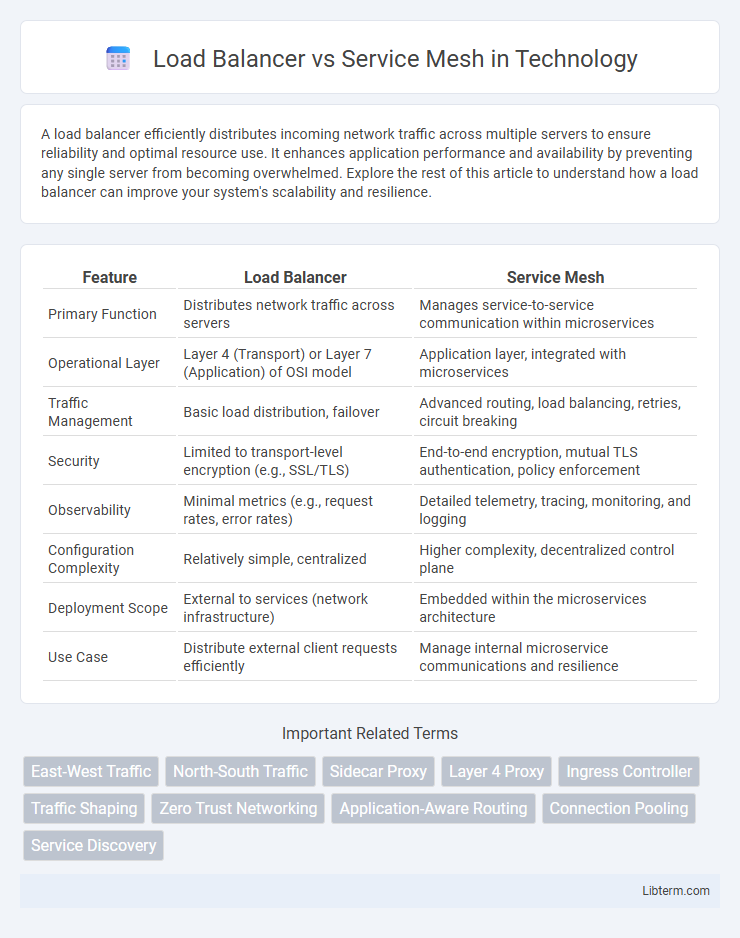

| Feature | Load Balancer | Service Mesh |
|---|---|---|
| Primary Function | Distributes network traffic across servers | Manages service-to-service communication within microservices |
| Operational Layer | Layer 4 (Transport) or Layer 7 (Application) of the OSI model | Mainly Layer 7, with Layer 4 support, through proxies deployed alongside services |
| Traffic Management | Load distribution, health checks, failover | Advanced routing, load balancing, retries, circuit breaking |
| Security | Mainly TLS termination, plus network-level controls such as IP allowlists | Mutual TLS between services, identity-based authorization, policy enforcement |
| Observability | Basic metrics (e.g., request rates, error rates) | Detailed telemetry, distributed tracing, metrics, and logging |
| Configuration Complexity | Relatively simple, centralized | Higher complexity; a centralized control plane configures a distributed data plane of proxies |
| Deployment Scope | External to services (network infrastructure) | Embedded within the microservices architecture |
| Use Case | Distribute external client requests efficiently | Manage internal microservice communication and resilience |

Introduction to Load Balancers and Service Mesh

Load balancers distribute network or application traffic across multiple servers to keep services reliable and responsive, preventing server overload and making better use of resources. Service meshes provide a dedicated infrastructure layer for service-to-service communication, offering traffic routing, load balancing, security, and observability within microservices architectures. Load balancers focus mainly on spreading incoming client traffic across servers, whereas service meshes give granular control over communication between the services inside a distributed application.

Core Functions: Load Balancer vs Service Mesh

Load balancers manage traffic distribution across servers to provide availability, scalability, and fault tolerance, sending each client request to a server chosen by a policy such as round robin, least connections, or proximity to the client. Service meshes handle inter-service communication within microservices architectures, providing service discovery, load balancing, traffic routing, security (mutual TLS, or mTLS), and observability through sidecar proxies. In short, load balancers optimize traffic entering the system, while service meshes add fine-grained control and telemetry to service-to-service interactions.
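How a load balancer chooses a server depends on its balancing policy. The sketch below models two common policies, round robin and least connections, as plain Python classes. It assumes an in-memory list of backends with a connection counter; the class names and server names are illustrative, not taken from any particular load balancer product.

```python
import itertools
from dataclasses import dataclass


@dataclass
class Backend:
    """A backend server as seen by the balancer (illustrative model)."""
    name: str
    active_connections: int = 0


class RoundRobinBalancer:
    """Hands out backends in a fixed rotating order."""

    def __init__(self, backends):
        self._cycle = itertools.cycle(backends)

    def pick(self):
        return next(self._cycle)


class LeastConnectionsBalancer:
    """Picks the backend currently serving the fewest connections."""

    def __init__(self, backends):
        self.backends = list(backends)

    def pick(self):
        # min() returns the first minimum it finds, so ties go to list order.
        chosen = min(self.backends, key=lambda b: b.active_connections)
        chosen.active_connections += 1  # the new request now holds a connection
        return chosen

    def release(self, backend):
        # Called when a request finishes so the counter stays accurate.
        backend.active_connections -= 1


# Usage with hypothetical server names.
servers = [Backend("app-1"), Backend("app-2"), Backend("app-3")]
rr = RoundRobinBalancer(servers)
print([rr.pick().name for _ in range(4)])  # ['app-1', 'app-2', 'app-3', 'app-1']

busy = [Backend("app-1", 4), Backend("app-2", 1), Backend("app-3", 2)]
lc = LeastConnectionsBalancer(busy)
print(lc.pick().name)  # app-2
```

Round robin needs no load information, which suits backends of similar capacity. Least connections adapts when some requests take much longer than others, but it depends on the balancer knowing when connections close (the `release` call here).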

Key Differences in Architecture

Load balancers distribute network traffic across multiple servers to improve reliability and performance, operating at the transport layer (Layer 4) or the application layer (Layer 7). Service meshes manage service-to-service communication within microservices architectures, providing traffic routing, security, and observability through sidecar proxies that run alongside each service instance. The key architectural difference is that a load balancer is a centralized traffic distributor sitting in the request path, while a service mesh places distributed proxies next to the services and configures them from a central control plane.
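The split between a central control plane and per-instance proxies can be modeled in a few lines. This is a toy sketch under stated assumptions: `ControlPlane` and `Sidecar` are hypothetical classes, endpoints are plain address strings, and configuration is pushed synchronously in-process. Real meshes do this over the network; Istio, for example, pushes configuration to its Envoy proxies through Envoy's xDS APIs.

```python
class Sidecar:
    """Proxy that runs next to one service instance (hypothetical model)."""

    def __init__(self, service):
        self.service = service
        self.endpoints = {}  # local copy of routing data: service name -> addresses
        self._cursor = {}    # round-robin position per destination

    def apply_config(self, registry):
        # Replace the local view with the control plane's latest snapshot.
        self.endpoints = {svc: list(addrs) for svc, addrs in registry.items()}
        self._cursor = {}

    def route(self, destination):
        # The routing decision is made locally; there is no central hop in the data path.
        addrs = self.endpoints.get(destination)
        if not addrs:
            raise LookupError(f"no endpoints known for {destination!r}")
        i = self._cursor.get(destination, 0) % len(addrs)
        self._cursor[destination] = i + 1
        return addrs[i]


class ControlPlane:
    """Configures every sidecar but never forwards application traffic itself."""

    def __init__(self):
        self.registry = {}
        self.sidecars = []

    def register(self, sidecar):
        self.sidecars.append(sidecar)
        sidecar.apply_config(self.registry)

    def set_endpoints(self, service, addrs):
        self.registry[service] = list(addrs)
        for sidecar in self.sidecars:  # push the updated view to every proxy
            sidecar.apply_config(self.registry)


# Usage with hypothetical service names and addresses.
control_plane = ControlPlane()
frontend_proxy = Sidecar("frontend")
control_plane.register(frontend_proxy)
control_plane.set_endpoints("orders", ["10.0.0.5:8080", "10.0.0.6:8080"])
print(frontend_proxy.route("orders"))  # 10.0.0.5:8080
print(frontend_proxy.route("orders"))  # 10.0.0.6:8080
```

The control plane sits outside the request path: if it pauses, each proxy keeps routing with its last known configuration, whereas a centralized load balancer handles every request itself.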

Traffic Management Capabilities

Load balancers primarily distribute incoming network traffic across multiple servers to ensure high availability and reliability, optimizing resource use and minimizing response times. Service meshes provide advanced traffic management features beyond load balancing, such as fine-grained routing, traffic splitting, fault injection, and observability at the service-to-service communication level within microservices architectures. Combining service mesh capabilities with traditional load balancers enables precise control over traffic flow, enhanced security policies, and improved resilience in complex cloud-native environments.
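Traffic splitting, such as sending 10% of requests to a canary version, comes down to weighted random selection. The following is a minimal sketch assuming integer weights per version; the subset names `v1` and `v2` and the `pick_subset` function are hypothetical. In a mesh, the equivalent rule is declared in configuration (Istio's `VirtualService`, for instance, accepts a weight per route destination) and applied by each proxy.

```python
import random
from collections import Counter


def pick_subset(weights, rng):
    """Choose a destination subset with probability proportional to its weight.

    weights: mapping of subset name -> non-negative integer weight,
    e.g. {"v1": 90, "v2": 10} for a 90/10 canary split.
    """
    total = sum(weights.values())
    if total <= 0:
        raise ValueError("weights must sum to a positive number")
    roll = rng.randrange(total)  # uniform integer in [0, total)
    for subset, weight in weights.items():
        if roll < weight:
            return subset
        roll -= weight  # move past this subset's slice of the range
    raise AssertionError("unreachable: roll is always below the total weight")


rng = random.Random(7)  # seeded only to make the demo repeatable
counts = Counter(pick_subset({"v1": 90, "v2": 10}, rng) for _ in range(10_000))
print(counts)  # expect about 90% v1 and 10% v2
```

Shifting more traffic to the canary is then a configuration change (for example 50/50, then 0/100) rather than a redeployment.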

Scalability and Performance Impacts

Load balancers improve scalability by spreading traffic across multiple servers so that no single resource is overloaded, which keeps application performance steady under high demand. Service meshes offer fine-grained control over service-to-service communication, with dynamic routing, application-layer load balancing, and observability that help microservices scale and reduce latency. Load balancers excel at distributing external traffic, while service meshes provide scalable internal traffic management and resilience; used together, they improve overall throughput and responsiveness.
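Circuit breaking, listed among the service mesh traffic features in the comparison table, is one of the mechanisms behind this resilience: after repeated failures, callers stop sending requests to an unhealthy upstream for a while instead of piling on. The following is a simplified, single-threaded sketch of the pattern, not how any specific proxy implements it; the `CircuitBreaker` class, its thresholds, and its state names are assumptions. Envoy, for instance, expresses circuit breaking as connection and request limits combined with outlier detection rather than this exact state machine.

```python
import time


class CircuitOpenError(RuntimeError):
    """Raised when a call is rejected because the circuit is open."""


class CircuitBreaker:
    """States: "closed" (calls pass), "open" (calls rejected), "half_open" (trial call)."""

    def __init__(self, failure_threshold=3, reset_timeout=5.0, clock=time.monotonic):
        self.failure_threshold = failure_threshold  # consecutive failures before opening
        self.reset_timeout = reset_timeout          # seconds to wait before a trial call
        self.clock = clock
        self.failures = 0
        self.opened_at = None
        self.state = "closed"

    def call(self, func, *args, **kwargs):
        if self.state == "open":
            if self.clock() - self.opened_at >= self.reset_timeout:
                self.state = "half_open"  # let one trial request through
            else:
                raise CircuitOpenError("upstream not called: circuit is open")
        try:
            result = func(*args, **kwargs)
        except Exception:
            self._record_failure()
            raise
        # Any success closes the circuit and resets the failure count.
        self.failures = 0
        self.state = "closed"
        return result

    def _record_failure(self):
        self.failures += 1
        if self.state == "half_open" or self.failures >= self.failure_threshold:
            self.state = "open"
            self.opened_at = self.clock()
```

Rejecting calls immediately while the circuit is open gives the failing service time to recover and keeps callers from tying up threads or connections waiting on it.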

Security Features Comparison

Load balancers provide basic security features such as TLS termination, DDoS protection, and IP allowlisting to protect incoming traffic. Service meshes add mutual TLS authentication, fine-grained access control, and policy enforcement at the application layer, so that communication between microservices is both encrypted and authorized. Service meshes also expose more security telemetry than load balancers, which supports threat detection and compliance monitoring in complex service architectures.
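With mutual TLS, both sides present certificates, so the receiving proxy knows which workload is calling and can apply an access rule to that identity. The sketch below shows such a default-deny check, assuming identities in the SPIFFE URI form Istio uses (`spiffe://<trust-domain>/ns/<namespace>/sa/<service-account>`). The `POLICY` table, the service names, and the `is_allowed` function are hypothetical, and certificate verification is assumed to have already happened during the TLS handshake.

```python
from urllib.parse import urlparse

# Hypothetical policy: destination service -> caller identities allowed to reach it.
POLICY = {
    "orders": {"spiffe://cluster.local/ns/shop/sa/frontend"},
    "payments": {"spiffe://cluster.local/ns/shop/sa/orders"},
}


def is_allowed(peer_identity, destination, policy=POLICY, trust_domain="cluster.local"):
    """Default-deny check a destination's proxy could run after the mTLS handshake.

    peer_identity: identity taken from the verified client certificate.
    """
    parsed = urlparse(peer_identity)
    if parsed.scheme != "spiffe" or parsed.netloc != trust_domain:
        return False  # not a SPIFFE ID from our trust domain
    # Services without an entry allow nobody.
    return peer_identity in policy.get(destination, set())


print(is_allowed("spiffe://cluster.local/ns/shop/sa/frontend", "orders"))    # True
print(is_allowed("spiffe://cluster.local/ns/shop/sa/frontend", "payments"))  # False
```

Because the check is keyed on workload identity rather than IP address, it keeps working as pods are rescheduled and their addresses change.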

Observability and Monitoring Tools

Load balancers provide basic health checks and metrics for server availability. Service meshes offer far more comprehensive observability, including detailed telemetry, distributed tracing, and fine-grained metrics for each microservice. They integrate with tools such as Prometheus, Grafana, and Jaeger to give real-time insight into service-to-service communication, latency, and error rates. Mesh observability also covers network policies, security enforcement, and resilience patterns, well beyond the scope of traditional load balancer monitoring.
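Distributed tracing works by passing a trace context header from hop to hop so that spans from different services can be joined into one trace. The following minimal sketch shows what happens at each hop, using the W3C Trace Context `traceparent` header (`version-traceid-parentid-flags`, all lowercase hex); the function names are hypothetical and only version `00` is handled. Sidecar proxies can create and report spans, but the application generally still has to copy incoming trace headers onto its outbound requests, which is the step this function models.

```python
import re
import secrets

# traceparent for version 00: 2-hex version, 32-hex trace ID, 16-hex parent ID, 2-hex flags.
TRACEPARENT_RE = re.compile(r"^00-([0-9a-f]{32})-([0-9a-f]{16})-([0-9a-f]{2})$")


def _random_hex(nbytes):
    """Random lowercase hex ID; all-zero IDs are invalid, so redraw in that case."""
    while True:
        value = secrets.token_hex(nbytes)
        if int(value, 16) != 0:
            return value


def child_traceparent(incoming=None):
    """Build the traceparent header to send to the next service.

    Keeps the caller's trace ID so every hop joins the same trace, and
    uses a fresh span ID for this hop as the new parent ID. Starts a new
    trace (with the sampled flag set, an assumption) if the incoming
    header is missing or malformed.
    """
    match = TRACEPARENT_RE.match(incoming or "")
    if match and int(match.group(1), 16) != 0 and int(match.group(2), 16) != 0:
        trace_id, flags = match.group(1), match.group(3)
    else:
        trace_id, flags = _random_hex(16), "01"
    return f"00-{trace_id}-{_random_hex(8)}-{flags}"


# Example header from the W3C Trace Context specification.
incoming = "00-4bf92f3577b34da6a3ce929d0e0e4736-00f067aa0ba902b7-01"
print(child_traceparent(incoming))  # same trace ID, new 16-hex parent ID
```

A tracing backend such as Jaeger can then group every span that shares the trace ID and order them by their parent IDs.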

Use Cases: When to Choose Load Balancer or Service Mesh

Load balancers are ideal for distributing incoming network traffic across multiple servers, ensuring high availability and scalability in scenarios like web applications and traditional client-server models. Service meshes are better suited for microservices architectures, providing fine-grained control over service-to-service communication, security, and observability. Choose load balancers for simple traffic routing needs and service meshes when advanced features like service discovery, traffic shaping, and telemetry are required.

Integration with Modern Cloud-Native Environments

Load balancers efficiently distribute incoming network traffic across multiple servers, ensuring high availability and scalability in cloud-native environments. Service meshes provide advanced microservices management by handling service-to-service communication, observability, and security through sidecar proxies within containerized infrastructures. Integration with Kubernetes and other orchestration platforms enhances both technologies' capabilities to support dynamic scaling, resiliency, and fine-grained traffic control in modern cloud-native applications.

Conclusion: Selecting the Right Solution

Choosing between a load balancer and a service mesh depends on the complexity and scale of your microservices architecture. Load balancers efficiently distribute network traffic at the transport or application layer, optimizing resource utilization and response time for straightforward deployments. Service meshes provide granular service-to-service communication management, security, observability, and resilience features essential for large-scale, dynamic microservices environments requiring advanced traffic control and monitoring.

libterm.com