Throughput is the rate at which data is successfully transmitted over a network or processed by a system, and it is a central measure of performance and efficiency. Higher throughput shortens transfer times and improves the user experience in applications ranging from streaming to cloud computing, although it does not by itself reduce latency. The sections below compare throughput with jitter and describe how to measure and optimize both.

Table of Comparison

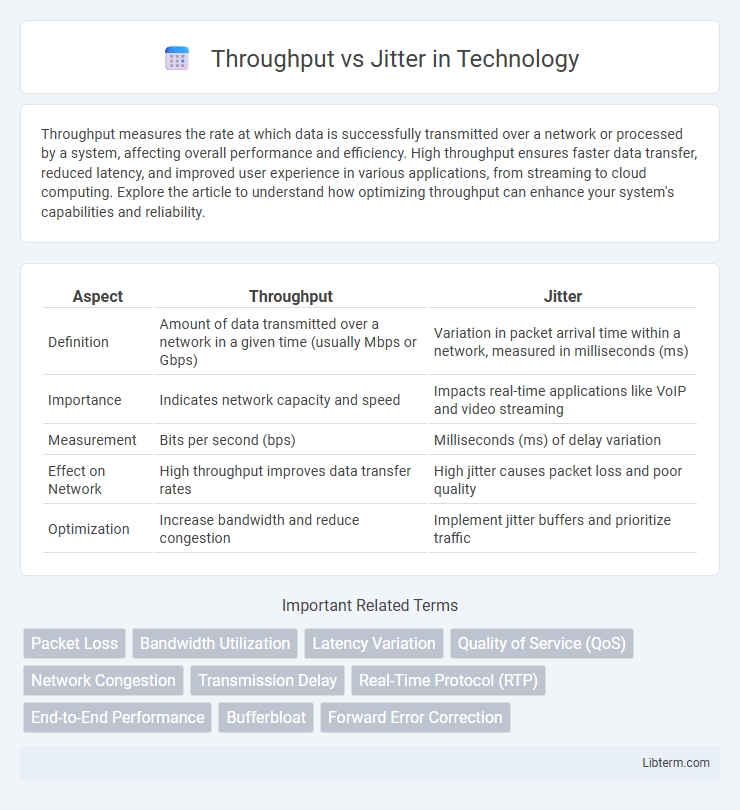

| Aspect | Throughput | Jitter |
|---|---|---|
| Definition | Amount of data successfully transmitted over a network in a given time | Variation in packet arrival time within a network |
| Importance | Indicates how much of the network's capacity is actually delivered | Impacts real-time applications like VoIP and video streaming |
| Measurement | Bits per second (bps), usually quoted in Mbps or Gbps | Milliseconds (ms) of delay variation |
| Effect on Network | High throughput shortens data transfer times | High jitter causes late packets to be discarded and degrades audio and video quality |
| Optimization | Increase bandwidth and reduce congestion | Implement jitter buffers and prioritize traffic |

Understanding Throughput: Definition and Importance

Throughput refers to the rate at which data is successfully transmitted over a network, typically measured in bits per second (bps). It is a critical metric for evaluating network performance, and it matters most for applications such as streaming, gaming, and large file downloads. Throughput is bounded by the link's bandwidth and is usually lower in practice because of protocol overhead, congestion, and retransmissions, so high throughput means the available bandwidth is being used effectively.
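As a rough illustration of the definition above, throughput can be computed by dividing the bits delivered by the time taken. The sketch below uses hypothetical helper names (`throughput_bps`, `format_rate`) and an invented example transfer; it is not tied to any particular tool.

```python
def throughput_bps(payload_bytes: int, elapsed_seconds: float) -> float:
    """Return throughput in bits per second for a completed transfer."""
    if elapsed_seconds <= 0:
        raise ValueError("elapsed_seconds must be positive")
    return payload_bytes * 8 / elapsed_seconds


def format_rate(bps: float) -> str:
    """Format a bit rate with decimal (SI) prefixes, as network rates are usually quoted."""
    for unit, scale in (("Gbps", 1e9), ("Mbps", 1e6), ("kbps", 1e3)):
        if bps >= scale:
            return f"{bps / scale:.2f} {unit}"
    return f"{bps:.0f} bps"


# Hypothetical transfer: 250 MB (decimal megabytes) delivered in 20 seconds.
rate = throughput_bps(250_000_000, 20.0)
print(format_rate(rate))  # prints "100.00 Mbps"
```

Note the factor of 8: file sizes are normally given in bytes, while network rates are quoted in bits per second.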

What is Jitter? Key Concepts Explained

Jitter refers to the variation in packet arrival times in a network, causing irregular delays that impact data transmission quality. Unlike throughput, which measures the amount of data successfully delivered over a network per second, jitter focuses on the consistency and timing of these packets. High jitter can disrupt real-time applications like VoIP and online gaming, leading to choppy audio or lag.

Differences Between Throughput and Jitter

Throughput measures the amount of data successfully transmitted over a network in a given time, reflecting network capacity and efficiency, while jitter quantifies the variation in packet arrival times, impacting real-time communications like VoIP and video streaming. Throughput evaluates network speed and volume, whereas jitter assesses the consistency and stability of packet delivery. High throughput indicates efficient data transfer, but excessive jitter can cause delays and disruptions despite sufficient bandwidth.
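The distinction can be made concrete with two invented packet traces that deliver the same amount of data in the same time but with very different timing. This is a minimal sketch: the fixed packet size, the arrival times, and the choice of standard deviation of inter-arrival gaps as the jitter figure are all assumptions made for illustration.

```python
from statistics import pstdev

PACKET_BYTES = 1_000  # assumed fixed packet size


def summarize(arrivals_ms):
    """Return (throughput in bps, std dev of inter-arrival gaps in ms)."""
    gaps = [b - a for a, b in zip(arrivals_ms, arrivals_ms[1:])]
    duration_ms = arrivals_ms[-1] - arrivals_ms[0]
    # Count the packets that arrived during the measured window.
    bits = PACKET_BYTES * 8 * (len(arrivals_ms) - 1)
    return bits * 1000 / duration_ms, pstdev(gaps)


steady = [0, 20, 40, 60, 80, 100]   # one packet every 20 ms
bursty = [0, 5, 45, 50, 95, 100]    # same packets, uneven spacing

for name, trace in (("steady", steady), ("bursty", bursty)):
    bps, jitter_ms = summarize(trace)
    print(f"{name}: {bps:.0f} bps, jitter {jitter_ms:.2f} ms")
```

Both traces work out to 400 kbps, yet only the steady one has zero variation in spacing. A throughput figure alone would not distinguish them.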

How Throughput Affects Network Performance

Throughput directly impacts network performance because it is the rate at which data is actually delivered, which sets the pace for any application that relies on a consistent data flow. Insufficient throughput creates bottlenecks, causing delays and a reduced quality of experience in bandwidth-intensive services like video streaming or online gaming. Adequate throughput keeps queues short and transfers quick, which improves overall network responsiveness.

The Impact of Jitter on Real-Time Applications

Jitter, the variability in packet delay over a network, critically affects real-time applications such as VoIP, video conferencing, and online gaming, where consistent data flow is essential. When jitter is high, packets arrive too late for playback or out of order, and the receiver has to discard them; the result is degraded audio and video quality, interruptions, and a poor user experience. Throughput measures the total data transmitted successfully, but even with high throughput, excessive jitter disrupts the smooth delivery required for real-time communications.
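The effect on playback can be sketched with a simplified fixed jitter buffer. In the model below, which is an assumption for illustration rather than how any specific VoIP stack works, packet `i` is scheduled to play at `first_arrival + buffer_ms + i * interval_ms`, and any packet arriving after its slot counts as lost. The delay values are invented.

```python
def late_packets(arrivals_ms, interval_ms, buffer_ms):
    """Return indices of packets that miss their playout deadline.

    Packet i is due at arrivals_ms[0] + buffer_ms + i * interval_ms.
    """
    start = arrivals_ms[0] + buffer_ms
    return [i for i, t in enumerate(arrivals_ms) if t > start + i * interval_ms]


INTERVAL_MS = 20                             # one packet sent every 20 ms
delays_ms = [50, 52, 49, 95, 51, 70, 50]     # invented one-way delays
arrivals = [i * INTERVAL_MS + d for i, d in enumerate(delays_ms)]

for buffer_ms in (0, 20, 60):
    print(buffer_ms, late_packets(arrivals, INTERVAL_MS, buffer_ms))
# prints:
# 0 [1, 3, 4, 5]
# 20 [3]
# 60 []
```

A deeper buffer absorbs more delay variation but adds the same amount of delay to every packet, which is why real-time applications cannot simply buffer their way out of high jitter.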

Measuring Throughput: Tools and Techniques

Measuring throughput involves analyzing the data transfer rate over a network, typically quantified in bits per second (bps). Active tools such as iPerf generate controlled test traffic between two endpoints, while passive tools such as Wireshark (packet capture) and NetFlow (flow records exported by network devices) observe existing traffic. Combining controlled tests with monitoring of live data streams helps identify bottlenecks and confirm that performance stays consistent. Accurate throughput measurement is critical for optimizing bandwidth utilization and improving overall network efficiency.
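One common passive approach is to sample a cumulative byte counter at intervals and convert the differences into rates. The sketch below assumes the samples are already available as `(timestamp_seconds, cumulative_bytes)` pairs; the function name and the sample values are hypothetical, and no particular tool's output format is implied.

```python
def interval_throughput(samples):
    """Convert (timestamp_seconds, cumulative_bytes) samples to bps per interval."""
    rates = []
    for (t0, b0), (t1, b1) in zip(samples, samples[1:]):
        if t1 <= t0:
            raise ValueError("timestamps must be strictly increasing")
        if b1 < b0:
            raise ValueError("byte counter went backwards (reset or wrap)")
        rates.append((b1 - b0) * 8 / (t1 - t0))
    return rates


# Invented counter readings taken once per second.
samples = [(0, 0), (1, 1_250_000), (2, 2_000_000), (3, 3_250_000)]
rates = interval_throughput(samples)
print([f"{r / 1e6:.1f} Mbps" for r in rates])
# prints ['10.0 Mbps', '6.0 Mbps', '10.0 Mbps']
```

Per-interval rates expose the dip in the second interval, which a single average over the whole window (about 8.7 Mbps here) would hide.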

Jitter Measurement Methods and Best Practices

Jitter measurement methods primarily include packet delay variation analysis, comparison of send and receive timestamps, and statistical metrics such as the mean deviation and standard deviation of inter-arrival times. Best practices involve using high-resolution timestamping tools and synchronized clocks so that latency fluctuations affecting real-time applications are detected accurately. Consistent monitoring, combined with network simulation, helps identify jitter patterns and guides corrective measures that keep real-time traffic stable.
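Two of these metrics can be sketched in a few lines. The first function follows the running interarrival jitter estimator described in RFC 3550 (the RTP specification), which smooths the absolute change in transit time with a gain of 1/16; the second is the plain standard deviation of inter-arrival gaps. The timestamps are invented, and the function names are hypothetical.

```python
from statistics import pstdev


def rfc3550_jitter(send_ms, recv_ms):
    """Running jitter estimate in the style of RFC 3550, in the input time unit."""
    jitter = 0.0
    for i in range(1, len(send_ms)):
        # Change in transit time between consecutive packets.
        d = (recv_ms[i] - recv_ms[i - 1]) - (send_ms[i] - send_ms[i - 1])
        jitter += (abs(d) - jitter) / 16
    return jitter


def interarrival_stddev(recv_ms):
    """Standard deviation of the gaps between consecutive arrivals."""
    gaps = [b - a for a, b in zip(recv_ms, recv_ms[1:])]
    return pstdev(gaps)


send = [0, 20, 40, 60, 80]       # invented send timestamps (ms)
recv = [50, 72, 89, 115, 131]    # invented receive timestamps (ms)

print(f"RFC 3550 style jitter: {rfc3550_jitter(send, recv):.3f} ms")
print(f"Inter-arrival std dev: {interarrival_stddev(recv):.3f} ms")
```

Because the estimator works on differences between consecutive packets, a constant offset between sender and receiver clocks cancels out; clock drift does not, which is one reason synchronized clocks are still recommended.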

Common Causes of High Jitter and Low Throughput

High jitter and low throughput often stem from network congestion, where excessive traffic overwhelms routers and switches and packets wait in queues of varying length. Packet loss due to faulty hardware or poor wireless signal quality adds to the problem, because retransmissions delay delivery and reduce the effective data rate. Insufficient bandwidth allocation and improper Quality of Service (QoS) settings degrade overall network performance further, leading to unstable connections and slower throughput.

Optimizing Networks for Minimal Jitter and Maximum Throughput

Optimizing networks for minimal jitter and maximum throughput means keeping delay variation low without sacrificing transfer rate, which matters most for real-time applications like VoIP and video streaming. Techniques such as traffic shaping, Quality of Service (QoS) policies, and adaptive buffering prioritize latency-sensitive packets, reducing jitter while maintaining high throughput. Leveraging advanced network protocols and hardware acceleration further enhances data transmission efficiency, minimizing packet loss and stabilizing network performance under varying traffic conditions.
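The idea of prioritizing latency-sensitive packets can be sketched as a strict-priority queue. This is a toy model under stated assumptions: the class name `PriorityScheduler`, the two traffic classes, and the packet labels are all hypothetical, and real devices typically use more elaborate schedulers.

```python
import heapq
from itertools import count

VOICE, BULK = 0, 1  # lower number = higher priority (assumed classes)


class PriorityScheduler:
    """Strict-priority queue: always sends the highest-priority packet first."""

    def __init__(self):
        self._heap = []
        self._order = count()  # tie-breaker keeps FIFO order within a class

    def enqueue(self, packet, priority):
        heapq.heappush(self._heap, (priority, next(self._order), packet))

    def dequeue(self):
        return heapq.heappop(self._heap)[2]

    def __len__(self):
        return len(self._heap)


sched = PriorityScheduler()
for packet, prio in [("bulk-1", BULK), ("voice-1", VOICE),
                     ("bulk-2", BULK), ("voice-2", VOICE)]:
    sched.enqueue(packet, prio)

sent = [sched.dequeue() for _ in range(len(sched))]
print(sent)  # prints ['voice-1', 'voice-2', 'bulk-1', 'bulk-2']
```

Voice packets skip ahead of queued bulk traffic, so their waiting time varies less. Strict priority can starve lower classes under sustained load, which is why weighted scheduling is often preferred in practice.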

Throughput vs. Jitter: Real-World Case Studies

Throughput measures the amount of data successfully transmitted over a network per unit time, while jitter refers to the variation in packet delay that affects real-time communication quality. Experience with VoIP and online gaming shows that high throughput does not guarantee low jitter, because network congestion and routing issues can still cause significant delay variability on a fast link. Optimizing both throughput and jitter is therefore essential for smooth performance in latency-sensitive applications, and it calls for balanced network management strategies.


libterm.com
