Container orchestration automates the deployment, scaling, and management of containerized applications, ensuring efficient resource utilization and high availability. It simplifies complex tasks like load balancing, service discovery, and automatic failover across distributed systems. This article compares container orchestration with container scheduling and shows where each fits in cloud infrastructure and application delivery.

Table of Comparison

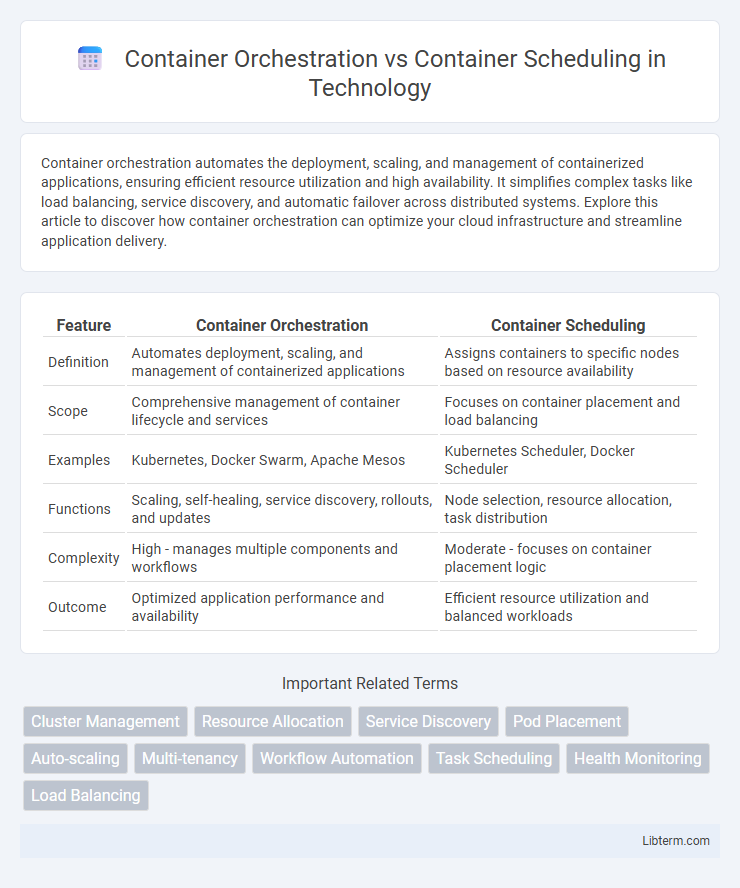

| Feature | Container Orchestration | Container Scheduling |
|---|---|---|
| Definition | Automates deployment, scaling, and management of containerized applications | Assigns containers to specific nodes based on resource availability |
| Scope | Comprehensive management of container lifecycle and services | Focuses on container placement and load balancing |
| Examples | Kubernetes, Docker Swarm, Apache Mesos | Kubernetes Scheduler, Docker Scheduler |
| Functions | Scaling, self-healing, service discovery, rollouts, and updates | Node selection, resource allocation, task distribution |
| Complexity | High - manages multiple components and workflows | Moderate - focuses on container placement logic |
| Outcome | Optimized application performance and availability | Efficient resource utilization and balanced workloads |

Introduction to Container Orchestration and Scheduling

Container orchestration automates the deployment, scaling, and management of containerized applications across clusters, ensuring efficient resource utilization and high availability. Container scheduling focuses specifically on assigning containers to appropriate nodes based on resource requirements, constraints, and policies to optimize workload distribution. Together, orchestration and scheduling make containerized workloads robust and scalable on platforms like Kubernetes and Docker Swarm.

Defining Container Orchestration

Container orchestration automates the deployment, scaling, and management of containerized applications across multiple hosts, ensuring high availability and efficient resource utilization. It goes beyond container scheduling by coordinating networking, service discovery, load balancing, and storage integration within a distributed environment. Leading orchestration platforms like Kubernetes enable dynamic scaling, self-healing, and rolling updates to streamline complex container workflows.
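As a rough illustration of dynamic scaling, the sketch below applies a proportional rule: the replica count is scaled by the ratio of observed load to target load and then clamped to a range. This is similar in spirit to the formula documented for the Kubernetes Horizontal Pod Autoscaler, but the function name, parameters, and default bounds here are assumptions made for the example, not any platform's API.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, min_replicas: int = 1,
                     max_replicas: int = 10) -> int:
    """Proportional scaling: grow or shrink replicas by observed/target load."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    # Round up so the scaled deployment is never under-provisioned.
    raw = math.ceil(current_replicas * current_metric / target_metric)
    # Clamp to the allowed range (hypothetical bounds for this sketch).
    return max(min_replicas, min(max_replicas, raw))


if __name__ == "__main__":
    print(desired_replicas(4, 90.0, 60.0))
```

With 4 replicas at 90% average utilization and a 60% target, this rule asks for 6 replicas.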

What is Container Scheduling?

Container scheduling is the process of assigning containers to specific nodes within a cluster to optimize resource utilization and ensure application performance. It involves evaluating node capacity, workload requirements, and policies to determine the best placement for each container. Effective container scheduling improves scalability, availability, and reliability in containerized environments.
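The evaluation described above can be sketched as a two-step "filter, then score" procedure: discard nodes that cannot run the container, then rank the rest. The `Node` and `ContainerRequest` types, the label-matching policy, and the headroom-based score are all hypothetical choices for this example; real schedulers use richer criteria.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Node:
    name: str
    cpu_free: float                 # free CPU cores
    mem_free: int                   # free memory in MiB
    labels: dict = field(default_factory=dict)


@dataclass
class ContainerRequest:
    name: str
    cpu: float
    mem: int
    required_labels: dict = field(default_factory=dict)


def feasible(node: Node, req: ContainerRequest) -> bool:
    """Filter step: enough capacity, and every required label matches."""
    fits = node.cpu_free >= req.cpu and node.mem_free >= req.mem
    matches = all(node.labels.get(k) == v for k, v in req.required_labels.items())
    return fits and matches


def _fraction_left(free: float, requested: float) -> float:
    return (free - requested) / free if free > 0 else 0.0


def score(node: Node, req: ContainerRequest) -> float:
    """Score step: average fraction of free CPU and memory left after placement."""
    return (_fraction_left(node.cpu_free, req.cpu)
            + _fraction_left(node.mem_free, req.mem)) / 2


def pick_node(nodes: list, req: ContainerRequest) -> Optional[Node]:
    """Return the highest-scoring feasible node, or None if nothing fits."""
    candidates = [n for n in nodes if feasible(n, req)]
    if not candidates:
        return None
    return max(candidates, key=lambda n: score(n, req))
```

Scoring by remaining headroom favors lightly loaded nodes; a different policy would only need a different `score` function.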

Key Differences Between Orchestration and Scheduling

Container orchestration manages the entire lifecycle of containers, including deployment, scaling, and networking, while container scheduling specifically focuses on placing containers on the most suitable nodes based on resource availability. Orchestration platforms like Kubernetes provide automated scheduling as a subset of their broader functionality, integrating monitoring, load balancing, and recovery processes. Scheduling optimizes resource utilization and workload distribution, but orchestration ensures overall system coordination and health management across distributed environments.

Core Components of Container Orchestration Systems

Container orchestration systems manage the deployment, scaling, and operations of containerized applications using core components such as the scheduler, cluster manager, and API server. The scheduler assigns containers to nodes based on resource availability and policies, while the cluster manager monitors the health and status of nodes to maintain desired application states. API servers provide a centralized control interface for users to interact with the orchestration system and automate container lifecycle management.
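Maintaining a desired application state is often described as a reconciliation loop: compare what should be running with what is running, and emit corrective actions. The sketch below shows one pass of such a loop under simplified assumptions; the `reconcile` function, the state dictionaries, and the `("start", ...)` / `("stop", ...)` action tuples are hypothetical and do not correspond to any specific orchestrator's API.

```python
from __future__ import annotations


def reconcile(desired: dict[str, int],
              running: dict[str, list[str]]) -> list[tuple[str, str]]:
    """Return the actions needed to move the observed state toward the desired state.

    desired maps an application name to its wanted replica count.
    running maps an application name to the IDs of its live containers.
    """
    actions: list[tuple[str, str]] = []
    for app, want in desired.items():
        have = running.get(app, [])
        if len(have) < want:
            # Too few replicas (for example after a node failure): start more.
            actions += [("start", app)] * (want - len(have))
        elif len(have) > want:
            # Too many replicas: stop the surplus containers.
            actions += [("stop", cid) for cid in have[want:]]
    for app, have in running.items():
        if app not in desired:
            # The application is no longer declared: stop everything it runs.
            actions += [("stop", cid) for cid in have]
    return actions


if __name__ == "__main__":
    desired = {"web": 3, "worker": 1}
    running = {"web": ["web-1"], "worker": ["worker-1", "worker-2"], "old": ["old-1"]}
    for action in reconcile(desired, running):
        print(action)
```

In this picture the API server would be where `desired` is declared, and each `("start", app)` action would be handed to the scheduler for placement.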

Common Container Scheduling Strategies

Common container scheduling strategies include bin packing, which maximizes resource utilization by filling nodes to capacity; spread scheduling, which distributes containers evenly across nodes to improve availability and fault tolerance; and priority-based scheduling, which allocates resources according to container importance or SLA requirements. Kubernetes, Docker Swarm, and Apache Mesos implement variants of these strategies to optimize cluster efficiency and application performance.
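The three strategies can be contrasted in a few lines. This is a minimal sketch that assumes a single abstract resource unit per node and a numeric priority per request; `Node`, `place_bin_pack`, `place_spread`, and `schedule` are hypothetical names for illustration, not the implementation used by any of the tools mentioned.

```python
from dataclasses import dataclass


@dataclass
class Node:
    name: str
    capacity: int      # abstract resource units
    used: int = 0

    @property
    def free(self) -> int:
        return self.capacity - self.used


def place_bin_pack(nodes, demand):
    """Bin packing: choose the feasible node with the least free capacity."""
    fits = [n for n in nodes if n.free >= demand]
    return min(fits, key=lambda n: n.free, default=None)


def place_spread(nodes, demand):
    """Spread: choose the feasible node with the most free capacity."""
    fits = [n for n in nodes if n.free >= demand]
    return max(fits, key=lambda n: n.free, default=None)


def schedule(nodes, requests, place):
    """Place (name, demand, priority) requests, highest priority first."""
    assignment = {}
    for name, demand, _priority in sorted(requests, key=lambda r: -r[2]):
        node = place(nodes, demand)
        if node is None:
            assignment[name] = None   # unschedulable with current capacity
            continue
        node.used += demand
        assignment[name] = node.name
    return assignment


if __name__ == "__main__":
    requests = [("a", 4, 1), ("b", 4, 1), ("c", 4, 5)]
    print(schedule([Node("n1", 10), Node("n2", 10)], requests, place_bin_pack))
    print(schedule([Node("n1", 10), Node("n2", 10)], requests, place_spread))
```

With two 10-unit nodes and three 4-unit requests, bin packing fills `n1` before touching `n2`, while spread alternates between the two; in both cases the high-priority request `c` is placed first.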

Popular Tools: Kubernetes, Docker Swarm, and Others

Kubernetes, Docker Swarm, and Apache Mesos are popular tools in container orchestration and scheduling, each offering unique capabilities for managing containerized applications. Kubernetes excels in automated container deployment, scaling, and load balancing with its robust scheduling algorithms and resource management features. Docker Swarm provides a simpler, integrated scheduling solution tailored for Docker environments, while Apache Mesos offers a flexible, multi-framework scheduler supporting large-scale distributed systems.

Use Cases for Orchestration vs. Scheduling

Container orchestration manages the deployment, scaling, and operation of containerized applications across clusters, making it well suited to complex microservices architectures that need automated load balancing and failover. Container scheduling assigns containers to specific nodes based on resource availability; on its own it suits smaller or static environments where the main goal is efficient resource utilization and workload distribution. Typical orchestration use cases include multi-cloud deployments and automated recovery, while scheduling addresses container placement within a single cluster.

Challenges and Considerations

Challenges in container orchestration include handling complex multi-container deployments, ensuring reliable service discovery, and maintaining resilience under dynamic workloads. Container scheduling must allocate resources effectively, balance load across nodes, and optimize for performance and cost, all of which are complicated by heterogeneous infrastructure and fluctuating demand.
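One way to see why heterogeneous infrastructure complicates load balancing: giving every node the same amount of work does not give every node the same utilization. The sketch below measures imbalance as the population standard deviation of per-node utilization; that metric, the function names, and the node sizes are assumptions chosen for illustration.

```python
from statistics import pstdev


def utilization(used: dict, capacity: dict) -> dict:
    """Fraction of each node's capacity that is in use."""
    return {name: used[name] / capacity[name] for name in capacity}


def imbalance(util: dict) -> float:
    """Spread of utilization across nodes; 0.0 means perfectly balanced."""
    return pstdev(list(util.values()))


if __name__ == "__main__":
    capacity = {"small": 4, "large": 12}        # heterogeneous nodes
    equal_split = {"small": 4, "large": 4}      # same load on each node
    proportional = {"small": 2, "large": 6}     # load scaled to capacity
    print(imbalance(utilization(equal_split, capacity)))
    print(imbalance(utilization(proportional, capacity)))
```

The equal split saturates the small node while the large one sits mostly idle; the proportional split leaves both at 50% utilization.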

Future Trends in Container Management

Future trends in container management emphasize AI-driven orchestration platforms that improve real-time scaling and resource optimization across hybrid and multi-cloud environments. Edge computing will push container scheduling algorithms toward low-latency, location-aware placement with stronger fault tolerance. Patterns such as Kubernetes Operators and serverless container models will further automate lifecycle management, supporting predictive scaling and self-healing.
