Deadlock occurs when two or more processes cannot proceed because each is waiting for a resource held by another, bringing them to a standstill. Preventing and resolving deadlocks is essential for keeping a system responsive and avoiding performance bottlenecks. The rest of this article compares deadlocks with race conditions and explains how to identify, prevent, and resolve each.

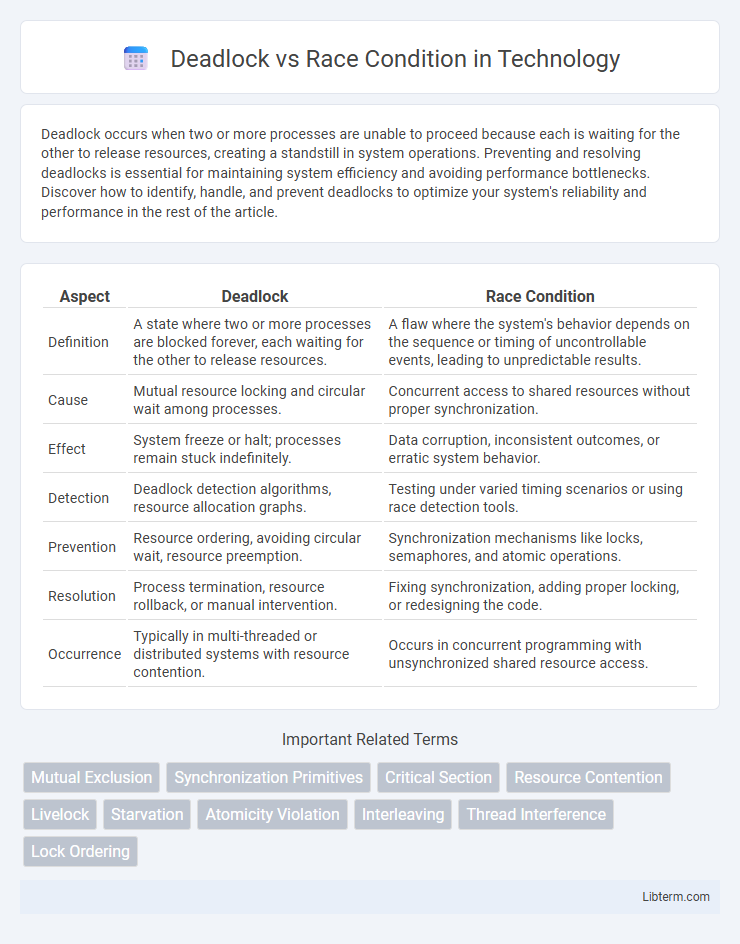

Table of Comparison

| Aspect | Deadlock | Race Condition |
|---|---|---|
| Definition | A state in which two or more processes are blocked forever, each waiting for a resource held by another process in the set. | A flaw in which the system's behavior depends on the relative timing or ordering of uncontrollable events, such as thread scheduling, leading to unpredictable results. |
| Cause | Processes hold resources while waiting for others, forming a circular wait (all four Coffman conditions hold at once). | Concurrent access to shared resources, with at least one write, without proper synchronization. |
| Effect | The affected processes make no progress; the system may freeze or halt. | Data corruption, inconsistent outcomes, or erratic system behavior. |
| Detection | Deadlock detection algorithms, such as cycle detection in resource-allocation or wait-for graphs. | Testing under varied timing scenarios or using race-detection tools. |
| Prevention | Breaking at least one Coffman condition, e.g., a global resource ordering (no circular wait) or resource preemption. | Synchronization mechanisms such as locks, semaphores, and atomic operations. |
| Resolution | Terminating processes, preempting resources with rollback, or manual intervention. | Fixing synchronization, adding proper locking, or redesigning the code. |
| Occurrence | Typically multi-threaded or distributed systems with resource contention. | Concurrent programs that access shared state without synchronization. |
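The table lists graph-based detection as the standard way to find deadlocks. Below is a minimal Python sketch, assuming each resource has a single instance, so that a cycle in the wait-for graph (an edge from each process to the process it is waiting on) means deadlock. The function `find_deadlock` is a hypothetical name; it runs a depth-first search and returns the first cycle it finds. With multiple instances per resource, a cycle alone does not prove deadlock and a different detection algorithm is needed.

```python
from __future__ import annotations


def find_deadlock(wait_for: dict[str, list[str]]) -> list[str] | None:
    """Return one cycle in a wait-for graph, or None if the graph is acyclic.

    `wait_for` maps each process to the processes it is waiting on. Assuming
    single-instance resources, any cycle in this graph is a deadlock.
    """
    WHITE, GRAY, BLACK = 0, 1, 2  # unvisited, on the current DFS path, finished
    color: dict[str, int] = {}
    path: list[str] = []

    def visit(p: str) -> list[str] | None:
        color[p] = GRAY
        path.append(p)
        for q in wait_for.get(p, []):
            state = color.get(q, WHITE)
            if state == GRAY:
                # q is already on the current path, so we walked back into it.
                return path[path.index(q):] + [q]
            if state == WHITE:
                cycle = visit(q)
                if cycle is not None:
                    return cycle
        path.pop()
        color[p] = BLACK
        return None

    for p in wait_for:
        if color.get(p, WHITE) == WHITE:
            cycle = visit(p)
            if cycle is not None:
                return cycle
    return None


# P1 waits on P2, P2 on P3, P3 on P1: a circular wait.
print(find_deadlock({"P1": ["P2"], "P2": ["P3"], "P3": ["P1"]}))
# ['P1', 'P2', 'P3', 'P1']
print(find_deadlock({"P1": ["P2"], "P2": []}))  # None
```

A recovery step, as in the Resolution row, would then pick a process from the returned cycle to terminate or roll back.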

Understanding Deadlock: Definition and Causes

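Deadlock occurs when two or more processes are each waiting for a resource held by another, so none can proceed. A deadlock can arise only if all four Coffman conditions hold at the same time: mutual exclusion (a resource can be held by only one process at a time), hold and wait (a process holds resources while requesting more), no preemption (resources cannot be forcibly taken away), and circular wait (a closed chain of processes, each waiting on the next). Because every condition is necessary, breaking any one of them rules out deadlock; understanding these conditions is the basis for designing systems that detect, prevent, or avoid deadlocks.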
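To make hold and wait plus circular wait concrete, here is a small Python sketch, assuming two threads and two `threading.Lock` objects taken in opposite order. The function name `demo_circular_wait` is hypothetical, and a timed `acquire` stands in for waiting forever so the program terminates; with a plain blocking `acquire`, both threads would hang permanently.

```python
from __future__ import annotations

import threading


def demo_circular_wait(timeout: float = 0.2) -> dict[str, bool]:
    """Two workers take the same two locks in opposite order.

    Each worker holds its first lock (hold and wait) and requests the lock the
    other one holds (circular wait). Returns whether each worker obtained its
    second lock before the timeout.
    """
    lock_a, lock_b = threading.Lock(), threading.Lock()
    # Both workers hold their first lock before either asks for the second.
    both_holding = threading.Barrier(2)
    # Neither releases its first lock until both timed requests have finished,
    # so the outcome does not depend on scheduling.
    both_tried = threading.Barrier(2)
    got_second: dict[str, bool] = {}

    def worker(name: str, first, second) -> None:
        with first:                               # hold one resource...
            both_holding.wait()
            ok = second.acquire(timeout=timeout)  # ...while requesting another
            if ok:
                second.release()
            got_second[name] = ok
            both_tried.wait()

    threads = [
        threading.Thread(target=worker, args=("T1", lock_a, lock_b)),
        threading.Thread(target=worker, args=("T2", lock_b, lock_a)),
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return got_second


result = demo_circular_wait()
print(result["T1"], result["T2"])  # False False: each timed out waiting on the other
```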

What is a Race Condition? Key Concepts

A race condition occurs when multiple threads or processes access shared data concurrently, at least one of them writes it, and the final outcome depends on the unpredictable timing of their execution. Key concepts include the critical section, a region of code that accesses a shared resource, and synchronization mechanisms such as locks or semaphores that keep conflicting operations from overlapping. Without proper coordination, race conditions produce inconsistent or incorrect results, which makes concurrent programming error-prone.
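The dependence on timing can be shown without real threads. This Python sketch assumes a shared counter incremented once by each of two threads, with each `counter += 1` split into a separate read and write. The hypothetical `run_schedule` helper executes one interleaving of those steps, and the loop enumerates all of them.

```python
from __future__ import annotations

from itertools import permutations


def run_schedule(schedule: tuple[int, ...]) -> int:
    """Run two unsynchronized `counter += 1` operations under one interleaving.

    Each thread's increment is split into two steps: read the shared counter
    into a private copy, then write that copy plus one back. `schedule` lists,
    in order, which thread (0 or 1) performs its next step.
    """
    counter = 0
    private: dict[int, int] = {}  # what each thread read
    next_step = {0: 0, 1: 0}      # 0 = read next, 1 = write next
    for tid in schedule:
        if next_step[tid] == 0:
            private[tid] = counter      # step 1: read shared value
        else:
            counter = private[tid] + 1  # step 2: write back
        next_step[tid] += 1
    return counter


# Every ordering of the four steps; each thread's first step is always its read.
schedules = sorted(set(permutations([0, 0, 1, 1])))
for s in schedules:
    print(s, "->", run_schedule(s))
print("possible results:", sorted({run_schedule(s) for s in schedules}))
# possible results: [1, 2]
```

Only the two schedules in which one thread finishes before the other starts produce 2; in the other four, one update is lost. Real threads pick an interleaving nondeterministically, which is why such bugs can appear rarely and vanish under a debugger.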

Core Differences Between Deadlock and Race Condition

Deadlock occurs when two or more processes are blocked indefinitely, each waiting for resources held by another, so the affected processes make no progress. A race condition arises when multiple threads or processes access and modify shared data concurrently, producing unpredictable and erroneous results because of timing. The core difference: a deadlock stops execution through circular resource contention, while a race condition lets execution continue but leaves data in an inconsistent state because access was unsynchronized.

Common Scenarios: When Deadlock and Race Condition Occur

Deadlock commonly occurs when two or more processes or threads wait indefinitely for resources held by each other, for example database transactions that each lock data the other needs, or multi-threaded applications with circular lock dependencies. Race conditions typically arise when shared variables or memory are accessed concurrently without proper synchronization, as in real-time systems or parallel programs whose critical sections are not adequately protected. Both issues appear mainly in multithreaded environments, but a deadlock is a standstill caused by resource contention, whereas a race condition is a timing error that causes inconsistent or unexpected behavior.

Symptoms and Impact on System Performance

Deadlock symptoms include a complete halt of the affected processes and resources that stay locked indefinitely, which shows up as frozen applications and reduced throughput. Race conditions manifest as unpredictable behavior, data corruption, and inconsistent outputs caused by unsynchronized concurrent access to shared resources. Deadlocks mainly hurt availability and throughput, while race conditions mainly hurt correctness; both reduce reliability, and because their occurrence depends on timing, both can be hard to reproduce and debug.

Deadlock Prevention Techniques

Deadlock prevention techniques design the system so that at least one of the four necessary conditions (mutual exclusion, hold and wait, no preemption, and circular wait) can never hold. Common methods include assigning a strict global order to resource requests, which rules out circular wait, and using timeouts so that a process that cannot obtain a resource releases what it already holds, which stops it from holding resources while waiting indefinitely. Because a deadlock requires all four conditions, removing any one of them prevents deadlock and keeps processes making progress.
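A strict resource order can be sketched in Python with two hypothetical `Account` objects, each guarded by its own lock, and a hypothetical `transfer` function that always locks the account with the lower id first. The account/transfer setup is an illustrative assumption; what matters is that every thread acquires locks in the same global order.

```python
from __future__ import annotations

import threading


class Account:
    """Hypothetical bank account guarded by its own lock."""

    def __init__(self, account_id: int, balance: int) -> None:
        self.id = account_id
        self.balance = balance
        self.lock = threading.Lock()


def transfer(src: Account, dst: Account, amount: int) -> None:
    if src is dst:
        return  # taking the same non-reentrant lock twice would block forever
    # Always lock the lower id first. Every thread follows the same global
    # order, so no cycle of waiting threads can form (no circular wait).
    first, second = (src, dst) if src.id < dst.id else (dst, src)
    with first.lock:
        with second.lock:
            src.balance -= amount
            dst.balance += amount


def run_demo(iterations: int = 10_000) -> tuple[int, int, bool]:
    a, b = Account(1, 1000), Account(2, 1000)

    def worker(src: Account, dst: Account) -> None:
        for _ in range(iterations):
            transfer(src, dst, 1)

    # Transfers in opposite directions run at the same time.
    threads = [threading.Thread(target=worker, args=(a, b)),
               threading.Thread(target=worker, args=(b, a))]
    for t in threads:
        t.start()
    for t in threads:
        t.join(timeout=10)
    stuck = any(t.is_alive() for t in threads)
    return a.balance, b.balance, stuck


print(run_demo())  # (1000, 1000, False)
```

If `transfer` instead locked `src` and then `dst`, the two opposite-direction workers could each hold one lock and wait for the other, which is exactly the circular wait the ordering rules out.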

Strategies to Avoid Race Conditions

Race conditions occur when multiple threads access shared data at the same time without proper synchronization, leading to unpredictable outcomes. Strategies to avoid them include synchronization primitives such as mutexes and semaphores to enforce exclusive or limited access, atomic operations that make a modification indivisible, and thread-safe programming techniques such as synchronized blocks or concurrent data structures. These methods keep shared data consistent and make multi-threaded programs more reliable.
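A minimal Python sketch of the lock-based strategy, assuming a shared counter incremented from several threads: the hypothetical `SafeCounter` class places the read-modify-write inside a mutex (`threading.Lock`), so increments cannot interleave and none are lost.

```python
from __future__ import annotations

import threading


class SafeCounter:
    """Counter whose read-modify-write runs inside a mutex-protected critical section."""

    def __init__(self) -> None:
        self._value = 0
        self._lock = threading.Lock()

    def increment(self) -> None:
        with self._lock:  # only one thread at a time can execute this block
            self._value += 1

    @property
    def value(self) -> int:
        with self._lock:
            return self._value


def count_concurrently(n_threads: int = 8, per_thread: int = 10_000) -> int:
    counter = SafeCounter()

    def work() -> None:
        for _ in range(per_thread):
            counter.increment()

    threads = [threading.Thread(target=work) for _ in range(n_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return counter.value


print(count_concurrently())  # 80000
```

In CPython, an unlocked `+=` on a shared attribute is not guaranteed to be atomic, even if lost updates are rare in practice; the lock makes the result independent of interpreter scheduling details.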

Real-World Examples: Deadlock vs Race Condition

The dining philosophers problem illustrates deadlock: if every philosopher picks up one fork and then waits for the other, each waits forever on a neighbor and the whole table halts. Online ticket booking illustrates a race condition: when several users try to book the last available seat at the same time and the availability check and the booking are not performed atomically, more than one user can see the seat as free, producing inconsistent or duplicate bookings. Both are synchronization problems in concurrent programs, but the first concerns resource allocation and the second data integrity.
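The ticket-booking race is a check-then-act bug. This Python sketch assumes a hypothetical `Venue` class with one seat left and two users. `book_unsafe` checks and updates without a lock, and a `threading.Barrier` passed in as a test hook forces both users past the check before either books, so the double booking happens on every run rather than occasionally. `book_safe` performs the check and the update inside one critical section.

```python
from __future__ import annotations

import threading
from typing import Callable


class Venue:
    """Hypothetical booking service with a fixed number of seats."""

    def __init__(self, seats: int) -> None:
        self.seats_left = seats
        self.bookings: list[str] = []
        self._lock = threading.Lock()

    def book_unsafe(self, user: str, pause: Callable[[], object] = lambda: None) -> bool:
        # Check-then-act with no lock: another thread can run between the steps.
        # `pause` is a test hook that holds that window open on purpose.
        if self.seats_left > 0:
            pause()
            self.seats_left -= 1
            self.bookings.append(user)
            return True
        return False

    def book_safe(self, user: str) -> bool:
        # The check and the update form one critical section.
        with self._lock:
            if self.seats_left > 0:
                self.seats_left -= 1
                self.bookings.append(user)
                return True
            return False


def race_for_last_seat(safe: bool) -> list[str]:
    venue = Venue(seats=1)
    # Releases the users only once both have passed the seat check.
    gate = threading.Barrier(2)

    def attempt(user: str) -> None:
        if safe:
            venue.book_safe(user)
        else:
            venue.book_unsafe(user, pause=gate.wait)

    threads = [threading.Thread(target=attempt, args=(u,)) for u in ("alice", "bob")]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return venue.bookings


print(len(race_for_last_seat(safe=False)))  # 2: one seat, two bookings
print(len(race_for_last_seat(safe=True)))   # 1
```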

Best Practices for Synchronization in Concurrent Systems

Deadlocks and race conditions are critical synchronization challenges in concurrent systems, and mitigating them takes disciplined practice. Acquiring locks in a consistent order prevents deadlocks, and timeouts let a thread back off instead of waiting forever; atomic operations and memory barriers reduce the risk of race conditions by keeping shared data consistent. Preferring well-tested primitives such as mutexes, semaphores, and concurrent collections over hand-rolled synchronization helps ensure safe access to shared resources in multi-threaded environments.
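The timeout practice can be sketched as an all-or-nothing acquire: try each lock against a shared deadline, and if one cannot be obtained in time, release the locks already held and report failure. The helper name `acquire_all` is hypothetical; it relies only on `threading.Lock.acquire(timeout=...)` from the Python standard library.

```python
from __future__ import annotations

import threading
import time
from typing import Sequence


def acquire_all(locks: Sequence[threading.Lock], timeout: float) -> bool:
    """Try to take every lock within `timeout` seconds overall.

    On failure, release whatever was already taken and return False, so the
    caller backs off instead of holding one lock while waiting forever.
    """
    deadline = time.monotonic() + timeout
    taken: list[threading.Lock] = []
    for lock in locks:
        remaining = max(0.0, deadline - time.monotonic())
        if not lock.acquire(timeout=remaining):
            for held in reversed(taken):
                held.release()
            return False
        taken.append(lock)
    return True


a, b = threading.Lock(), threading.Lock()
b.acquire()                       # simulate another holder of b
print(acquire_all([a, b], 0.1))   # False: gave up after about 0.1 s
print(a.locked())                 # False: a was released during the back-off
b.release()
print(acquire_all([a, b], 0.1))   # True
a.release()
b.release()
```

Callers typically retry after a short randomized delay; without the randomness, two threads can keep backing off in lockstep, which is a livelock rather than a deadlock.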

Conclusion: Choosing the Right Solutions for Concurrency Issues

Deadlocks and race conditions call for different remedies: deadlocks are usually handled with resource ordering or deadlock detection algorithms, while race conditions require synchronization mechanisms such as locks or atomic operations. Choosing the right one starts with diagnosis: threads that are stuck and making no progress point to a deadlock, while threads that complete but produce wrong or inconsistent results point to a race condition. Applying the concurrency control strategy that matches the problem keeps multithreaded applications both reliable and efficient.

Deadlock Infographic (libterm.com)