Batch processing handles large volumes of data efficiently by grouping tasks into jobs that run automatically, reducing manual intervention and overall processing time. It is widely used in industries such as finance, manufacturing, and IT to streamline workflows and improve productivity.

Comparison Table

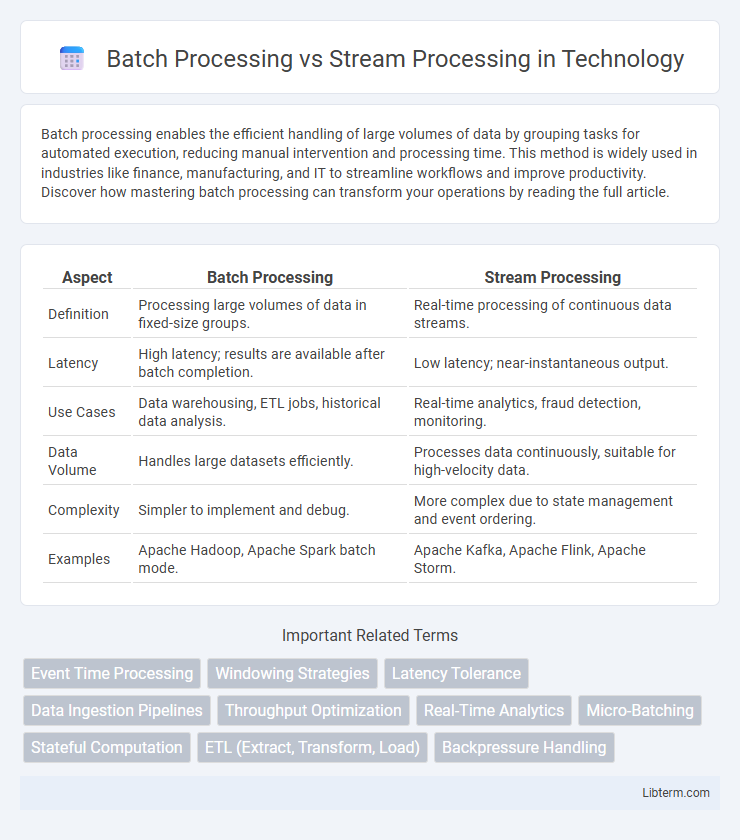

| Aspect | Batch Processing | Stream Processing |
|---|---|---|
| Definition | Processing data in discrete groups (batches) collected over a period of time. | Continuous, real-time processing of data streams as events arrive. |
| Latency | High; results are available only after a batch job completes. | Low; output is produced in near real time. |
| Use Cases | Data warehousing, ETL jobs, historical data analysis. | Real-time analytics, fraud detection, monitoring. |
| Data Volume | Handles large, bounded datasets efficiently. | Processes unbounded data continuously; suited to high-velocity data. |
| Complexity | Simpler to implement and debug. | More complex due to state management and event ordering. |
| Examples | Apache Hadoop (MapReduce), Apache Spark (batch jobs). | Apache Kafka (Kafka Streams), Apache Flink, Apache Storm. |

Introduction to Data Processing Paradigms

Batch processing handles large volumes of data collected over time, running jobs at scheduled intervals for comprehensive analysis. Stream processing handles data continuously as it arrives, enabling immediate insights and rapid decision-making. Each paradigm suits different workloads, and the choice between them depends mainly on latency, throughput, and use-case requirements.

What is Batch Processing?

Batch processing collects and stores data over a period of time and then processes it as a single group, or batch. This approach is efficient for massive datasets, supporting complex computations, data transformations, and bulk updates in industries such as finance, retail, and telecommunications. Batch systems typically maximize throughput and resource utilization, and jobs are often scheduled during off-peak hours so they do not compete with interactive, real-time workloads.
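
As a minimal illustration of the batch model, the sketch below waits until a whole period's records are available and then processes them in one pass. The `Sale` type, the `run_batch_job` function, and the sample data are hypothetical; a production job would typically read from files or a database and run under a scheduler or a framework such as Apache Spark.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Sale:
    # Hypothetical record type used only for this illustration.
    store: str
    amount: float


def run_batch_job(records):
    """Process an entire accumulated batch in one pass and return per-store totals.

    The job starts only once all records for the period are available,
    so its results are complete but arrive after the whole batch is processed.
    """
    totals = defaultdict(float)
    for sale in records:
        totals[sale.store] += sale.amount
    return dict(totals)


# Records accumulated over the collection period (for example, one day).
day_of_sales = [
    Sale("north", 120.0),
    Sale("south", 80.0),
    Sale("north", 30.0),
]

print(run_batch_job(day_of_sales))  # {'north': 150.0, 'south': 80.0}
```

Nothing is reported until the full batch has been read, which is the source of the higher latency described above.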

What is Stream Processing?

Stream processing analyzes data continuously as it flows in from sources such as sensors, applications, or social media feeds. It enables immediate insights, event detection, and decision-making by handling high volumes of data with low latency, using technologies such as Apache Kafka, Apache Flink, and Apache Storm. Unlike batch processing, which analyzes large datasets collected over time, stream processing is suited to scenarios that require instant responsiveness and continuous monitoring.
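
The contrast with batch processing can be sketched with a Python generator that consumes events one at a time and emits an updated result after each event. The `process_stream` and `sensor_feed` names are hypothetical, and the in-memory dictionary stands in for the durable, partitioned state that engines such as Apache Flink or Kafka Streams manage for you.

```python
from collections import defaultdict
from typing import Iterable, Iterator, Tuple


def process_stream(events: Iterable[Tuple[str, float]]) -> Iterator[Tuple[str, float]]:
    """Consume events one at a time and emit an updated running total per key.

    State (the running totals) is kept in memory between events, and a result
    is produced immediately after each event rather than at the end.
    """
    totals = defaultdict(float)
    for key, value in events:
        totals[key] += value
        yield key, totals[key]


def sensor_feed():
    # Stand-in for an unbounded source such as a message queue or socket.
    yield ("sensor-1", 2.0)
    yield ("sensor-2", 5.0)
    yield ("sensor-1", 3.0)


for key, running_total in process_stream(sensor_feed()):
    print(key, running_total)
# sensor-1 2.0
# sensor-2 5.0
# sensor-1 5.0
```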

Key Differences Between Batch and Stream Processing

Batch processing handles large volumes of data collected over time and processes it at scheduled intervals, which makes it well suited to historical analysis and complex computations. Stream processing handles a continuous flow of data, enabling real-time analysis for applications that require low-latency responses. The fundamental difference lies in latency and processing mode: batch processing offers high throughput at the cost of higher latency, while stream processing prioritizes low latency by handling data incrementally as it arrives.
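
The difference in processing mode can be illustrated with a simple average. The batch version waits for the complete dataset and computes once; the streaming version updates the mean incrementally and has a current answer after every value. Both reach the same final result. The function names and readings are illustrative only.

```python
from typing import Iterable, Iterator, List


def batch_mean(values: List[float]) -> float:
    """Batch style: wait for the complete dataset, then compute once."""
    return sum(values) / len(values)


def streaming_mean(values: Iterable[float]) -> Iterator[float]:
    """Stream style: update the mean incrementally and emit it after every value.

    Only a count and the current mean are stored, so memory use stays constant
    no matter how many values have been seen.
    """
    count = 0
    mean = 0.0
    for x in values:
        count += 1
        mean += (x - mean) / count  # incremental update of the running mean
        yield mean


readings = [4.0, 8.0, 6.0]
print(batch_mean(readings))            # 6.0, available only after all readings arrive
print(list(streaming_mean(readings)))  # [4.0, 6.0, 6.0], one result per reading
```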

Use Cases for Batch Processing

Batch processing is ideal for large-scale data operations where latency is not critical, such as end-of-day financial reconciliation, payroll, and bulk data transformation. Scheduled, automated jobs process massive data volumes without user intervention, which suits data warehousing and report generation. Typical batch use cases involve comprehensive data aggregation, historical analysis, and offline processing, where the priorities are efficient resource use and consistent results.
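
End-of-day reconciliation is a natural batch task because it needs the complete set of the day's entries from both sides before it can compare them. The sketch below assumes a simplified, hypothetical data model of `(account, amount_in_cents)` pairs; integer cents are used to avoid floating-point rounding in money totals.

```python
from collections import defaultdict
from typing import Dict, List, Tuple


def totals_by_account(entries: List[Tuple[str, int]]) -> Dict[str, int]:
    """Sum amounts (in cents) per account over the whole day's batch."""
    totals: Dict[str, int] = defaultdict(int)
    for account, cents in entries:
        totals[account] += cents
    return dict(totals)


def reconcile(
    ledger: List[Tuple[str, int]], statement: List[Tuple[str, int]]
) -> Dict[str, Tuple[int, int]]:
    """Return accounts whose ledger total differs from the bank statement total.

    Each mismatch maps to (ledger_total, statement_total). An account missing
    from one side is treated as having a total of 0 on that side.
    """
    ours = totals_by_account(ledger)
    theirs = totals_by_account(statement)
    mismatches = {}
    for account in sorted(set(ours) | set(theirs)):
        a, b = ours.get(account, 0), theirs.get(account, 0)
        if a != b:
            mismatches[account] = (a, b)
    return mismatches


ledger = [("ACC-1", 10_000), ("ACC-2", 2_500), ("ACC-1", -1_000)]
statement = [("ACC-1", 9_000), ("ACC-2", 2_000)]
print(reconcile(ledger, statement))  # {'ACC-2': (2500, 2000)}
```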

Use Cases for Stream Processing

Stream processing enables real-time analysis in cases such as fraud detection for financial transactions, where an immediate response can prevent losses. IoT applications use stream processing to monitor sensor data continuously, allowing proactive maintenance and more efficient operations. Social media platforms use it to personalize content and detect trending topics as they emerge, improving user engagement.
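
A common building block for streaming fraud detection is a velocity rule: flag a card as soon as it makes too many transactions within a sliding time window. The sketch below is a simplified, hypothetical rule, not a production fraud model; the thresholds are arbitrary, and it assumes events arrive in timestamp order.

```python
from collections import defaultdict, deque
from typing import Deque, Dict, Iterable, Iterator, Tuple


def velocity_alerts(
    events: Iterable[Tuple[float, str]],
    max_txns: int = 3,
    window_seconds: float = 60.0,
) -> Iterator[Tuple[float, str]]:
    """Flag a card as soon as it exceeds max_txns transactions within window_seconds.

    Events are (timestamp_seconds, card_id) pairs, assumed to arrive in
    timestamp order. Each card keeps a deque of recent timestamps; entries
    that fall outside the sliding window are evicted before the check.
    """
    recent: Dict[str, Deque[float]] = defaultdict(deque)
    for ts, card in events:
        window = recent[card]
        window.append(ts)
        while window and window[0] <= ts - window_seconds:
            window.popleft()
        if len(window) > max_txns:
            yield ts, card  # alert emitted while the transaction is still fresh


txns = [(0, "card-A"), (10, "card-A"), (15, "card-B"), (20, "card-A"), (30, "card-A"), (120, "card-A")]
print(list(velocity_alerts(txns)))  # [(30, 'card-A')]
```

Because the alert is raised on the fourth transaction itself, a downstream system could block or review it immediately, which a nightly batch job could not do.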

Advantages of Batch Processing

Batch processing handles large volumes of data efficiently by processing it in scheduled groups, which reduces resource contention and maximizes throughput. It offers reliability through fault tolerance: because each job works on a bounded input, a failed job can be rerun or resumed, helping ensure consistent data and complete results for complex computations. Its capacity for deep analytics and long-running transformations on historical data makes it well suited to comprehensive reporting and archival.
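
One way batch jobs achieve this kind of fault tolerance is checkpointing: progress is recorded after each chunk, so a rerun skips work that already finished. The sketch below is a hypothetical, in-memory version; the `run_resumable_batch` name, the chunking scheme, and the simulated failure are assumptions for illustration, and a real job would keep its checkpoint in durable storage.

```python
from typing import Callable, Dict, List


def run_resumable_batch(
    records: List[int],
    chunk_size: int,
    checkpoint: Dict[str, int],
    handle_chunk: Callable[[List[int]], None],
) -> None:
    """Process records in fixed-size chunks, recording progress after each chunk.

    checkpoint["next_chunk"] holds the index of the first unfinished chunk.
    If the job fails partway, rerunning it with the same checkpoint skips the
    chunks that already completed. (A real system would persist the checkpoint
    to a file or database rather than a dict.)
    """
    start = checkpoint.get("next_chunk", 0)
    chunks = [records[i:i + chunk_size] for i in range(0, len(records), chunk_size)]
    for index in range(start, len(chunks)):
        handle_chunk(chunks[index])
        checkpoint["next_chunk"] = index + 1  # advance only after the chunk succeeds


processed: List[List[int]] = []
attempts = {"count": 0}


def flaky_handler(chunk: List[int]) -> None:
    # Simulate a crash the first time the chunk starting with 4 is handled.
    if chunk[0] == 4 and attempts["count"] == 0:
        attempts["count"] += 1
        raise RuntimeError("simulated failure")
    processed.append(chunk)


state: Dict[str, int] = {}
try:
    run_resumable_batch(list(range(8)), 2, state, flaky_handler)
except RuntimeError:
    pass  # first run stops after completing [0, 1] and [2, 3]
run_resumable_batch(list(range(8)), 2, state, flaky_handler)
print(processed)  # [[0, 1], [2, 3], [4, 5], [6, 7]]
```

If a crash happened after a chunk was handled but before the checkpoint was updated, that chunk would run again on restart, so chunk handlers in this style are usually written to be idempotent.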

Advantages of Stream Processing

Stream processing supports real-time analysis, enabling immediate decisions and faster responses to events than batch processing allows. Because it handles data continuously, it suits applications such as fraud detection, monitoring, and dynamic pricing, where latency is critical. Its ability to process high-velocity, high-volume data with low latency can improve operational efficiency and deliver timely insights.
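
Monitoring workloads often aggregate events into fixed, non-overlapping time windows (tumbling windows) and publish each window's result as soon as it closes. The sketch below is a simplified version that assumes timestamps arrive in order; engines such as Apache Flink also handle out-of-order events, which this example does not.

```python
from typing import Iterable, Iterator, Tuple


def tumbling_counts(
    timestamps: Iterable[float], window_seconds: float
) -> Iterator[Tuple[float, int]]:
    """Count events per fixed, non-overlapping time window.

    Timestamps are assumed to arrive in non-decreasing order. A window's
    count is emitted as soon as the first event of a later window arrives,
    so results appear continuously instead of after the whole dataset.
    Empty windows between events are skipped for brevity.
    """
    current_start = None
    count = 0
    for ts in timestamps:
        start = (ts // window_seconds) * window_seconds
        if current_start is not None and start != current_start:
            yield current_start, count  # window closed: emit immediately
            count = 0
        current_start = start
        count += 1
    if current_start is not None:
        yield current_start, count  # flush the last open window when input ends


print(list(tumbling_counts([1, 3, 12, 14, 15, 31], window_seconds=10)))
# [(0, 2), (10, 3), (30, 1)]
```

On a truly unbounded stream the final flush never happens; real systems close windows using timers or watermarks instead.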

Challenges and Limitations

Batch processing introduces latency, because results are available only after data has accumulated and the job has finished; this makes it unsuitable for real-time analytics and time-sensitive applications. Stream processing must maintain data consistency and fault tolerance while handling continuous, high-velocity data, which adds complexity around state management, event ordering, and duplicate or late events. Both approaches require careful resource management and capacity planning to balance performance against cost.
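
One concrete consistency problem in stream processing is duplicate events: with at-least-once delivery, a source may replay events after a failure. A common mitigation is to remember recently seen event IDs and drop repeats. The sketch below is a hypothetical, bounded-memory version; the `deduplicate` name and the `(event_id, payload)` shape are assumptions for illustration.

```python
from collections import OrderedDict
from typing import Iterable, Iterator, Tuple


def deduplicate(
    events: Iterable[Tuple[str, dict]], max_remembered: int = 10_000
) -> Iterator[Tuple[str, dict]]:
    """Drop events whose ID has already been seen.

    Memory is bounded: once more than max_remembered IDs are stored, the
    oldest is forgotten, so a duplicate arriving very late can slip through.
    """
    seen = OrderedDict()
    for event_id, payload in events:
        if event_id in seen:
            continue  # replayed event: skip so it is not counted twice
        seen[event_id] = None
        if len(seen) > max_remembered:
            seen.popitem(last=False)  # evict the oldest remembered ID
        yield event_id, payload


delivered = [("e1", {"amt": 5}), ("e2", {"amt": 7}), ("e1", {"amt": 5})]
print([eid for eid, _ in deduplicate(delivered)])  # ['e1', 'e2']
```

The bounded memory illustrates the trade-off mentioned above: perfect deduplication over an unbounded stream would require unbounded state.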

Choosing the Right Approach for Your Business

Batch processing suits workloads that involve large data volumes and can tolerate high latency, such as businesses that need comprehensive, periodic analysis and reporting. Stream processing enables real-time analysis and immediate decision-making, which suits industries such as finance, e-commerce, and IoT where low latency and continuous data flow are critical. Weighing data velocity and volume, business objectives, and infrastructure capabilities helps identify the approach that best balances operational efficiency and timely insight.
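
A quick way to test whether batch processing can meet a latency requirement is to estimate how stale its results can get. As a rough rule of thumb, assuming a fixed schedule and a fixed job runtime, an event that arrives just after a batch cutoff waits one full interval for the next cutoff and then for that job to finish. The function names and figures below are hypothetical and ignore queuing and retry delays.

```python
def worst_case_batch_staleness(interval_minutes: float, job_runtime_minutes: float) -> float:
    """Rough upper bound on how old data can be before it appears in batch results.

    Assumes a fixed schedule and a fixed job runtime: an event arriving just
    after a cutoff waits one full interval, then for the job to complete.
    """
    return interval_minutes + job_runtime_minutes


def batch_meets_requirement(
    max_staleness_minutes: float, interval_minutes: float, job_runtime_minutes: float
) -> bool:
    """True if the batch schedule keeps results within the required freshness."""
    return worst_case_batch_staleness(interval_minutes, job_runtime_minutes) <= max_staleness_minutes


# Nightly job (24 h interval, 2 h runtime) against two illustrative requirements.
print(batch_meets_requirement(5, 24 * 60, 120))        # False -> consider stream processing
print(batch_meets_requirement(48 * 60, 24 * 60, 120))  # True -> batch is sufficient
```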

libterm.com
