Real-time processing lets systems analyze and respond to data as it arrives, supporting timely decisions and more efficient operations. It is critical in applications such as financial trading, healthcare monitoring, and autonomous vehicles, where delays can have serious consequences. Discover how real-time processing can transform your business by exploring the details in the full article.

Table of Comparison

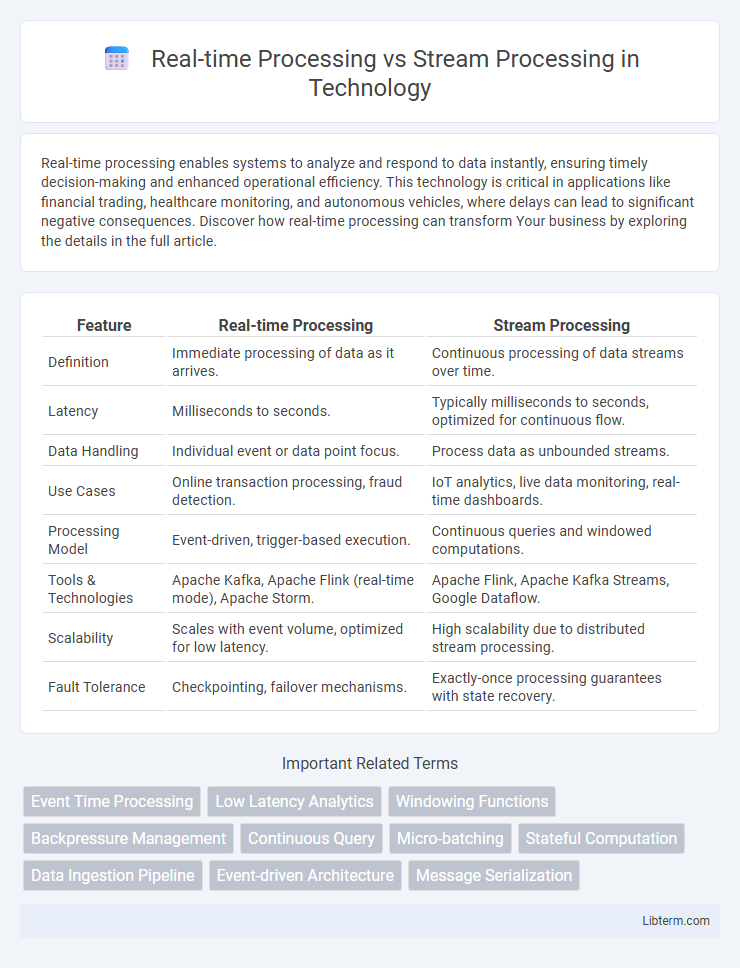

| Feature | Real-time Processing | Stream Processing |
|---|---|---|
| Definition | Immediate processing of data as it arrives. | Continuous processing of data streams over time. |
| Latency | Milliseconds to seconds. | Typically milliseconds to seconds, optimized for continuous flow. |
| Data Handling | Focuses on individual events or data points. | Processes data as unbounded streams. |
| Use Cases | Online transaction processing, fraud detection. | IoT analytics, live data monitoring, real-time dashboards. |
| Processing Model | Event-driven, trigger-based execution. | Continuous queries and windowed computations. |
| Tools & Technologies | Apache Kafka, Apache Flink (streaming mode), Apache Storm. | Apache Flink, Apache Kafka Streams, Google Cloud Dataflow. |
| Scalability | Scales with event volume, optimized for low latency. | High scalability due to distributed stream processing. |
| Fault Tolerance | Checkpointing, failover mechanisms. | State recovery from checkpoints, with exactly-once guarantees in frameworks that support them. |

Introduction to Real-time and Stream Processing

Real-time processing involves immediate data handling to deliver instant insights or actions, ideal for applications demanding minimal latency such as financial trading or emergency response systems. Stream processing continuously ingests and analyzes data streams, enabling real-time analytics on massive datasets from sources like IoT devices, social media feeds, and log files. Both methods prioritize low-latency processing but differ in architecture: real-time processing emphasizes latency under strict deadlines, while stream processing handles unbounded data flows with scalable, event-driven models.

Defining Real-time Processing

Real-time processing is the immediate input, processing, and output of data, ensuring minimal latency to support instant decision-making and actions. It operates on individual data points or small batches, making it essential for applications like fraud detection, live monitoring, and autonomous systems. This contrasts with stream processing, which handles continuous data flows but may tolerate slight delays for aggregated analysis.
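The event-at-a-time model described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `Transaction` type, the `limit` threshold, and the 50 ms budget are hypothetical and do not come from any particular system.

```python
import time
from dataclasses import dataclass


@dataclass
class Transaction:
    account: str
    amount: float


def handle_event(tx, limit=1000.0):
    """Decide on a single event immediately, without waiting for other events."""
    # Hypothetical rule: flag any transaction above a fixed limit.
    return "flag" if tx.amount > limit else "approve"


def process_with_deadline(tx, deadline_ms=50.0):
    """Run the handler and report whether it finished within the latency budget."""
    start = time.perf_counter()
    decision = handle_event(tx)
    elapsed_ms = (time.perf_counter() - start) * 1000.0
    return decision, elapsed_ms <= deadline_ms


decision, met_deadline = process_with_deadline(Transaction("acct-1", 5000.0))
```

Each event produces its own decision, and the deadline check makes the latency requirement explicit instead of leaving it implied.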

Understanding Stream Processing

Stream processing analyzes continuous data flows as they arrive to extract insights and trigger immediate actions. It processes unbounded data streams with low latency, enabling real-time analytics, event detection, and complex event processing across applications in IoT, finance, and telecommunications. Scalable frameworks such as Apache Kafka (through Kafka Streams), Apache Flink, and Apache Storm support the fault tolerance, stateful computation, and windowing functions that effective stream processing depends on.
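Windowing is easier to picture with a small example. The sketch below counts events per key in fixed, non-overlapping (tumbling) windows. It is a simplification that assumes a finite list of `(timestamp, key)` pairs; real stream processors emit window results incrementally and deal with late or out-of-order data, which this code does not attempt. The function name and the sample events are made up for illustration.

```python
from collections import defaultdict


def tumbling_window_counts(events, window_seconds=60):
    """Count events per key in fixed, non-overlapping time windows.

    `events` is an iterable of (timestamp_seconds, key) pairs.
    """
    counts = defaultdict(int)
    for timestamp, key in events:
        # Align the timestamp to the start of its window.
        window_start = int(timestamp // window_seconds) * window_seconds
        counts[(window_start, key)] += 1
    return dict(counts)


sample_events = [(0, "a"), (30, "a"), (61, "a"), (62, "b")]
window_counts = tumbling_window_counts(sample_events)
```

The dictionary keys pair a window start time with an event key, so the first two `"a"` events fall in the window starting at 0 and the remaining events fall in the window starting at 60.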

Key Differences Between Real-time and Stream Processing

Real-time processing involves handling data with minimal latency to provide immediate output or response, crucial for applications requiring instant decision-making such as fraud detection or emergency systems. Stream processing continuously ingests and processes data streams in near real-time, enabling scalable analytics on high-volume, unbounded data sources like sensor networks or social media feeds. Key differences include latency requirements, with real-time processing demanding strict time constraints, while stream processing emphasizes continuous data flow and scalability over immediate responsiveness.

Use Cases for Real-time Processing

Real-time processing is crucial for applications requiring immediate data analysis and response, such as fraud detection in financial transactions, real-time gaming leaderboards, and live monitoring of sensor data in IoT devices. It enables instant decision-making by processing data the moment it arrives, ensuring minimal latency and up-to-date insights. Use cases also include emergency alert systems, dynamic pricing in e-commerce, and real-time personalization in digital marketing campaigns.

Applications of Stream Processing

Stream processing enables real-time analysis of continuous data flows, making it ideal for applications like fraud detection in financial services, live monitoring of sensor networks in IoT, and personalized content delivery in digital marketing. Its ability to process and analyze data instantly supports operational decision-making and adaptive responses in industries such as telecommunications and healthcare. By handling high-velocity, high-volume data streams, stream processing platforms like Apache Kafka and Apache Flink drive real-time analytics and event-driven architectures.

Performance and Latency Comparison

Real-time processing systems deliver immediate data analysis with latency measured in milliseconds, making them ideal for applications requiring instant decision-making, such as fraud detection and autonomous vehicles. Stream processing handles continuous data flows with low latency, typically milliseconds to seconds depending on windowing and batching, and optimizes throughput for large-scale event-driven architectures and IoT analytics. Real-time processing emphasizes ultra-low latency and quick response times, whereas stream processing balances latency against scalability and fault tolerance in high-volume data environments.
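Latency comparisons like these are usually made with percentiles, not averages, because a few slow events can dominate the user experience. A minimal sketch using the nearest-rank method follows; the sample latencies are invented purely for illustration.

```python
import math


def percentile(samples, pct):
    """Nearest-rank percentile of a non-empty list of latency samples."""
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct / 100.0 * len(ordered)))
    return ordered[rank - 1]


latencies_ms = [2, 3, 3, 4, 5, 5, 6, 8, 40, 250]  # made-up sample data
p50 = percentile(latencies_ms, 50)
p99 = percentile(latencies_ms, 99)
```

In this sample the median is small while the 99th percentile is dominated by a single slow event, which is the kind of gap that matters when a system has a strict deadline.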

Scalability and Infrastructure Requirements

Real-time processing demands robust infrastructure with low-latency capabilities to handle data instantly, often requiring high-performance computing resources and optimized network bandwidth for scalability. Stream processing scales horizontally by partitioning data streams, leveraging distributed systems like Apache Kafka or Apache Flink to manage vast, continuous data flows efficiently. Infrastructure for stream processing emphasizes elasticity and fault tolerance, enabling seamless scaling across clusters while maintaining data integrity and processing speed.
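Horizontal scaling by partitioning can be illustrated with a keyed hash. The sketch below uses CRC32 as a stand-in for a stable hash function; it is an assumption chosen for simplicity, not the hash any particular framework uses, and the function names are hypothetical.

```python
import zlib


def partition_for(key, num_partitions):
    """Map a key to a partition index using a stable hash (CRC32 here)."""
    return zlib.crc32(key.encode("utf-8")) % num_partitions


def partition_events(events, num_partitions):
    """Distribute (key, value) events so each key always lands in one partition."""
    partitions = [[] for _ in range(num_partitions)]
    for key, value in events:
        partitions[partition_for(key, num_partitions)].append((key, value))
    return partitions


events = [("sensor-1", 1), ("sensor-2", 2), ("sensor-1", 3)]
parts = partition_events(events, 4)
```

Because every event for a given key goes to the same partition, per-key ordering is preserved and each partition can be processed by a separate worker.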

Choosing the Right Processing Method

Choosing the right processing method depends on the specific application requirements and data characteristics. Real-time processing excels in scenarios demanding immediate response and low latency, such as fraud detection or live monitoring, where each event is processed as it arrives. Stream processing is more suitable for continuous data flows requiring complex analytics over windows of time, like trend analysis or anomaly detection, enabling scalable and fault-tolerant handling of vast data streams.
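To make the windowed-analytics side of this choice concrete, here is a minimal sketch of anomaly detection over a rolling window of recent values. The class name, window size, and threshold are assumptions for illustration; production systems would typically rely on a stream processing framework's windowing support.

```python
from collections import deque
from statistics import mean, pstdev


class RollingAnomalyDetector:
    """Flag values that deviate strongly from a rolling window of recent values."""

    def __init__(self, window_size=5, threshold=3.0):
        self.window = deque(maxlen=window_size)
        self.threshold = threshold

    def observe(self, value):
        """Return True if `value` is far from the mean of the current window."""
        is_anomaly = False
        # Only judge once the window is full, so the statistics are meaningful.
        if len(self.window) == self.window.maxlen:
            mu = mean(self.window)
            sigma = pstdev(self.window)
            if sigma > 0 and abs(value - mu) > self.threshold * sigma:
                is_anomaly = True
        self.window.append(value)
        return is_anomaly


detector = RollingAnomalyDetector(window_size=5, threshold=3.0)
results = [detector.observe(v) for v in [10, 11, 9, 10, 11, 10, 50]]
```

Unlike a per-event rule, the decision here depends on state accumulated across earlier events, which is why this style of analysis fits the stream processing model.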

Future Trends in Data Processing Technologies

Real-time processing and stream processing are evolving rapidly with advancements in edge computing, AI integration, and 5G connectivity driving future trends. The adoption of adaptive machine learning models for instantaneous data analytics and decision-making enhances system responsiveness and accuracy. Increasing deployment of distributed architectures and serverless computing optimizes scalability and reduces latency in handling massive event streams.


libterm.com
