Scalability is a system's ability to handle increased workload efficiently without compromising performance or user experience. It depends on designing flexible infrastructure and software that can grow with demand. This article compares scalability with throughput and outlines strategies for achieving effective scalability in your projects.

Comparison Table: Scalability vs. Throughput

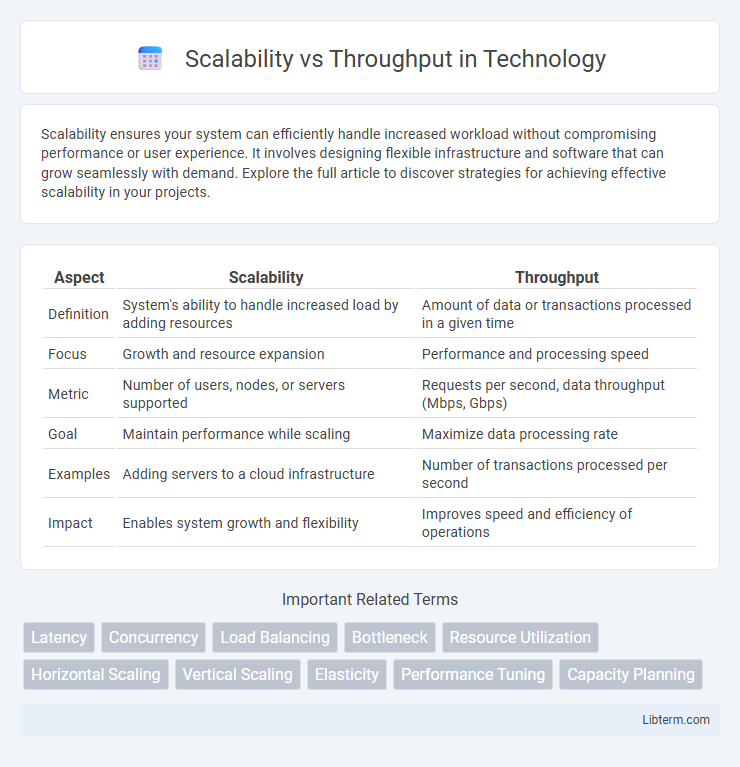

| Aspect | Scalability | Throughput |
|---|---|---|
| Definition | A system's ability to handle increased load by adding resources | Amount of data or number of transactions processed per unit of time |
| Focus | Growth and resource expansion | Performance and processing speed |
| Typical metrics | Number of users, nodes, or servers supported | Requests per second, data rate (Mbps, Gbps) |
| Goal | Maintain performance while scaling | Maximize processing rate |
| Example | Adding servers to a cloud infrastructure | Increasing the number of transactions processed per second |
| Impact | Enables system growth and flexibility | Improves speed and efficiency of operations |

Understanding Scalability: A Key Concept

Scalability refers to a system's ability to maintain acceptable performance as demand increases, typically by adding resources, so that it can handle higher workloads efficiently. Throughput measures the number of tasks or transactions processed per unit of time and reflects the system's current capacity. Understanding scalability means recognizing how system architecture, resource allocation, and load balancing determine whether throughput keeps rising as demand grows.
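One common way to quantify scalability is scaling efficiency: the throughput measured with N nodes divided by N times the single-node throughput, where 1.0 means perfectly linear scaling. The Python sketch below computes it; the `scaling_efficiency` helper and the load-test numbers are hypothetical, chosen only for illustration.

```python
def scaling_efficiency(baseline_rps: float, nodes: int, measured_rps: float) -> float:
    """Return measured throughput as a fraction of ideal linear scaling.

    Ideal linear scaling means `nodes` nodes deliver `nodes` times the
    single-node throughput, so 1.0 is perfectly linear.
    """
    if baseline_rps <= 0 or nodes <= 0:
        raise ValueError("baseline_rps and nodes must be positive")
    return measured_rps / (baseline_rps * nodes)


# Hypothetical load-test results: node count -> measured requests per second.
measurements = {1: 1000.0, 2: 1900.0, 4: 3400.0, 8: 5600.0}
baseline = measurements[1]
for n, rps in measurements.items():
    eff = scaling_efficiency(baseline, n, rps)
    print(f"{n} node(s): {rps:.0f} rps, efficiency {eff:.2f}")
```

With these made-up numbers, efficiency falls from 1.00 at one node to 0.70 at eight, the kind of curve that shows whether added resources are still paying off.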

Defining Throughput in Modern Systems

Throughput in modern systems measures the rate at which tasks or data units are processed within a given time frame, often quantified in transactions per second (TPS) or requests per second (RPS). It reflects the system's capacity to handle workload efficiently under current resource conditions, directly influencing user experience and performance benchmarks. Monitoring throughput enables optimization strategies to balance load distribution and resource allocation, ensuring scalable growth without compromising processing speed.
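Throughput is usually computed by counting completions within a time window. The following is a minimal Python sketch of a sliding-window meter; the `ThroughputMeter` class is a hypothetical helper, and it assumes completion timestamps arrive in non-decreasing order (for example, values taken from `time.monotonic()`).

```python
from collections import deque


class ThroughputMeter:
    """Counts completed requests in a sliding time window and reports RPS."""

    def __init__(self, window_seconds: float = 1.0) -> None:
        if window_seconds <= 0:
            raise ValueError("window_seconds must be positive")
        self.window = window_seconds
        self._completions = deque()  # completion timestamps, oldest first

    def record(self, timestamp: float) -> None:
        """Record one completed request at `timestamp` (seconds)."""
        self._completions.append(timestamp)

    def rate(self, now: float) -> float:
        """Requests per second over the window (now - window, now]."""
        # Drop completions that have fallen out of the window.
        while self._completions and self._completions[0] <= now - self.window:
            self._completions.popleft()
        return len(self._completions) / self.window


meter = ThroughputMeter(window_seconds=2.0)
for t in [0.1, 0.5, 0.9, 1.2, 1.8, 2.4]:
    meter.record(t)
print(meter.rate(now=2.5))  # 4 completions in the last 2 s -> 2.0
```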

Core Differences Between Scalability and Throughput

Scalability measures a system's capacity to handle an increasing workload by adding resources, while throughput quantifies the actual amount of work processed within a given time frame. Scalability focuses on potential growth and resource flexibility, whereas throughput emphasizes performance efficiency and speed under current conditions. Understanding this distinction is crucial for optimizing system design and achieving balanced resource allocation.
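Amdahl's law offers one rough way to see why adding resources (scalability) does not translate one-for-one into throughput: if a fraction p of the work can be spread across n nodes and the rest is serialized (for example, on a single shared database), the speedup is 1 / ((1 - p) + p / n). Treating it as a throughput model is a simplification, and the 95% parallel fraction below is an assumption, not a measured value.

```python
def amdahl_speedup(parallel_fraction: float, workers: int) -> float:
    """Amdahl's law: speedup = 1 / ((1 - p) + p / n)."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be in [0, 1]")
    if workers < 1:
        raise ValueError("workers must be >= 1")
    serial = 1.0 - parallel_fraction
    return 1.0 / (serial + parallel_fraction / workers)


# Assumption: 95% of each request's work can be spread across nodes.
for n in (1, 2, 4, 8, 16, 64):
    print(f"{n:>3} nodes -> {amdahl_speedup(0.95, n):.2f}x throughput")
```

However many nodes are added, the speedup in this model stays below 1 / (1 - p), or 20x for p = 0.95, so removing serialized work often matters more than adding servers.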

Why Scalability Matters for Growing Businesses

Scalability matters for growing businesses because it lets systems and processes handle increased demand without performance degradation, supporting sustained growth and customer satisfaction. Highly scalable systems reduce downtime and resource constraints, enabling expansion across markets and platforms. Throughput describes how quickly tasks are completed at the current load, but without scalability, rising load eventually creates bottlenecks, which makes scalability essential for long-term success.

The Role of Throughput in Performance Optimization

Throughput is central to performance optimization because it measures how many tasks or transactions a system completes per unit of time, making it a key indicator of efficiency. Throughput headroom matters when scaling an application: a system with spare capacity can absorb a growing workload without degrading response time or exhausting resources. Optimizing throughput involves tuning hardware, network bandwidth, and software concurrency to maximize processing capacity and support scalability goals.
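Little's Law (L = λW: the average number of requests in flight equals throughput times average latency) connects concurrency tuning to throughput. The sketch below rearranges it; the helper names and the 50 ms latency are hypothetical, and the law assumes a steady state, so in practice latency tends to rise as concurrency approaches saturation.

```python
def max_throughput(concurrency: int, latency_seconds: float) -> float:
    """Little's Law rearranged: throughput = concurrency / latency.

    With `concurrency` requests in flight, each taking `latency_seconds`,
    the system completes at most concurrency / latency requests per second.
    """
    if concurrency < 0 or latency_seconds <= 0:
        raise ValueError("concurrency must be >= 0 and latency must be > 0")
    return concurrency / latency_seconds


def required_concurrency(target_rps: float, latency_seconds: float) -> float:
    """Little's Law: in-flight requests needed to sustain target_rps."""
    if target_rps < 0 or latency_seconds <= 0:
        raise ValueError("target_rps must be >= 0 and latency must be > 0")
    return target_rps * latency_seconds


# Hypothetical service with 50 ms average latency per request.
print(max_throughput(concurrency=10, latency_seconds=0.05))
print(required_concurrency(target_rps=2000, latency_seconds=0.05))
```

With 10 requests in flight at 50 ms each, throughput tops out near 200 requests per second; sustaining 2,000 requests per second at the same latency would need about 100 concurrent requests.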

Common Bottlenecks Affecting Throughput

Common bottlenecks affecting throughput include CPU limits, memory bandwidth constraints, and network latency. Disk I/O speed often becomes the critical barrier in data-intensive applications, delaying both processing and data transfer. Inefficient software design and poor concurrency management, such as lock contention between threads, can cap throughput even on scalable infrastructure.
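In a serial request path, overall throughput can be no higher than the capacity of the slowest stage. This Python sketch picks out that stage; the `Stage` type, stage names, and capacities are hypothetical values loosely matching the CPU, memory, disk, and network bottlenecks listed above, and the model ignores contention between stages that share a resource.

```python
from dataclasses import dataclass


@dataclass
class Stage:
    name: str
    capacity_per_second: float  # max items this stage can process per second


def pipeline_throughput(stages: list[Stage]) -> tuple[float, str]:
    """A serial pipeline can go no faster than its slowest stage."""
    if not stages:
        raise ValueError("pipeline needs at least one stage")
    bottleneck = min(stages, key=lambda s: s.capacity_per_second)
    return bottleneck.capacity_per_second, bottleneck.name


# Hypothetical capacities for one request path.
stages = [
    Stage("cpu_parse", 12_000),
    Stage("memory_copy", 20_000),
    Stage("disk_write", 3_500),
    Stage("network_send", 8_000),
]
rate, name = pipeline_throughput(stages)
print(f"max throughput {rate:,.0f}/s, limited by {name}")
# -> max throughput 3,500/s, limited by disk_write
```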

Balancing Scalability and Throughput: Best Practices

Balancing scalability and throughput requires an architecture that absorbs increased load without sacrificing performance, which usually means distributing work efficiently across scalable resources such as cloud infrastructure, load balancers, and microservices. Asynchronous processing, caching, and database sharding raise throughput while keeping the system responsive under growing demand. Monitoring key performance metrics and applying auto-scaling policies lets capacity track traffic patterns, keeping scalability and throughput in balance.
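Auto-scaling policies are often target-tracking: the replica count is scaled in proportion to how far a metric sits from its target. The Kubernetes Horizontal Pod Autoscaler documents the formula desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue), skipping changes within a tolerance (0.1 by default). The Python function below is a standalone sketch of that idea, not the Kubernetes implementation; the replica bounds are hypothetical defaults.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 20,
    tolerance: float = 0.1,
) -> int:
    """Target-tracking scale decision modeled on the HPA formula:
    desired = ceil(current_replicas * current_metric / target_metric).
    """
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    ratio = current_metric / target_metric
    # Ignore small deviations so the replica count does not flap.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    # Clamp to the configured bounds.
    return max(min_replicas, min(max_replicas, desired))


# 4 replicas running at 90% CPU against a 60% target.
print(desired_replicas(4, current_metric=90, target_metric=60))  # -> 6
# 4 replicas running at 20% CPU against a 60% target.
print(desired_replicas(4, current_metric=20, target_metric=60))  # -> 2
```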

Real-World Examples: Scalability vs Throughput

Netflix exemplifies scalability by efficiently handling millions of simultaneous streams worldwide, while maximizing throughput by delivering high-definition content with minimal buffering. Amazon Web Services scales infrastructure dynamically to support fluctuating workloads, ensuring high throughput for data processing during peak times like Prime Day. In manufacturing, Tesla optimizes assembly lines for scalability to increase vehicle output without compromising throughput, maintaining production speed and quality control.

Measuring Success: Essential Metrics and Tools

Measuring success in scalability involves tracking system response time, resource utilization, and load handling capacity under increasing demand, whereas throughput measurement focuses on the number of transactions or data processed per unit of time. Essential metrics include latency, requests per second, CPU and memory usage for scalability, alongside throughput rate, bandwidth, and error rates for throughput assessment. Tools such as Apache JMeter, Grafana, and New Relic provide comprehensive monitoring and visualization, enabling precise performance analysis and optimization strategies.
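Tools such as Apache JMeter report these figures automatically, but the underlying arithmetic is simple. The Python sketch below summarizes raw load-test samples into throughput, error rate, and nearest-rank latency percentiles; the `Sample` record and `summarize` function are hypothetical, and nearest-rank is only one of several percentile definitions in use.

```python
import math
from dataclasses import dataclass


@dataclass
class Sample:
    latency_ms: float
    ok: bool  # False if the request returned an error


def percentile(sorted_values: list[float], pct: float) -> float:
    """Nearest-rank percentile on an already sorted list."""
    if not sorted_values:
        raise ValueError("no samples")
    rank = max(1, math.ceil(pct * len(sorted_values) / 100))
    return sorted_values[rank - 1]


def summarize(samples: list[Sample], duration_s: float) -> dict[str, float]:
    """Throughput counts all completed requests, successful or not."""
    if not samples or duration_s <= 0:
        raise ValueError("need samples and a positive duration")
    latencies = sorted(s.latency_ms for s in samples)
    errors = sum(1 for s in samples if not s.ok)
    return {
        "throughput_rps": len(samples) / duration_s,
        "error_rate": errors / len(samples),
        "p50_ms": percentile(latencies, 50),
        "p95_ms": percentile(latencies, 95),
        "p99_ms": percentile(latencies, 99),
    }


# Synthetic run: 100 requests over 10 s, latencies 1..100 ms, every 20th fails.
samples = [Sample(float(i), i % 20 != 0) for i in range(1, 101)]
print(summarize(samples, duration_s=10.0))
```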

Future Trends in Scalability and Throughput

Future trends in scalability emphasize artificial intelligence-driven resource management that dynamically adjusts system capacity to meet varying demand. Advances in edge computing and 5G networks enable higher throughput by processing data closer to the source, reducing latency and bandwidth bottlenecks. Predictive analytics and machine learning optimize load balancing, helping systems maintain both scalability and throughput as user requirements and data volumes continue to grow.

libterm.com