Conditional expectation is the expected value of a random variable given that a certain condition or event has occurred, providing a refined prediction based on available information. It plays a crucial role in probability theory and statistics, especially in areas like stochastic processes, Bayesian inference, and decision-making models.

Table of Comparison

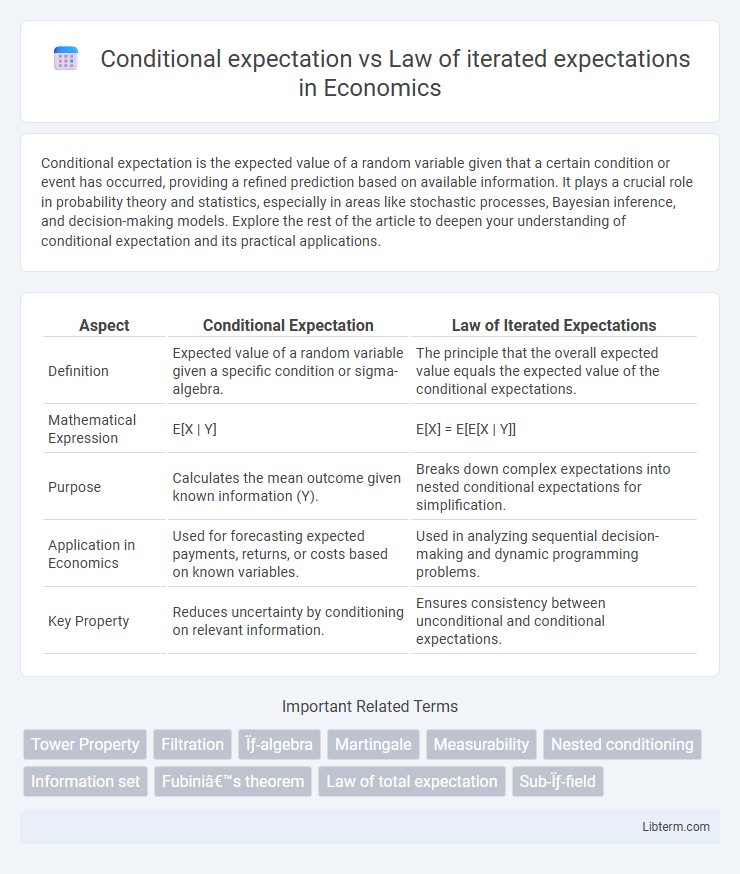

| Aspect | Conditional Expectation | Law of Iterated Expectations |
|---|---|---|
| Definition | Expected value of a random variable given a specific condition or sigma-algebra. | The principle that the overall expected value equals the expected value of the conditional expectations. |
| Mathematical Expression | E[X \| Y] | E[X] = E[E[X \| Y]] |
| Purpose | Calculates the mean outcome given known information (Y). | Breaks down complex expectations into nested conditional expectations for simplification. |
| Application in Economics | Used for forecasting expected payments, returns, or costs based on known variables. | Used in analyzing sequential decision-making and dynamic programming problems. |
| Key Property | Reduces uncertainty by conditioning on relevant information. | Ensures consistency between unconditional and conditional expectations. |

Introduction to Conditional Expectation

Conditional expectation represents the expected value of a random variable given a specific event or information set, capturing how uncertainty is updated when new data is available. It serves as the foundation for the Law of Iterated Expectations, which states that the unconditional expectation can be computed by taking the expected value of the conditional expectation across all possible conditions. This concept plays a critical role in probability theory and statistics, enabling more precise modeling of dependent random variables and sequential decision-making processes.

Defining the Law of Iterated Expectations

The Law of Iterated Expectations states that the expected value of a conditional expectation equals the unconditional expectation, expressed mathematically as E[E(Y|X)] = E(Y), provided E(Y) exists. In other words, averaging the conditional mean E(Y|X) over the distribution of X recovers the overall mean of Y, which links conditional and unconditional expectations in probability theory. The law forms the basis for understanding hierarchical expectations and is pivotal in fields such as econometrics and statistics.
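The identity can be checked exactly on a small discrete example. The sketch below uses a made-up joint distribution for (X, Y); the probabilities and the helper names `marginal_x` and `cond_exp_y_given_x` are illustrative assumptions, not part of the original article.

```python
from fractions import Fraction as F

# Hypothetical joint pmf P(X = x, Y = y); values are illustrative only.
joint = {
    (0, 1): F(1, 8), (0, 3): F(3, 8),
    (1, 2): F(1, 4), (1, 6): F(1, 4),
}

def marginal_x(joint):
    """Return P(X = x) as a dict by summing the joint pmf over y."""
    px = {}
    for (x, _), p in joint.items():
        px[x] = px.get(x, F(0)) + p
    return px

def cond_exp_y_given_x(joint):
    """Return E[Y | X = x] as a dict: x -> conditional mean of Y."""
    px = marginal_x(joint)
    weighted_sum = {}
    for (x, y), p in joint.items():
        weighted_sum[x] = weighted_sum.get(x, F(0)) + y * p
    return {x: weighted_sum[x] / px[x] for x in px}

px = marginal_x(joint)
e_y_given_x = cond_exp_y_given_x(joint)

# Left side: unconditional mean computed directly from the joint pmf.
e_y = sum(y * p for (_, y), p in joint.items())
# Right side: average the conditional means over the distribution of X.
lie = sum(e_y_given_x[x] * px[x] for x in px)

print(e_y_given_x)  # conditional means, one per value of X
print(e_y, lie)     # the two sides of the identity
```

Exact fractions are used instead of floats so that the two sides can be compared with `==` without rounding concerns.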

Mathematical Foundations and Notation

Conditional expectation, denoted as E(Y|X), represents the expected value of a random variable Y given another variable X, formalized through the Radon-Nikodym derivative in measure-theoretic probability. The Law of Iterated Expectations states that E(Y) = E[E(Y|X)], emphasizing the tower property of conditional expectations and the nested structure of σ-algebras. This fundamental identity ensures consistency in multistage conditioning and underpins many results in stochastic processes and Bayesian inference.
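For reference, the standard measure-theoretic statements can be written out as follows. The symbols $\mathcal{G}$ and $\mathcal{H}$ (sub-sigma-algebras of the underlying probability space) and $P$ (the probability measure) are notation introduced here for illustration, and $Y$ is assumed integrable. The conditional expectation $E[Y \mid \mathcal{G}]$ is the (almost surely unique) $\mathcal{G}$-measurable random variable satisfying

$$
\int_A E[Y \mid \mathcal{G}] \, dP = \int_A Y \, dP \quad \text{for all } A \in \mathcal{G}.
$$

The tower property for nested information sets $\mathcal{H} \subseteq \mathcal{G}$ reads

$$
E\big[\, E[Y \mid \mathcal{G}] \mid \mathcal{H} \,\big] = E[Y \mid \mathcal{H}].
$$

Taking $\mathcal{H}$ to be the trivial sigma-algebra and $\mathcal{G} = \sigma(X)$ gives the familiar form $E[E(Y \mid X)] = E(Y)$.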

Key Differences Between Conditional Expectation and LIE

Conditional expectation calculates the expected value of a random variable given specific information or events, focusing on a single conditioning event or sigma-algebra. The Law of Iterated Expectations (LIE) extends this concept by expressing the unconditional expectation as the expectation of the conditional expectation, effectively layering expectations across multiple stages of conditioning. Key differences include that conditional expectation is a function dependent on a conditioning sigma-algebra, whereas LIE is a fundamental theorem that ensures consistent averaging across nested information sets.

Practical Examples of Conditional Expectation

Conditional expectation quantifies the expected value of a random variable given specific information or an event, such as calculating the average sales revenue given the observed weather conditions. The Law of Iterated Expectations formalizes this by stating that the overall expectation equals the expectation of the conditional expectation, exemplified when predicting total insurance claims by first conditioning on policyholder age groups and then averaging across all groups. Practical applications span fields like finance, where portfolio returns are estimated conditioned on market states, and machine learning, where model predictions are refined by conditioning on observed features.
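The insurance example can be sketched numerically: condition on age group first, then average across groups using each group's share of policyholders. The group labels, shares, and mean claim amounts below are hypothetical figures chosen for illustration, as is the function name `expected_claim`.

```python
# Hypothetical portfolio: share of policyholders and mean annual claim per age group.
groups = {
    "18-30": {"share": 0.25, "mean_claim": 1200.0},
    "31-50": {"share": 0.50, "mean_claim": 800.0},
    "51+":   {"share": 0.25, "mean_claim": 1600.0},
}

def expected_claim(groups):
    """E[claim] = sum over groups of P(group) * E[claim | group]."""
    total_share = sum(g["share"] for g in groups.values())
    if abs(total_share - 1.0) > 1e-9:
        raise ValueError("group shares must sum to 1")
    return sum(g["share"] * g["mean_claim"] for g in groups.values())

overall = expected_claim(groups)

# A plain average of the group means ignores group sizes and is generally different.
unweighted = sum(g["mean_claim"] for g in groups.values()) / len(groups)

print(overall, unweighted)
```

The weighting by `share` is the outer expectation in the law; dropping it, as in `unweighted`, only gives the right answer when all groups are equally likely.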

Applications of the Law of Iterated Expectations

The Law of Iterated Expectations (LIE) simplifies complex probability problems by breaking down expectations into nested conditional expectations. In econometrics it is commonly used to show that regression estimators are unbiased, for example that the OLS estimator is unbiased when the error term has zero mean conditional on the regressors. In finance, LIE is used to price assets by evaluating expected returns given current information and iteratively conditioning on known variables. Risk management uses the law to calculate expected losses under different scenarios by sequentially conditioning on risk factors, which improves decision-making accuracy.
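The econometric use can be illustrated with the textbook unbiasedness argument for ordinary least squares. This is a sketch under assumptions that the article does not spell out: a linear model $y = X\beta + u$, an invertible $X'X$, and a zero conditional mean error, $E[u \mid X] = 0$.

$$
\hat{\beta} = (X'X)^{-1} X' y = \beta + (X'X)^{-1} X' u
$$

$$
E[\hat{\beta} \mid X] = \beta + (X'X)^{-1} X' \, E[u \mid X] = \beta
$$

$$
E[\hat{\beta}] = E\big[\, E[\hat{\beta} \mid X] \,\big] = \beta
$$

The last step is the Law of Iterated Expectations: the estimator is unbiased conditional on every realization of the regressors, so it is also unbiased unconditionally.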

Common Misconceptions and Pitfalls

A common misconception is to treat a conditional expectation as a single deterministic number, when it is in fact a random variable that depends on the conditioning sigma-algebra. The Law of Iterated Expectations (LIE) states that the expectation of a conditional expectation equals the overall expectation, yet a common pitfall is applying the law without checking that the information sets are nested: when conditioning twice, the result collapses to the conditional expectation given the coarser sigma-algebra only if that sigma-algebra is contained in the finer one. Misunderstanding these nuances can cause errors in statistical modeling and inference, particularly in hierarchical or sequential data structures where nested conditioning plays a crucial role.
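Both points can be made concrete on a finite example: the conditional expectation comes out as a function of the conditioning variable (here a dict), and nested conditioning collapses to the coarser information set. The joint distribution over (X, Y, Z) and the helper `cond_exp` are hypothetical and serve only as a sketch.

```python
from fractions import Fraction as F
from itertools import product

# Hypothetical joint pmf over outcomes (x, y, z); weights are illustrative.
outcomes = list(product([0, 1], [0, 1], [1, 4]))
weights = [1, 2, 3, 2, 2, 1, 1, 4]  # unnormalised, total 16
pmf = {o: F(w, 16) for o, w in zip(outcomes, weights)}

def cond_exp(pmf, value, key):
    """Return E[value(outcome) | key(outcome)] as a dict: key -> conditional mean."""
    mass, total = {}, {}
    for o, p in pmf.items():
        k = key(o)
        mass[k] = mass.get(k, F(0)) + p
        total[k] = total.get(k, F(0)) + value(o) * p
    return {k: total[k] / mass[k] for k in mass}

# Fine information: (X, Y). Coarse information: X alone.
e_z_fine = cond_exp(pmf, lambda o: o[2], lambda o: (o[0], o[1]))
e_z_coarse = cond_exp(pmf, lambda o: o[2], lambda o: o[0])

# Tower property: averaging the fine conditional mean within each value of X
# reproduces E[Z | X], because knowing X is contained in knowing (X, Y).
tower = cond_exp(pmf, lambda o: e_z_fine[(o[0], o[1])], lambda o: o[0])

print(e_z_coarse)  # one conditional mean per value of X, not a single number
print(tower)
```

Note that `e_z_coarse` holds a different value for each realization of X, which is the sense in which a conditional expectation is a random variable rather than a constant.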

Visualizing Conditional Expectation and LIE

Visualizing conditional expectation involves interpreting it as the average value of a random variable given specific information or conditions, often represented graphically as a conditional mean function or regression curve. The Law of Iterated Expectations (LIE) states that the overall expectation of a random variable equals the expectation of its conditional expectation given another variable, which can be visualized as a two-step averaging process, first conditioning on one variable and then averaging over the distribution of that variable. Graphical tools like contour plots, regression lines, or conditional density plots help illustrate how conditional expectations vary across different values, while demonstrating LIE highlights the layered structure of expectations in probabilistic models.

Real-world Use Cases in Data Science and Economics

Conditional expectation quantifies the expected value of a random variable given specific information, crucial for making data-driven predictions in machine learning models and risk assessment. The Law of Iterated Expectations ensures consistency in multistage decision-making processes by decomposing complex expectations into nested conditional expectations, enabling better model interpretability and sequential forecasting in economic policy analysis and time series modeling. In economics, these concepts underpin dynamic programming for optimal resource allocation, while data science applications leverage them for causal inference and improving reinforcement learning algorithms.

Summary and Further Reading

Conditional expectation represents the expected value of a random variable given a specific sigma-algebra or information set, serving as a fundamental concept in probability theory and statistics. The Law of Iterated Expectations (LIE) states that the unconditional expectation of a random variable equals the expectation of its conditional expectation, formally expressed as E(X) = E[E(X|Y)], ensuring consistency across nested information. For further reading, explore textbooks on probability theory such as "Probability and Measure" by Patrick Billingsley and research articles on martingales and sigma-algebras to deepen understanding of conditional structures and their applications.


libterm.com
