A formal grammar describes how the valid strings of a language are generated, while an automaton describes how those strings are recognized. Understanding both, and how they correspond, clarifies how compilers, parsers, and pattern matchers work. The rest of this article compares the two and shows where each is used.

Table of Comparison

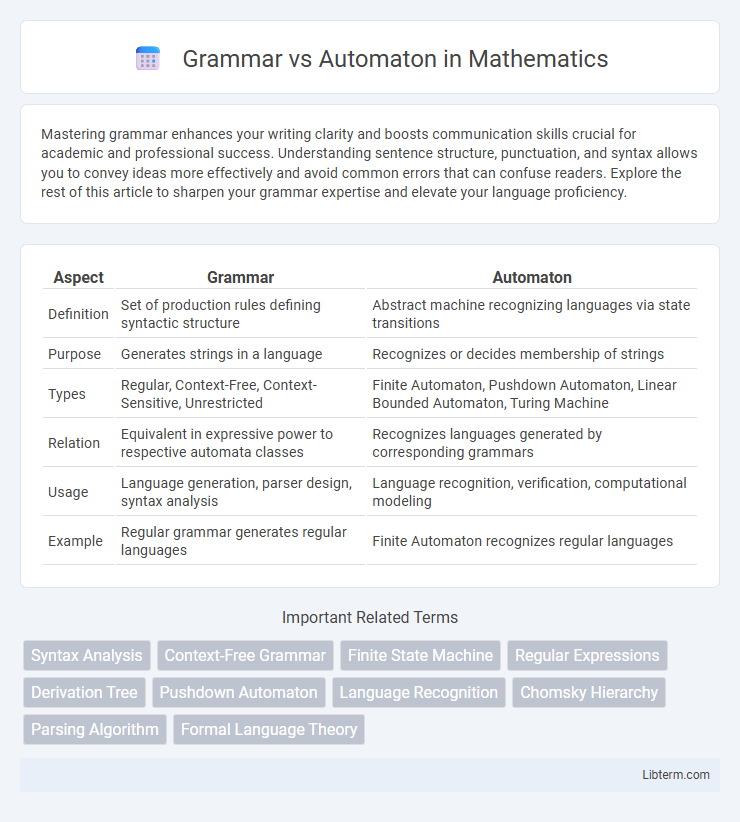

| Aspect | Grammar | Automaton |
|---|---|---|
| Definition | Set of production rules defining syntactic structure | Abstract machine recognizing languages via state transitions |
| Purpose | Generates strings in a language | Recognizes or decides membership of strings |
| Types | Regular, Context-Free, Context-Sensitive, Unrestricted | Finite Automaton, Pushdown Automaton, Linear Bounded Automaton, Turing Machine |
| Relation | Equivalent in expressive power to respective automata classes | Recognizes languages generated by corresponding grammars |
| Usage | Language generation, parser design, syntax analysis | Language recognition, verification, computational modeling |
| Example | Regular grammar generates regular languages | Finite Automaton recognizes regular languages |

Understanding Grammar: Definition and Types

A grammar is a set of production rules that defines the syntax of a language and generates its valid strings. The four types (regular, context-free, context-sensitive, and unrestricted) increase in expressive power in that order. These types matter in computational linguistics and automata theory because they correspond, respectively, to finite automata, pushdown automata, linear bounded automata, and Turing machines.
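
To make the generative view concrete, here is a minimal Python sketch that enumerates the strings of a small context-free grammar. The grammar `S -> a S b | (empty)`, the dictionary encoding, and the `generate` helper are illustrative assumptions, not taken from any particular library.

```python
from collections import deque

# Hypothetical toy grammar: S -> a S b | (empty string).
# Keys are nonterminals; every other symbol is treated as a terminal.
GRAMMAR = {"S": [["a", "S", "b"], []]}


def generate(grammar, start, max_len):
    """Return all terminal strings of length <= max_len derivable from start.

    Uses breadth-first leftmost derivation. The length-based pruning assumes
    the grammar cannot grow a form forever without adding terminals.
    """
    results = set()
    seen = {(start,)}
    queue = deque([(start,)])
    while queue:
        form = queue.popleft()
        # Locate the leftmost nonterminal, if any.
        idx = next((i for i, sym in enumerate(form) if sym in grammar), None)
        if idx is None:
            results.add("".join(form))  # only terminals left: a finished string
            continue
        for production in grammar[form[idx]]:
            new_form = form[:idx] + tuple(production) + form[idx + 1:]
            terminals = sum(1 for sym in new_form if sym not in grammar)
            if terminals <= max_len and new_form not in seen:
                seen.add(new_form)
                queue.append(new_form)
    return sorted(results, key=lambda s: (len(s), s))


print(generate(GRAMMAR, "S", 6))  # ['', 'ab', 'aabb', 'aaabbb']
```

The grammar never inspects an input; it only produces strings, which is the sense in which a grammar is generative.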

What is an Automaton? Key Concepts Explained

An automaton is a mathematical model used to represent and analyze the behavior of computational systems through states and transitions. Key concepts include states, which represent conditions or configurations, and transitions, which define rules for moving between states based on input symbols. Automata theory underpins the design of formal languages and supports the development of parsers, enabling efficient grammar recognition and processing.
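
The states and transitions described above can be sketched as a small deterministic finite automaton. The `DFA` class and the example machine, which accepts binary strings containing an even number of `1` symbols, are assumptions chosen for illustration.

```python
class DFA:
    """A deterministic finite automaton given as a transition table."""

    def __init__(self, transitions, start, accepting):
        self.transitions = transitions  # maps (state, symbol) -> next state
        self.start = start
        self.accepting = accepting

    def accepts(self, text):
        state = self.start
        for symbol in text:
            key = (state, symbol)
            if key not in self.transitions:
                return False  # no transition defined: reject
            state = self.transitions[key]
        return state in self.accepting


# Example machine (an assumption): even number of '1' symbols.
EVEN_ONES = DFA(
    transitions={
        ("even", "0"): "even",
        ("even", "1"): "odd",
        ("odd", "0"): "odd",
        ("odd", "1"): "even",
    },
    start="even",
    accepting={"even"},
)

print(EVEN_ONES.accepts("1001"))  # True
print(EVEN_ONES.accepts("10"))    # False
```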

Historical Evolution of Grammar and Automata Theory

The historical evolution of grammar and automata theory began with Noam Chomsky's 1956 introduction of generative grammar, which formalized syntactic structures using a hierarchy of grammars that correspond directly to classes of automata. This foundational link established that regular grammars align with finite automata, context-free grammars with pushdown automata, context-sensitive grammars with linear bounded automata, and unrestricted grammars with Turing machines, enabling computational linguistics and formal language processing to advance significantly. The development of automata theory further transformed the study of language by providing mathematical models for parsing and recognizing languages, thus bridging theoretical computer science and linguistics.

Grammar vs Automaton: Core Differences

A grammar defines a set of rules for generating strings in a language, while an automaton is a computational model that recognizes or accepts those strings. Grammars are classified as regular, context-free, context-sensitive, or unrestricted, and these types correspond to finite automata, pushdown automata, linear bounded automata, and Turing machines, respectively. The core difference is that a grammar is generative whereas an automaton recognizes and processes input, which makes them complementary tools in formal language theory.

The Role of Formal Languages in Grammar and Automata

Formal languages serve as the foundation for both grammar and automata, defining sets of strings structured by specific syntactic rules. Grammars, such as context-free grammars, generate languages by applying production rules to produce valid strings, while automata, like finite automata and pushdown automata, recognize these languages by processing input strings through a series of states. The interplay between formal languages, grammars, and automata enables rigorous analysis and design of computational systems, programming languages, and parsing algorithms.
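
As a rough illustration of a pushdown automaton recognizing a context-free language, the sketch below checks balanced brackets, the language of a grammar such as `S -> ( S ) S | [ S ] S | (empty)`. The bracket alphabet and the function name `pda_accepts` are assumptions; the explicit stack plays the role of the automaton's pushdown store.

```python
# Closing bracket -> the opening bracket it must match (illustrative alphabet).
PAIRS = {")": "(", "]": "["}
OPENERS = set(PAIRS.values())


def pda_accepts(text):
    """Simulate a one-state deterministic pushdown automaton for balanced brackets."""
    stack = []
    for symbol in text:
        if symbol in OPENERS:
            stack.append(symbol)  # push on an opening bracket
        elif symbol in PAIRS:
            # pop on a closing bracket; reject on empty stack or mismatch
            if not stack or stack.pop() != PAIRS[symbol]:
                return False
        else:
            return False  # symbol outside the alphabet
    return not stack  # accept only if every opener was closed


print(pda_accepts("([])()"))  # True
print(pda_accepts("([)]"))    # False
```

A finite automaton has no stack, so it cannot track arbitrarily deep nesting; that extra memory is what separates the two classes.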

Applications of Grammar in Computer Science

Context-free grammars play a crucial role in compiler design by defining the syntax rules for programming languages, enabling efficient parsing and error detection. Regular grammars are widely used in lexical analysis to tokenize input strings, making them essential in constructing finite automata for pattern recognition. Formal grammars also underpin natural language processing algorithms, facilitating the development of language parsers and machine translation systems.
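
Lexical analysis with regular patterns might look like the following sketch, which uses Python's standard `re` module. The token names and patterns are assumptions for a toy expression language. Note also that `re` is a backtracking matcher rather than a compiled finite automaton, although the patterns used here are regular.

```python
import re

# Hypothetical token classes for a toy expression language.
TOKEN_SPEC = [
    ("NUMBER", r"\d+"),
    ("IDENT", r"[A-Za-z_]\w*"),
    ("OP", r"[+\-*/=()]"),
    ("SKIP", r"\s+"),
    ("MISMATCH", r"."),  # any other single character is an error
]
TOKEN_RE = re.compile(
    "|".join(f"(?P<{name}>{pattern})" for name, pattern in TOKEN_SPEC)
)


def tokenize(source):
    """Split source into (kind, text) pairs, skipping whitespace."""
    tokens = []
    for match in TOKEN_RE.finditer(source):
        kind = match.lastgroup
        if kind == "SKIP":
            continue
        if kind == "MISMATCH":
            raise ValueError(
                f"unexpected character {match.group()!r} at position {match.start()}"
            )
        tokens.append((kind, match.group()))
    return tokens


print(tokenize("x = 42 + y1"))
# [('IDENT', 'x'), ('OP', '='), ('NUMBER', '42'), ('OP', '+'), ('IDENT', 'y1')]
```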

Real-World Use Cases of Automata

Automata theory plays a crucial role in designing and optimizing software for pattern recognition, such as lexical analyzers in compilers that tokenize programming languages based on grammar rules. Finite automata underpin applications like network protocol analysis, where state machines model communication sequences for error detection and synchronization. Automata also drive text search algorithms that match strings rapidly within large datasets. In each case the automaton is the executable counterpart of a grammatical description: the grammar says which strings are valid, and the automaton checks them.
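
One way to picture automaton-based text search is a string-matching automaton: state `q` means "the last `q` characters read match the first `q` characters of the pattern". The sketch below builds the transition table naively for clarity. The names `build_matcher` and `find_all` are assumptions, and deriving the alphabet from the text is a simplification that a production matcher would avoid.

```python
def build_matcher(pattern, alphabet):
    """Build the transition table of a string-matching automaton for pattern."""
    m = len(pattern)
    table = [{} for _ in range(m + 1)]
    for state in range(m + 1):
        for symbol in alphabet:
            candidate = pattern[:state] + symbol
            # Next state: length of the longest pattern prefix
            # that is also a suffix of what has been read.
            k = min(m, len(candidate))
            while k > 0 and not candidate.endswith(pattern[:k]):
                k -= 1
            table[state][symbol] = k
    return table


def find_all(pattern, text):
    """Return the start index of every (possibly overlapping) match."""
    if not pattern:
        raise ValueError("pattern must be non-empty")
    table = build_matcher(pattern, set(pattern) | set(text))
    state, hits = 0, []
    for i, symbol in enumerate(text):
        state = table[state][symbol]
        if state == len(pattern):  # reached the accepting state
            hits.append(i - len(pattern) + 1)
    return hits


print(find_all("aba", "ababa"))  # [0, 2]
```

Once the table is built, the scan reads each character of the text exactly once, which is the source of the speed in this style of matching.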

How Grammars and Automata Interact

Grammars define formal languages through production rules that generate strings, while automata provide computational models that recognize these languages by processing input symbols. Context-free grammars correspond to pushdown automata, enabling parsing of nested structures such as programming languages, whereas regular grammars align with finite automata suitable for simpler pattern recognition. The interaction between grammars and automata underpins compiler design, enabling syntax analysis through automaton-based parsing algorithms derived from grammar rules.
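
The correspondence between regular grammars and finite automata can be sketched by converting a right-linear grammar into a nondeterministic finite automaton: each nonterminal becomes a state and each production becomes a transition. The encoding below is an assumption made for this example: a production `A -> aB` is written `("a", "B")`, `A -> a` is `("a", None)`, and `A -> (empty)` is `("", None)`.

```python
FINAL = "<final>"  # hypothetical extra accepting state for productions A -> a

# Toy right-linear grammar for a*b+ :  S -> aS | bA,  A -> bA | (empty)
GRAMMAR = {
    "S": [("a", "S"), ("b", "A")],
    "A": [("b", "A"), ("", None)],
}


def grammar_to_nfa(grammar):
    """Turn a right-linear grammar into NFA transitions and accepting states."""
    delta = {}
    accepting = {FINAL}
    for head, productions in grammar.items():
        for terminal, target in productions:
            if terminal == "":
                accepting.add(head)  # A -> (empty) makes A accepting
            else:
                next_state = FINAL if target is None else target
                delta.setdefault((head, terminal), set()).add(next_state)
    return delta, accepting


def nfa_accepts(delta, accepting, start, text):
    """Simulate the NFA by tracking the set of currently reachable states."""
    current = {start}
    for symbol in text:
        current = {t for s in current for t in delta.get((s, symbol), ())}
    return bool(current & accepting)


delta, accepting = grammar_to_nfa(GRAMMAR)
print(nfa_accepts(delta, accepting, "S", "aabb"))  # True
print(nfa_accepts(delta, accepting, "S", "aba"))   # False
```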

Limitations and Challenges in Grammar and Automaton Approaches

Grammar-based approaches, such as context-free grammars, struggle to capture the ambiguities and complex dependencies of natural language, resulting in incomplete or overly rigid representations. Automaton-based models, including finite automata and pushdown automata, cannot handle context-sensitive language features and often require extensive state expansion, leading to scalability challenges. Finite automata cannot track unbounded nesting, and context-free models cannot express some long-distance dependencies found in natural languages, which restricts the applicability of both approaches in advanced linguistic applications.
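
The state expansion mentioned above can be made concrete with the subset construction, which converts a nondeterministic automaton into a deterministic one. The sketch below uses a standard textbook language, binary strings whose k-th symbol from the end is `1`, as an assumed example: the NFA needs k + 1 states, while the reachable DFA has 2^k. The helper names are hypothetical.

```python
from collections import deque


def kth_from_end_nfa(k):
    """NFA (states 0..k) for binary strings whose k-th symbol from the end is '1'."""
    delta = {(0, "0"): {0}, (0, "1"): {0, 1}}  # state 0 guesses where the suffix starts
    for i in range(1, k):
        delta[(i, "0")] = {i + 1}
        delta[(i, "1")] = {i + 1}
    return delta, 0, {k}


def count_dfa_states(delta, start, alphabet="01"):
    """Count the DFA states reachable under the subset construction."""
    start_set = frozenset({start})
    seen = {start_set}
    queue = deque([start_set])
    while queue:
        current = queue.popleft()
        for symbol in alphabet:
            nxt = frozenset(
                t for s in current for t in delta.get((s, symbol), ())
            )
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return len(seen)


for k in (3, 5, 8):
    delta, start, _accepting = kth_from_end_nfa(k)
    print(k + 1, "NFA states ->", count_dfa_states(delta, start), "DFA states")
```

Each extra symbol of lookback doubles the deterministic state count, which is one reason automaton-based models can become hard to scale.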

Future Trends: Advancements in Grammar and Automata Research

Future advancements in grammar and automata research emphasize the integration of machine learning techniques to enhance syntactic parsing and language modeling. Neural network-driven automata models are expected to improve contextual understanding, enabling more accurate natural language processing applications. Continued work on grammar refinement and automata optimization will drive innovations in computational linguistics and AI language systems.

