Non-convex shapes or functions are characterized by regions where a line segment between two points in the set lies partially outside the set, leading to complex geometries and challenges in optimization problems. Understanding non-convexity is crucial in fields such as machine learning, economics, and engineering, where solutions often require advanced techniques beyond those used for convex cases. Dive into the rest of the article to explore the implications of non-convexity and how it affects your problem-solving approaches.

Table of Comparison

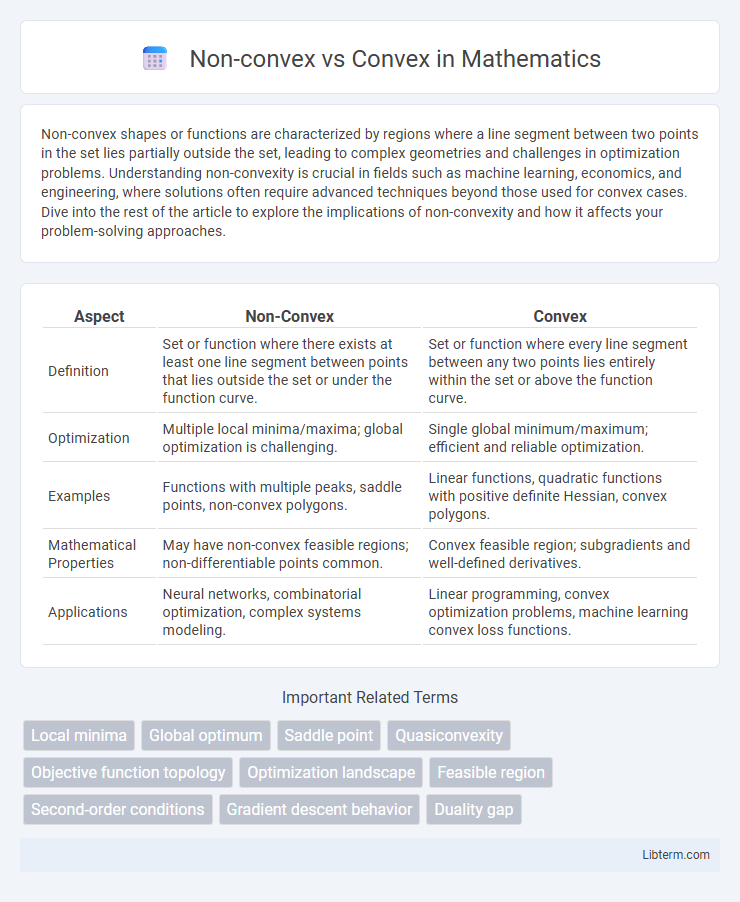

| Aspect | Non-Convex | Convex |

|---|---|---|

| Definition | Set or function where there exists at least one line segment between points that lies outside the set or under the function curve. | Set or function where every line segment between any two points lies entirely within the set or above the function curve. |

| Optimization | Multiple local minima/maxima; global optimization is challenging. | Single global minimum/maximum; efficient and reliable optimization. |

| Examples | Functions with multiple peaks, saddle points, non-convex polygons. | Linear functions, quadratic functions with positive definite Hessian, convex polygons. |

| Mathematical Properties | May have non-convex feasible regions; non-differentiable points common. | Convex feasible region; subgradients and well-defined derivatives. |

| Applications | Neural networks, combinatorial optimization, complex systems modeling. | Linear programming, convex optimization problems, machine learning convex loss functions. |

Introduction to Convex and Non-Convex Functions

Convex functions are defined by the property that a line segment between any two points on the function's graph lies above or on the graph, ensuring a single global minimum which simplifies optimization problems. Non-convex functions lack this property, often containing multiple local minima and maxima, making them more challenging for optimization algorithms to solve efficiently. Understanding the distinction between convex and non-convex functions is crucial in fields like machine learning, economics, and operations research, where optimization plays a central role.

Defining Convexity in Mathematical Terms

Convexity in mathematical terms is defined by a set or function where, for any two points within the domain, the line segment connecting them lies entirely within the set or under the graph of the function. A convex set satisfies the condition that for all points x and y, and any l in [0,1], the point lx + (1-l)y is also in the set, ensuring no indentations or "dips." Non-convex sets or functions violate this property, allowing for regions where line segments between two points partially lie outside the set or above the function graph, leading to multiple local minima in optimization contexts.

Non-Convex Functions: Key Characteristics

Non-convex functions exhibit multiple local minima and maxima, making optimization challenging due to the presence of numerous saddle points and local optima. These functions do not satisfy the convexity property, meaning the line segment between any two points on the graph may lie below or above the function curve. Non-convex optimization problems are common in machine learning, deep learning, and complex systems, requiring advanced algorithms like stochastic gradient descent or evolutionary methods for effective solutions.

Geometric Interpretation of Convex vs Non-Convex

Convex sets in geometry are defined by the property that any line segment connecting two points within the set lies entirely inside the set, forming shapes like polygons without indentations or holes. Non-convex sets, by contrast, contain at least one line segment between two points that passes outside the shape, creating indentations or disjoint regions. This geometric distinction critically affects optimization, as convex problems guarantee global minima while non-convex problems may have multiple local minima, complicating solution strategies.

Importance in Optimization Problems

Convex optimization problems guarantee global optima due to their well-defined structure, making them crucial in fields like machine learning and operations research. Non-convex problems, while more complex and prone to local minima, represent real-world scenarios such as neural network training and energy systems optimization. Understanding the distinction enables selecting appropriate algorithms, improving solution accuracy and computational efficiency in diverse applications.

Algorithms for Convex Optimization

Convex optimization algorithms leverage the property that any local minimum is a global minimum, ensuring convergence to the optimal solution efficiently using methods like gradient descent, interior-point methods, and proximal algorithms. Non-convex optimization problems pose challenges due to multiple local minima and saddle points, often requiring heuristic or metaheuristic approaches such as genetic algorithms or simulated annealing. Advanced convex optimization techniques exploit strong duality and KKT conditions to solve high-dimensional problems in machine learning, signal processing, and control systems with guaranteed optimality.

Challenges in Non-Convex Optimization

Non-convex optimization presents significant challenges due to the presence of multiple local minima, saddle points, and flat regions, which complicate convergence to a global optimum. Unlike convex problems where any local minimum is global, non-convex problems require advanced techniques such as stochastic gradient methods, initialization strategies, and heuristic algorithms to navigate complex landscapes effectively. The inherent difficulty in guaranteeing solution optimality and stability makes non-convex optimization crucial yet challenging in fields like machine learning, signal processing, and operational research.

Applications in Machine Learning and Data Science

Convex optimization problems dominate machine learning due to their guaranteed global optima and efficient solvability, particularly in support vector machines, logistic regression, and linear programming. Non-convex problems arise in deep learning, where neural network training involves highly non-convex loss landscapes with many local minima and saddle points, necessitating heuristic methods like stochastic gradient descent. Understanding problem convexity helps data scientists select appropriate algorithms, balancing computational complexity and solution quality for applications such as clustering, matrix factorization, and reinforcement learning.

Advantages and Limitations of Convex and Non-Convex Approaches

Convex approaches benefit from guaranteed global optima and efficient solvability due to their well-defined mathematical properties, making them ideal for problems like linear programming and convex optimization. Non-convex approaches, while often more computationally challenging and prone to local minima, offer greater flexibility and expressiveness for complex problems such as deep learning and combinatorial optimization. The key limitation of convex methods lies in their inability to model intricate, real-world scenarios requiring non-linear representations, whereas non-convex methods may suffer from convergence issues and higher computational costs.

Future Trends and Research Directions

Future trends in optimization emphasize developing advanced algorithms for non-convex problems, leveraging machine learning and deep learning to handle complex, high-dimensional data far beyond traditional convex frameworks. Research is increasingly exploring hybrid models that integrate convex optimization techniques with non-convex approaches to balance computational efficiency and solution quality. Emerging directions also focus on scalable methods for global optimization in non-convex landscapes, aiming to solve real-world problems in fields like artificial intelligence, finance, and engineering more effectively.

Non-convex Infographic

libterm.com

libterm.com