An eigenvector is a non-zero vector that a linear transformation maps to a scalar multiple of itself, so the line it spans is left invariant by that transformation. Eigenvectors play a central role in physics, computer science, and data analysis, particularly in principal component analysis and stability studies. The rest of this article compares eigenvectors with eigenvalues and shows how the two work together.

Table of Comparison

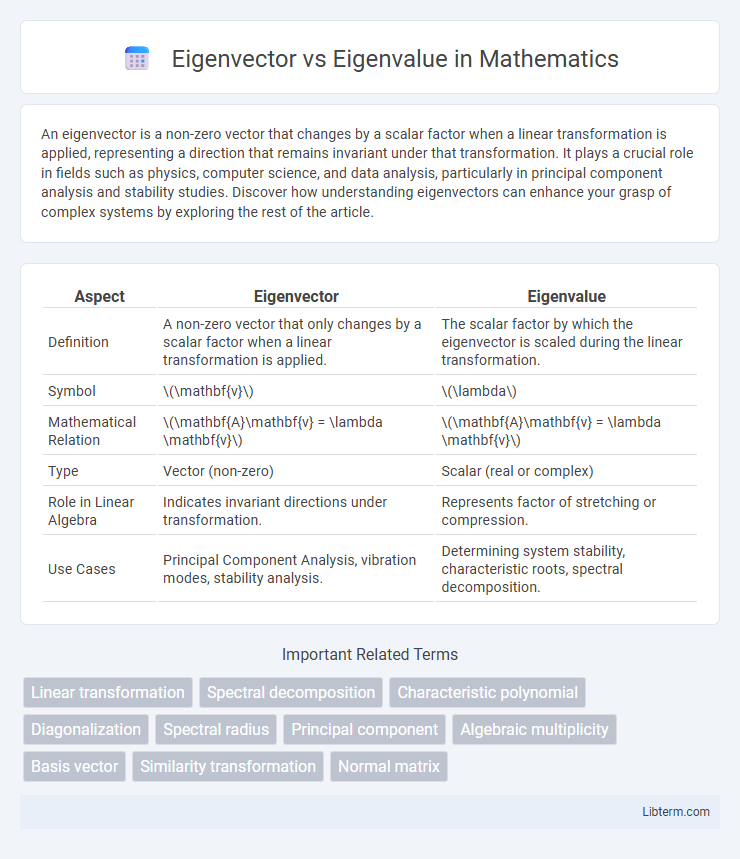

| Aspect | Eigenvector | Eigenvalue |
|---|---|---|
| Definition | A non-zero vector that only changes by a scalar factor when a linear transformation is applied. | The scalar factor by which the eigenvector is scaled during the linear transformation. |
| Symbol | \(\mathbf{v}\) | \(\lambda\) |
| Mathematical Relation | \(\mathbf{A}\mathbf{v} = \lambda \mathbf{v}\) | \(\mathbf{A}\mathbf{v} = \lambda \mathbf{v}\) |
| Type | Vector (non-zero) | Scalar (real or complex) |
| Role in Linear Algebra | Indicates invariant directions under transformation. | Represents factor of stretching or compression. |
| Use Cases | Principal Component Analysis, vibration modes, stability analysis. | Determining system stability, characteristic roots, spectral decomposition. |

Introduction to Eigenvectors and Eigenvalues

Eigenvectors are non-zero vectors that, when multiplied by a square matrix, are mapped to a scalar multiple of themselves: they stay on the same line through the origin, although a negative eigenvalue reverses their orientation. Eigenvalues are the scalars associated with these eigenvectors, giving the factor by which each eigenvector is scaled during the transformation. Understanding eigenvectors and eigenvalues is crucial for applications in systems of differential equations, stability analysis, and dimensionality reduction techniques like Principal Component Analysis (PCA).

Defining Eigenvectors: Meaning and Significance

Eigenvectors are non-zero vectors that, when multiplied by a square matrix, yield a scalar multiple of themselves, so they mark the intrinsic directions preserved by the transformation. Their significance lies in revealing fundamental properties of linear transformations, such as identifying invariant subspaces and, when a matrix has a full set of linearly independent eigenvectors, simplifying matrix operations through diagonalization. Eigenvectors appear in many applications, including stability analysis, vibration modes in engineering, and principal component analysis in data science.

What Are Eigenvalues? Core Concepts Explained

Eigenvalues are scalar values that give the factor by which an eigenvector is scaled during a linear transformation represented by a matrix. They play a crucial role in matrix theory and have applications in stability analysis, quantum mechanics, and principal component analysis (PCA). Determining eigenvalues involves solving the characteristic equation \(\det(\mathbf{A} - \lambda \mathbf{I}) = 0\), where \(\mathbf{A}\) is the matrix, \(\mathbf{I}\) is the identity matrix, and the solutions \(\lambda\) are the eigenvalues.

Mathematical Representation of Eigenvectors and Eigenvalues

Eigenvectors are non-zero vectors that change by only a scalar factor when a linear transformation is applied, mathematically represented as \(\mathbf{A}\mathbf{v} = \lambda \mathbf{v}\), where \(\mathbf{A}\) is a square matrix, \(\mathbf{v}\) is the eigenvector, and \(\lambda\) is the corresponding eigenvalue. The eigenvalue \(\lambda\) is a scalar giving the factor by which the vector is scaled under the transformation, while the eigenvector \(\mathbf{v}\) points in the direction that remains invariant. Solving the characteristic equation \(\det(\mathbf{A} - \lambda \mathbf{I}) = 0\) yields the eigenvalues, and substituting each eigenvalue back into \((\mathbf{A} - \lambda \mathbf{I})\mathbf{v} = \mathbf{0}\) produces the associated eigenvectors.
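
As a small worked illustration of these two steps, consider a 2×2 symmetric matrix chosen only for this example (it does not come from the article). The characteristic equation gives the eigenvalues, and each eigenvalue then gives an eigenvector, determined only up to a non-zero scalar multiple:

\[
\mathbf{A} = \begin{pmatrix} 2 & 1 \\ 1 & 2 \end{pmatrix}, \qquad
\det(\mathbf{A} - \lambda \mathbf{I}) = (2 - \lambda)^2 - 1 = \lambda^2 - 4\lambda + 3 = (\lambda - 1)(\lambda - 3) = 0
\]

\[
\lambda_1 = 1: \quad (\mathbf{A} - \mathbf{I})\mathbf{v} = \begin{pmatrix} 1 & 1 \\ 1 & 1 \end{pmatrix}\mathbf{v} = \mathbf{0}
\;\Rightarrow\; \mathbf{v}_1 = \begin{pmatrix} 1 \\ -1 \end{pmatrix}
\]

\[
\lambda_2 = 3: \quad (\mathbf{A} - 3\mathbf{I})\mathbf{v} = \begin{pmatrix} -1 & 1 \\ 1 & -1 \end{pmatrix}\mathbf{v} = \mathbf{0}
\;\Rightarrow\; \mathbf{v}_2 = \begin{pmatrix} 1 \\ 1 \end{pmatrix}
\]

Checking one pair directly: \(\mathbf{A}\mathbf{v}_2 = (3, 3)^T = 3\,\mathbf{v}_2\), as required by \(\mathbf{A}\mathbf{v} = \lambda \mathbf{v}\).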

How to Calculate Eigenvectors and Eigenvalues

To calculate eigenvalues, solve the characteristic equation \(\det(\mathbf{A} - \lambda \mathbf{I}) = 0\), where \(\mathbf{A}\) is a square matrix, \(\lambda\) represents the eigenvalues, and \(\mathbf{I}\) is the identity matrix of the same size as \(\mathbf{A}\). Once the eigenvalues are determined, substitute each \(\lambda\) back into the equation \((\mathbf{A} - \lambda \mathbf{I})\mathbf{v} = \mathbf{0}\) and solve for the corresponding eigenvector \(\mathbf{v}\), which amounts to finding the null space of the matrix \(\mathbf{A} - \lambda \mathbf{I}\). This process relies on standard linear algebra techniques: matrix operations, determinant calculation, and solving systems of linear equations.
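
In practice the calculation is usually delegated to a numerical library rather than done by hand. The sketch below assumes NumPy is available and uses an example matrix chosen for illustration; `np.linalg.eig` relies on a numerical routine rather than the determinant method described above, so it is a way to check results, not a transcription of the hand procedure. The helper name `residuals` is hypothetical.

```python
import numpy as np

# Example matrix chosen for illustration (not taken from the article).
A = np.array([[2.0, 1.0],
              [1.0, 2.0]])

# np.linalg.eig returns the eigenvalues and a matrix whose COLUMNS are
# the corresponding (unit-length) eigenvectors.
eigenvalues, eigenvectors = np.linalg.eig(A)


def residuals(A, eigenvalues, eigenvectors):
    """Return ||A v - lambda v|| for each eigenpair; values near 0 confirm the pair."""
    return [
        float(np.linalg.norm(A @ eigenvectors[:, i] - eigenvalues[i] * eigenvectors[:, i]))
        for i in range(len(eigenvalues))
    ]


for lam, res in zip(eigenvalues, residuals(A, eigenvalues, eigenvectors)):
    print(f"lambda = {lam:.4f}, residual = {res:.2e}")
```

The order in which `np.linalg.eig` returns eigenvalues is not guaranteed, so sort them explicitly if the order matters.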

Key Differences Between Eigenvectors and Eigenvalues

Eigenvectors are non-zero vectors that change only by a scalar factor when a linear transformation is applied, while eigenvalues are those scalar factors, describing the amount of stretching or compression. Eigenvectors provide the directions of invariant subspaces under the transformation, whereas eigenvalues quantify the effect of the transformation along those directions. The key difference is that eigenvectors define the axes of the transformation, and eigenvalues measure how much the transformation stretches or shrinks vectors along those axes.

Real-World Applications: Eigenvectors vs Eigenvalues

Eigenvectors identify the directions along which a linear transformation acts by pure stretching or compression, while eigenvalues quantify the size of that effect, and both are essential in real-world applications. In facial recognition, eigenfaces are eigenvectors of the covariance matrix of a set of face images and serve as key features. In structural engineering, eigenvalues indicate natural frequencies of vibration, and the corresponding eigenvectors reveal mode shapes, which are critical for predicting resonant behavior in buildings and bridges. In finance, principal component analysis of an asset covariance matrix uses eigenvalues to measure how much variance (risk) each component carries and eigenvectors to describe the asset weights of those components, which can inform allocation decisions.

Importance in Machine Learning and Data Science

Eigenvectors and eigenvalues are fundamental in machine learning and data science for dimensionality reduction techniques like Principal Component Analysis (PCA), where the eigenvectors of the data covariance matrix identify the principal directions of variance and enable a more compact data representation. The eigenvalues quantify the variance along each eigenvector, which helps prioritize the components that contribute most to the structure of the data and improves model interpretability. This spectral decomposition supports noise reduction and feature extraction, and it underlies algorithms for clustering, recommendation systems, and neural network optimization.
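
A minimal sketch of PCA through an eigendecomposition of the covariance matrix follows, assuming NumPy and synthetic data generated only for this illustration. Production code would more commonly use an SVD-based routine; the variable names here are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)
# Synthetic 2-D data, stretched along the first axis (illustrative only).
X = rng.normal(size=(500, 2)) * np.array([3.0, 0.5])

# Center the data and compute the sample covariance matrix (features in columns).
Xc = X - X.mean(axis=0)
cov = np.cov(Xc, rowvar=False)

# eigh is intended for symmetric matrices and returns eigenvalues in ascending order.
eigvals, eigvecs = np.linalg.eigh(cov)

# Reorder so the component with the largest variance comes first.
order = np.argsort(eigvals)[::-1]
eigvals, eigvecs = eigvals[order], eigvecs[:, order]

# Each eigenvalue is the variance along its eigenvector.
explained_ratio = eigvals / eigvals.sum()

# Project the centered data onto the first principal component.
projected = Xc @ eigvecs[:, :1]

print("explained variance ratio:", explained_ratio)
print("projected shape:", projected.shape)
```

The variance of the projected data equals the leading eigenvalue, which is the sense in which eigenvalues report explained variance.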

Common Misconceptions and Clarifications

Eigenvectors and eigenvalues are often confused, but eigenvectors are non-zero vectors that a linear transformation keeps on the same line through the origin, whereas eigenvalues are the scalar factors by which those eigenvectors are scaled. A common misconception is that an eigenvalue is the length of its eigenvector; in fact eigenvalues are scaling factors, not vector lengths. Any non-zero scalar multiple of an eigenvector is again an eigenvector for the same eigenvalue, so eigenvectors can have any non-zero magnitude. Eigenvalues can also be negative or complex, even for a matrix with real entries, and keeping this in mind helps prevent misunderstanding in matrix analysis and applications.
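
Both clarifications can be checked numerically. The sketch below assumes NumPy and uses two small matrices chosen for illustration: a symmetric matrix with a known eigenpair, and a 90-degree rotation, which has no real invariant direction and therefore has complex eigenvalues.

```python
import numpy as np

# Illustrative matrix; v = (1, 1) is an eigenvector with eigenvalue 3.
A = np.array([[2.0, 1.0],
              [1.0, 2.0]])
v = np.array([1.0, 1.0])

# Rescaling v by any non-zero factor c leaves the eigenvalue unchanged.
scaled_ok = all(
    np.allclose(A @ (c * v), 3.0 * (c * v)) for c in (0.5, -2.0, 10.0)
)

# A 90-degree rotation in the plane is a real matrix with complex eigenvalues.
R = np.array([[0.0, -1.0],
              [1.0,  0.0]])
rot_eigenvalues = np.linalg.eigvals(R)

print("rescaled vectors are still eigenvectors:", scaled_ok)
print("rotation eigenvalues:", rot_eigenvalues)
```

The rotation's eigenvalues are the purely imaginary pair \(\pm i\), which reflects the fact that no real vector keeps its direction under a quarter turn.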

Conclusion: Choosing Between Eigenvector and Eigenvalue

Eigenvectors and eigenvalues are not alternatives: they come in pairs, and which one to focus on depends on the question being asked. Eigenvalues quantify how strongly the transformation scales, while eigenvectors reveal the direction associated with that scaling. For dimensionality reduction techniques like PCA, eigenvectors identify the principal components that capture data variance, whereas eigenvalues indicate the variance explained by each component. Understanding both is essential for accurately interpreting linear transformations, stability analysis, and feature extraction in machine learning and engineering.


libterm.com
