A basis of a vector space is a set of linearly independent vectors that spans the space, so every vector in the space can be written in exactly one way as a linear combination (a sum of scalar multiples) of them. Each member of such a set is a basis vector. The coefficients of that combination are the vector's coordinates, which is how a basis provides a coordinate system for describing geometric and algebraic structures.

Table of Comparison

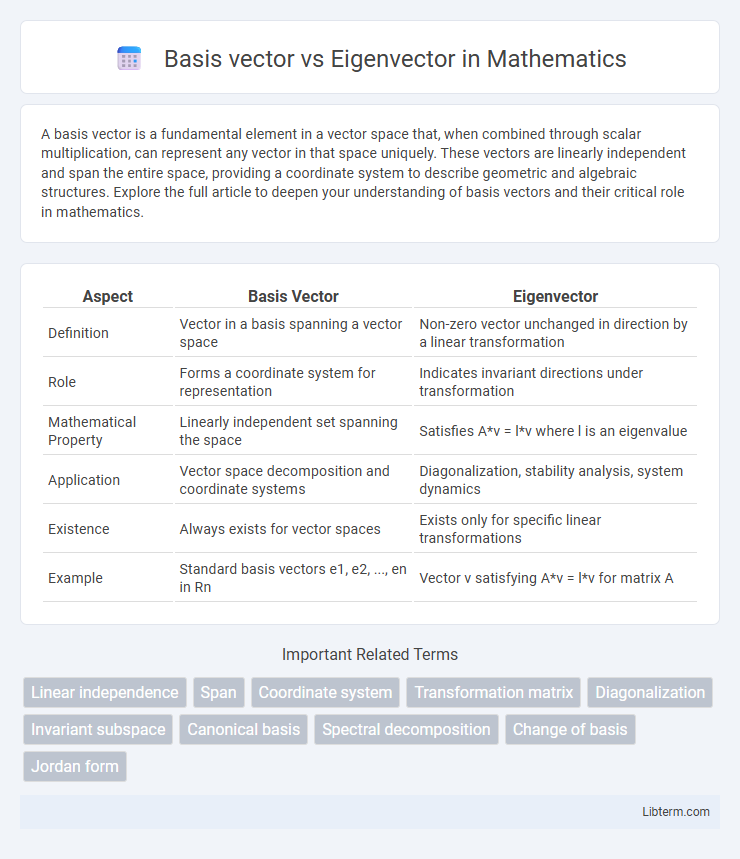

| Aspect | Basis Vector | Eigenvector |
|---|---|---|
| Definition | A member of a basis, which is a linearly independent set that spans the vector space | A non-zero vector that a linear transformation maps to a scalar multiple of itself |
| Role | Forms a coordinate system for representing vectors | Indicates invariant directions (lines through the origin) under a transformation |
| Mathematical Property | The basis is linearly independent and spans the space | Satisfies Av = λv, where λ is the corresponding eigenvalue |
| Application | Vector space decomposition and coordinate systems | Diagonalization, stability analysis, system dynamics |
| Existence | Every vector space has a basis (for infinite-dimensional spaces this relies on the axiom of choice) | Defined only relative to a linear operator. Over ℂ every operator on a non-zero finite-dimensional space has one, but over ℝ some operators (e.g., a 90° rotation of the plane) have none |
| Example | Standard basis vectors e₁, e₂, …, eₙ in ℝⁿ | v = (1, 1) for A = [[2, 1], [1, 2]], since Av = 3v |

Introduction to Basis Vectors and Eigenvectors

Basis vectors form a fundamental set of vectors in a vector space. They are linearly independent and span the entire space, so any vector can be expressed as a unique linear combination of them. Eigenvectors are non-zero vectors associated with a linear transformation or matrix. The transformation only scales them, by a factor called the corresponding eigenvalue, and this reveals intrinsic properties such as stability and invariant directions. Understanding basis vectors and eigenvectors is crucial in fields like linear algebra, quantum mechanics, and principal component analysis, where they provide frameworks for representing vectors and analyzing transformations.

Defining Basis Vectors in Linear Algebra

Basis vectors in linear algebra are a set of linearly independent vectors that span a vector space, so every vector in that space can be expressed uniquely as a linear combination of them. Each basis vector serves as a building block of the coordinate system: the coefficients of that combination are the vector's coordinates. In \(\mathbb{R}^n\) the most familiar choice is the standard basis, whose vectors point along the Cartesian axes, but any n linearly independent vectors in \(\mathbb{R}^n\) form a basis too. Eigenvectors, in contrast, are vectors that a given linear transformation maps to scalar multiples of themselves. They are crucial for understanding the transformation's behavior, but a set of eigenvectors forms a basis only if it spans the space. An n × n matrix has such an eigenbasis exactly when it has n linearly independent eigenvectors, that is, when it is diagonalizable.
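
Finding a vector's coordinates in a basis means solving a linear system, and uniqueness is exactly what linear independence guarantees. The following is a minimal sketch in plain Python for ℝ². The function name `coordinates_2d` is hypothetical, and integer components are assumed so that `Fraction` keeps the arithmetic exact. It applies Cramer's rule. A zero determinant signals that the two vectors are linearly dependent and therefore not a basis.

```python
from fractions import Fraction


def coordinates_2d(b1, b2, v):
    """Return (c1, c2) such that c1*b1 + c2*b2 == v, via Cramer's rule.

    Components are assumed to be integers so the result is exact.
    Raises ValueError when b1 and b2 are linearly dependent (not a basis).
    """
    # Determinant of the matrix whose columns are b1 and b2.
    det = b1[0] * b2[1] - b2[0] * b1[1]
    if det == 0:
        raise ValueError("b1 and b2 are linearly dependent, so they are not a basis")
    # Cramer's rule: replace one column at a time with v.
    c1 = Fraction(v[0] * b2[1] - b2[0] * v[1], det)
    c2 = Fraction(b1[0] * v[1] - v[0] * b1[1], det)
    return c1, c2
```

For v = (3, 5), the standard basis gives coordinates (3, 5). The basis (1, 1), (1, −1) gives (4, −1) instead, because 4·(1, 1) − 1·(1, −1) = (3, 5). The vector is the same, but its coordinates depend on the chosen basis.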

What Are Eigenvectors?

Eigenvectors are non-zero vectors that are only scaled when a linear transformation, represented by a square matrix, is applied to them. They stay on the same line through the origin and reverse direction if the eigenvalue is negative. They satisfy the equation \(A\mathbf{v} = \lambda\mathbf{v}\), where \(A\) is a square matrix, \(\mathbf{v}\) is the eigenvector, and \(\lambda\) is the corresponding eigenvalue. Basis vectors are defined by spanning a vector space and need not be orthonormal. Eigenvectors, by contrast, reveal intrinsic geometric properties of linear operators by identifying invariant directions under the transformation.
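
To make the definition concrete, the minimal plain-Python sketch below does two things for a 2 × 2 matrix. It finds the real eigenvalues from the characteristic polynomial \(\lambda^2 - \operatorname{tr}(A)\,\lambda + \det(A) = 0\), and it tests whether a given vector is an eigenvector. The function names are hypothetical. The sketch is limited to 2 × 2 matrices and uses a floating-point tolerance, so it is an illustration rather than a general eigenvalue solver.

```python
import math


def eigenvalues_2x2(a):
    """Real eigenvalues of [[p, q], [r, s]], sorted ascending.

    Roots of lambda^2 - (p + s) * lambda + (p*s - q*r) = 0.
    Returns [] when the roots are a complex-conjugate pair.
    """
    (p, q), (r, s) = a
    trace = p + s
    det = p * s - q * r
    disc = trace * trace - 4 * det
    if disc < 0:
        return []  # no real eigenvalues, hence no real eigenvectors
    root = math.sqrt(disc)
    return sorted([(trace - root) / 2, (trace + root) / 2])


def is_eigenvector(a, v, tol=1e-12):
    """True if v is non-zero and A v is a scalar multiple of v (2x2 only)."""
    if all(abs(x) <= tol for x in v):
        return False  # the zero vector is excluded by definition
    av = (a[0][0] * v[0] + a[0][1] * v[1], a[1][0] * v[0] + a[1][1] * v[1])
    # In 2D, A v is parallel to v exactly when their cross product is zero.
    return abs(av[0] * v[1] - av[1] * v[0]) <= tol
```

For the example matrix [[2, 1], [1, 2]], `eigenvalues_2x2` returns [1.0, 3.0]. `is_eigenvector` accepts (1, 1) but rejects (1, 0), whose image (2, 1) points in a different direction. For the 90° rotation [[0, −1], [1, 0]], the discriminant is negative, so no real eigenvector exists.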

Mathematical Representation: Basis Vectors vs Eigenvectors

Basis vectors form a coordinate system spanning a vector space. The standard basis of \(\mathbb{R}^n\) consists of the unit vectors \(\mathbf{e}_1, \mathbf{e}_2, \ldots, \mathbf{e}_n\). Eigenvectors, in contrast, satisfy the equation \(A\mathbf{v} = \lambda \mathbf{v}\), where \(A\) is the square matrix of a linear transformation, \(\lambda\) is the eigenvalue, and \(\mathbf{v}\) is a non-zero vector that \(A\) only scales. Basis vectors provide a framework for vector decomposition, while eigenvectors reveal the invariant directions that are critical for diagonalization and spectral analysis of matrices.
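
As a worked case, take the illustrative matrix \(A = \begin{pmatrix} 2 & 1 \\ 1 & 2 \end{pmatrix}\). Its eigenvalues are the roots of the characteristic polynomial \(\det(A - \lambda I)\), and each eigenvector solves \((A - \lambda I)\mathbf{v} = \mathbf{0}\):

\[
\det(A - \lambda I) = \det\begin{pmatrix} 2-\lambda & 1 \\ 1 & 2-\lambda \end{pmatrix} = (2-\lambda)^2 - 1 = (\lambda - 1)(\lambda - 3)
\]

\[
\lambda_1 = 3:\ \begin{pmatrix} -1 & 1 \\ 1 & -1 \end{pmatrix}\mathbf{v} = \mathbf{0} \;\Rightarrow\; \mathbf{v}_1 = \begin{pmatrix} 1 \\ 1 \end{pmatrix},
\qquad
\lambda_2 = 1:\ \begin{pmatrix} 1 & 1 \\ 1 & 1 \end{pmatrix}\mathbf{v} = \mathbf{0} \;\Rightarrow\; \mathbf{v}_2 = \begin{pmatrix} 1 \\ -1 \end{pmatrix}
\]

Because \(\mathbf{v}_1\) and \(\mathbf{v}_2\) are linearly independent, they also form a basis of \(\mathbb{R}^2\) (an eigenbasis). In that basis \(A\) acts as the diagonal matrix \(\operatorname{diag}(3, 1)\).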

Geometric Interpretation of Basis Vectors

Basis vectors in a vector space form a set of linearly independent vectors that span the entire space, so any vector can be expressed as a unique linear combination of them. Geometrically, basis vectors define the axes of a coordinate system. They fix the direction and unit length of each axis (the axes need not be perpendicular) and provide a framework for decomposing and transforming vectors. Eigenvectors differ in that they represent directions left invariant by a specific linear transformation, often identifying the principal directions of stretching or compression within the vector space.
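
Because every vector is a combination of basis vectors, a linear transformation is completely determined by what it does to the basis vectors. In the standard basis, the image of \(\mathbf{e}_j\) is simply column j of the matrix. The minimal plain-Python sketch below computes A v in two ways: directly, and as a combination of the images of the basis vectors. The helper names are hypothetical.

```python
def mat_vec(a, v):
    """Multiply a square matrix (a list of rows) by a vector."""
    return [sum(a[i][j] * v[j] for j in range(len(v))) for i in range(len(a))]


def apply_via_basis(a, v):
    """Compute A v as the linear combination sum_j v[j] * (A e_j).

    Since v = sum_j v[j] e_j in the standard basis, linearity gives
    A v = sum_j v[j] (A e_j), and A e_j is column j of A.
    """
    n = len(v)
    result = [0] * n
    for j in range(n):
        e_j = [1 if k == j else 0 for k in range(n)]  # j-th standard basis vector
        image = mat_vec(a, e_j)                        # equals column j of A
        for i in range(n):
            result[i] += v[j] * image[i]
    return result
```

Both functions give [11, 13] for A = [[2, 1], [1, 2]] and v = [3, 5]. The second function simply spells out the linearity argument.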

Geometric Meaning of Eigenvectors

Eigenvectors represent directions in a vector space that remain invariant under a linear transformation, only scaled by the corresponding eigenvalue, thereby revealing fundamental geometric features such as axes of stretching or compression. Basis vectors form a coordinate system that spans the vector space, providing a reference framework but not necessarily capturing intrinsic transformation properties. The geometric significance of eigenvectors lies in their ability to identify essential transformation directions, critical in applications like principal component analysis and stability analysis.
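
One way to see this geometric meaning is to measure how far a transformation turns each vector. The minimal plain-Python sketch below computes the angle between v and A v for a 2 × 2 matrix. The function name `turning_angle_deg` is hypothetical. Eigenvectors are exactly the directions that come out at 0° or 180°.

```python
import math


def turning_angle_deg(a, v):
    """Angle in degrees (0 to 180) between a non-zero v and A v, for 2x2 A.

    0 means A v points the same way as v (positive eigenvalue), 180 means
    it points the opposite way (negative eigenvalue); either way v lies on
    an invariant line. If A v is the zero vector, the angle is meaningless.
    """
    av = (a[0][0] * v[0] + a[0][1] * v[1], a[1][0] * v[0] + a[1][1] * v[1])
    dot = v[0] * av[0] + v[1] * av[1]
    cross = v[0] * av[1] - v[1] * av[0]
    # atan2 of |cross| and dot gives the unsigned angle between the vectors.
    return math.degrees(math.atan2(abs(cross), dot))
```

With A = [[2, 1], [1, 2]], the eigenvector directions (1, 1) and (1, −1) give an angle of 0, while (1, 0) is turned by about 26.6°. The reflection [[1, 0], [0, −1]] sends (0, 1) to (0, −1), an angle of 180°. That vector is still an eigenvector, with eigenvalue −1.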

Basis Vectors in Different Vector Spaces

In any vector space, a basis is a minimal set of linearly independent vectors that spans the entire space, giving each vector a unique representation as a linear combination. In Euclidean spaces, the standard basis vectors point along the coordinate axes. In function spaces, the basis elements are functions. The monomials 1, x, x², … form a basis for the polynomials, and the sines and cosines of a Fourier series play a similar role for periodic functions (there in the Hilbert-space sense, which allows infinite sums). The choice of basis strongly affects both computational efficiency and geometric interpretation. For example, a diagonalizable matrix becomes diagonal when written in a basis of its eigenvectors.
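
A polynomial shows how one object gets different coordinates in different bases of a function space. The plain-Python sketch below converts coefficients in the monomial basis 1, x, x², … into coordinates in the shifted basis 1, (x − c), (x − c)², …. The function names are hypothetical. The conversion expands each xʲ = ((x − c) + c)ʲ with the binomial theorem, and a second helper evaluates the result so it can be checked.

```python
from math import comb


def shift_basis(coeffs, c):
    """Coordinates of a polynomial in the basis 1, (x - c), (x - c)^2, ...

    coeffs[j] is the coefficient of x^j in the monomial basis. Expanding
    x^j = ((x - c) + c)^j gives b_k = sum_{j >= k} coeffs[j] * C(j, k) * c^(j - k).
    """
    n = len(coeffs)
    return [sum(coeffs[j] * comb(j, k) * c ** (j - k) for j in range(k, n))
            for k in range(n)]


def evaluate_in_shifted_basis(b, c, x):
    """Evaluate sum_k b[k] * (x - c)^k, to check the new coordinates."""
    return sum(bk * (x - c) ** k for k, bk in enumerate(b))
```

Take p(x) = 3 + 2x + x², with coefficients [3, 2, 1], and c = 1. `shift_basis` returns [6, 4, 1], meaning p(x) = 6 + 4(x − 1) + (x − 1)². This is the same polynomial with different coordinates.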

The Significance of Eigenvectors in Transformations

Eigenvectors play a crucial role in linear transformations because they mark invariant directions, which the transformation scales but does not rotate. Basis vectors provide a coordinate framework in vector spaces. Eigenvectors instead reveal intrinsic properties of transformation matrices and allow complex operations to be decomposed into simpler, interpretable forms. The most notable of these is the diagonalization \(A = PDP^{-1}\), where the columns of \(P\) are eigenvectors and \(D\) is a diagonal matrix of the corresponding eigenvalues. This is fundamental in applications such as stability analysis, quantum mechanics, and principal component analysis, where understanding transformation behavior is essential.
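
A small worked case shows why such a decomposition helps. Consider the example matrix A = [[2, 1], [1, 2]], whose eigenvectors (1, 1) and (1, −1) have eigenvalues 3 and 1. Raising A to a power reduces to raising the diagonal entries of D to that power, since \(A^k = PD^kP^{-1}\). The sketch below hard-codes this decomposition, which only holds for this one matrix, and uses hypothetical helper names. It compares the result against repeated multiplication, using exact fractions for \(P^{-1}\).

```python
from fractions import Fraction


def mat_mul(x, y):
    """Product of two 2x2 matrices stored as lists of rows."""
    return [[sum(x[i][k] * y[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]


def power_by_repeated_multiplication(a, k):
    """A^k computed with k matrix products, starting from the identity."""
    result = [[1, 0], [0, 1]]
    for _ in range(k):
        result = mat_mul(result, a)
    return result


def power_by_diagonalization(k):
    """A^k for the example A = [[2, 1], [1, 2]], via A^k = P D^k P^-1.

    The columns of P are the eigenvectors (1, 1) and (1, -1), and
    D = diag(3, 1) holds the matching eigenvalues; this decomposition is
    hard-coded for this one matrix.
    """
    p = [[1, 1], [1, -1]]
    p_inv = [[Fraction(1, 2), Fraction(1, 2)],
             [Fraction(1, 2), Fraction(-1, 2)]]
    d_k = [[3 ** k, 0], [0, 1 ** k]]  # powering D means powering its diagonal
    return mat_mul(mat_mul(p, d_k), p_inv)
```

Both routes give A⁵ = [[122, 121], [121, 122]]. The diagonalized route replaces a chain of matrix products with k-th powers of the eigenvalues.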

Key Differences Between Basis Vectors and Eigenvectors

Basis vectors form a set of linearly independent vectors that span a vector space, providing a coordinate system for representing any vector within that space. Eigenvectors are tied to a specific linear transformation or matrix, which maps each of them to a scalar multiple of itself; that scalar is the eigenvalue. A basis can be chosen freely from among the many linearly independent spanning sets. Eigenvectors, by contrast, are intrinsic to the transformation and reveal fundamental properties such as invariant directions and modes of transformation.
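
Rewriting a map in another basis \(B\) gives the similar matrix \(B^{-1}AB\). That matrix has the same eigenvalues, and an eigenvector keeps its eigenvalue even though its coordinates change. This shows that eigenvectors are intrinsic while a basis is a choice. The plain-Python sketch below checks the claim for the example matrix A = [[2, 1], [1, 2]] and a hypothetical new basis (1, 0), (1, 1), stored as the columns of B. The helper names are illustrative, and exact fractions avoid rounding.

```python
from fractions import Fraction


def mat_mul(x, y):
    """Product of two 2x2 matrices stored as lists of rows."""
    return [[sum(x[i][k] * y[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]


def mat_vec(m, v):
    """Product of a 2x2 matrix and a 2-vector."""
    return [m[0][0] * v[0] + m[0][1] * v[1], m[1][0] * v[0] + m[1][1] * v[1]]


def inverse_2x2(m):
    """Exact inverse of a 2x2 integer matrix."""
    (p, q), (r, s) = m
    det = Fraction(p * s - q * r)
    if det == 0:
        raise ValueError("matrix is singular, so its columns are not a basis")
    return [[s / det, -q / det], [-r / det, p / det]]


def change_basis(a, b):
    """Matrix of the map A in the basis formed by the columns of B: B^-1 A B."""
    return mat_mul(mat_mul(inverse_2x2(b), a), b)


a = [[2, 1], [1, 2]]
b = [[1, 1], [0, 1]]  # hypothetical new basis: columns (1, 0) and (1, 1)
a_new = change_basis(a, b)

# Trace and determinant (and hence the eigenvalues) are unchanged.
trace_old, trace_new = a[0][0] + a[1][1], a_new[0][0] + a_new[1][1]
det_old = a[0][0] * a[1][1] - a[0][1] * a[1][0]
det_new = a_new[0][0] * a_new[1][1] - a_new[0][1] * a_new[1][0]

# The eigenvector (1, 1) gets new coordinates in basis B.
coords = mat_vec(inverse_2x2(b), [1, 1])
```

Here \(B^{-1}AB\) = [[1, 0], [1, 3]], which has trace 4 and determinant 3, just like A. The eigenvector (1, 1) has coordinates (0, 1) in the new basis, and the new matrix still multiplies it by 3.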

Applications: When to Use Basis Vectors vs Eigenvectors

Basis vectors are essential for defining coordinate systems and representing any vector in a given vector space. This makes them the natural tool for computer graphics, for writing linear transformations as matrices, and for data representation. Eigenvectors are crucial for identifying invariant directions under linear transformations. They are widely used in stability analysis, in finding the vibration modes of mechanical systems, and in principal component analysis (PCA) in machine learning. Choose basis vectors for general vector space construction and representation. Choose eigenvectors for analyzing system behavior, reducing dimensionality, and extracting meaningful patterns from data.
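
In PCA, the principal directions are the eigenvectors of the data's covariance matrix. The minimal plain-Python sketch below uses power iteration, which repeatedly applies the matrix and renormalizes, to recover the leading eigenvector of a 2 × 2 covariance matrix. The function names and the small dataset are hypothetical. The method assumes the largest eigenvalue is strictly dominant and that the starting vector has some component along its eigenvector. A production PCA would typically rely on a library routine such as an SVD instead.

```python
import math


def covariance_2d(points):
    """Sample covariance matrix (dividing by n - 1) of a list of 2D points."""
    n = len(points)
    mx = sum(x for x, _ in points) / n
    my = sum(y for _, y in points) / n
    sxx = sum((x - mx) ** 2 for x, _ in points) / (n - 1)
    syy = sum((y - my) ** 2 for _, y in points) / (n - 1)
    sxy = sum((x - mx) * (y - my) for x, y in points) / (n - 1)
    return [[sxx, sxy], [sxy, syy]]


def top_eigenvector(c, iterations=200):
    """Power iteration: apply C repeatedly and renormalize each time.

    Converges to the unit eigenvector of the largest-magnitude eigenvalue
    when that eigenvalue is strictly dominant and the start vector is not
    orthogonal to its eigenvector.
    """
    v = (1.0, 0.0)
    for _ in range(iterations):
        w = (c[0][0] * v[0] + c[0][1] * v[1], c[1][0] * v[0] + c[1][1] * v[1])
        norm = math.hypot(w[0], w[1])
        v = (w[0] / norm, w[1] / norm)
    return v


# Hypothetical data spread mostly along the line y = x.
points = [(-2, -2), (-1, -1), (1, 1), (2, 2), (1, -1), (-1, 1)]
first_component = top_eigenvector(covariance_2d(points))
```

For these points the covariance matrix is [[2.4, 1.6], [1.6, 2.4]], with eigenvalues 4.0 and 0.8. The iteration therefore converges to the unit vector along (1, 1), which is the direction of greatest variance.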

libterm.com
