Disorder is the loss of order and predictability in a system, and it tends to bring chaos and inefficiency with it. Entropy is the quantity that physics and information theory use to measure it. The sections below compare the two concepts, from their definitions and history to their mathematical forms and real-world examples.

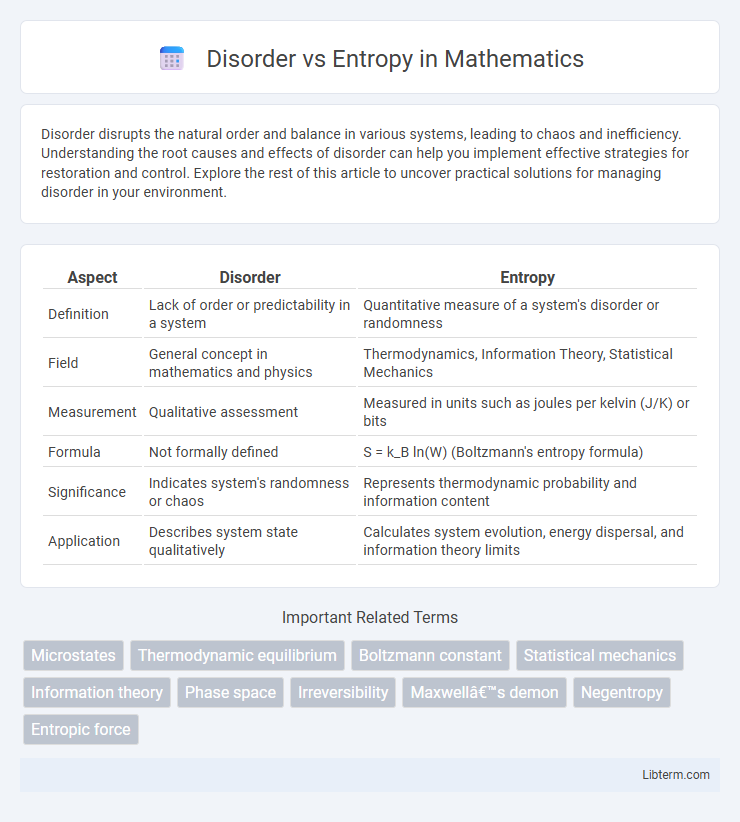

Table of Comparison

| Aspect | Disorder | Entropy |
|---|---|---|
| Definition | Lack of order or predictability in a system | Quantitative measure of a system's disorder or randomness |
| Field | General concept in mathematics and physics | Thermodynamics, Information Theory, Statistical Mechanics |
| Measurement | Qualitative assessment | Measured in units such as joules per kelvin (J/K) or bits |
| Formula | Not formally defined | S = k_B ln(W) (Boltzmann's entropy formula) |
| Significance | Indicates system's randomness or chaos | Represents thermodynamic probability and information content |
| Application | Describes system state qualitatively | Calculates system evolution, energy dispersal, and information theory limits |

Understanding Disorder: A Scientific Perspective

Disorder in scientific terms refers to the arrangement and unpredictability of particles within a system, closely tied to the concept of entropy, which quantifies the degree of randomness or chaos at a molecular level. Entropy measures how energy disperses and spreads out in physical systems, increasing as disorder rises, a principle central to the Second Law of Thermodynamics. Understanding disorder through entropy provides insights into natural processes, from chemical reactions to the evolution of the universe.

Defining Entropy in Thermodynamics

Entropy in thermodynamics quantifies the degree of disorder or randomness within a system, representing the number of microscopic configurations corresponding to a macrostate. It measures energy dispersal at a specific temperature, where higher entropy indicates greater molecular chaos and less available energy for work. Disorder is the qualitative perception; entropy is the precise, quantifiable thermodynamic property, and its increase is what marks a process as irreversible.
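The idea of counting the microscopic configurations behind a macrostate can be illustrated with a toy system. The sketch below is not a thermodynamic calculation: it assumes 100 coins as a stand-in for particles, treats "number of heads" as the macrostate, and uses the hypothetical helper names `multiplicity` and `boltzmann_entropy`.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant in J/K (exact SI value)


def multiplicity(n_coins: int, n_heads: int) -> int:
    """Number of microstates (distinct arrangements) with n_heads heads."""
    return math.comb(n_coins, n_heads)


def boltzmann_entropy(n_microstates: int) -> float:
    """Entropy S = k_B ln(W) in J/K for W equally likely microstates."""
    return K_B * math.log(n_microstates)


ordered = multiplicity(100, 0)   # all tails: exactly one arrangement
mixed = multiplicity(100, 50)    # half heads: the largest number of arrangements

print(ordered, boltzmann_entropy(ordered))   # 1 0.0
print(mixed > ordered, boltzmann_entropy(mixed) > boltzmann_entropy(ordered))
```

The perfectly ordered macrostate has a single microstate and zero entropy, while the evenly mixed macrostate is compatible with vastly more arrangements and therefore has higher entropy.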

Historical Development of Disorder and Entropy Concepts

The concepts of disorder and entropy trace back to the 19th century. In 1865 Rudolf Clausius introduced entropy as a thermodynamic measure of energy dispersal, in contrast to earlier, more qualitative notions of disorder in physical systems. In 1877 Ludwig Boltzmann provided a statistical interpretation linking entropy to the number of microscopic configurations, formalizing the probabilistic nature of disorder at the atomic level. This evolution from vague disorder to precise entropy underpins modern thermodynamic and information theory frameworks, emphasizing the deep connection between microscopic states and macroscopic observables.

Key Differences Between Disorder and Entropy

Disorder refers to the level of randomness or lack of organization in a system, often perceived subjectively, while entropy is a precise thermodynamic quantity measuring the number of microstates corresponding to a macrostate. Entropy quantifies the irreversible dispersal of energy and increase in system uncertainty, grounded in the second law of thermodynamics. Unlike disorder, entropy has formal mathematical definitions and units, such as joules per kelvin (J/K), making it an objective physical property.

Misconceptions: Disorder vs. Entropy

Entropy is often misconceived as simply disorder, but it fundamentally measures the number of microscopic configurations consistent with a system's macroscopic state. Unlike the vague notion of disorder, entropy quantifies molecular randomness and energy dispersal based on statistical mechanics. Misunderstanding entropy as just chaos overlooks its precise role in predicting spontaneous processes and thermodynamic equilibrium.

Mathematical Representation of Disorder and Entropy

Disorder and entropy are mathematically represented using probability distributions and statistical mechanics principles, where disorder correlates with the number of microstates Ω accessible to a system (often written W), and entropy S is quantified as S = k_B ln Ω, with k_B representing Boltzmann's constant. In information theory, entropy is defined by Shannon's formula H = -Σ_i p_i log p_i, where p_i is the probability of outcome i, expressing uncertainty or disorder in a probabilistic system. These representations quantify disorder as a measure of randomness or the spread of possible configurations within a given system.
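The two formulas are closely related: when all microstates are equally likely, Shannon's sum reduces to the logarithm of the number of states, so Boltzmann's entropy is k_B times the Shannon entropy measured with natural logarithms. The following is a minimal sketch of that check; the function names and the choice of 8 microstates are illustrative assumptions.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant in J/K (exact SI value)


def shannon_entropy(probs, log=math.log2):
    """H = -sum(p_i * log(p_i)); outcomes with p_i == 0 contribute nothing."""
    return -sum(p * log(p) for p in probs if p > 0)


def boltzmann_entropy(omega):
    """S = k_B ln(omega) for omega equally likely microstates."""
    return K_B * math.log(omega)


omega = 8                                  # assumed number of microstates
uniform = [1 / omega] * omega              # every microstate equally likely

h_bits = shannon_entropy(uniform)                 # log base 2 -> bits
h_nats = shannon_entropy(uniform, log=math.log)   # natural log -> nats

print(h_bits)                                            # 3.0
print(math.isclose(K_B * h_nats, boltzmann_entropy(omega)))  # True
```

A non-uniform distribution over the same 8 states gives a smaller value of H, which matches the intuition that a system with a strongly preferred configuration is less disordered.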

Entropy Beyond Physics: Information Theory

Entropy in information theory measures the uncertainty or unpredictability of data, quantifying the amount of information content in messages or signals. Unlike physical entropy related to thermodynamic disorder, informational entropy assesses the efficiency of data compression and transmission by calculating the average minimum number of bits needed to encode a source. This concept, pioneered by Claude Shannon, has become foundational in fields like telecommunications, cryptography, and machine learning for optimizing data storage and communication systems.
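As a rough illustration of "average minimum number of bits", the sketch below estimates entropy per symbol from the character frequencies of a short string. It assumes symbols are independent and that the observed frequencies are the true probabilities, which real compressors do not rely on; `entropy_bits_per_symbol` is a hypothetical helper name.

```python
import math
from collections import Counter


def entropy_bits_per_symbol(message: str) -> float:
    """Shannon entropy of the empirical symbol distribution, in bits per symbol."""
    if not message:
        return 0.0
    counts = Counter(message)
    total = len(message)
    # p * log2(1/p) is the same term as -p * log2(p)
    return sum((c / total) * math.log2(total / c) for c in counts.values())


print(entropy_bits_per_symbol("aaaaaaaa"))  # 0.0 (fully predictable)
print(entropy_bits_per_symbol("abababab"))  # 1.0 (two equally likely symbols)
print(entropy_bits_per_symbol("abcdabcd"))  # 2.0 (four equally likely symbols)
```

Under these assumptions, a message with four equally frequent symbols needs about 2 bits per symbol, while a message made of a single repeated symbol carries no information per symbol at all.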

Real-World Examples of Disorder and Entropy

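Real-world processes show how visible disorder and entropy relate. The melting of ice into water raises entropy by transforming a structured solid into a more random liquid state. Biological processes like protein denaturation highlight disorder, where the loss of functional shape gives the chain access to many more conformations and corresponds to higher entropy at the molecular level. The gradual rusting of iron is a subtler case: the metal visibly degrades, but binding oxygen gas into a solid oxide lowers the entropy of the reacting material itself. The reaction is still spontaneous because the heat it releases raises the entropy of the surroundings by a larger amount, so total entropy increases.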
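The melting example can be put into numbers. For a phase change at constant temperature, the entropy change is the heat absorbed divided by the absolute temperature. The sketch below assumes an approximate enthalpy of fusion for water of 6.01 kJ/mol at 273.15 K; the constant and function names are illustrative.

```python
DELTA_H_FUS = 6010.0  # J/mol, approximate enthalpy of fusion of ice (assumed value)
T_MELT = 273.15       # K, melting point of ice at atmospheric pressure


def entropy_of_fusion(delta_h: float, temperature_k: float) -> float:
    """Entropy change in J/(mol K) for a phase change at constant temperature."""
    return delta_h / temperature_k


ds = entropy_of_fusion(DELTA_H_FUS, T_MELT)
print(f"{ds:.1f} J/(mol K)")  # 22.0 J/(mol K)
```

The positive result reflects the move from a rigid crystal lattice to a liquid in which molecules can take up many more arrangements.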

Importance of Entropy in Natural Processes

Entropy quantifies the degree of disorder or randomness in a system, serving as a fundamental concept in thermodynamics and statistical mechanics. Its importance lies in driving natural processes towards equilibrium, where energy disperses and systems evolve spontaneously in the direction of increasing entropy. This principle explains phenomena such as the diffusion of gases, heat flow, and the irreversibility of chemical reactions, underscoring entropy's central role in the natural progression of physical and biological systems.
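Heat flow gives a simple worked case of why a process runs in only one direction. If heat Q leaves a reservoir at temperature T_hot and enters one at T_cold, the total entropy change is -Q/T_hot + Q/T_cold. The sketch below assumes ideal reservoirs whose temperatures do not change; the numbers are arbitrary.

```python
def entropy_change_heat_flow(q_joules: float, t_hot: float, t_cold: float) -> float:
    """Total entropy change (J/K) when q_joules moves from the hot to the cold reservoir.

    A negative q_joules models heat moving from cold to hot instead.
    """
    return -q_joules / t_hot + q_joules / t_cold


forward = entropy_change_heat_flow(1000.0, t_hot=400.0, t_cold=300.0)
reverse = entropy_change_heat_flow(-1000.0, t_hot=400.0, t_cold=300.0)

print(forward > 0)  # True: hot-to-cold flow increases total entropy
print(reverse < 0)  # True: cold-to-hot flow would decrease it, so it is not spontaneous
```

Because T_cold is smaller than T_hot, the entropy gained by the cold side always outweighs the entropy lost by the hot side, which is why heat flows spontaneously from hot to cold and not the other way.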

Disorder, Entropy, and the Arrow of Time

Disorder in physical systems is quantitatively described by entropy, a fundamental concept in thermodynamics that, in statistical terms, reflects the number of microscopic configurations corresponding to a macroscopic state. In an isolated system entropy never decreases, and in any irreversible process it increases; this asymmetry defines the arrow of time and establishes the directionality from order to disorder. The progression manifests in phenomena like heat flowing from hot to cold and gases mixing, linking microscopic disorder to the macroscopic experience of time's passage.
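The tendency toward higher entropy can be seen in a small simulation based on the Ehrenfest urn model, a standard toy model rather than anything specific to this article. It assumes a box with two halves, starts with all particles on the left, and at each step moves one randomly chosen particle to the other side. Entropy is reported in units of k_B as ln of the number of arrangements; the parameter values are arbitrary.

```python
import math
import random


def ehrenfest_entropy_trace(n_particles=100, n_steps=500, seed=0):
    """Return S/k_B = ln C(N, n_left) after each step of the urn model."""
    rng = random.Random(seed)
    n_left = n_particles  # fully ordered start: every particle in the left half
    trace = [math.log(math.comb(n_particles, n_left))]
    for _ in range(n_steps):
        # A uniformly chosen particle is on the left with probability n_left / N
        if rng.random() < n_left / n_particles:
            n_left -= 1   # it hops to the right half
        else:
            n_left += 1   # it hops to the left half
        trace.append(math.log(math.comb(n_particles, n_left)))
    return trace


trace = ehrenfest_entropy_trace()
s_max = math.log(math.comb(100, 50))  # entropy of the evenly mixed macrostate

print(trace[0])               # 0.0
print(trace[-1] > trace[0])   # entropy has risen from the ordered start
print(max(trace) <= s_max)    # True: it never exceeds the evenly mixed value
```

Each individual hop is reversible, yet the system drifts toward the evenly mixed macrostate simply because far more microstates correspond to it. Small fluctuations remain, which is expected for a system of only 100 particles.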
