Gradient descent is a fundamental optimization algorithm used in machine learning and deep learning to minimize a cost function by iteratively moving in the direction of steepest descent. It adjusts model parameters step by step to reduce error and improve predictive accuracy. This article explains how gradient descent works and how it compares with the Simplex method.

Table of Comparison

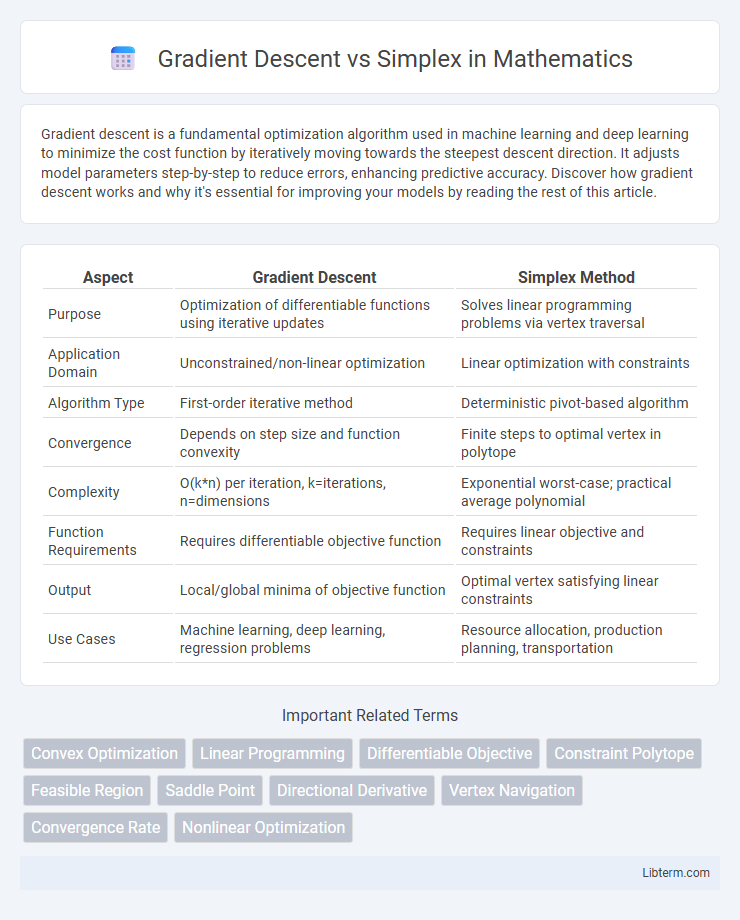

| Aspect | Gradient Descent | Simplex Method |
|---|---|---|
| Purpose | Optimization of differentiable functions using iterative updates | Solves linear programming problems via vertex traversal |
| Application Domain | Unconstrained/non-linear optimization | Linear optimization with constraints |
| Algorithm Type | First-order iterative method | Deterministic pivot-based algorithm |
| Convergence | Depends on step size and function convexity | Finite steps to optimal vertex in polytope |
| Complexity | O(n) per iteration, O(k*n) over k iterations (n = dimensions) | Exponential in the worst case; typically polynomial in practice |
| Function Requirements | Requires differentiable objective function | Requires linear objective and constraints |
| Output | Local minimum of the objective function (global if the function is convex) | Optimal vertex satisfying linear constraints |
| Use Cases | Machine learning, deep learning, regression problems | Resource allocation, production planning, transportation |

Introduction to Gradient Descent and Simplex

Gradient Descent is an iterative optimization algorithm primarily used to minimize differentiable functions by moving in the direction of the steepest descent based on gradient calculations. The Simplex method, by contrast, solves linear programming problems by traversing vertices of the feasible region defined by constraints to find an optimal solution. Both techniques are fundamental in optimization, with Gradient Descent suited for continuous and differentiable problems, while Simplex excels in linear, constrained optimization scenarios.

Core Principles of Gradient Descent

Gradient Descent operates by iteratively moving along the negative gradient of a differentiable objective function, which is the direction of steepest descent, to find a local minimum. It computes partial derivatives and uses them to update the parameters with a fixed or adaptive learning rate, and it applies to continuous functions whether they are convex or non-convex. In contrast, the Simplex method is a geometric, vertex-based optimization approach designed for linear programming problems, exploring feasible solution vertices without using gradient information.
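The update loop described above can be sketched in a few lines of Python. This is a minimal illustration, not a production optimizer: the function name `gradient_descent`, the quadratic objective, the fixed learning rate of 0.1, and the step count are all assumptions chosen for the example.

```python
def gradient_descent(grad, x0, learning_rate=0.1, steps=100):
    """Repeatedly step against the gradient, starting from x0.

    `grad` maps a point (list of floats) to its gradient (list of floats).
    Returns the point reached after `steps` updates.
    """
    x = list(x0)
    for _ in range(steps):
        g = grad(x)
        # Move each coordinate a small distance in the negative gradient direction.
        x = [xi - learning_rate * gi for xi, gi in zip(x, g)]
    return x


# Illustrative objective (an assumption): f(x, y) = (x - 3)^2 + (y + 1)^2,
# a convex function whose unique minimum is at (3, -1).
def grad_f(p):
    x, y = p
    return [2 * (x - 3), 2 * (y + 1)]


minimum = gradient_descent(grad_f, [0.0, 0.0])
print(minimum)  # approaches [3, -1]
```

Because this objective is convex, the iterates approach the single global minimum; on a non-convex function the same loop would only be expected to reach a local minimum.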

Fundamentals of Simplex Method

The Simplex method is a linear programming algorithm designed to find the optimal solution for problems with linear constraints by moving along the edges of the feasible region defined by these constraints. Unlike Gradient Descent, which iteratively moves towards the minimum of a function using derivatives, the Simplex method operates on vertices of the polytope that represents the solution space. It systematically evaluates adjacent vertices in a structured way to improve the objective function until reaching the maximum or minimum, making it highly effective for linear optimization problems.
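To make the vertex-to-vertex movement concrete, here is a small tableau-based sketch in pure Python. It is a teaching illustration under stated assumptions, not a reference implementation: it only handles problems of the form "maximize c·x subject to A x ≤ b, x ≥ 0" with every b[i] ≥ 0, it uses Bland's rule to choose pivots, and the name `simplex_max` and the sample problem are made up for the example.

```python
from fractions import Fraction


def simplex_max(c, A, b):
    """Maximize c.x subject to A x <= b, x >= 0, assuming every b[i] >= 0.

    Returns (optimal value, optimal x). Raises ValueError if unbounded.
    """
    m, n = len(A), len(c)
    # Tableau rows: [A | I | b]; the slack variables form the initial basis,
    # which corresponds to the vertex x = 0.
    rows = [
        [Fraction(v) for v in A[i]]
        + [Fraction(int(i == j)) for j in range(m)]
        + [Fraction(b[i])]
        for i in range(m)
    ]
    # Objective row holds -c; a negative entry means the value can still improve.
    obj = [Fraction(-v) for v in c] + [Fraction(0)] * (m + 1)
    basis = [n + i for i in range(m)]

    while True:
        # Bland's rule: the lowest-index column with a negative cost enters.
        col = next((j for j in range(n + m) if obj[j] < 0), None)
        if col is None:
            break  # no improving direction: the current vertex is optimal
        # Ratio test: pick the row that keeps the next vertex feasible.
        candidates = [
            (rows[i][-1] / rows[i][col], basis[i], i)
            for i in range(m)
            if rows[i][col] > 0
        ]
        if not candidates:
            raise ValueError("problem is unbounded")
        _, _, r = min(candidates)
        # Pivot: this moves along an edge to an adjacent vertex.
        pivot = rows[r][col]
        rows[r] = [v / pivot for v in rows[r]]
        for i in range(m):
            if i != r and rows[i][col] != 0:
                factor = rows[i][col]
                rows[i] = [v - factor * p for v, p in zip(rows[i], rows[r])]
        factor = obj[col]
        obj = [v - factor * p for v, p in zip(obj, rows[r])]
        basis[r] = col

    # Read the solution off the tableau: non-basic variables are zero.
    x = [Fraction(0)] * n
    for i, var in enumerate(basis):
        if var < n:
            x[var] = rows[i][-1]
    return obj[-1], x


# Illustrative problem (an assumption): maximize 3x + 5y
# subject to x <= 4, 2y <= 12, 3x + 2y <= 18, and x, y >= 0.
value, x = simplex_max([3, 5], [[1, 0], [0, 2], [3, 2]], [4, 12, 18])
print(value, x)
```

For this sample problem the optimum is 36, reached at the vertex (2, 6). Exact `Fraction` arithmetic is used so that the pivots introduce no rounding error; a practical solver would use floating point with tolerances and a more sophisticated pivot rule.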

Types of Problems Solved

Gradient Descent effectively solves large-scale continuous optimization problems, particularly those involving differentiable functions such as machine learning model training and convex optimization. The Simplex method excels in solving linear programming problems characterized by linear objective functions and linear constraints, commonly found in operations research and resource allocation. While Gradient Descent adapts well to non-linear and high-dimensional spaces, Simplex is specifically designed for linear systems and guarantees finding an optimal vertex solution when one exists.

Mathematical Foundations Compared

Gradient Descent relies on calculus principles, utilizing the gradient vector of a function to iteratively move towards a local minimum by following the steepest descent path. The Simplex method, rooted in linear algebra and convex analysis, solves linear programming problems by traversing the vertices of the feasible region defined by linear constraints to find the optimal solution. While Gradient Descent handles differentiable, often non-linear optimization problems, the Simplex algorithm excels in linear optimization with guaranteed convergence on polyhedral feasible sets.
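The two foundations can be written side by side. The notation below is an assumption introduced for illustration: $x_k$ is the current iterate, $\eta$ the learning rate, $f$ the objective, and $c$, $A$, $b$ the data of a linear program in inequality form.

```latex
% Gradient descent update with learning rate \eta > 0
x_{k+1} = x_k - \eta \, \nabla f(x_k)

% Linear program in inequality form, as handled by the Simplex method
\max_{x \in \mathbb{R}^n} \; c^\top x
\quad \text{subject to} \quad A x \le b, \; x \ge 0
```

The first expression needs only the gradient of $f$ at the current point, while the second is defined entirely by linear data, which is why its feasible set is a polyhedron whose vertices the Simplex method can traverse.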

Convergence Behavior and Speed

On smooth, differentiable convex functions, Gradient Descent converges steadily because each iteration uses gradient information to move towards the optimal solution; how fast it converges depends on the step size and on the shape of the function. The Simplex method is robust for linear programming problems, but it relies on evaluating vertices of the feasible region, so its running time can grow considerably on high-dimensional problems, and it does not apply to non-linear objectives at all. In practice, Gradient Descent is the faster and more efficient choice for large-scale, continuous optimization tasks, whereas Simplex excels at exact solutions to problems with linear constraints, particularly those with fewer variables.
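The dependence on step size is easy to see on a one-dimensional example. The sketch below is illustrative only: the objective f(x) = x^2, the helper name `run`, and the three learning rates are assumptions picked to show slow convergence, fast convergence, and divergence.

```python
def run(learning_rate, x0=1.0, steps=50):
    """Gradient descent on f(x) = x^2 (gradient 2x).

    Returns the distance from the minimum at 0 after `steps` updates.
    """
    x = x0
    for _ in range(steps):
        x -= learning_rate * 2 * x  # each step multiplies x by (1 - 2 * learning_rate)
    return abs(x)


small = run(0.01)     # factor 0.98 per step: converges, but slowly
good = run(0.4)       # factor 0.2 per step: converges quickly
too_large = run(1.1)  # factor -1.2 per step: overshoots and diverges

print(small, good, too_large)
```

For this particular function any learning rate between 0 and 1 converges, and values above 1 diverge; the thresholds for other functions depend on their curvature.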

Computational Complexity Analysis

Gradient Descent generally has a per-iteration computational cost of O(n) for n parameters, assuming the gradient itself can be evaluated in time linear in n, which favors scalability in high-dimensional problems. The Simplex algorithm's worst-case complexity is exponential, on the order of 2^n pivots on adversarial instances, though it usually runs in polynomial time in practice on linear programming problems; this gap makes its running time less predictable on large-scale tasks. Comparing the two, Gradient Descent offers a more consistent and scalable approach to optimization in continuous, differentiable settings, whereas Simplex excels on problems with structured linear constraints but suffers from this computational unpredictability.

Strengths and Limitations

Gradient Descent excels at optimizing differentiable functions by iteratively moving towards the minimum using gradient information, making it highly effective for large-scale and continuous problems, but on non-convex functions it is prone to getting stuck in local minima. The Simplex method is a powerful algorithm for linear programming, reliably finding global optima by traversing the vertices of the feasible region, though it is limited to linear problems and can be computationally intensive in very high dimensions. Gradient Descent's reliance on smooth gradient fields limits its use in non-differentiable or highly irregular spaces, whereas Simplex is not applicable to non-linear or non-convex optimization.
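The local-minimum limitation can be shown on a small non-convex function. This is a sketch under assumptions: the polynomial f(x) = x^4 - 3x^2 + x, the helper name `descend`, the learning rate, and the two starting points are chosen only to illustrate the effect.

```python
def f(x):
    # Illustrative non-convex objective with two separate minima.
    return x**4 - 3 * x**2 + x


def df(x):
    # Derivative of f.
    return 4 * x**3 - 6 * x + 1


def descend(x0, learning_rate=0.01, steps=1000):
    """Plain gradient descent on f, starting from x0."""
    x = x0
    for _ in range(steps):
        x -= learning_rate * df(x)
    return x


from_right = descend(2.0)   # settles in the shallower minimum near x = 1.13
from_left = descend(-2.0)   # settles in the deeper minimum near x = -1.30

print(from_right, f(from_right))
print(from_left, f(from_left))
```

Both runs stop at a point where the derivative is essentially zero, but only the run started from the left reaches the lower of the two minima. Which minimum is found depends entirely on the starting point.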

Real-World Applications

Gradient Descent excels in optimizing large-scale machine learning models, including deep neural networks and regression tasks, by efficiently minimizing loss functions through iterative parameter updates. The Simplex method is widely applied in operations research and linear programming problems, such as resource allocation in manufacturing, transportation logistics, and supply chain management, where it identifies optimal solutions within linear constraints. Both algorithms address distinct problem types, with Gradient Descent suited to continuous, non-linear optimization, while Simplex targets linear optimization challenges in practical industries.

Choosing the Right Method

Choosing the right optimization method depends on the problem's characteristics and constraints. Gradient Descent excels on continuous, differentiable functions with well-defined gradients, making it ideal for large-scale machine learning models. Simplex is better suited to linear programming problems, where the objective function and constraints are linear, and offers a geometric approach that finds optimal vertices of the feasible region. For non-convex or discrete optimization, hybrid methods or specialized algorithms may outperform both, which highlights the importance of problem structure in method selection.


libterm.com
