Linearity refers to a relationship between variables in which a change in one variable produces a proportional change in another, so the rate of change stays constant across the range. The concept is central to mathematics, physics, and engineering, where systems are often modeled with linear equations to simplify analysis and prediction. This article explains how linearity differs from collinearity and why the distinction matters when you build and interpret models.

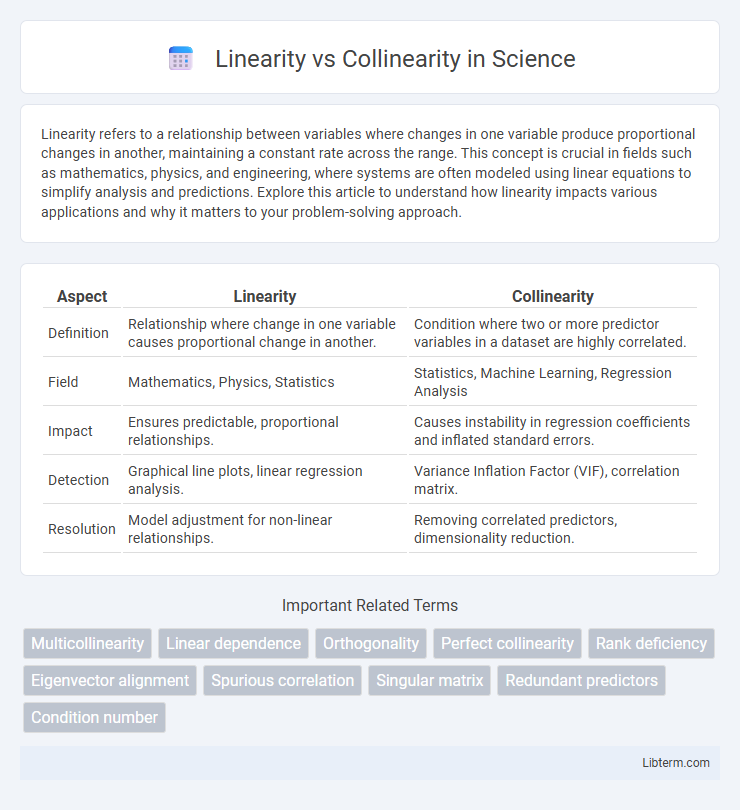

Table of Comparison

| Aspect | Linearity | Collinearity |
|---|---|---|
| Definition | Relationship where change in one variable causes proportional change in another. | Condition where two or more predictor variables in a dataset are highly correlated. |
| Field | Mathematics, Physics, Statistics | Statistics, Machine Learning, Regression Analysis |
| Impact | Ensures predictable, proportional relationships. | Causes instability in regression coefficients and inflated standard errors. |
| Detection | Graphical line plots, linear regression analysis. | Variance Inflation Factor (VIF), correlation matrix. |
| Resolution | Model adjustment for non-linear relationships. | Removing correlated predictors, dimensionality reduction. |

Understanding Linearity: Definition and Importance

Linearity refers to a relationship between variables where the change in the dependent variable is directly proportional to the change in one or more independent variables, represented by a straight line on a graph. Understanding linearity is crucial in fields like statistics and machine learning because linear models are simpler to build and interpret, and they predict well when the assumption holds. Non-linearity complicates analysis and may require more complex models, which makes linearity a foundational concept for data analysis and regression techniques.
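As a minimal sketch of what "a constant rate of change" means in practice, the snippet below fits a straight line to a small made-up dataset with NumPy. The data values and variable names are assumptions chosen for illustration, not taken from any real study.

```python
import numpy as np

# Hypothetical data: y rises by exactly 2 units for every unit of x.
x = np.array([0.0, 1.0, 2.0, 3.0, 4.0])
y = 2.0 * x + 1.0

# Fit a degree-1 polynomial (a straight line); polyfit returns [slope, intercept].
slope, intercept = np.polyfit(x, y, deg=1)

# For a linear relationship, the change in y per unit change in x is the
# same between every pair of neighboring points and equals the slope.
rates = np.diff(y) / np.diff(x)

print(slope, intercept, rates)
```

If the values in `rates` drifted systematically upward or downward, that would be a sign the relationship is not linear.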

What is Collinearity? Basics and Significance

Collinearity occurs when two or more predictor variables in a regression model are highly correlated, leading to redundancy in the data and unstable coefficient estimates. Understanding collinearity is crucial because it inflates standard errors, reduces the statistical power of hypothesis tests, and complicates interpretation of individual variable effects. Detecting collinearity often involves calculating variance inflation factors (VIF) or examining correlation matrices to ensure reliable regression analysis and valid inference.
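One way to compute the variance inflation factor is sketched below. It relies on the identity that the VIF of predictor j equals the j-th diagonal entry of the inverse of the predictors' correlation matrix. The helper name `vif` and the simulated data are assumptions made for this example.

```python
import numpy as np

def vif(X):
    """Return the VIF of each column of X (rows are observations)."""
    corr = np.corrcoef(X, rowvar=False)   # predictor correlation matrix
    return np.diag(np.linalg.inv(corr))   # VIF_j = [corr^-1]_jj

# Simulated predictors (hypothetical): x2 is nearly a copy of x1, x3 is unrelated.
rng = np.random.default_rng(0)
x1 = rng.normal(size=200)
x2 = x1 + rng.normal(scale=0.1, size=200)
x3 = rng.normal(size=200)
X = np.column_stack([x1, x2, x3])

vifs = vif(X)
print(vifs)
```

Here the first two VIFs come out large and the third stays close to 1. Cutoffs such as 5 or 10 are common rules of thumb rather than strict thresholds.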

Key Differences Between Linearity and Collinearity

Linearity refers to the relationship between variables where one variable changes proportionally with another, typically indicated by a straight-line graph in regression analysis. Collinearity describes a condition where two or more predictor variables in a multiple regression model are highly correlated, causing redundancy and instability in coefficient estimates. The key difference lies in linearity representing the form of relationship between dependent and independent variables, while collinearity concerns the intercorrelation among independent variables themselves.

Visualizing Linearity vs Collinearity

Visualizing linearity involves plotting data points to identify a straight-line relationship between variables, often using scatterplots with regression lines to assess how well the data fit a linear model. In contrast, visualizing collinearity focuses on detecting high correlations among predictor variables, commonly through correlation matrices, variance inflation factor (VIF) plots, or pairwise scatterplots of the predictors, where tightly clustered points along a line indicate multicollinearity. Effective visualization helps answer two separate questions: whether the outcome relates to each predictor along a straight line (linearity), and whether the predictors duplicate one another's information (collinearity). Both answers guide data preprocessing and model interpretation.

Effects of Linearity on Statistical Models

Linearity in statistical models means that each predictor has a consistent, additive effect on the outcome, which simplifies interpretation and supports accurate prediction. Violations of linearity can lead to biased parameter estimates, reducing model validity and increasing the risk of incorrect inferences. When the linearity assumption holds, or is restored through a suitable transformation, the model fits better, predicts more reliably, and supports valid hypothesis testing in regression analyses.

Impact of Collinearity in Regression Analysis

Collinearity occurs when two or more predictor variables in a regression model are highly correlated, leading to instability in coefficient estimates and inflated standard errors. This statistical phenomenon undermines the interpretability of individual predictors, making it difficult to determine their true impact on the dependent variable. Severe collinearity reduces model precision, increases variance of coefficient estimates, and may result in misleading conclusions about variable significance in regression analysis.
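The inflation of standard errors can be illustrated with a small simulation. The sketch below fits ordinary least squares twice, once with independent predictors and once with nearly collinear ones, and compares the coefficient standard errors. The helper `coef_std_errors`, the true coefficients, and the noise levels are all assumptions chosen for this example.

```python
import numpy as np

def coef_std_errors(X, y):
    """OLS estimates and standard errors; an intercept column is added."""
    A = np.column_stack([np.ones(len(X)), X])
    beta, *_ = np.linalg.lstsq(A, y, rcond=None)
    resid = y - A @ beta
    sigma2 = resid @ resid / (len(y) - A.shape[1])   # residual variance
    cov = sigma2 * np.linalg.inv(A.T @ A)            # Var(beta) = sigma^2 (A'A)^-1
    return beta, np.sqrt(np.diag(cov))

rng = np.random.default_rng(1)
n = 200
x1 = rng.normal(size=n)
noise = rng.normal(size=n)
x2_indep = rng.normal(size=n)                    # unrelated to x1
x2_collinear = x1 + 0.05 * rng.normal(size=n)    # almost identical to x1

# Same hypothetical true model in both cases: y = 1 + 2*x1 + 3*x2 + noise.
y_indep = 1 + 2 * x1 + 3 * x2_indep + noise
y_coll = 1 + 2 * x1 + 3 * x2_collinear + noise

_, se_indep = coef_std_errors(np.column_stack([x1, x2_indep]), y_indep)
_, se_coll = coef_std_errors(np.column_stack([x1, x2_collinear]), y_coll)

print("independent:", se_indep)
print("collinear:  ", se_coll)
```

The standard errors for the two slope coefficients are many times larger in the collinear fit, even though the sample size and noise are the same.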

Detecting Linearity and Collinearity in Data

Detecting linearity in data involves assessing the relationship between variables using scatterplots, correlation coefficients, and residual analysis to check whether a straight-line pattern exists. Collinearity is identified by examining Variance Inflation Factor (VIF) scores, tolerance values (the reciprocal of VIF), and condition indices, which indicate whether predictor variables are highly correlated. Proper detection combines visual tools with statistical metrics to separate true linear relationships with the outcome from collinear dependencies among predictors that may affect model stability.
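The collinearity diagnostics named above can be sketched with NumPy alone. The example computes a correlation matrix, tolerance values (1 / VIF), and condition indices from the eigenvalues of the correlation matrix. Note that this is one simplified variant: other formulations of condition indices work on the scaled design matrix including the intercept. The simulated predictors are assumptions for illustration.

```python
import numpy as np

# Simulated predictors (hypothetical): x2 is mostly determined by x1.
rng = np.random.default_rng(2)
x1 = rng.normal(size=300)
x2 = 0.9 * x1 + 0.1 * rng.normal(size=300)
x3 = rng.normal(size=300)
X = np.column_stack([x1, x2, x3])

# Pairwise correlations among predictors.
corr = np.corrcoef(X, rowvar=False)

# Tolerance is the reciprocal of VIF; values near 0 signal collinearity.
tolerance = 1.0 / np.diag(np.linalg.inv(corr))

# Condition indices: sqrt(largest eigenvalue / each eigenvalue).
eigvals = np.linalg.eigvalsh(corr)
cond_indices = np.sqrt(eigvals.max() / eigvals)

print(np.round(corr, 3))
print(tolerance)
print(cond_indices)
```

A large maximum condition index together with low tolerance for `x1` and `x2` points to the same pair of redundant predictors that the correlation matrix shows.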

Common Misconceptions About Linearity and Collinearity

Linearity refers to the relationship between variables where the change in the dependent variable is proportional to the change in independent variables, often represented in regression models. Collinearity, on the other hand, describes a scenario where two or more predictor variables are highly correlated, leading to redundancy and instability in coefficient estimates. A common misconception is confusing linearity with collinearity, assuming that a linear relationship between variables implies no multicollinearity issues, whereas collinearity concerns interdependencies among predictors, not the form of their relationship with the outcome.

Solutions to Address Collinearity Issues

To address collinearity issues in regression analysis, start with variance inflation factor (VIF) diagnostics to identify the problematic predictors. Ridge regression and principal component analysis (PCA) then reduce the effect of multicollinearity, the former by shrinking coefficients through regularization and the latter by transforming correlated variables into uncorrelated components. Variable selection methods like LASSO and stepwise regression can also improve model stability by dropping redundant features, although when predictors are strongly correlated the choice of which one is kept can be somewhat arbitrary.
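As a rough sketch of how ridge regression stabilizes coefficients, the snippet below uses the closed-form solution on centered data, beta = (X'X + alpha·I)⁻¹ X'y. The function name `ridge`, the penalty value `alpha=10.0`, and the simulated data are assumptions for illustration. In practice the predictors are usually standardized first and the penalty is tuned, for example by cross-validation.

```python
import numpy as np

def ridge(X, y, alpha):
    """Closed-form ridge coefficients on centered data (intercept not penalized)."""
    Xc = X - X.mean(axis=0)
    yc = y - y.mean()
    p = X.shape[1]
    return np.linalg.solve(Xc.T @ Xc + alpha * np.eye(p), Xc.T @ yc)

# Simulated collinear predictors (hypothetical).
rng = np.random.default_rng(3)
n = 100
x1 = rng.normal(size=n)
x2 = x1 + 0.05 * rng.normal(size=n)
X = np.column_stack([x1, x2])
y = 2 * x1 + 3 * x2 + rng.normal(size=n)

beta_ols = ridge(X, y, alpha=0.0)     # alpha = 0 reduces to ordinary least squares
beta_ridge = ridge(X, y, alpha=10.0)  # penalty shrinks the coefficient vector

print("OLS:  ", beta_ols)
print("ridge:", beta_ridge)
```

The ridge coefficients have a smaller overall magnitude than the least-squares ones. The penalty trades a small amount of bias for a reduction in variance.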

Best Practices for Managing Linearity and Collinearity in Analysis

Linearity in regression models refers to a directly proportional relationship between independent and dependent variables, while collinearity occurs when independent variables are highly correlated with one another, distorting coefficient estimates. Best practices for managing linearity include checking residual plots to verify the assumption and, where it fails, applying a logarithmic transformation or adding polynomial terms to improve model fit. To address collinearity, use variance inflation factor (VIF) analysis to identify problematic predictors, then remove redundant variables, combine correlated features through principal component analysis, or employ regularization methods like ridge regression.
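The effect of a logarithmic transformation can be illustrated with a noise-free toy example. The sketch below compares R² for a straight-line fit before and after taking the log of an exponentially growing outcome. The helper `r_squared` and the growth parameters are assumptions made for this example.

```python
import numpy as np

def r_squared(x, y):
    """R^2 of a straight-line least-squares fit of y on x."""
    slope, intercept = np.polyfit(x, y, deg=1)
    resid = y - (slope * x + intercept)
    return 1.0 - (resid @ resid) / np.sum((y - y.mean()) ** 2)

# Hypothetical exponential growth: y = 3 * exp(0.8 * x), no noise.
x = np.linspace(0.0, 5.0, 30)
y = 3.0 * np.exp(0.8 * x)

r2_raw = r_squared(x, y)           # straight line fitted to a curved relationship
r2_log = r_squared(x, np.log(y))   # log(y) = log(3) + 0.8 * x is exactly linear

print(r2_raw, r2_log)
```

The fit on the raw scale leaves systematic curvature in the residuals, while the fit on the log scale is essentially perfect. With real data, a residual plot after the transformation is still the way to confirm that the assumption holds.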

libterm.com