Classification is a vital process in organizing data into predefined categories based on shared features, enabling efficient decision-making and pattern recognition. It plays a critical role in fields like machine learning, data mining, and information retrieval by helping systems automatically identify and label new data. Explore the rest of the article to understand how classification techniques can enhance your data analysis strategy.

Table of Comparison

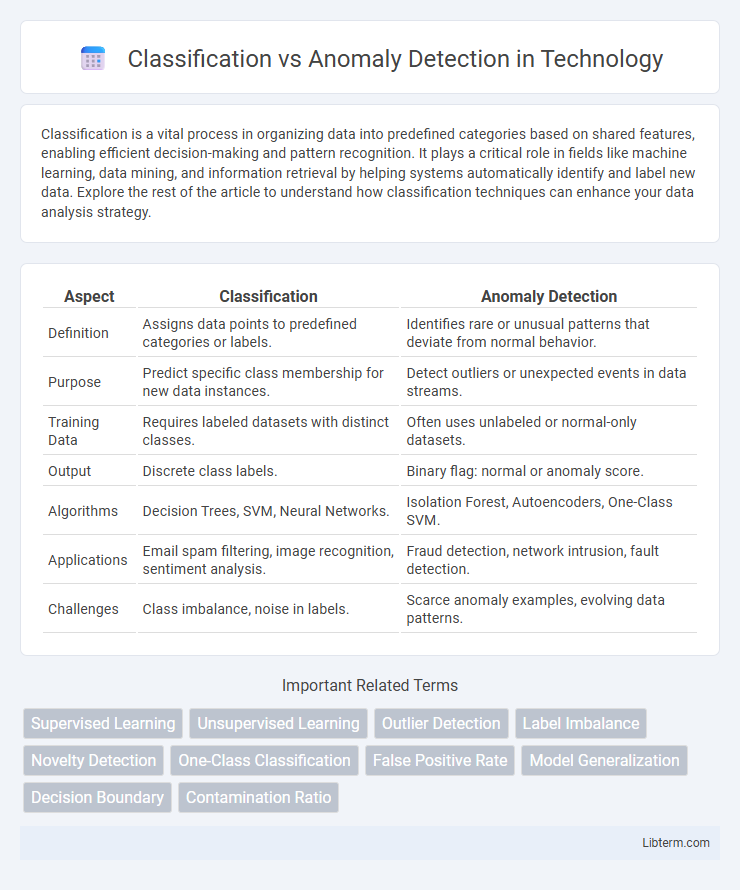

| Aspect | Classification | Anomaly Detection |

|---|---|---|

| Definition | Assigns data points to predefined categories or labels. | Identifies rare or unusual patterns that deviate from normal behavior. |

| Purpose | Predict specific class membership for new data instances. | Detect outliers or unexpected events in data streams. |

| Training Data | Requires labeled datasets with distinct classes. | Often uses unlabeled or normal-only datasets. |

| Output | Discrete class labels. | Binary flag: normal or anomaly score. |

| Algorithms | Decision Trees, SVM, Neural Networks. | Isolation Forest, Autoencoders, One-Class SVM. |

| Applications | Email spam filtering, image recognition, sentiment analysis. | Fraud detection, network intrusion, fault detection. |

| Challenges | Class imbalance, noise in labels. | Scarce anomaly examples, evolving data patterns. |

Introduction to Classification and Anomaly Detection

Classification involves categorizing data points into predefined classes based on labeled training datasets, enabling predictive modeling across various applications such as image recognition and spam detection. Anomaly detection focuses on identifying data points that deviate significantly from the norm, often used for fraud detection, network security, and fault diagnosis. Both techniques rely on machine learning algorithms but serve distinct purposes: classification separates known categories, while anomaly detection highlights unusual or novel patterns in data.

Defining Classification: Concepts and Applications

Classification is a supervised learning technique used to categorize data into predefined classes based on labeled training examples. It involves algorithms such as decision trees, support vector machines, and neural networks to assign input data to specific categories with high accuracy. Common applications include spam detection, medical diagnosis, and image recognition, where precise categorization of data points is critical for decision-making.

Understanding Anomaly Detection: Key Principles

Anomaly detection identifies patterns in data that deviate significantly from the norm, indicating potential errors, fraud, or novel events. It relies on defining a baseline of normal behavior through statistical models, clustering, or machine learning techniques to distinguish outliers effectively. Unlike classification, which requires labeled data for known categories, anomaly detection excels in recognizing previously unseen irregularities without explicit supervision.

Core Differences Between Classification and Anomaly Detection

Classification assigns predefined labels to data based on learned patterns from a labeled training set, enabling the prediction of known categories with high accuracy. Anomaly detection identifies data points that deviate significantly from the normal data distribution, often without prior labeling, and is used to detect rare or novel events. The core difference lies in classification's reliance on labeled examples for all classes versus anomaly detection's focus on unsupervised or semi-supervised identification of outliers in predominantly normal data.

Algorithms Used in Classification

Classification algorithms primarily include decision trees, support vector machines (SVM), k-nearest neighbors (KNN), logistic regression, and neural networks, each designed to assign input data into predefined categories based on labeled training datasets. These algorithms leverage features extracted from the data to create models that predict class membership with high accuracy, optimizing performance metrics such as precision, recall, and F1-score. Ensemble methods like random forests and gradient boosting further enhance classification performance by combining multiple weak classifiers to reduce bias and variance.

Techniques for Anomaly Detection

Techniques for anomaly detection include statistical methods that model data distribution to identify outliers, machine learning algorithms such as isolation forests and support vector machines that learn normal patterns to detect deviations, and deep learning approaches like autoencoders and recurrent neural networks that capture complex temporal or spatial data relationships. Unlike classification, which categorizes data into predefined labels, anomaly detection emphasizes identifying rare, unexpected patterns without prior labeling. This distinction enables anomaly detection methods to effectively handle unlabeled data and uncover novel or emerging anomalies in domains such as cybersecurity, fraud detection, and predictive maintenance.

Evaluation Metrics: Comparing Performance

Classification evaluation metrics include accuracy, precision, recall, F1-score, and ROC-AUC, which quantify how well a model distinguishes between known categories. Anomaly detection metrics often emphasize precision, recall, and the Area Under the Precision-Recall Curve (AUPRC) due to the typically imbalanced nature of anomalies versus normal data. Comparing performance requires selecting appropriate metrics based on the specific task, with anomaly detection demanding careful attention to false positives and false negatives to ensure reliable identification of outliers.

Use Cases: When to Choose Classification vs Anomaly Detection

Classification excels in scenarios where data labels are available and predefined categories exist, such as email spam filtering, medical diagnosis, or credit scoring. Anomaly detection is preferred for identifying unusual patterns or rare events without labeled data, useful in fraud detection, network security breaches, and equipment fault monitoring. Choosing between classification and anomaly detection depends on the availability of labeled datasets and whether the task involves categorizing known classes or discovering novel, suspicious instances.

Challenges and Limitations of Each Approach

Classification challenges include the need for extensive labeled data and difficulty handling imbalanced datasets, often resulting in biased model performance. Anomaly detection struggles with defining normal behavior due to the scarcity of representative anomaly examples and high false positive rates from dynamic data environments. Both approaches face limitations in adaptability and generalization across diverse, evolving real-world datasets.

Future Trends in Classification and Anomaly Detection

Future trends in classification emphasize the integration of deep learning models with explainable AI to enhance model transparency and accuracy in complex data environments. Anomaly detection is advancing towards real-time analytics powered by edge computing and unsupervised learning techniques to identify rare events in dynamic datasets. Both fields increasingly leverage hybrid approaches combining supervised and unsupervised methods for improved adaptability and performance in diverse applications such as cybersecurity, finance, and healthcare.

Classification Infographic

libterm.com

libterm.com