Failover is a process in IT systems that automatically switches to a redundant or standby system when the primary system fails, keeping services available and minimizing downtime. It plays a vital role in preserving data integrity and a seamless user experience during unexpected outages. This article explains how failover works, how it compares with anti-affinity, and how the two can be combined to improve system reliability.

Table of Comparison

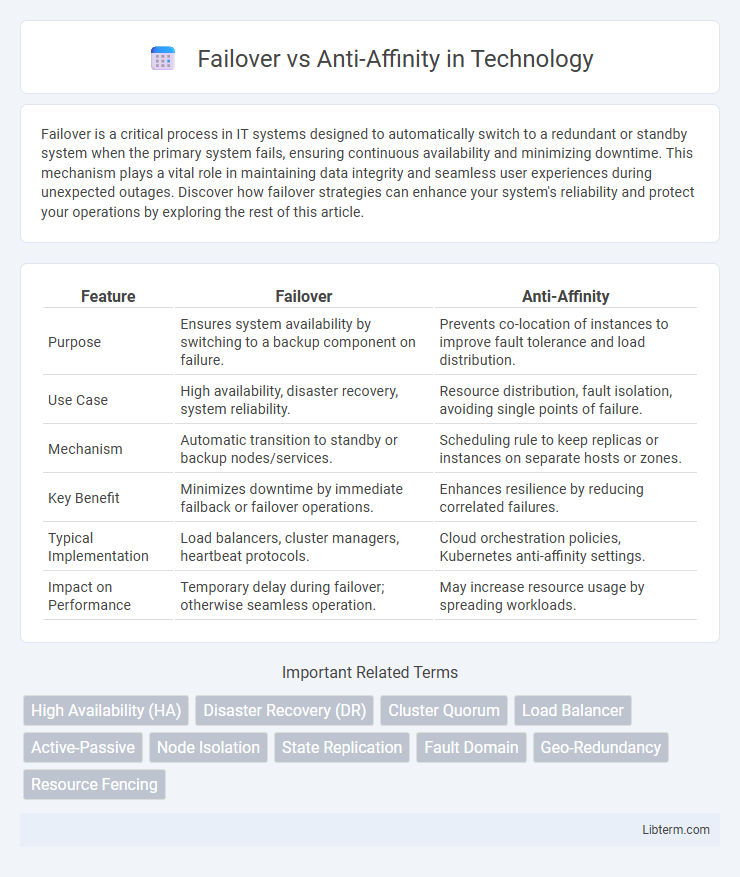

| Feature | Failover | Anti-Affinity |
|---|---|---|
| Purpose | Keeps a system available by switching to a backup component when a component fails. | Prevents co-location of instances to improve fault tolerance and load distribution. |
| Use Case | High availability, disaster recovery, system reliability. | Resource distribution, fault isolation, avoiding single points of failure. |
| Mechanism | Automatic transition to standby or backup nodes or services. | Scheduling rule that keeps replicas or instances on separate hosts or zones. |
| Key Benefit | Minimizes downtime by switching to a standby quickly after a failure. | Improves resilience by reducing correlated failures. |
| Typical Implementation | Load balancers, cluster managers, heartbeat protocols. | Cloud orchestration policies, Kubernetes anti-affinity rules. |
| Impact on Performance | Brief interruption during the switchover; otherwise no effect on normal operation. | May increase resource usage, because spreading workloads requires more hosts. |

Understanding Failover: Definition and Purpose

Failover is the automatic switch to a redundant or standby system when the primary system fails or terminates abnormally, ensuring continuous availability and minimizing downtime. It plays a critical role in disaster recovery and high-availability environments by maintaining service continuity and data integrity. Common configurations include active-passive, in which a standby node stays idle until the active node fails, and active-active, in which all nodes serve traffic and the survivors absorb the load of a failed node. Understanding these configurations is essential for designing resilient, reliable systems.
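To make active-passive failover concrete, here is a minimal Python sketch of heartbeat-based failure detection. It assumes a fixed heartbeat timeout and timestamps passed in explicitly rather than read from a clock; the `Node` and `ActivePassivePair` classes and the node names are hypothetical and do not correspond to any particular cluster manager.

```python
from dataclasses import dataclass


@dataclass
class Node:
    name: str
    last_heartbeat: float  # time of the most recent heartbeat, in seconds


class ActivePassivePair:
    """Hypothetical active-passive pair: the standby takes over when the
    active node has been silent for longer than `timeout` seconds."""

    def __init__(self, active: Node, standby: Node, timeout: float = 3.0):
        self.active = active
        self.standby = standby
        self.timeout = timeout

    def record_heartbeat(self, node: Node, now: float) -> None:
        node.last_heartbeat = now

    def is_alive(self, node: Node, now: float) -> bool:
        # A node counts as failed once its last heartbeat is older than the timeout.
        return now - node.last_heartbeat <= self.timeout

    def check(self, now: float) -> str:
        # Fail over only if the active node is down and the standby is healthy.
        if not self.is_alive(self.active, now) and self.is_alive(self.standby, now):
            self.active, self.standby = self.standby, self.active
        return self.active.name


primary = Node("db-primary", last_heartbeat=0.0)
replica = Node("db-replica", last_heartbeat=0.0)
pair = ActivePassivePair(primary, replica, timeout=3.0)
print(pair.check(now=2.0))  # db-primary (heartbeat is 2 s old, within the timeout)
pair.record_heartbeat(replica, now=4.0)  # only the replica keeps reporting
print(pair.check(now=5.0))  # db-replica (primary silent for 5 s, standby promoted)
```

Checking the standby's health before promoting it avoids flipping traffic to a node that is also down; real systems add further safeguards, such as fencing the old primary.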

What is Anti-Affinity? Core Concepts Explained

Anti-affinity is a scheduling policy in cloud and virtualization environments that places specific virtual machines or workloads on separate physical hosts to reduce risk and improve fault tolerance. Unlike failover, which automatically switches to a standby system after a failure, anti-affinity proactively prevents the co-location that could cause related workloads to fail at the same time. This improves system availability by reducing single points of failure and distributing workloads across the infrastructure.
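The placement rule can be sketched as a small greedy scheduler. This is an illustrative Python sketch, assuming each replica is identified by a `(group, replica_id)` pair and that all hosts are interchangeable; the function name and host names are hypothetical, and production schedulers weigh many more constraints.

```python
from collections import defaultdict


def place_with_anti_affinity(replicas, hosts):
    """Assign each (group, replica_id) to a host so that no host runs two
    replicas of the same group; raise if the rule cannot be satisfied."""
    placement = {}
    groups_on_host = defaultdict(set)  # host -> groups already placed there
    load = defaultdict(int)            # host -> number of replicas placed
    for group, replica_id in replicas:
        # Anti-affinity: exclude hosts that already run this group.
        candidates = [h for h in hosts if group not in groups_on_host[h]]
        if not candidates:
            raise RuntimeError(f"no host satisfies anti-affinity for {group}/{replica_id}")
        # Among eligible hosts, pick the least loaded (ties go to the first listed).
        host = min(candidates, key=lambda h: load[h])
        placement[(group, replica_id)] = host
        groups_on_host[host].add(group)
        load[host] += 1
    return placement


replicas = [("web", 0), ("web", 1), ("web", 2), ("db", 0), ("db", 1)]
hosts = ["host-a", "host-b", "host-c"]
for replica, host in place_with_anti_affinity(replicas, hosts).items():
    print(replica, "->", host)
```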

Key Differences Between Failover and Anti-Affinity

Failover primarily ensures high availability by automatically switching to a backup system or server when the primary one fails, minimizing downtime and service interruptions. Anti-affinity, on the other hand, is a deployment strategy that prevents related instances or workloads from being placed on the same physical host or failure domain, enhancing fault tolerance by reducing the risk of simultaneous failures. The key difference lies in failover's reactive approach to failure and anti-affinity's proactive distribution of resources to prevent single points of failure.

How Failover Mechanisms Enhance System Availability

Failover mechanisms improve system availability by automatically detecting failures and switching operations to backup systems, minimizing downtime and keeping services running. This automated response reduces the risk of a total outage by combining redundancy with quick recovery. Failover strategies are therefore central to high-availability architectures, enabling a smooth transition during hardware or software faults.
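Failover can also happen on the client side, where a caller switches between endpoints. The sketch below assumes an ordered list of endpoints (primary first) and a caller-supplied request function that raises `ConnectionError` on failure; `call_with_failover`, `fake_request`, and the hostnames are hypothetical stand-ins, not a real client library.

```python
from typing import Callable, List, Sequence, TypeVar

T = TypeVar("T")


def call_with_failover(endpoints: Sequence[str], request: Callable[[str], T]) -> T:
    """Try endpoints in priority order, failing over to the next one on error."""
    errors: List[str] = []
    for endpoint in endpoints:
        try:
            return request(endpoint)
        except ConnectionError as exc:
            # Record the failure and move on to the next (backup) endpoint.
            errors.append(f"{endpoint}: {exc}")
    raise ConnectionError("all endpoints failed: " + "; ".join(errors))


# Stand-in for a real network call: the primary is simulated as unreachable.
down = {"primary.example.internal"}


def fake_request(endpoint: str) -> str:
    if endpoint in down:
        raise ConnectionError("unreachable")
    return f"served by {endpoint}"


print(call_with_failover(["primary.example.internal", "standby.example.internal"], fake_request))
# prints: served by standby.example.internal
```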

The Role of Anti-Affinity in Preventing Resource Co-location

Anti-affinity policies prevent resource co-location by distributing critical workloads or virtual machines across different physical hosts or failure domains, which reduces the risk of simultaneous failures. Unlike failover mechanisms, which activate backup resources after a failure, anti-affinity proactively builds resilience by limiting how many workloads share a single point of failure. This separation improves availability and fault tolerance in cloud environments and data centers, making anti-affinity a key part of high-availability architectures.
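The effect of separation can be shown by counting which replicas survive a single host failure. This Python sketch assumes a placement that maps `(group, replica_id)` to a host name; the placements and host names are made up for illustration.

```python
def surviving_replicas(placement, failed_host):
    """Count, per group, the replicas that keep running when failed_host goes down."""
    survivors = {}
    for (group, _replica_id), host in placement.items():
        survivors.setdefault(group, 0)
        if host != failed_host:
            survivors[group] += 1
    return survivors


# All three web replicas on one host versus one replica per host.
packed = {("web", 0): "host-a", ("web", 1): "host-a", ("web", 2): "host-a"}
spread = {("web", 0): "host-a", ("web", 1): "host-b", ("web", 2): "host-c"}

print(surviving_replicas(packed, "host-a"))  # {'web': 0}: total outage
print(surviving_replicas(spread, "host-a"))  # {'web': 2}: service stays up
```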

Use Cases: When to Use Failover vs Anti-Affinity

Failover is essential for high-availability environments where automatic recovery from hardware or software failures is critical, such as in database clusters and enterprise-grade web services. Anti-affinity is ideal for workload distribution, ensuring that critical virtual machines or containers do not run on the same physical host, thus minimizing the risk of simultaneous failures due to host-level issues. Choosing failover is appropriate when immediate service continuity is required, while anti-affinity is best for optimizing fault tolerance by spreading risk across multiple hosts.

Benefits of Implementing Failover Strategies

Failover strategies improve system reliability by automatically redirecting workloads to backup resources during failures, minimizing downtime and keeping services available. Implementing failover mechanisms improves fault tolerance, reduces the risk of data loss when the backup holds a replicated copy of the data, and supports business continuity for critical applications. Failover also provides rapid recovery, which improves user experience and operational resilience; anti-affinity policies, by contrast, focus on workload distribution rather than failure recovery.

Advantages of Anti-Affinity Policies in Cloud Environments

Anti-affinity policies in cloud environments make applications more resilient by distributing virtual machine instances across separate physical hosts, reducing the risk of simultaneous failures. They improve fault tolerance and availability by isolating workloads from one another and reducing resource contention between them. Spreading instances also balances load across hosts and limits downtime during maintenance or unexpected outages, because taking one host offline affects only the instances running on it.

Common Challenges and Trade-offs

Failover and anti-affinity policies both improve application availability and fault tolerance, but they introduce challenges such as resource allocation conflicts and greater management complexity. Failover optimizes for rapid recovery by moving workloads to standby resources, which can cause resource contention and brief downtime during the transition. Anti-affinity enforces workload distribution to avoid single points of failure, but it can lower resource utilization and limit scheduling flexibility; a strict rule, for example, cannot place more replicas than there are eligible hosts.
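The scheduling trade-off is easiest to see when there are more replicas than hosts. The sketch below contrasts a strict (hard) rule with a best-effort (soft) rule for a single service, loosely analogous to the required and preferred anti-affinity modes in Kubernetes; the `place_replicas` function and host names are hypothetical.

```python
from typing import List, Optional


def place_replicas(count: int, hosts: List[str], hard: bool = True) -> List[Optional[str]]:
    """Place `count` replicas of one service.

    hard=True:  never share a host; replicas that cannot be placed stay None (pending).
    hard=False: prefer empty hosts, but fall back to the least-loaded host.
    """
    load = {h: 0 for h in hosts}
    placement: List[Optional[str]] = []
    for _ in range(count):
        empty = [h for h in hosts if load[h] == 0]
        if empty:
            host = empty[0]
        elif hard:
            placement.append(None)  # the strict rule cannot be satisfied
            continue
        else:
            host = min(hosts, key=lambda h: load[h])  # ties go to the first listed
        load[host] += 1
        placement.append(host)
    return placement


hosts = ["host-a", "host-b"]
print(place_replicas(3, hosts, hard=True))   # ['host-a', 'host-b', None]
print(place_replicas(3, hosts, hard=False))  # ['host-a', 'host-b', 'host-a']
```

The hard rule preserves isolation at the cost of an unscheduled replica, while the soft rule keeps full capacity but lets two replicas share a failure domain.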

Best Practices for Combining Failover and Anti-Affinity

Combining failover and anti-affinity means placing workloads so that no single failure takes down every copy of a service, and then recovering automatically when a failure does occur. Anti-affinity policies distribute critical applications across multiple nodes or data centers to prevent simultaneous outages, while failover mechanisms maintain service continuity by shifting traffic or work to the surviving instances. Tools such as Kubernetes pod anti-affinity, paired with robust failover orchestration, help minimize downtime in distributed environments.
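As an illustration of the Kubernetes side, the following Python sketch builds the `affinity` section of a pod spec containing a pod anti-affinity rule that keeps pods labelled `app=<name>` on different nodes via the `kubernetes.io/hostname` topology key. The `web` label and the `pod_anti_affinity` helper are assumptions for illustration. Using `topology.kubernetes.io/zone` as the topology key would spread pods across zones instead. Failover still has to come from elsewhere, for example a Deployment's ReplicaSet replacing pods that were lost with a failed node.

```python
import json


def pod_anti_affinity(app_label: str, required: bool = True) -> dict:
    """Build a pod spec `affinity` section that keeps pods labelled
    app=<app_label> off nodes already running such a pod."""
    term = {
        "labelSelector": {"matchLabels": {"app": app_label}},
        "topologyKey": "kubernetes.io/hostname",  # one matching pod per node
    }
    if required:
        # Hard rule: the scheduler leaves the pod pending rather than break it.
        rule = {"requiredDuringSchedulingIgnoredDuringExecution": [term]}
    else:
        # Soft rule: the scheduler tries to honour it, weighted from 1 to 100.
        rule = {
            "preferredDuringSchedulingIgnoredDuringExecution": [
                {"weight": 100, "podAffinityTerm": term}
            ]
        }
    return {"podAntiAffinity": rule}


print(json.dumps(pod_anti_affinity("web"), indent=2))
```

The resulting dictionary corresponds to the `spec.template.spec.affinity` field of a Deployment manifest, so it could be emitted as JSON or YAML when generating manifests programmatically.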

libterm.com