Caching improves website performance by temporarily storing frequently accessed data, which reduces server load and page load times. Efficient caching strategies lead to faster user experiences and lower bandwidth consumption. The rest of this article compares caching with cold starts, the startup delay seen in serverless and containerized services, and explains how caching and warm-up techniques keep response times low.

Comparison Table

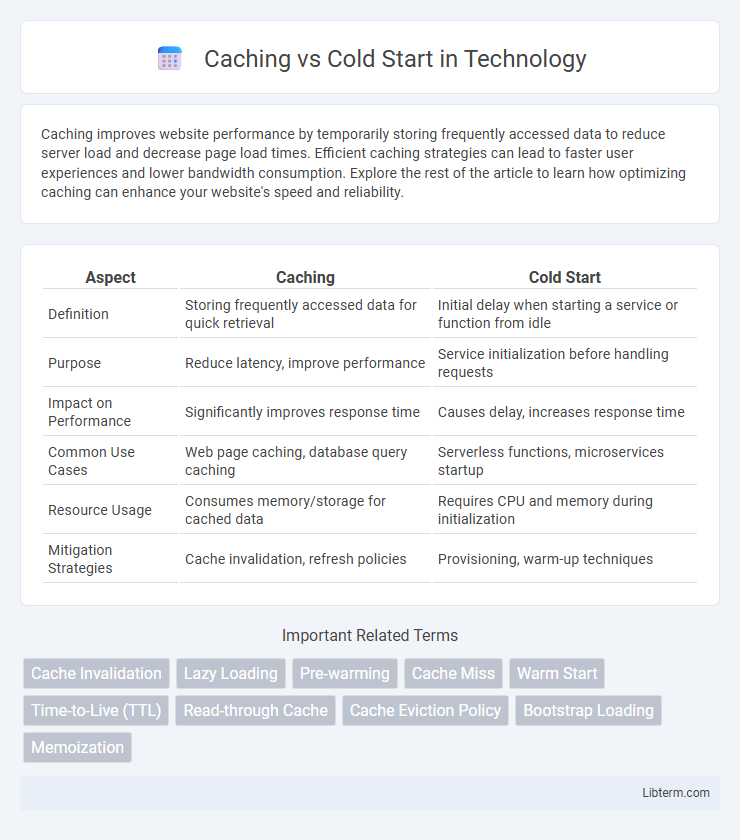

| Aspect | Caching | Cold Start |
|---|---|---|
| Definition | Storing frequently accessed data for quick retrieval | Initial delay when a service or function starts from an idle state |
| Purpose / Cause | Reduce latency and improve performance | The runtime and code must be initialized before the first request can be handled |
| Impact on Performance | Significantly reduces response time on cache hits | Adds delay to the first requests, increasing response time |
| Common Contexts | Web page caching, database query caching | Serverless functions, microservice and container startup |
| Resource Usage | Consumes memory or storage for cached data | Consumes CPU and memory during initialization |
| Management / Mitigation | Eviction, invalidation, and refresh policies | Provisioned concurrency, warm-up requests, leaner initialization code |

Introduction to Caching and Cold Start

Caching involves storing frequently accessed data or computations temporarily to accelerate response times and reduce system load, enhancing overall application performance. Cold start refers to the initial delay experienced when a serverless function or application instance is started from scratch, often leading to slower response times during the first invocation. Understanding the trade-offs between caching strategies and cold start optimization is crucial for designing efficient, responsive systems in cloud and serverless environments.

Understanding the Cold Start Problem

The cold start problem occurs when a serverless function or container is invoked and no initialized instance is available, either because it is the first invocation or because an idle instance has been reclaimed. The platform must then create the environment, load the runtime and code, and run initialization before handling the request, which adds latency. Keeping instances warm and caching data or initialized resources for reuse reduces how often, and how severely, requests pay this cost. Understanding cold starts is crucial for optimizing performance in cloud computing, especially in event-driven architectures.
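Because cold starts happen both on the first invocation and after an idle instance is reclaimed, traffic gaps matter as much as traffic volume. The sketch below counts cold starts for a single instance under a simplified, hypothetical model: requests are handled one at a time, execution time is ignored, and the instance is reclaimed after `idle_timeout` seconds without traffic. Real platforms do not publish a fixed idle timeout, so the value used here is an assumption for illustration only.

```python
from typing import List


def count_cold_starts(arrival_times: List[float], idle_timeout: float) -> int:
    """Count cold starts for one instance that is reclaimed after
    `idle_timeout` seconds without traffic (simplified, hypothetical model)."""
    cold_starts = 0
    last_activity = None  # no instance exists before the first request
    for t in sorted(arrival_times):
        if last_activity is None or t - last_activity > idle_timeout:
            cold_starts += 1  # environment must be created and initialized
        last_activity = t
    return cold_starts


# Requests at 0 s, 5 s, and 10 s, then a long gap before 1000 s.
print(count_cold_starts([0, 5, 10, 1000], idle_timeout=300))  # 2
```

The first request always pays a cold start, and the request at 1000 s pays another because the 990 s gap exceeds the assumed timeout.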

What is Caching?

Caching involves storing frequently accessed data temporarily to reduce latency and improve application performance by minimizing direct access to slower storage layers or computational resources. In cloud and serverless environments it speeds up data retrieval, which can reduce the work a function performs after a cold start, although it does not remove the initialization delay itself. Effective caching strategies can significantly optimize resource usage, reduce operational costs, and improve user experience through quicker load times and smoother execution.
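A common way to put this into practice is the cache-aside (lazy loading) pattern: check the cache first, and only on a miss call the slower source and store the result. The following is a minimal in-memory sketch in Python; `slow_lookup` and its 50 ms delay are hypothetical stand-ins for a database query or network call.

```python
import time
from typing import Callable, Dict


class CacheAside:
    """Minimal in-memory cache-aside (lazy loading) wrapper around a slow loader."""

    def __init__(self, loader: Callable[[str], str]) -> None:
        self._loader = loader  # slow source of truth, e.g. a database query
        self._store: Dict[str, str] = {}
        self.hits = 0
        self.misses = 0

    def get(self, key: str) -> str:
        if key in self._store:
            self.hits += 1
            return self._store[key]  # fast path: served from memory
        self.misses += 1
        value = self._loader(key)  # slow path: go to the backing store
        self._store[key] = value  # populate the cache for next time
        return value


def slow_lookup(key: str) -> str:
    time.sleep(0.05)  # hypothetical stand-in for network or disk latency
    return key.upper()


cache = CacheAside(slow_lookup)
cache.get("home")  # miss: pays the 50 ms lookup
cache.get("home")  # hit: returned from memory
print(cache.hits, cache.misses)  # 1 1
```

This sketch never expires entries, so it only suits data that rarely changes; expiration and eviction are separate design decisions.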

Key Differences Between Caching and Cold Start

Caching stores frequently accessed data in memory to reduce latency on repeated requests. A cold start occurs when a system or application instance is initialized from scratch, so the first requests pay for resource allocation, runtime loading, and setup; any in-memory cache in that new instance also starts empty. The key difference is that caching is an optimization that reuses previously loaded data, whereas a cold start is the initial delay incurred before an instance, and its cache, is ready.

Impact of Cold Start on Performance

Cold starts degrade application performance by adding latency while serverless functions or container instances are initialized, as resources are allocated and runtime environments are set up from scratch. Requests served by already-warm instances, or answered from cached data, avoid this repeated setup and data retrieval work. Reducing cold starts through strategies like provisioned concurrency or leaner initialization routines improves user experience by keeping latency low and throughput consistent.
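A simple way to reason about this impact is to treat a cold start as an extra initialization cost paid by a fraction of requests. If a fraction $p$ of requests are cold, each paying initialization time $T_{init}$ on top of handler time $T_{exec}$, the mean latency is $p(T_{init} + T_{exec}) + (1 - p)T_{exec} = T_{exec} + pT_{init}$. The sketch below encodes that formula; the numbers in the example are assumptions, not measurements.

```python
def expected_latency_ms(cold_fraction: float, init_ms: float, exec_ms: float) -> float:
    """Mean latency when a fraction of requests also pays initialization cost."""
    if not 0.0 <= cold_fraction <= 1.0:
        raise ValueError("cold_fraction must be between 0 and 1")
    return exec_ms + cold_fraction * init_ms


def cold_request_latency_ms(init_ms: float, exec_ms: float) -> float:
    """Latency of a single request that lands on a cold instance."""
    return init_ms + exec_ms


# Hypothetical numbers: 1% cold starts, 800 ms init, 40 ms handler time.
mean_ms = expected_latency_ms(0.01, 800, 40)
worst_ms = cold_request_latency_ms(800, 40)
print(f"mean = {mean_ms:.1f} ms, cold request = {worst_ms:.1f} ms")
```

With these assumed numbers the mean rises only from 40 ms to about 48 ms, yet every cold request takes about 840 ms, which is why cold starts tend to show up in tail latency (for example p99) more than in averages.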

How Caching Mitigates Cold Start Issues

Caching reduces the cost of cold starts in two ways. Resources initialized once per instance, such as SDK clients, database connections, or parsed configuration, can be kept in memory and reused by every later invocation of that instance, and results stored in an external cache spare a freshly started instance from recomputing or refetching them. This confines initialization and dependency-loading overhead to the first request each instance handles, so applications keep response times consistent during high-demand periods.

Common Caching Strategies

Common caching strategies such as time-to-live (TTL) expiration, write-through, and write-back keep frequently accessed data close to the compute layer, which reduces the work a serverless function must do after a cold start. TTL caches keep data reasonably fresh by expiring entries after a fixed period. Write-through caches update the cache and the backend synchronously, keeping them consistent at the cost of slower writes. Write-back caches acknowledge a write once the cache is updated and defer the backend update, which speeds up writes but leaves the backend stale until the next flush and risks losing data if the cache fails first.
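The three strategies differ in when data expires and when the backend sees a write. The following Python sketch shows each one in its simplest form, with a plain dictionary standing in for the backend (an assumption for illustration; a real backend would be a database or remote store). TTL is an expiration policy and can be combined with either write policy.

```python
import time
from typing import Dict, Optional, Set, Tuple


class TTLCache:
    """Entries expire `ttl` seconds after they are written."""

    def __init__(self, ttl: float) -> None:
        self.ttl = ttl
        self._data: Dict[str, Tuple[float, str]] = {}

    def get(self, key: str) -> Optional[str]:
        item = self._data.get(key)
        if item is None:
            return None
        expires_at, value = item
        if time.monotonic() >= expires_at:
            del self._data[key]  # expired: treat as a miss
            return None
        return value

    def set(self, key: str, value: str) -> None:
        self._data[key] = (time.monotonic() + self.ttl, value)


class WriteThroughCache:
    """Every write updates the cache and the backend synchronously."""

    def __init__(self, backend: Dict[str, str]) -> None:
        self.backend = backend
        self.cache: Dict[str, str] = {}

    def write(self, key: str, value: str) -> None:
        self.cache[key] = value
        self.backend[key] = value  # caller waits for the backend write


class WriteBackCache:
    """Writes go to the cache first; the backend is updated on flush()."""

    def __init__(self, backend: Dict[str, str]) -> None:
        self.backend = backend
        self.cache: Dict[str, str] = {}
        self.dirty: Set[str] = set()

    def write(self, key: str, value: str) -> None:
        self.cache[key] = value
        self.dirty.add(key)  # backend is stale until the next flush

    def flush(self) -> None:
        for key in self.dirty:
            self.backend[key] = self.cache[key]
        self.dirty.clear()


db: Dict[str, str] = {}
wb = WriteBackCache(db)
wb.write("user:1", "Ada")
print("user:1" in db)  # False
wb.flush()
print(db["user:1"])  # Ada
```

If the process crashed between `write` and `flush`, the write-back update would be lost, which is the durability trade-off described above.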

Challenges and Limitations of Caching

Caching improves application performance by storing frequently accessed data, but it faces significant challenges such as cache invalidation, data staleness, and memory constraints. Consistency problems arise when the underlying data changes faster than the cache is refreshed or invalidated, so outdated information is served. In addition, limited cache size forces an eviction policy, which can remove frequently needed entries prematurely and send more requests back to slower storage, hurting performance and user experience.
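Bounded memory and explicit invalidation can be illustrated with a least-recently-used (LRU) cache, one common eviction policy among several. The sketch below builds one on Python's `collections.OrderedDict`, whose `move_to_end` and `popitem(last=False)` methods make recency tracking cheap. The capacity of 2 is chosen only to make eviction visible.

```python
from collections import OrderedDict
from typing import Generic, Hashable, Optional, TypeVar

K = TypeVar("K", bound=Hashable)
V = TypeVar("V")


class LRUCache(Generic[K, V]):
    """Bounded cache that evicts the least recently used entry when full."""

    def __init__(self, capacity: int) -> None:
        if capacity <= 0:
            raise ValueError("capacity must be positive")
        self.capacity = capacity
        self._data: "OrderedDict[K, V]" = OrderedDict()

    def get(self, key: K) -> Optional[V]:
        if key not in self._data:
            return None
        self._data.move_to_end(key)  # mark as most recently used
        return self._data[key]

    def put(self, key: K, value: V) -> None:
        if key in self._data:
            self._data.move_to_end(key)
        self._data[key] = value
        if len(self._data) > self.capacity:
            self._data.popitem(last=False)  # evict the least recently used

    def invalidate(self, key: K) -> None:
        """Drop an entry explicitly, e.g. after the underlying data changes."""
        self._data.pop(key, None)


cache: LRUCache[str, int] = LRUCache(capacity=2)
cache.put("a", 1)
cache.put("b", 2)
cache.get("a")  # "a" becomes most recently used
cache.put("c", 3)  # capacity exceeded: "b" is evicted
print(cache.get("b"))  # None
```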

Best Practices for Managing Cold Start and Caching

Pre-warming caches and using in-memory data stores like Redis reduce the data-loading work that follows a cold start in serverless applications. Function warm-up requests, provisioned concurrency in AWS Lambda, and clear cache invalidation policies help keep performance consistent and reduce delays on initial invocations. Monitoring cold start metrics and trimming container initialization code further improve response times and resource utilization.
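Cache pre-warming and warm-up requests can be combined in a few lines. The sketch below loads a list of popular keys during initialization and short-circuits scheduled warm-up pings so they keep the environment alive without doing real work. The `warmup` event field, `HOT_KEYS`, and `load_from_origin` are hypothetical names chosen for illustration, not part of any platform API. Scheduled pings keep only as many environments warm as they reach concurrently, so traffic bursts can still trigger cold starts; provisioned concurrency addresses that at the platform level and is configured outside the function code.

```python
from typing import Any, Callable, Dict, Iterable

# Hypothetical list of keys known to be requested often.
HOT_KEYS = ("home", "pricing", "docs")


def load_from_origin(key: str) -> str:
    """Hypothetical slow fetch from a database or upstream service."""
    return f"<content for {key}>"


def prewarm(cache: Dict[str, str], keys: Iterable[str],
            loader: Callable[[str], str]) -> None:
    """Populate the cache before the first real request arrives."""
    for key in keys:
        if key not in cache:
            cache[key] = loader(key)


# Runs once per execution environment, as part of initialization.
CACHE: Dict[str, str] = {}
prewarm(CACHE, HOT_KEYS, load_from_origin)


def handler(event: Dict[str, Any], context: Any = None) -> Dict[str, Any]:
    # Hypothetical scheduled warm-up ping: return early without real work.
    if event.get("warmup"):
        return {"status": "warm"}
    key = event["key"]
    if key not in CACHE:
        CACHE[key] = load_from_origin(key)  # fall back to lazy loading
    return {"status": "ok", "body": CACHE[key]}


print(handler({"warmup": True}))  # {'status': 'warm'}
print(handler({"key": "pricing"}))  # {'status': 'ok', 'body': '<content for pricing>'}
```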

Conclusion: Choosing the Right Approach

Cold starts are not an alternative to caching but a cost that on-demand platforms impose whenever they start new instances, so the real choice is how much to invest in caching and in keeping instances warm. That decision depends on application requirements such as latency tolerance, resource availability, and traffic patterns. Caching reduces latency by keeping frequently accessed data in memory, at the cost of extra memory usage. Accepting occasional cold starts by initializing resources only on demand suits infrequently called workloads, but it can hurt user experience on the first requests after an idle period.

Caching Infographic

libterm.com