Serverless computing lets you run applications without managing servers: the platform scales resources automatically with demand, which reduces operational complexity. You pay only for actual usage, and because infrastructure management is abstracted away, development moves faster. The rest of this article compares serverless computing with container orchestration so you can judge which model fits your workloads.

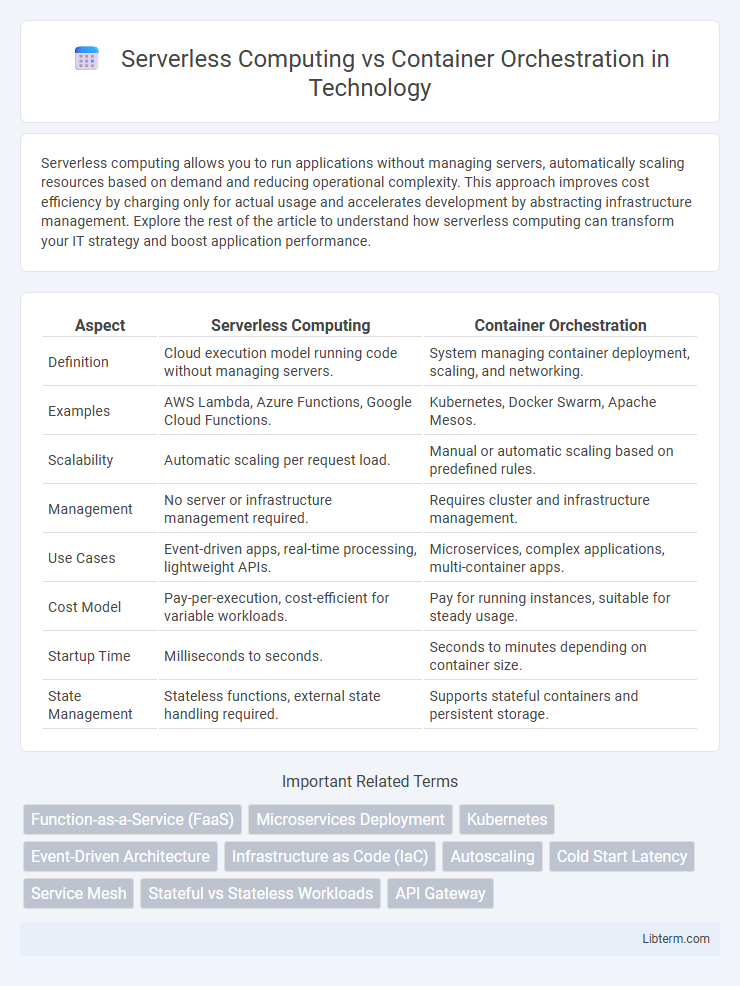

Table of Comparison

| Aspect | Serverless Computing | Container Orchestration |
|---|---|---|
| Definition | Cloud execution model running code without managing servers. | System managing container deployment, scaling, and networking. |
| Examples | AWS Lambda, Azure Functions, Google Cloud Functions. | Kubernetes, Docker Swarm, Apache Mesos. |
| Scalability | Automatic scaling per request load. | Manual or automatic scaling based on predefined rules. |
| Management | No server or infrastructure management required. | Requires cluster and infrastructure management. |
| Use Cases | Event-driven apps, real-time processing, lightweight APIs. | Microservices, complex applications, multi-container apps. |
| Cost Model | Pay-per-execution, cost-efficient for variable workloads. | Pay for running instances, suitable for steady usage. |
| Startup Time | Milliseconds to seconds. | Seconds to minutes depending on container size. |
| State Management | Stateless functions, external state handling required. | Supports stateful containers and persistent storage. |

Introduction to Serverless Computing

Serverless computing enables developers to run applications without managing underlying infrastructure, automatically scaling resources based on demand. It abstracts server management, allowing focus on code execution triggered by events, reducing operational overhead and costs. This model contrasts with container orchestration, where developers handle container deployment, scaling, and maintenance on clusters.
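To make the event-triggered model concrete, here is a minimal sketch of a function in the `handler(event, context)` style used by AWS Lambda's Python runtime. The event shape, including the `name` field, is a hypothetical example and not something any platform prescribes.

```python
import json
from typing import Any


def handler(event: dict[str, Any], context: Any = None) -> dict[str, Any]:
    """Handle one event and keep no state between invocations."""
    # "name" is a hypothetical field in the incoming event payload.
    name = event.get("name", "world")
    return {
        "statusCode": 200,
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }


if __name__ == "__main__":
    # Local invocation; on a real platform the runtime calls handler() once per event.
    print(handler({"name": "serverless"}))
```

The function contains only business logic. Provisioning, scaling, and routing of events to the function are left to the platform.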

Overview of Container Orchestration

Container orchestration automates the deployment, management, scaling, and networking of containerized applications, using platforms like Kubernetes, Docker Swarm, and Apache Mesos. It enables efficient resource utilization by dynamically managing container lifecycles, ensuring high availability and load balancing across clusters. Key features of container orchestration include automated rollout and rollback, service discovery, and self-healing capabilities to maintain system reliability.
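The self-healing behavior described above can be pictured as a reconciliation pass that compares the desired number of containers with what is actually running. The sketch below is a toy model under that assumption, not the implementation of Kubernetes or any other orchestrator; the `Container` class and the `app-N` naming scheme are invented for illustration.

```python
from dataclasses import dataclass


@dataclass
class Container:
    name: str
    healthy: bool = True


def reconcile(desired: int, running: list[Container]) -> list[Container]:
    """Return the container set after one reconciliation pass."""
    # Self-healing: drop containers that failed their health check.
    survivors = [c for c in running if c.healthy]
    # Scale down: keep at most the desired number of containers.
    survivors = survivors[:desired]
    # Scale up or replace: start new containers until the desired count is met.
    next_id = len(running)
    while len(survivors) < desired:
        survivors.append(Container(name=f"app-{next_id}"))
        next_id += 1
    return survivors


if __name__ == "__main__":
    current = [Container("app-0"), Container("app-1", healthy=False), Container("app-2")]
    print([c.name for c in reconcile(3, current)])
```

An orchestrator runs a loop like this continuously, so a failed container is replaced without operator intervention.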

Key Differences Between Serverless and Containers

Serverless computing abstracts server management by automatically scaling functions in response to demand and charging only for actual execution time, while container orchestration manages discrete, persistent container workloads across clusters using tools like Kubernetes. Serverless platforms eliminate infrastructure management and offer event-driven execution, whereas container orchestration provides greater control over environment configuration and resource allocation and supports stateful, long-running applications. The primary differences lie in operational complexity, scaling granularity, and cost model: serverless is optimized for stateless, ephemeral tasks, and containers support complex, customizable, stateful services.
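Because serverless functions are stateless, anything that must survive between invocations has to live in an external store. The following sketch illustrates that pattern; `KeyValueStore`, `InMemoryStore`, and the `user_id` event field are hypothetical names, and the in-memory class only stands in for a real database or cache during local testing.

```python
from typing import Optional, Protocol


class KeyValueStore(Protocol):
    """Minimal interface a hypothetical external store is assumed to offer."""

    def get(self, key: str) -> Optional[int]: ...

    def put(self, key: str, value: int) -> None: ...


class InMemoryStore:
    """Stand-in for an external database or cache; for local testing only."""

    def __init__(self) -> None:
        self._data: dict[str, int] = {}

    def get(self, key: str) -> Optional[int]:
        return self._data.get(key)

    def put(self, key: str, value: int) -> None:
        self._data[key] = value


def count_visit(event: dict, store: KeyValueStore) -> int:
    """Stateless function: all durable state is read from and written to the store."""
    user = event["user_id"]
    # Read-modify-write is not atomic here; a real store would need an atomic increment.
    visits = (store.get(user) or 0) + 1
    store.put(user, visits)
    return visits
```

A container-based service could instead keep this counter in process memory or on an attached volume, which is the trade-off the comparison above describes.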

Scalability: Serverless vs Container Orchestration

Serverless computing offers automatic, fine-grained scalability: the platform allocates resources in response to real-time demand without manual intervention, which suits unpredictable or variable workloads. Container orchestration platforms such as Kubernetes scale by scheduling containers across the nodes of a cluster, supporting complex, stateful applications that need consistent resource allocation and control. Serverless excels at rapid scaling for event-driven architectures, whereas container orchestration delivers scalability with greater configurability and persistent environment management.
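As an illustration of rule-based scaling on the container side, the sketch below follows the formula documented for the Kubernetes Horizontal Pod Autoscaler: desired replicas = ceil(current replicas x current metric / target metric), with no change when the ratio is within a tolerance of 1. It is a simplified model; the function name, parameter names, and the replica bounds are assumptions made for this example.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 10,
    tolerance: float = 0.1,
) -> int:
    """Compute the replica count for one autoscaling decision."""
    ratio = current_metric / target_metric
    # Skip scaling when the metric is already close enough to the target.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    wanted = math.ceil(current_replicas * ratio)
    # Clamp to the configured bounds.
    return max(min_replicas, min(max_replicas, wanted))


if __name__ == "__main__":
    # 4 replicas at 90% average CPU against a 60% target.
    print(desired_replicas(4, 90, 60))  # prints 6
```

With serverless, the equivalent decision is made by the platform per request, and there is no replica count for the developer to configure.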

Use Cases for Serverless Computing

Serverless computing excels in event-driven applications, such as real-time data processing, microservices, and backend APIs, where scalability and reduced operational management are critical. It supports dynamic workloads typical in IoT, mobile backends, and automated workflows, enabling rapid deployment without infrastructure provisioning. In contrast to container orchestration, serverless abstracts server management entirely, optimizing costs and resource allocation for unpredictable or variable demand scenarios.

Use Cases for Container Orchestration

Container orchestration excels in managing complex microservices architectures by automating the deployment, scaling, and operation of containerized applications. It is ideal for use cases involving continuous integration and continuous delivery (CI/CD) pipelines, multi-container applications, and hybrid or multi-cloud environments requiring consistent container management. Enterprises leveraging Kubernetes or Docker Swarm benefit from enhanced resource utilization, seamless load balancing, and robust fault tolerance in their production-grade workloads.

Cost Comparison: Serverless vs Containers

Serverless computing reduces costs by charging based on actual usage with no need to pay for idle resources, making it ideal for variable workloads and short-lived tasks. Container orchestration, while offering more control and consistent performance, often requires paying for reserved infrastructure regardless of utilization, leading to potentially higher baseline costs. Cost efficiency depends on workload patterns, with serverless excelling in dynamic scaling scenarios and containers being more economical for steady, predictable workloads.
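The dependence on workload pattern can be worked through with a simple break-even calculation. The sketch below assumes a pay-per-execution model with a per-request fee plus a per-GB-second compute fee, compared against a fixed monthly container bill. All prices are illustrative placeholders, not quotes from any provider.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ServerlessPricing:
    per_request: float    # hypothetical flat fee per invocation
    per_gb_second: float  # hypothetical fee per GB-second of execution


def serverless_monthly_cost(
    requests: int, avg_seconds: float, memory_gb: float, pricing: ServerlessPricing
) -> float:
    """Usage-based cost: nothing is charged while the function is idle."""
    compute = requests * avg_seconds * memory_gb * pricing.per_gb_second
    return requests * pricing.per_request + compute


def break_even_requests(
    container_monthly_cost: float,
    avg_seconds: float,
    memory_gb: float,
    pricing: ServerlessPricing,
) -> float:
    """Monthly request volume at which serverless costs as much as the fixed bill."""
    cost_per_request = pricing.per_request + avg_seconds * memory_gb * pricing.per_gb_second
    return container_monthly_cost / cost_per_request


if __name__ == "__main__":
    pricing = ServerlessPricing(per_request=2e-7, per_gb_second=2e-5)
    # A fixed container bill of 52 per month vs. 0.5 s requests using 0.5 GB.
    print(round(break_even_requests(52.0, 0.5, 0.5, pricing)))
```

Under these placeholder prices the break-even point is about 10 million requests per month: below it the usage-based model is cheaper, and above it the fixed container cost wins.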

Security Considerations in Both Approaches

Serverless computing reduces the attack surface by abstracting infrastructure management, limiting exposure to operating system vulnerabilities, but it introduces security challenges related to function execution environment and event-driven triggers. Container orchestration platforms like Kubernetes require robust configuration and patch management to mitigate risks from container escape, network attacks, and privilege escalation. Both approaches demand strong identity and access management (IAM), runtime security monitoring, and compliance with encryption standards to protect sensitive data and workloads effectively.

Choosing the Right Model for Your Workload

Serverless computing offers automatic scaling and reduced operational overhead, ideal for event-driven and intermittent workloads with unpredictable traffic patterns. Container orchestration, such as Kubernetes, provides granular control over resource allocation and is better suited for complex, stateful applications requiring consistent performance. Choosing the right model depends on workload characteristics: prioritize serverless for agility and cost-efficiency in bursty tasks, while container orchestration fits sustained, scalable services demanding fine-tuned management.
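The selection criteria above can be condensed into a small rule-of-thumb helper. This is only a sketch of the article's heuristics; the `Workload` fields and the order in which the rules are checked are assumptions, and real decisions also weigh factors such as team skills, latency targets, and vendor constraints.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Workload:
    event_driven: bool
    stateful: bool
    long_running: bool
    steady_traffic: bool


def suggest_model(w: Workload) -> str:
    """Map workload traits to a starting recommendation."""
    # Stateful or long-running services need the control containers provide.
    if w.stateful or w.long_running:
        return "container orchestration"
    # Bursty, event-driven work benefits from pay-per-use scaling.
    if w.event_driven and not w.steady_traffic:
        return "serverless"
    # Steady, predictable load tends to favor always-on instances.
    if w.steady_traffic:
        return "container orchestration"
    return "serverless"


if __name__ == "__main__":
    webhook = Workload(event_driven=True, stateful=False, long_running=False, steady_traffic=False)
    print(suggest_model(webhook))  # prints serverless
```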

Future Trends in Serverless and Containerization

Serverless computing is poised to evolve with enhanced AI integration and greater support for multi-cloud environments, optimizing automatic scaling and resource management. Container orchestration platforms like Kubernetes will continue advancing towards more sophisticated security features and seamless hybrid cloud deployments. Both technologies are converging towards enabling more efficient, cost-effective, and resilient application development in increasingly complex cloud infrastructures.

Serverless Computing Infographic

libterm.com
