A distributed cache improves application performance by keeping frequently accessed data in memory across multiple servers. This cuts response times and takes load off the primary database. Capacity grows by adding nodes, and data can be replicated, so it also improves scalability and fault tolerance and helps systems stay responsive during peak traffic. The rest of this article compares distributed and local caches and explains when each fits your system architecture.

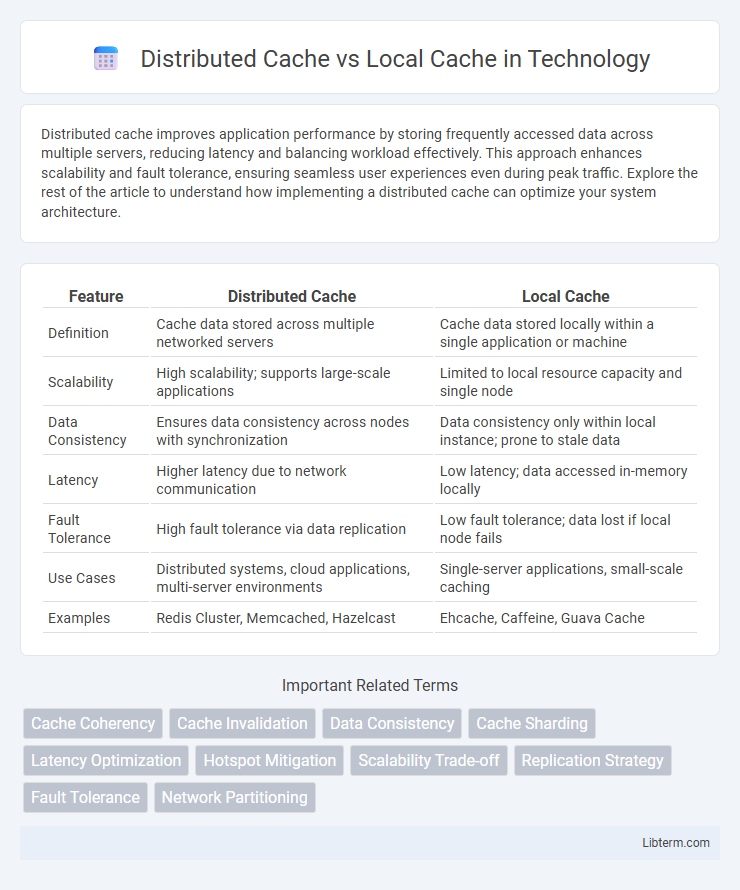

Comparison Table

| Feature | Distributed Cache | Local Cache |
|---|---|---|
| Definition | Cached data stored across multiple networked servers | Cached data stored in the memory of a single application process or machine |
| Scalability | High; capacity and throughput grow by adding nodes | Limited to the memory and CPU of a single node |
| Data Consistency | All application instances read the same shared copy; consistency between replicas depends on the replication mode | Each instance holds its own copy, which can go stale unless it is expired or explicitly invalidated |
| Latency | Higher; every access needs a network round trip and serialization | Lowest; data is read directly from in-process memory |
| Fault Tolerance | High when replication is configured (e.g., Redis Cluster replicas); without replication, a failed node loses its share of the data | Low; data is lost if the process or node fails or restarts |
| Use Cases | Distributed systems, cloud applications, multi-server environments | Single-server applications, small-scale caching |
| Examples | Redis Cluster, Memcached, Hazelcast | Ehcache, Caffeine, Guava Cache |

Introduction to Distributed and Local Cache

A distributed cache stores data across multiple servers to provide high availability and scalability. In large-scale applications it reduces latency by serving hot data from memory instead of the database. A local cache resides on a single server or client. It offers faster access to frequently used data, but it is limited by that machine's memory and is not shared across instances. The choice between them depends on the application's requirements for speed, consistency, and scalability.

What is a Distributed Cache?

A distributed cache is a caching system that spreads cached data across multiple servers or nodes, giving every application instance fast access to the same shared data. Partitioning (sharding) keys across nodes lets capacity and throughput scale out. Replicating each partition to additional nodes adds fault tolerance and availability. Common distributed cache solutions include Redis, Memcached, and Apache Ignite, which reduce latency and raise throughput for cloud-native and microservices architectures. Memcached itself does not replicate data; its clients distribute keys across servers.
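
The following minimal Python sketch makes partitioning and replication concrete by modeling each node as an in-memory dictionary. The class name `PartitionedCache`, the hash-mod-N placement, and the synchronous write to every replica are illustrative assumptions. No particular product works this way: Redis Cluster, for example, maps keys to 16,384 hash slots and replicates asynchronously, and Memcached leaves key distribution to its clients.

```python
import hashlib


def stable_hash(key: str) -> int:
    # Deterministic across processes (Python's built-in hash() is salted per run).
    return int.from_bytes(hashlib.sha256(key.encode("utf-8")).digest()[:8], "big")


class PartitionedCache:
    """Toy model of a partitioned, replicated cache (illustrative only).

    Each node is a plain dict; node names in `down` are treated as failed.
    """

    def __init__(self, nodes: list, replicas: int = 1):
        if replicas >= len(nodes):
            raise ValueError("replicas must be smaller than the node count")
        self.nodes = list(nodes)
        self.replicas = replicas
        self.stores = {node: {} for node in self.nodes}
        self.down = set()

    def owners(self, key: str) -> list:
        # Primary = hash mod N; backups = the next `replicas` nodes, wrapping around.
        start = stable_hash(key) % len(self.nodes)
        return [self.nodes[(start + i) % len(self.nodes)]
                for i in range(self.replicas + 1)]

    def put(self, key: str, value) -> None:
        # Write to every live owner (primary and backups) in the same call.
        for node in self.owners(key):
            if node not in self.down:
                self.stores[node][key] = value

    def get(self, key: str):
        # Read from the first live owner, so a failed primary falls back to a backup.
        for node in self.owners(key):
            if node not in self.down:
                return self.stores[node].get(key)
        return None


if __name__ == "__main__":
    cache = PartitionedCache(["node-a", "node-b", "node-c"], replicas=1)
    cache.put("user:42", {"name": "Ada"})
    cache.down.add(cache.owners("user:42")[0])  # simulate losing the primary
    print(cache.get("user:42"))                 # served by the backup node
```

Partitioning decides *where* a key lives. Replication decides *how many* copies survive a node failure. The two are independent knobs.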

What is a Local Cache?

A local cache stores data in the memory of the application process itself, so frequently used data comes back with minimal latency and no network hop. Unlike a distributed cache, a local cache does not share data across servers. This avoids network overhead but limits capacity and scalability. The approach suits applications that need fast, transient data retrieval within a single instance.
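
Here is a minimal sketch of a local cache, assuming a single-threaded Python process. An `OrderedDict` provides least-recently-used (LRU) eviction once `max_entries` is reached, and a time-to-live (TTL) bounds how stale an entry can get. The class and parameter names are illustrative. Production libraries such as Caffeine (Java) add thread safety and more sophisticated eviction policies.

```python
import time
from collections import OrderedDict


class LocalCache:
    """In-process LRU cache with a per-entry TTL (not thread-safe)."""

    def __init__(self, max_entries: int = 1024, ttl_seconds: float = 60.0,
                 clock=time.monotonic):
        self.max_entries = max_entries
        self.ttl = ttl_seconds
        self.clock = clock                     # injectable for testing
        self._data: OrderedDict = OrderedDict()  # key -> (expires_at, value)

    def get(self, key, default=None):
        item = self._data.get(key)
        if item is None:
            return default
        expires_at, value = item
        if self.clock() >= expires_at:
            del self._data[key]                # expired: drop it and report a miss
            return default
        self._data.move_to_end(key)            # mark as most recently used
        return value

    def put(self, key, value) -> None:
        self._data[key] = (self.clock() + self.ttl, value)
        self._data.move_to_end(key)
        while len(self._data) > self.max_entries:
            self._data.popitem(last=False)     # evict the least recently used entry
```

Every lookup is a dictionary access in the same process. This is where the local cache's latency advantage comes from, and also why each instance ends up with its own, possibly different, copy.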

Key Differences Between Distributed and Local Cache

A distributed cache stores data across multiple servers, enabling high availability and scalability for large-scale applications. A local cache resides on a single machine, offering faster access but bounded by that machine's resources. Every application instance reads from the same distributed cache, so all instances see the same data. Local caches are isolated per instance and serve stale data unless entries are expired or explicitly invalidated. The key differences are fault tolerance, capacity, latency, and implementation complexity. Distributed caches suit distributed systems, and local caches suit single-node performance optimization.

Performance Comparison: Distributed vs Local Cache

A local cache retrieves data faster because it lives inside the application's own memory, avoiding any network hop. A distributed cache adds a network round trip and serialization to every access. That is much slower than an in-process lookup, though usually still much faster than querying the underlying database. In exchange, many instances can share one cached data set. A local cache wins on single-instance speed; a distributed cache wins on sharing and consistency across instances.

Scalability Considerations in Caching

A distributed cache scales out: capacity and throughput grow as nodes are added, and replication across nodes supports high availability and fault tolerance in large-scale systems. A local cache offers faster access by keeping data inside each application instance. However, its size is capped by that instance's memory, and each added instance keeps its own copy that must be kept in sync. Some applications are read-heavy and scale instances up and down dynamically. For them, distributed caches such as Redis or Memcached give every instance the same cached data, and a newly started instance can use the already-warm shared cache instead of starting cold.
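
Consistent hashing is one common technique that lets a cluster add nodes without reshuffling the whole cache. The sketch below is an illustrative Python version with virtual nodes (`vnodes`, an assumed default of 100 points per server). It is not the placement scheme of any specific product; Redis Cluster, for instance, uses fixed hash slots instead.

```python
import bisect
import hashlib


def ring_hash(value: str) -> int:
    # Deterministic 64-bit position on the ring.
    return int.from_bytes(hashlib.sha256(value.encode("utf-8")).digest()[:8], "big")


class HashRing:
    """Consistent-hash ring with virtual nodes (illustrative sketch)."""

    def __init__(self, nodes=(), vnodes: int = 100):
        self.vnodes = vnodes
        self._points = []   # sorted ring positions
        self._owner = {}    # ring position -> node name
        for node in nodes:
            self.add(node)

    def add(self, node: str) -> None:
        # Place `vnodes` points per server to spread its share around the ring.
        for i in range(self.vnodes):
            point = ring_hash(f"{node}#{i}")
            self._owner[point] = node
            bisect.insort(self._points, point)

    def node_for(self, key: str) -> str:
        # Owner = first point clockwise from the key's position, wrapping to the start.
        idx = bisect.bisect(self._points, ring_hash(key)) % len(self._points)
        return self._owner[self._points[idx]]


if __name__ == "__main__":
    keys = [f"key-{i}" for i in range(10_000)]
    ring = HashRing(["a", "b", "c"])
    before = {k: ring.node_for(k) for k in keys}
    ring.add("d")
    moved = sum(before[k] != ring.node_for(k) for k in keys)
    print(f"{moved / len(keys):.1%} of keys moved")
```

With plain `hash(key) % N`, growing from three to four nodes remaps about three quarters of all keys. On the ring, only the keys the new node takes over move (roughly a quarter in this example), so most cached entries stay where they are.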

Data Consistency and Synchronization

Distributed cache systems reduce stale data across nodes because every instance reads from the same shared store. Write policies govern how the cache stays in step with the backing database. With write-through, the store and the cache are updated in the same operation; with write-behind, the cache is updated immediately and the store asynchronously. Distributed locking can additionally serialize concurrent updates to the same key. A local cache offers faster access times but keeps a separate copy in each instance's memory, so copies can diverge in multi-instance environments. Cache invalidation strategies, such as TTL expiry and explicit eviction on update, are therefore critical for keeping local and distributed caches consistent with the source of truth.
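
The following Python sketch shows how write-through, write-behind, and explicit invalidation differ. It is a single-process illustration: a plain `dict` stands in for the database, and the class names are hypothetical. A real write-behind cache must also handle failed flushes and the data loss that occurs if the cache node crashes before flushing.

```python
from collections import deque


class WriteThroughCache:
    """Every write updates the backing store and the cache in the same call."""

    def __init__(self, store: dict):
        self.store = store   # stand-in for the database (source of truth)
        self.cache = {}

    def write(self, key, value) -> None:
        self.store[key] = value   # persist first...
        self.cache[key] = value   # ...then refresh the cached copy

    def read(self, key):
        # On a miss, fill the cache from the store (if the key exists there).
        if key not in self.cache and key in self.store:
            self.cache[key] = self.store[key]
        return self.cache.get(key)

    def invalidate(self, key) -> None:
        # Drop the cached copy so the next read reloads from the store.
        self.cache.pop(key, None)


class WriteBehindCache(WriteThroughCache):
    """Writes update the cache immediately; the store is updated later by flush()."""

    def __init__(self, store: dict):
        super().__init__(store)
        self.pending = deque()   # queued (key, value) writes not yet persisted

    def write(self, key, value) -> None:
        self.cache[key] = value
        self.pending.append((key, value))   # the store is stale until flush()

    def flush(self) -> None:
        # Apply queued writes in order; a production version would typically batch and retry.
        while self.pending:
            key, value = self.pending.popleft()
            self.store[key] = value
```

Write-through favors consistency at the cost of write latency. Write-behind favors write speed but leaves a window in which the database lags the cache.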

Use Cases for Distributed Cache

A distributed cache excels when many servers need a shared, consistent view of cached data and room to scale. Examples include large-scale web applications, real-time analytics platforms, and e-commerce sites serving millions of users. In microservices architectures it provides fast reads of data shared across nodes, reducing database load, and with replication it keeps serving when a node fails. Other use cases include session state management in distributed environments and caching the results of expensive computations to improve responsiveness.
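
Expensive results are usually stored in a shared cache using the cache-aside pattern: check the cache first, and on a miss compute the value and store it with an expiry. This sketch assumes a client with `get(key)` and `set(key, value, ex=seconds)` methods, the shape of redis-py's `Redis.get` and `Redis.set`. `InMemorySharedCache` is a hypothetical in-process stand-in so the example runs without a server.

```python
import json
import time
from typing import Any, Callable, Optional


class InMemorySharedCache:
    """Hypothetical in-process stand-in for a networked cache client."""

    def __init__(self):
        self._data = {}   # key -> (expires_at, serialized value)

    def get(self, key: str) -> Optional[str]:
        item = self._data.get(key)
        if item is None or time.monotonic() >= item[0]:
            return None   # missing or expired
        return item[1]

    def set(self, key: str, value: str, ex: int) -> None:
        self._data[key] = (time.monotonic() + ex, value)


def cache_aside(client, key: str, ttl: int, compute: Callable[[], Any]) -> Any:
    """Return the cached result for `key`, computing and caching it on a miss."""
    cached = client.get(key)
    if cached is not None:
        return json.loads(cached)                 # hit: skip the expensive work
    value = compute()                             # miss: do the work once...
    client.set(key, json.dumps(value), ex=ttl)    # ...and share it with other instances
    return value
```

With a real Redis client, `get` returns `bytes`, which `json.loads` also accepts. Concurrent misses on the same key will each run `compute` (a cache stampede). Locking or request coalescing is a common mitigation.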

Use Cases for Local Cache

A local cache excels where ultra-low-latency reads matter. Examples include frequently accessed configuration settings and session data in a single-instance application, or behind sticky load balancing, where each user always reaches the same instance. It suits single-instance applications or microservices where data consistency across nodes is not critical. Use cases include caching user preferences and temporary computation results, and reducing database load in real-time gaming or financial applications.
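
For read-mostly data such as configuration, Python's standard `functools.lru_cache` is often enough as a local cache. In this sketch, the function name `load_settings` and the JSON file format are assumptions. The cached mapping is wrapped in a read-only `MappingProxyType` view (shallow only), so no caller can mutate the copy that every other caller shares.

```python
import functools
import json
from types import MappingProxyType


@functools.lru_cache(maxsize=None)
def load_settings(path: str):
    """Parse a JSON settings file once per process; repeat calls hit the cache."""
    with open(path, encoding="utf-8") as f:
        # Assumes the file holds a JSON object. The read-only (shallow) view
        # keeps callers from mutating the shared cached object.
        return MappingProxyType(json.load(f))
```

Calling `load_settings.cache_clear()` forces a reload after the file changes. Each process has its own cache, so every instance must clear it separately; that is the per-instance staleness trade-off of any local cache.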

Choosing the Right Cache for Your Application

The right cache depends on application scale and consistency needs. Distributed caches such as Redis or Memcached offer scalability and shared access across multiple servers, which suits cloud-based and microservices architectures. Local caches provide the fastest access by keeping data in each node's memory. Their downsides are stale data and limited capacity, so they suit single-server applications or data that rarely needs synchronizing. Weighing latency requirements, fault tolerance, and update frequency leads to a caching strategy that balances performance and resource use.
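
Local and distributed caches can also be layered: each instance checks a small local cache first, then the shared cache, then the source of truth. The Python sketch below is a hypothetical illustration. It assumes a shared-cache object with `get` and `set` methods, with a `dict` subclass standing in for a networked client. The short local TTL is what bounds how stale any one instance's copy can become.

```python
import time


class DictShared(dict):
    """Hypothetical stand-in for a shared cache client with get() and set()."""

    def set(self, key, value):
        self[key] = value


class TwoTierCache:
    """Local per-instance tier in front of a shared tier in front of the source."""

    def __init__(self, shared, load, local_ttl: float = 5.0, clock=time.monotonic):
        self.shared = shared        # object with get(key) and set(key, value)
        self.load = load            # fetches a value from the source of truth
        self.local_ttl = local_ttl  # upper bound on how stale a local copy can be
        self.clock = clock
        self.local = {}             # key -> (expires_at, value)

    def get(self, key):
        item = self.local.get(key)
        if item is not None and self.clock() < item[0]:
            return item[1]                    # local hit: no network hop
        value = self.shared.get(key)
        if value is None:                     # note: None cannot be cached here
            value = self.load(key)            # miss in both tiers: read the source
            self.shared.set(key, value)
        self.local[key] = (self.clock() + self.local_ttl, value)
        return value
```

A shorter `local_ttl` means fresher data but more trips to the shared tier. A longer one means fewer network hops but more tolerance for stale reads.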

Distributed Cache Infographic

libterm.com