Efficient serverless function scheduling is essential for optimizing cloud resource utilization and reducing execution latency. By managing invocation timing and workload distribution intelligently, businesses can significantly improve the performance and cost-effectiveness of their serverless applications. Read on to see how advanced scheduling strategies can enhance your cloud operations.

Comparison Table

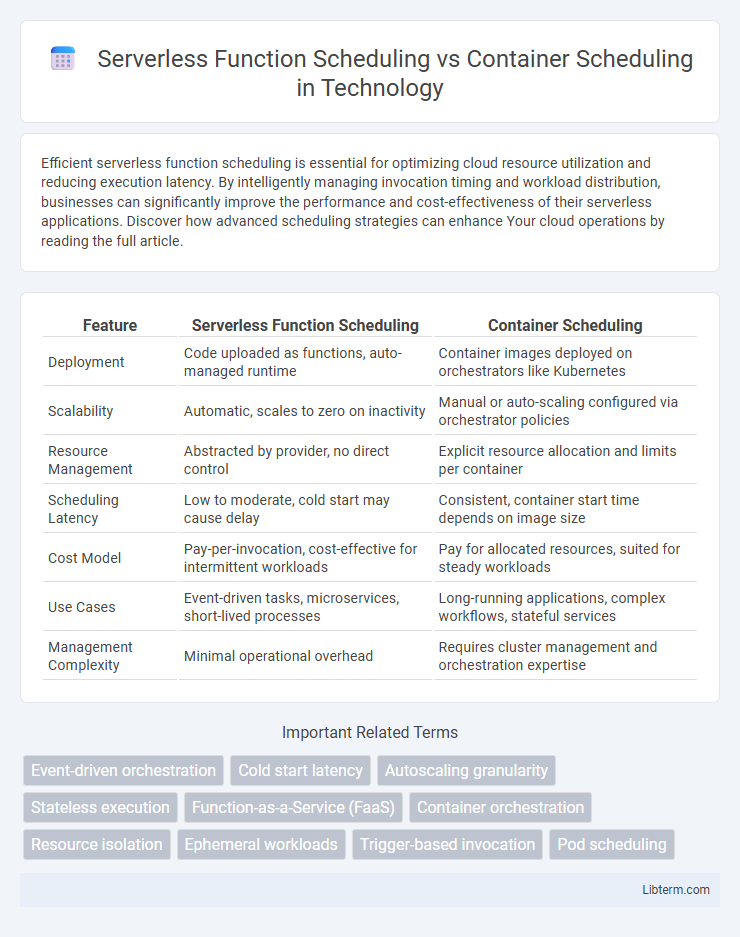

| Feature | Serverless Function Scheduling | Container Scheduling |
|---|---|---|
| Deployment | Code uploaded as functions; runtime managed by the provider | Container images deployed on orchestrators such as Kubernetes |
| Scalability | Automatic; scales to zero when inactive | Manual, or autoscaling configured through orchestrator policies |
| Resource Management | Abstracted by the provider; control usually limited to settings such as memory and timeout | Explicit resource allocation and limits per container |
| Scheduling Latency | Low to moderate; cold starts may add delay | Consistent once running; container start time depends on image size |
| Cost Model | Pay per invocation and execution time; cost-effective for intermittent workloads | Pay for allocated resources; suited to steady workloads |
| Use Cases | Event-driven tasks, microservices, short-lived processes | Long-running applications, complex workflows, stateful services |
| Management Complexity | Minimal operational overhead | Requires cluster management and orchestration expertise |

Introduction to Serverless and Container Scheduling

Serverless function scheduling automates resource allocation by executing code in response to events without the need to manage underlying infrastructure, optimizing for rapid scaling and cost-efficiency. Container scheduling orchestrates containerized applications by distributing workloads across a cluster, ensuring high availability and resource utilization through platforms like Kubernetes. Understanding the distinctions between serverless and container scheduling is crucial for selecting the best orchestration method based on application requirements and operational complexity.

Core Concepts: Serverless Functions Explained

Serverless functions follow an event-driven model. The platform scales them automatically and runs their code in response to triggers, with no infrastructure for the developer to manage. Container scheduling, by contrast, requires predefined resource allocation and lifecycle management. The core concepts of serverless are granular function execution, statelessness, and ephemeral runtime environments, which together favor rapid scaling and cost efficiency. Because each function can be deployed independently, developers gain agility and carry less operational overhead than with container orchestration platforms like Kubernetes.
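To make the event-driven model concrete, here is a minimal, hypothetical Python simulation of how a platform might schedule invocations. It reuses an idle warm instance when one exists and starts a new instance (a cold start) when none does. It also reclaims instances after an idle timeout, which is how scaling to zero arises. The class name, the timings, and the reuse policy are illustrative assumptions, not any provider's actual algorithm.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Instance:
    free_at: float  # time at which this instance finishes its current invocation


@dataclass
class ToyServerlessScheduler:
    """Toy model of event-driven scheduling: warm reuse, cold starts, scale to zero."""
    exec_time: float = 0.1      # seconds per invocation (assumed)
    cold_start: float = 0.5     # extra seconds to initialize a new instance (assumed)
    idle_timeout: float = 60.0  # seconds an idle instance stays warm (assumed)
    instances: list[Instance] = field(default_factory=list)
    cold_starts: int = 0

    def invoke(self, now: float) -> float:
        """Schedule one event arriving at time `now` and return its latency."""
        # Reclaim instances idle for longer than the timeout (scale toward zero).
        self.instances = [i for i in self.instances
                          if now - i.free_at <= self.idle_timeout]
        # Route the event to any warm instance that is currently idle.
        for inst in self.instances:
            if inst.free_at <= now:
                inst.free_at = now + self.exec_time
                return self.exec_time
        # No idle instance: scale out and pay the cold-start penalty.
        self.cold_starts += 1
        latency = self.cold_start + self.exec_time
        self.instances.append(Instance(free_at=now + latency))
        return latency


if __name__ == "__main__":
    sched = ToyServerlessScheduler()
    for t in (0.0, 1.0, 1.0, 200.0):
        print(f"t={t:>5}: latency={sched.invoke(t):.1f}s, instances={len(sched.instances)}")
```

In this run, the second event reuses the warm instance. The third event arrives while that instance is busy, so it forces a second cold start. By t=200 both instances have been reclaimed, and the platform starts again from zero.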

Core Concepts: Container Scheduling Fundamentals

Container scheduling fundamentals involve allocating resources, managing workloads, and optimizing performance across clusters using orchestrators like Kubernetes and Docker Swarm. This process requires precise control over container lifecycle, resource constraints, and network configurations to ensure scalability and high availability. Effective container scheduling balances load distribution, fault tolerance, and operational efficiency through policies such as affinity rules and priority classes.
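The filter-then-score pattern that cluster schedulers such as the Kubernetes scheduler follow can be sketched in simplified form. This sketch rests on several assumptions. `Node`, `Pod`, and `schedule` are hypothetical names. `node_selector` is a simplified stand-in for node selectors and affinity rules. `priority` imitates priority classes only through ordering, with no preemption. The scoring rule (prefer the node that keeps the most CPU free) is one illustrative choice among many.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Node:
    name: str
    cpu_free: float  # allocatable CPU cores still unreserved
    mem_free: int    # allocatable memory (MiB) still unreserved
    labels: dict[str, str] = field(default_factory=dict)


@dataclass
class Pod:
    name: str
    cpu: float         # requested CPU cores
    mem: int           # requested memory (MiB)
    priority: int = 0  # higher values are scheduled first
    node_selector: dict[str, str] = field(default_factory=dict)  # required node labels


def schedule(pods: list[Pod], nodes: list[Node]) -> dict[str, Optional[str]]:
    """Assign each pod to a node, or None if it must stay pending."""
    placement: dict[str, Optional[str]] = {}
    # Consider higher-priority pods first (sorted() keeps input order for ties).
    for pod in sorted(pods, key=lambda p: p.priority, reverse=True):
        # Filter: the node must fit the requests and carry every required label.
        feasible = [
            n for n in nodes
            if n.cpu_free >= pod.cpu and n.mem_free >= pod.mem
            and all(n.labels.get(k) == v for k, v in pod.node_selector.items())
        ]
        if not feasible:
            placement[pod.name] = None
            continue
        # Score: pick the node that keeps the most CPU free after placement.
        best = max(feasible, key=lambda n: n.cpu_free - pod.cpu)
        best.cpu_free -= pod.cpu
        best.mem_free -= pod.mem
        placement[pod.name] = best.name
    return placement


if __name__ == "__main__":
    nodes = [Node("node-a", 4.0, 8192, {"zone": "a"}),
             Node("node-b", 2.0, 4096, {"zone": "b", "accelerator": "gpu"})]
    pods = [Pod("web", 1.0, 1024),
            Pod("trainer", 1.5, 2048, priority=10, node_selector={"accelerator": "gpu"}),
            Pod("batch", 3.5, 4096)]
    print(schedule(pods, nodes))
```

Here the high-priority `trainer` pod is placed first, on the only node with the required label. `web` then fits on `node-a`. `batch` stays pending because no node has 3.5 cores left after the earlier placements.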

Key Differences Between Serverless and Container Scheduling

Serverless function scheduling allocates resources dynamically for each invocation, optimizing for rapid scaling and granular billing based on execution time. Container scheduling instead reserves resources for containers (through requests and limits in Kubernetes, for example), which suits long-running processes that need consistent allocation. Serverless environments abstract infrastructure management and scale on events without exposing the underlying compute instances. Container scheduling, on the other hand, relies on orchestrators like Kubernetes to manage container lifecycle, networking, and persistent storage. The key difference lies in the operational model. Serverless prioritizes ephemeral, stateless execution tailored to individual function calls, while container scheduling focuses on orchestrating durable, often stateful applications and services.

Scalability Comparison: Serverless vs Container Scheduling

Serverless function scheduling scales automatically with event triggers and adjusts quickly to workload fluctuations without manual intervention, although cold starts and provider concurrency limits still apply. Container scheduling depends on predefined scaling policies and managed infrastructure. As a result, it typically responds more slowly to sudden traffic spikes, especially when new nodes must be provisioned. Serverless platforms also bill only for actual function execution, whereas container deployments often keep idle capacity running to absorb peak loads.
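The difference in responsiveness can be expressed with two back-of-the-envelope formulas. On the serverless side, steady-state concurrency follows Little's law: concurrent executions ≈ request rate × average duration. On the container side, the documented core rule of the Kubernetes Horizontal Pod Autoscaler (HPA) is desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue). The sketch below omits the HPA's tolerance band and stabilization behavior, and its default replica bounds are assumptions.

```python
import math


def serverless_concurrency(requests_per_s: float, avg_duration_s: float) -> int:
    """Concurrent instances needed at steady state (Little's law: L = lambda * W)."""
    return math.ceil(requests_per_s * avg_duration_s)


def hpa_desired_replicas(current_replicas: int, current_metric: float,
                         target_metric: float, min_replicas: int = 1,
                         max_replicas: int = 10) -> int:
    """Core HPA scaling rule, clamped to [min_replicas, max_replicas].

    Omits the real controller's tolerance band and stabilization windows.
    """
    desired = math.ceil(current_replicas * current_metric / target_metric)
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    # 200 req/s at 250 ms each needs about 50 concurrent function instances.
    print(serverless_concurrency(200, 0.25))
    # 3 pods at 90% average CPU against a 60% target scale to 5 pods.
    print(hpa_desired_replicas(3, 90, 60))
```

The serverless platform effectively recomputes the first figure with every request. The HPA acts only after a metric has been observed, and new pods may still wait for nodes to be provisioned.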

Cost Efficiency Analysis

Serverless function scheduling removes the need to provision and manage infrastructure and bills only for actual execution time and resource usage, which can yield significant savings. Container scheduling often incurs higher operational costs because resources are allocated up front and servers must be managed continuously. Serverless scheduling therefore tends to be more cost-efficient for variable and unpredictable workloads, while container scheduling may be more economical for sustained, high-throughput applications.
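The cost trade-off can be checked with simple arithmetic. Pay-per-use cost grows linearly with the number of invocations, while allocated container capacity is a flat monthly bill. There is therefore a break-even volume above which containers become cheaper. The sketch below assumes a billing model of GB-seconds plus a per-request fee. All prices are hypothetical placeholders, not any provider's rates, and the model ignores free tiers, data transfer, and the staff time needed to run a cluster.

```python
HOURS_PER_MONTH = 730  # average hours in a month (assumption)


def serverless_monthly_cost(invocations: int, duration_s: float, memory_gb: float,
                            price_per_gb_s: float, price_per_million_req: float) -> float:
    """Pay-per-use: compute billed in GB-seconds plus a per-request fee."""
    compute = invocations * duration_s * memory_gb * price_per_gb_s
    requests = invocations / 1_000_000 * price_per_million_req
    return compute + requests


def container_monthly_cost(instances: int, price_per_instance_hour: float) -> float:
    """Allocated capacity: billed for every hour, whether busy or idle."""
    return instances * price_per_instance_hour * HOURS_PER_MONTH


def break_even_invocations(duration_s: float, memory_gb: float, price_per_gb_s: float,
                           price_per_million_req: float, fixed_monthly_cost: float) -> float:
    """Monthly invocations at which pay-per-use matches the fixed container bill."""
    per_invocation = (duration_s * memory_gb * price_per_gb_s
                      + price_per_million_req / 1_000_000)
    return fixed_monthly_cost / per_invocation


if __name__ == "__main__":
    # Placeholder prices for illustration only; substitute your provider's rates.
    fixed = container_monthly_cost(instances=2, price_per_instance_hour=0.05)
    n = break_even_invocations(0.2, 0.5, 0.00002, 0.20, fixed)
    print(f"container bill ${fixed:.2f}/month; break-even at ~{n / 1e6:.1f}M invocations")
```

Below the break-even volume, the intermittent workload is cheaper on serverless. Above it, the flat container bill wins, which matches the steady, high-throughput case described above.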

Performance and Latency Considerations

Serverless function scheduling offers rapid scaling. Features such as provisioned concurrency, which keeps a set number of instances initialized ahead of demand, reduce cold-start delays. Container scheduling provides granular resource allocation and consistent performance, which benefits workloads that need custom runtime environments or persistent connections. Serverless performance depends on efficient event triggers and stateless execution. Container scheduling suits latency-sensitive applications through dedicated resource provisioning and mature orchestration frameworks like Kubernetes.
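The effect of provisioned concurrency on tail latency can be illustrated with a deliberately crude model. A burst of simultaneous requests arrives, one request per instance. Pre-initialized instances answer in the execution time, and every other request also pays a cold start. The 50 ms execution time and 800 ms cold start are made-up numbers. Real platforms also queue requests and reuse instances, so this sketch illustrates the trend rather than predicting actual latency.

```python
import math


def burst_latencies(burst_size: int, warm_instances: int,
                    exec_ms: int, cold_start_ms: int) -> list[int]:
    """Per-request latency for a simultaneous burst, one request per instance.

    The first `warm_instances` requests hit pre-initialized instances; the rest
    each trigger a cold start.
    """
    return [exec_ms if i < warm_instances else exec_ms + cold_start_ms
            for i in range(burst_size)]


def percentile(values: list[int], p: float) -> int:
    """Nearest-rank percentile for 0 < p <= 100."""
    ordered = sorted(values)
    rank = math.ceil(p * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


if __name__ == "__main__":
    # No provisioning, partial provisioning, and a fully warm pool.
    for warm in (0, 95, 100):
        lat = burst_latencies(100, warm, exec_ms=50, cold_start_ms=800)
        print(f"warm={warm:>3}: p50={percentile(lat, 50)} ms, p99={percentile(lat, 99)} ms")
```

With no warm capacity, every request pays the cold start. Keeping 95 of 100 instances warm fixes the median, but the 99th percentile still sees cold starts. A fully warm pool, like an already-running container deployment, removes them entirely.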

Use Cases: When to Use Serverless or Containers

Serverless function scheduling excels in event-driven applications, rapid scaling requirements, and unpredictable workloads, making it ideal for lightweight APIs, microservices, and real-time data processing. Container scheduling is better suited for long-running processes, complex applications with multiple dependencies, and scenarios requiring full control over the runtime environment, such as batch processing, machine learning training, and legacy application modernization. Choosing serverless optimizes cost and scalability for transient tasks, while containers provide flexibility and consistency for persistent, stateful workloads.
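The guidance above can be condensed into a rough rule-of-thumb function. The field names and thresholds are assumptions for illustration. The 900-second default mirrors the 15-minute maximum timeout of AWS Lambda, but limits differ between providers. The 50% utilization cutoff is arbitrary and should be derived from your own pricing.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    max_duration_s: float   # longest expected single execution
    stateful: bool          # keeps in-process state or local data between requests
    avg_utilization: float  # fraction of time the service would be busy, 0..1
    custom_runtime: bool    # needs full control over OS packages or runtime


def recommend(w: Workload, max_function_duration_s: float = 900.0,
              busy_threshold: float = 0.5) -> str:
    """Return "serverless" or "containers" using the heuristics from the text."""
    if w.max_duration_s > max_function_duration_s:
        return "containers"  # exceeds the platform's function timeout
    if w.stateful or w.custom_runtime:
        return "containers"  # persistent state or runtime control needed
    if w.avg_utilization >= busy_threshold:
        return "containers"  # steady load favors allocated capacity
    return "serverless"      # short, stateless, bursty: pay per use


if __name__ == "__main__":
    api = Workload(max_duration_s=2, stateful=False, avg_utilization=0.05, custom_runtime=False)
    training = Workload(max_duration_s=6 * 3600, stateful=True, avg_utilization=0.9,
                        custom_runtime=True)
    print(recommend(api), recommend(training))
```

A lightweight API lands on serverless, while a multi-hour machine learning training job lands on containers. Real decisions usually weigh these factors together rather than applying them as strict cutoffs.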

Security Implications of Scheduling Approaches

Serverless function scheduling inherently reduces the attack surface by abstracting underlying infrastructure, minimizing exposed system components and limiting persistent running states vulnerable to exploitation. Container scheduling demands rigorous security configurations such as namespace isolation, image scanning, and runtime security to mitigate risks associated with container escape and shared kernel vulnerabilities. Both approaches require integration of identity and access management policies, but serverless functions often offer more granular, function-level permissions, enhancing security posture in multi-tenant environments.
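Function-level permissions amount to a deny-by-default allowlist per function, so a compromised function can reach only the resources it was explicitly granted. The sketch below is hypothetical. The function names and the `storage:`/`email:` action strings are invented for illustration and do not follow any real provider's IAM syntax. Prefix matching on resource names is also a simplification of real policy languages.

```python
# Hypothetical per-function grants: function -> set of (action, resource prefix).
FUNCTION_PERMISSIONS: dict[str, set[tuple[str, str]]] = {
    "resize-image": {("storage:GetObject", "uploads/"),
                     ("storage:PutObject", "thumbnails/")},
    "send-receipt": {("email:Send", "*")},
}


def is_allowed(function_name: str, action: str, resource: str) -> bool:
    """Deny by default; allow only if a grant matches the action and resource."""
    for granted_action, prefix in FUNCTION_PERMISSIONS.get(function_name, set()):
        if granted_action == action and (prefix == "*" or resource.startswith(prefix)):
            return True
    return False


if __name__ == "__main__":
    print(is_allowed("resize-image", "storage:GetObject", "uploads/cat.png"))
    print(is_allowed("resize-image", "email:Send", "user@example.com"))
```

A container-based service, by contrast, often runs all of its code under one identity, such as a single Kubernetes service account for a pod. Every code path in the service therefore effectively holds the union of the service's permissions.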

Future Trends in Application Scheduling

Future trends in application scheduling emphasize increased automation and dynamic resource allocation, where serverless function scheduling leverages event-driven models for instant scalability and granular billing, contrasting with container scheduling's focus on long-running workloads and orchestration at the cluster level. Advances in AI-powered schedulers promise enhanced workload prediction and optimization, enabling seamless hybrid models combining serverless functions and containers to maximize efficiency and reduce latency. Integration with edge computing further drives innovation, supporting real-time processing by dynamically scheduling serverless functions closer to data sources while containers manage stateful, complex applications across distributed environments.

Serverless Function Scheduling Infographic

libterm.com