Monolithic deployment packages an entire application into a single, unified codebase and runs it as one cohesive unit. This approach simplifies development and testing but can make the application harder to scale and maintain as it grows. The sections below compare monolithic deployment with containerization to help you judge which is the right fit for your next project.

Table of Comparison

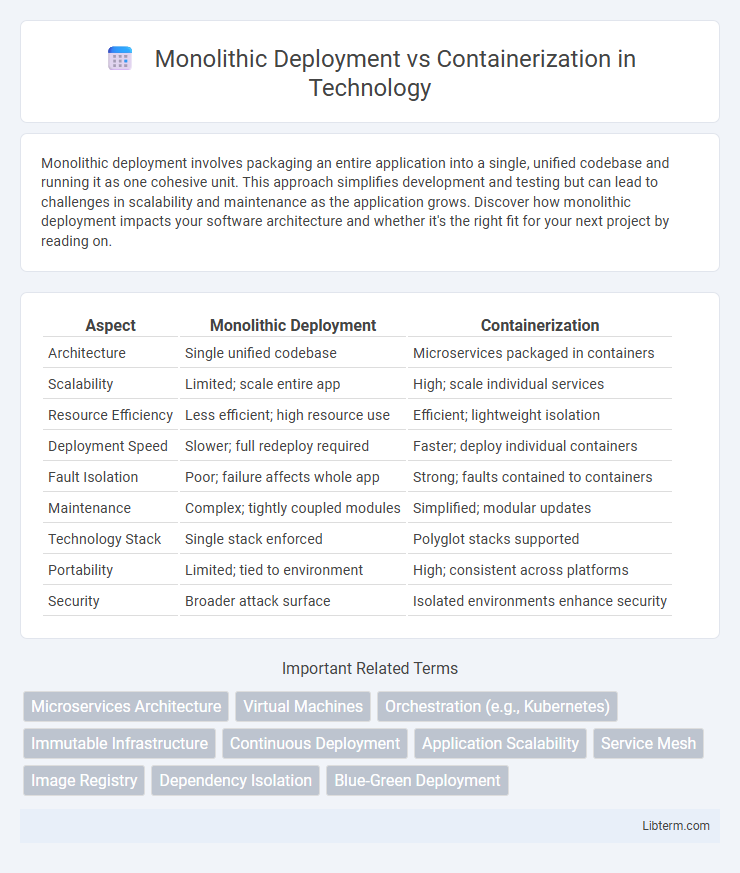

| Aspect | Monolithic Deployment | Containerization |
|---|---|---|
| Architecture | Single unified codebase | Microservices packaged in containers |
| Scalability | Limited; scale entire app | High; scale individual services |
| Resource Efficiency | Less efficient; high resource use | Efficient; lightweight isolation |
| Deployment Speed | Slower; full redeploy required | Faster; deploy individual containers |
| Fault Isolation | Poor; failure affects whole app | Strong; faults contained to containers |
| Maintenance | Complex; tightly coupled modules | Simplified; modular updates |
| Technology Stack | Single stack enforced | Polyglot stacks supported |
| Portability | Limited; tied to environment | High; consistent across platforms |
| Security | Broader attack surface | Isolated environments enhance security |

Introduction to Monolithic Deployment and Containerization

Monolithic deployment refers to building and deploying applications as a single, unified unit where all components are tightly integrated and run as one process. Containerization, on the other hand, encapsulates applications and their dependencies into lightweight, portable containers that can run consistently across different computing environments. Containerization enhances scalability and agility by isolating services, whereas monolithic deployment often leads to challenges in maintenance and scaling due to its unified structure.

Understanding Monolithic Architectures

Monolithic architectures consolidate all application components into a single codebase, enabling straightforward deployment but presenting challenges in scalability and maintenance. This tightly coupled structure limits flexibility, as changes in one module may affect the entire system, leading to longer development cycles and increased risk of downtime. Understanding monolithic deployment highlights the contrast with containerization, which decouples services into independent, scalable units for enhanced agility and resilience.
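The difference in fault propagation can be illustrated with a toy model. This is a minimal sketch, not a real deployment: the component names (`catalog`, `payments`, `search`) are hypothetical, and a process-level crash is modeled simply as an unhandled Python exception.

```python
from typing import Callable, Dict

# Hypothetical components; "payments" contains a fault.
def catalog() -> str:
    return "ok"

def payments() -> str:
    raise RuntimeError("payments crashed")

def search() -> str:
    return "ok"

COMPONENTS: Dict[str, Callable[[], str]] = {
    "catalog": catalog,
    "payments": payments,
    "search": search,
}

def run_monolith(components: Dict[str, Callable[[], str]]) -> Dict[str, str]:
    """All components share one process: one crash takes the whole unit down."""
    results: Dict[str, str] = {}
    try:
        for name, component in components.items():
            results[name] = component()
    except Exception:
        # The single process died, so every component is unavailable.
        return {name: "down" for name in components}
    return results

def run_isolated(components: Dict[str, Callable[[], str]]) -> Dict[str, str]:
    """Each component runs in its own unit: a crash stays contained."""
    results: Dict[str, str] = {}
    for name, component in components.items():
        try:
            results[name] = component()
        except Exception:
            results[name] = "down"
    return results

if __name__ == "__main__":
    print("monolith:", run_monolith(COMPONENTS))
    print("isolated:", run_isolated(COMPONENTS))
```

In this model the monolith reports every component as down, while the isolated run loses only `payments`. Real monoliths can guard against many errors in code, so the sketch applies mainly to faults that end the process itself.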

What is Containerization?

Containerization is a lightweight, operating-system-level virtualization method that packages applications along with their dependencies, libraries, and configuration files into isolated units called containers. Unlike monolithic deployment, which bundles all components into a single executable, containerization provides consistent environments across development, testing, and production by running those containers on a container engine such as Docker, often managed by an orchestrator such as Kubernetes. This approach enhances scalability, portability, and resource efficiency, allowing microservices to run independently while sharing the host operating system kernel.

Key Differences Between Monolithic Deployment and Containerization

Monolithic deployment involves packaging an entire application into a single executable or binary, resulting in tight coupling between components, whereas containerization isolates applications in lightweight, portable containers that encapsulate code and dependencies. Monolithic systems often face challenges with scalability and continuous deployment, while containers enable microservices architecture, allowing independent scaling and faster iteration. Resource utilization differs significantly: monolithic applications run as one process on an OS, while containers share the OS kernel but maintain isolated runtime environments.
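To make the resource difference concrete, the arithmetic below compares scaling a whole application with scaling individual services. It is a rough sketch under stated assumptions: the component names and all figures in `DEMAND` are hypothetical, and per-container and orchestration overhead is ignored.

```python
from typing import Dict, Tuple

# Hypothetical figures: component -> (memory per instance in MB, instances needed at peak)
DEMAND: Dict[str, Tuple[int, int]] = {
    "catalog": (200, 2),
    "payments": (300, 1),
    "search": (500, 6),
}

def monolith_memory_mb(demand: Dict[str, Tuple[int, int]]) -> int:
    """Every copy of the monolith carries all components, so the busiest
    component dictates how many full copies must run."""
    copies = max(instances for _, instances in demand.values())
    memory_per_copy = sum(mb for mb, _ in demand.values())
    return copies * memory_per_copy

def containerized_memory_mb(demand: Dict[str, Tuple[int, int]]) -> int:
    """Each service scales on its own, so memory is summed per service."""
    return sum(mb * instances for mb, instances in demand.values())

if __name__ == "__main__":
    print("monolith:", monolith_memory_mb(DEMAND), "MB")
    print("containerized:", containerized_memory_mb(DEMAND), "MB")
```

With these assumed numbers the monolith needs six full copies (6 × 1000 MB = 6000 MB), while independently scaled services need 400 + 300 + 3000 = 3700 MB. The gap shrinks when all components see similar load.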

Pros and Cons of Monolithic Deployment

Monolithic deployment offers simplicity in development and testing due to its unified codebase and straightforward debugging process, which can speed up initial release cycles. However, it poses challenges in scalability and flexibility: an update to any part of the application requires redeploying the entire system, and a failure in one part can take the whole application down, increasing downtime risk. Moreover, the tightly coupled architecture complicates adoption of newer technologies and hinders continuous integration and deployment practices.
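The redeployment cost can be sketched as a lookup from changed modules to deployable units. The module and unit names below (`app`, `auth-svc`, and so on) are hypothetical and only illustrate the idea.

```python
from typing import Dict, List, Set

def units_to_redeploy(changed: Set[str], deployment: Dict[str, Set[str]]) -> List[str]:
    """Return the deployable units that contain at least one changed module.

    `deployment` maps a unit name to the set of modules packaged inside it.
    """
    return sorted(unit for unit, modules in deployment.items() if modules & changed)

# Hypothetical layouts of the same three modules.
MONOLITH: Dict[str, Set[str]] = {
    "app": {"auth", "catalog", "payments"},
}
CONTAINERIZED: Dict[str, Set[str]] = {
    "auth-svc": {"auth"},
    "catalog-svc": {"catalog"},
    "payments-svc": {"payments"},
}

if __name__ == "__main__":
    change = {"payments"}
    print("monolith:", units_to_redeploy(change, MONOLITH))
    print("containerized:", units_to_redeploy(change, CONTAINERIZED))
```

A change to `payments` forces the single `app` unit, and therefore every module in it, to be redeployed in the monolithic layout, while only `payments-svc` is touched in the containerized one.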

Advantages and Disadvantages of Containerization

Containerization offers significant advantages such as enhanced portability, efficient resource utilization, and accelerated deployment cycles by encapsulating applications and their dependencies in lightweight, consistent environments. It enables simplified scalability and isolation, reducing conflicts between different software components and improving system reliability. However, containerization introduces complexities in orchestration, security management, and monitoring, requiring specialized tools and expertise to handle container lifecycle and network configurations effectively.

Performance and Scalability Comparison

Monolithic deployment typically offers faster communication between components because calls happen in-process, resulting in lower latency for tightly coupled applications. Containerization provides superior scalability by enabling independent service scaling, efficient resource utilization, and rapid deployment of microservices, enhancing flexibility under varying loads. Performance in containerized environments can be affected by overhead from container orchestration and network virtualization, but careful tuning can reduce this impact while preserving horizontal scalability.
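The extra work behind a cross-service call can be seen in a small sketch. It assumes a hypothetical pricing function and stands in for the network hop with a plain function that accepts and returns JSON bytes, so only the serialization steps are shown, not real network latency.

```python
import json

def price_with_tax(amount_cents: int, tax_rate_percent: int) -> int:
    """Hypothetical business logic shared by both call styles."""
    return amount_cents + amount_cents * tax_rate_percent // 100

def call_in_process(amount_cents: int, tax_rate_percent: int) -> int:
    """Monolith style: a direct function call inside one process."""
    return price_with_tax(amount_cents, tax_rate_percent)

def service_endpoint(request_body: bytes) -> bytes:
    """Stand-in for a separate service: parses a request and encodes a response."""
    payload = json.loads(request_body)
    total = price_with_tax(payload["amount_cents"], payload["tax_rate_percent"])
    return json.dumps({"total_cents": total}).encode("utf-8")

def call_across_boundary(amount_cents: int, tax_rate_percent: int) -> int:
    """Service style: serialize, send, receive, deserialize.

    A real deployment would send these bytes over the network; here the
    endpoint is called directly so the example stays self-contained.
    """
    request_body = json.dumps(
        {"amount_cents": amount_cents, "tax_rate_percent": tax_rate_percent}
    ).encode("utf-8")
    response_body = service_endpoint(request_body)
    return json.loads(response_body)["total_cents"]

if __name__ == "__main__":
    print(call_in_process(1000, 20))
    print(call_across_boundary(1000, 20))
```

Both calls return the same result, but the second one encodes and decodes data twice and, in a real system, adds a network round trip on top.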

Security Considerations in Both Approaches

Monolithic deployment consolidates an entire application into a single unit with little isolation between components, so if one component is compromised the breach can spread to the rest of the application. Containerization enhances security by encapsulating services in isolated environments, reducing the blast radius of attacks and enabling granular access controls through orchestrators like Kubernetes. However, container security requires diligent management of image vulnerabilities, runtime threats, and infrastructure hardening to prevent exploitation within container clusters.

Real-World Use Cases: Monolithic vs Containerized Systems

Monolithic deployment remains prevalent in legacy applications such as enterprise resource planning (ERP) systems and traditional banking software due to their stability and simplicity. Containerization, exemplified by Docker and Kubernetes, drives agility in sectors like e-commerce and financial technology by enabling microservices architecture, continuous integration, and rapid scaling. Organizations like Netflix and Spotify leverage containerized systems to enhance deployment speed, resilience, and resource optimization compared to monolithic counterparts.

Choosing the Right Approach for Your Project

Monolithic deployment consolidates an entire application into a single executable, offering straightforward development and simpler debugging, ideal for smaller projects with limited scalability needs. Containerization, using technologies like Docker and Kubernetes, enables modular, scalable, and portable application components, facilitating continuous integration and deployment in complex, distributed systems. Selecting the right approach depends on project size, scalability requirements, resource availability, and the need for rapid updates or microservices architecture.

libterm.com
