Concurrency lets multiple tasks make progress during overlapping time periods, which can improve program efficiency and responsiveness. It is essential for exploiting multi-core processors and for handling asynchronous operations. The rest of this article compares concurrency with deadlock, the failure mode in which concurrent tasks block one another permanently.

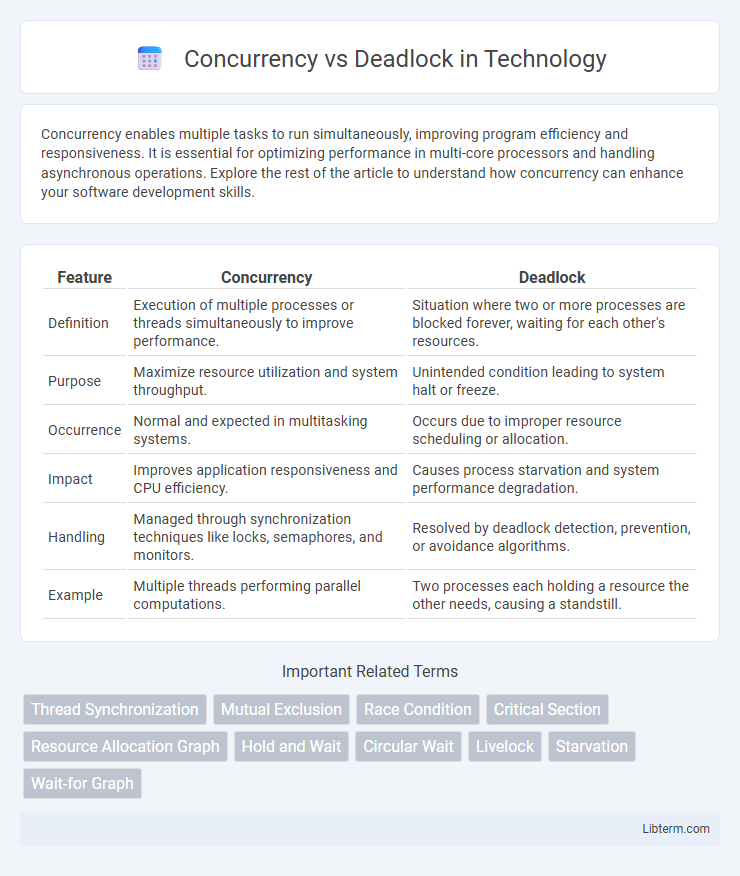

Table of Comparison

| Feature | Concurrency | Deadlock |
|---|---|---|
| Definition | Execution of multiple processes or threads in overlapping time periods to improve performance. | Situation where two or more processes are blocked forever, each waiting for a resource held by another. |
| Purpose | Maximize resource utilization and system throughput. | None; it is an unintended condition that can halt or freeze part or all of a system. |
| Occurrence | Normal and expected in multitasking systems. | Occurs due to improper resource scheduling or allocation. |
| Impact | Improves application responsiveness and CPU efficiency. | Blocks the affected processes indefinitely and degrades system performance. |
| Handling | Managed through synchronization techniques like locks, semaphores, and monitors. | Handled by deadlock prevention, avoidance, or detection and recovery. |
| Example | Multiple threads performing parallel computations. | Two processes each holding a resource the other needs, causing a standstill. |

Introduction to Concurrency and Deadlock

Concurrency allows multiple processes or threads to make progress in overlapping time periods, improving system efficiency and resource utilization. Deadlock occurs when two or more processes cannot proceed because each is waiting for another to release a resource, so the processes involved stop making progress entirely. Understanding the mechanisms behind concurrency and deadlock is essential for designing robust multi-threaded and distributed systems.

Understanding Concurrency in Computing

Concurrency in computing means structuring work so that multiple processes or threads are in progress at once; on a multi-core machine they may also run in parallel. It involves managing shared resources and synchronizing operations to avoid conflicts and ensure correct program behavior. Effective concurrency control keeps latency low and throughput high without introducing deadlock, a state where competing processes wait indefinitely for resources.
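As a minimal sketch of synchronizing access to a shared resource, the Python snippet below guards a shared counter with a lock so that concurrent increments do not overwrite each other. The names `SafeCounter` and `run_workers` are illustrative assumptions, not part of any library.

```python
import threading


class SafeCounter:
    """A shared counter whose read-modify-write step is guarded by a lock."""

    def __init__(self) -> None:
        self.value = 0
        self._lock = threading.Lock()

    def increment(self) -> None:
        with self._lock:  # only one thread at a time may update the value
            self.value += 1


def run_workers(num_threads: int = 4, increments: int = 10_000) -> int:
    """Start several threads that all increment the same counter."""
    counter = SafeCounter()

    def work() -> None:
        for _ in range(increments):
            counter.increment()

    threads = [threading.Thread(target=work) for _ in range(num_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return counter.value
```

Without the lock, `self.value += 1` is a read-modify-write sequence that threads can interleave, so some updates may be lost.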

Key Concepts: Processes, Threads, and Resources

A process is an independent unit of execution with its own address space, while a thread is a lightweight unit of execution that shares memory with the other threads of its process. Both compete for system resources such as CPU time, memory, and I/O devices. Deadlock occurs when competing processes or threads hold resources while waiting indefinitely for others, causing a system halt or significant performance degradation.

What is Deadlock?

Deadlock is a state in concurrent computing where two or more processes cannot proceed because each is waiting for another to release a resource. This stalemate arises when processes hold resources while waiting for resources held by others, creating a cycle of dependencies that none of them can break. Identifying and resolving deadlocks is critical in operating systems and multithreaded applications to keep resource allocation efficient and the system responsive.

Causes of Deadlock in Concurrent Systems

Deadlock in concurrent systems can arise only when four conditions, often called the Coffman conditions, hold at the same time: mutual exclusion, hold and wait, no preemption, and circular wait. Mutual exclusion means a resource can be used by only one process at a time, while hold and wait means a process keeps the resources it already has while requesting others. No preemption means resources cannot be forcibly taken away from a process, and circular wait means there is a closed chain of processes, each waiting on a resource held by the next.
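The circular wait condition can be checked mechanically. The sketch below assumes the system state is available as a wait-for graph, a mapping from each process to the processes it is waiting on, and uses depth-first search to find a closed chain. The function name `find_wait_cycle` and the process labels are hypothetical. A cycle implies deadlock only when every resource has a single instance.

```python
from typing import Dict, List, Optional


def find_wait_cycle(wait_for: Dict[str, List[str]]) -> Optional[List[str]]:
    """Return one cycle in the wait-for graph as a list of names, or None."""
    WHITE, GRAY, BLACK = 0, 1, 2  # unvisited, on the current path, fully explored
    color = {p: WHITE for p in wait_for}
    path: List[str] = []

    def visit(p: str) -> Optional[List[str]]:
        color[p] = GRAY
        path.append(p)
        for q in wait_for.get(p, []):
            state = color.get(q, WHITE)
            if state == GRAY:
                # q is already on the current path: the chain closes on itself.
                return path[path.index(q):] + [q]
            if state == WHITE:
                found = visit(q)
                if found:
                    return found
        path.pop()
        color[p] = BLACK
        return None

    for p in list(wait_for):
        if color[p] == WHITE:
            cycle = visit(p)
            if cycle:
                return cycle
    return None
```

For example, `find_wait_cycle({"P1": ["P2"], "P2": ["P3"], "P3": ["P1"]})` reports the chain `P1`, `P2`, `P3`, `P1`, while a graph with no closed chain yields `None`.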

Concurrency Control Techniques

Concurrency control techniques manage simultaneous execution of transactions to prevent conflicts and maintain data integrity in multiuser database systems. Methods such as locking protocols, timestamp ordering, and optimistic concurrency control ensure serializability by coordinating access to shared resources. Effective concurrency control minimizes deadlocks by using deadlock detection, prevention, or avoidance strategies, thus enhancing system performance and reliability.
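To make optimistic concurrency control more concrete, here is a minimal in-memory sketch in Python. It assumes each record carries a version number and that a write succeeds only if the version is unchanged since the writer read it. `VersionedStore`, `Row`, and `write_if_unchanged` are illustrative names, not the API of any real database.

```python
import threading
from dataclasses import dataclass


@dataclass
class Row:
    value: int
    version: int = 0


class VersionedStore:
    """Holds one row; writers validate the version instead of holding a long lock."""

    def __init__(self, value: int) -> None:
        self._row = Row(value)
        self._guard = threading.Lock()  # protects only the short validate-and-write step

    def read(self) -> Row:
        with self._guard:
            return Row(self._row.value, self._row.version)  # return a snapshot copy

    def write_if_unchanged(self, new_value: int, expected_version: int) -> bool:
        with self._guard:
            if self._row.version != expected_version:
                return False  # another writer committed first; the caller should retry
            self._row = Row(new_value, expected_version + 1)
            return True
```

If two writers read the same snapshot, the first call to `write_if_unchanged` succeeds and the second returns `False`, so the second writer has to re-read and retry rather than silently overwrite the first update.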

Deadlock Prevention and Avoidance Strategies

Deadlock prevention strategies structurally negate at least one of the four Coffman conditions (mutual exclusion, hold and wait, no preemption, circular wait), for example by imposing a global resource ordering that rules out circular wait or by allowing resources to be preempted, so that processes cannot enter a deadlock state. Deadlock avoidance algorithms such as the Banker's algorithm evaluate each resource request at runtime and grant it only if the resulting state is safe, meaning every process can still finish in some order. Both strategies aim to preserve concurrency without halting processes, but prevention enforces strict resource allocation rules while avoidance relies on runtime analysis.
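The safety check at the core of the Banker's algorithm can be sketched as follows. This is a simplified illustration that assumes the available resources, current allocations, and remaining needs are known as plain lists; the function name `safe_sequence` and the sample numbers are made up for the example.

```python
from typing import List, Optional


def safe_sequence(available: List[int],
                  allocation: List[List[int]],
                  need: List[List[int]]) -> Optional[List[int]]:
    """Return an order in which all processes can finish, or None if unsafe."""
    work = list(available)
    finished = [False] * len(allocation)
    order: List[int] = []
    progressed = True
    while progressed:
        progressed = False
        for i, done in enumerate(finished):
            if not done and all(n <= w for n, w in zip(need[i], work)):
                # Process i can run to completion and then return what it holds.
                work = [w + a for w, a in zip(work, allocation[i])]
                finished[i] = True
                order.append(i)
                progressed = True
    return order if all(finished) else None


# Illustrative state: two resource types, three processes.
allocation = [[1, 0], [0, 1], [1, 1]]
need = [[2, 1], [0, 1], [1, 0]]
print(safe_sequence([1, 1], allocation, need))  # a safe order exists
print(safe_sequence([0, 0], allocation, need))  # no process can proceed
```

An avoidance scheme would run a check like this on the state that would result from granting a request, and delay the request if no safe order exists.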

Real-World Examples of Concurrency and Deadlock

In real-world applications, concurrency is exemplified by multiple users accessing a banking system at the same time, with their transactions processed in parallel without interfering with one another. Deadlock occurs in scenarios such as database systems where two transactions each hold a lock on a resource the other needs, causing both to wait indefinitely. These examples highlight the importance of designing systems that manage resource allocation and synchronization effectively to prevent deadlocks while enabling concurrent operations.
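The banking scenario can be sketched in Python to show how two opposing transfers avoid locking each other out. The example assumes every account has a unique numeric id and always acquires locks in ascending id order. `Account`, `transfer`, and `run_opposing_transfers` are hypothetical names for illustration.

```python
import threading


class Account:
    def __init__(self, account_id: int, balance: int) -> None:
        self.account_id = account_id
        self.balance = balance
        self.lock = threading.Lock()


def transfer(src: Account, dst: Account, amount: int) -> None:
    if src is dst:
        return  # nothing to move, and locking the same account twice would block
    # Lock accounts in ascending id order regardless of transfer direction, so
    # two opposing transfers can never each hold one lock and wait for the other.
    first, second = sorted((src, dst), key=lambda acct: acct.account_id)
    with first.lock, second.lock:
        src.balance -= amount
        dst.balance += amount


def run_opposing_transfers(rounds: int = 1000) -> tuple:
    """Run A-to-B and B-to-A transfers concurrently and return both balances."""
    a, b = Account(1, 1000), Account(2, 1000)

    def a_to_b() -> None:
        for _ in range(rounds):
            transfer(a, b, 1)

    def b_to_a() -> None:
        for _ in range(rounds):
            transfer(b, a, 1)

    threads = [threading.Thread(target=a_to_b), threading.Thread(target=b_to_a)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return a.balance, b.balance
```

If each transfer instead locked its source account first, the two threads could each take one lock and wait forever for the other, which is the two-transaction deadlock described above.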

Impact of Deadlock on System Performance

Deadlock severely degrades system performance by causing processes to become indefinitely blocked, preventing resource allocation and execution progress. This stagnation leads to reduced CPU utilization and increased response times, ultimately lowering overall throughput. Effective deadlock detection and prevention mechanisms are critical to maintaining optimal system efficiency and stability.

Best Practices for Handling Concurrency and Deadlock

Effective handling of concurrency requires implementing synchronization mechanisms such as locks, semaphores, and atomic operations to ensure data consistency and prevent race conditions. Best practices include using deadlock detection algorithms, applying resource ordering, and employing timeout strategies to mitigate deadlock risks. Optimizing transaction design and leveraging concurrency control models like optimistic or pessimistic locking further enhance system reliability and performance.
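A timeout strategy can be sketched with the `timeout` parameter of `threading.Lock.acquire` in Python's standard library. The helper name `acquire_both` and the default timeout are assumptions for illustration. The idea is to release what is already held when the second lock cannot be obtained, rather than holding and waiting indefinitely.

```python
import threading


def acquire_both(first: threading.Lock,
                 second: threading.Lock,
                 timeout: float = 0.1) -> bool:
    """Try to take both locks; back off and report failure instead of waiting forever."""
    if not first.acquire(timeout=timeout):
        return False
    if not second.acquire(timeout=timeout):
        first.release()  # give up what we hold so other threads can proceed
        return False
    return True  # the caller now holds both locks and must release them
```

A caller that gets `False` would typically wait briefly, ideally for a randomized interval, and then retry, so that two competing threads do not keep colliding in lockstep.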


libterm.com
