Serverless computing allows developers to build and run applications without managing server infrastructure. The platform allocates resources automatically based on demand, which improves scalability, can reduce operational costs, and speeds up deployment. This article compares serverless computing with containerization across architecture, cost, scalability, security, and portability.

Table of Comparison

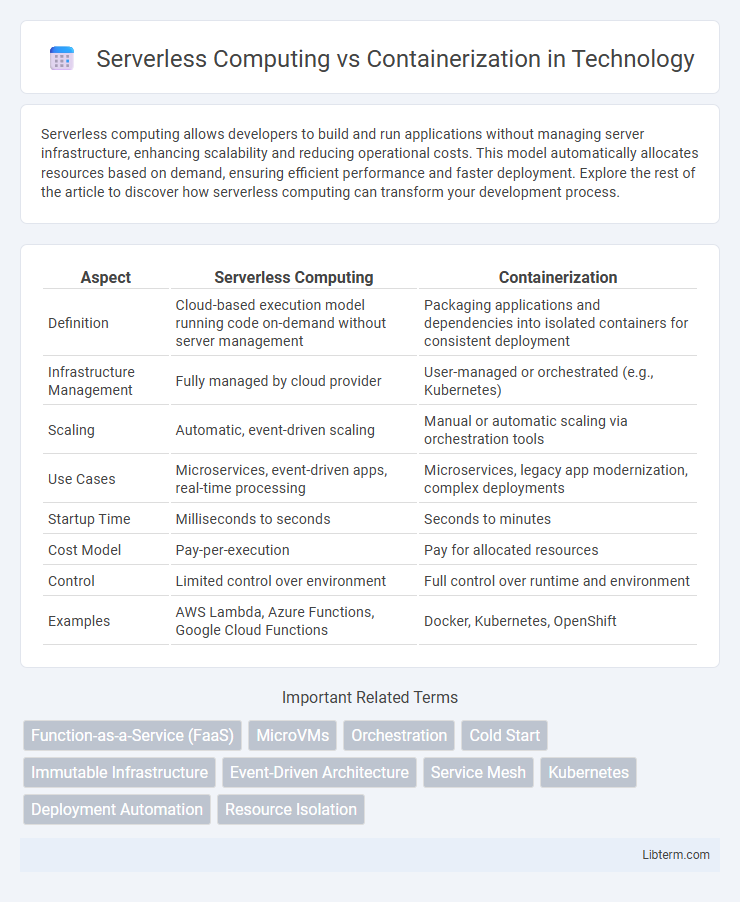

| Aspect | Serverless Computing | Containerization |
|---|---|---|
| Definition | Cloud-based execution model running code on-demand without server management | Packaging applications and dependencies into isolated containers for consistent deployment |
| Infrastructure Management | Fully managed by cloud provider | User-managed or orchestrated (e.g., Kubernetes) |
| Scaling | Automatic, event-driven scaling | Manual or automatic scaling via orchestration tools |
| Use Cases | Microservices, event-driven apps, real-time processing | Microservices, legacy app modernization, complex deployments |
| Startup Time | Milliseconds to seconds | Seconds to minutes |
| Cost Model | Pay-per-execution | Pay for allocated resources |
| Control | Limited control over environment | Full control over runtime and environment |
| Examples | AWS Lambda, Azure Functions, Google Cloud Functions | Docker, Kubernetes, OpenShift |

Introduction to Serverless Computing and Containerization

Serverless computing enables developers to build and run applications without managing server infrastructure, automatically scaling resources based on demand while charging only for actual usage. Containerization packages applications and their dependencies into lightweight, portable containers that run consistently across different environments, improving deployment speed and resource efficiency. Both technologies streamline cloud application development but serve distinct purposes: serverless abstracts infrastructure management, whereas containers offer control over the runtime environment.

Key Differences Between Serverless and Containers

Serverless computing removes server management from the developer: the platform scales functions automatically with demand. Containerization packages applications and their dependencies into isolated environments that run consistently across different computing platforms. Serverless abstracts infrastructure and offers micro-billing and event-driven execution, while containers provide full control over the runtime environment and support complex, long-running applications. Resource allocation in serverless is dynamic and managed by the cloud provider, whereas containers leave scaling to the user, who either performs it manually or automates it through an orchestrator such as Kubernetes.

Architecture Overview: Serverless vs Containerization

Serverless computing architecture abstracts infrastructure management by automatically provisioning and scaling function-based execution environments, enabling developers to focus solely on code without handling servers. Containerization encapsulates applications and dependencies within lightweight, isolated containers orchestrated by platforms like Kubernetes, allowing precise control over deployment, scaling, and resource allocation. Serverless emphasizes event-driven, ephemeral functions with granular scaling, while containerization supports persistent, stateful applications with customizable runtime environments.
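The architectural contrast can be illustrated with a minimal sketch in which the same piece of logic is exposed both ways. The `greet` function, the event shape, and the port are hypothetical; the `(event, context)` signature follows the AWS Lambda Python convention, and the container side uses only the Python standard library rather than a production web server.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer


def greet(name: str) -> dict:
    """Hypothetical business logic shared by both deployment styles."""
    return {"message": f"Hello, {name}!"}


# Serverless style: the platform invokes this function once per event and
# owns the process lifecycle. The event shape used here is an assumption.
def handler(event, context):
    name = event.get("name", "world")
    return {"statusCode": 200, "body": json.dumps(greet(name))}


# Container style: the application owns a long-running process that listens
# on a port; an orchestrator decides how many copies of it to run.
class GreetHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        name = self.path.strip("/") or "world"
        body = json.dumps(greet(name)).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def serve(port: int = 8080) -> None:
    """Entry point a container image would run as its main process."""
    HTTPServer(("0.0.0.0", port), GreetHandler).serve_forever()
```

In the first style there is no server code at all; in the second, the listening loop, port, and process lifetime are part of the application and therefore under the developer's control.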

Cost Comparison: Serverless vs Containers

Serverless computing uses a pay-as-you-go pricing model that charges only for actual usage, typically per request and per unit of execution time, which can lead to significant cost savings for intermittent workloads. Containerization typically requires provisioning virtual machines or cluster capacity in advance, so costs accrue for allocated resources even when they sit idle. For sustained, predictable workloads, containers may be more cost-efficient, while serverless tends to reduce expenses for variable or unpredictable traffic patterns.
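A rough way to reason about this trade-off is to compute the monthly invocation count at which the two models cost the same. The sketch below is a simplified model, not any provider's actual pricing: the function names are hypothetical, all prices are parameters the reader must supply, and it ignores free tiers, data transfer, and orchestration overhead.

```python
def serverless_monthly_cost(invocations, price_per_million_requests,
                            avg_duration_s, memory_gb, price_per_gb_second):
    """Pay-per-execution: a request fee plus compute billed per GB-second."""
    request_cost = invocations / 1_000_000 * price_per_million_requests
    compute_cost = invocations * avg_duration_s * memory_gb * price_per_gb_second
    return request_cost + compute_cost


def container_monthly_cost(instances, price_per_instance_hour, hours=730):
    """Pay for allocated capacity: instances are billed whether busy or idle."""
    return instances * price_per_instance_hour * hours


def break_even_invocations(fixed_monthly_cost, price_per_million_requests,
                           avg_duration_s, memory_gb, price_per_gb_second):
    """Invocations per month at which serverless cost equals the fixed cost."""
    cost_per_invocation = (price_per_million_requests / 1_000_000
                           + avg_duration_s * memory_gb * price_per_gb_second)
    return fixed_monthly_cost / cost_per_invocation
```

Below the break-even volume the pay-per-execution model is cheaper under these assumptions; above it, the fixed allocation wins. Plugging in real prices from a provider's current price list is left to the reader.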

Scalability and Performance Considerations

Serverless computing scales and allocates resources automatically based on demand, minimizing idle capacity, which suits unpredictable workloads. Containerization provides more control over scalability, allowing precise resource management and consistent performance across environments, which benefits applications requiring steady, high-throughput processing. On the performance side, serverless functions may incur cold start latency whenever a new instance has to be initialized, while containers that are already running serve requests without that penalty and allow finer tuning for performance-critical applications.
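The effect of cold starts on intermittent traffic can be seen in a toy model. The sketch below assumes a single function instance that the platform keeps warm for a fixed idle timeout after each request; the function name, the timeout, and the latency figures are illustrative assumptions, and real platforms use their own, usually undocumented, retention policies.

```python
def simulate_latencies(arrival_times, idle_timeout_s, cold_start_s, work_s):
    """Return per-request latency for sorted, non-overlapping arrivals.

    A request that arrives after the instance's warm window has expired
    pays cold_start_s in addition to the normal work_s.
    """
    latencies = []
    warm_until = float("-inf")  # no warm instance exists before the first request
    for t in arrival_times:
        is_cold = t > warm_until
        latency = work_s + (cold_start_s if is_cold else 0.0)
        latencies.append(latency)
        # The instance stays warm for idle_timeout_s after it finishes.
        warm_until = t + latency + idle_timeout_s
    return latencies
```

For example, with arrivals at 0, 1, 2, and 600 seconds and a 300-second idle timeout, the first and last requests are cold and the two in between are warm. A continuously running container corresponds to the case where the warm window never expires.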

Security Implications of Serverless and Containers

Serverless computing shifts infrastructure security to the provider, which patches the underlying hosts and runtimes and so reduces the attack surface the developer must manage, but it makes permissions harder to scope correctly because each of many small functions needs its own least-privilege policy. Containerization isolates workloads using Linux namespaces and control groups (cgroups), yet containers share the host kernel, so diligent configuration management is required to prevent container escape and the use of insecure images. Both models demand robust identity and access controls, continuous monitoring, and adherence to security best practices to mitigate risks inherent in multi-tenant and dynamic environments.
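Function-level permissions are easier to audit when overly broad grants are flagged automatically. The following is a minimal sketch of such a check, assuming policies in the AWS IAM JSON layout (`Statement`, `Effect`, `Action`, `Resource`); the function names are hypothetical, and the check covers only plain wildcards, not conditions, `NotAction`, or partial wildcards in resource ARNs.

```python
def _as_list(value):
    """IAM allows a single string or a list; normalize to a list."""
    return value if isinstance(value, list) else [value]


def find_overbroad_statements(policy: dict) -> list:
    """Flag Allow statements that use wildcard actions or resources."""
    findings = []
    statements = policy.get("Statement", [])
    if isinstance(statements, dict):  # a single statement may be given as an object
        statements = [statements]
    for index, stmt in enumerate(statements):
        if stmt.get("Effect") != "Allow":
            continue
        actions = _as_list(stmt.get("Action", []))
        resources = _as_list(stmt.get("Resource", []))
        wildcard_actions = [a for a in actions if a == "*" or a.endswith(":*")]
        if wildcard_actions:
            findings.append(f"statement {index}: wildcard action(s) {wildcard_actions}")
        if "*" in resources:
            findings.append(f"statement {index}: wildcard resource")
    return findings
```

A check like this could run in a deployment pipeline so that a function's role is rejected before it ships with `"Action": "s3:*"` on `"Resource": "*"`. It complements, rather than replaces, the provider's own policy analysis tools.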

Application Use Cases for Serverless and Containers

Serverless computing excels in event-driven applications, real-time data processing, and API backends where automatic scaling and reduced infrastructure management are critical. Containerization is ideal for complex, stateful applications requiring consistent environments across development, testing, and production, such as microservices architectures and legacy application modernization. Use cases for serverless include chatbot services and IoT data ingestion, while containers dominate in multi-cloud deployments and customizable runtime environments.

Deployment and Management Complexity

Serverless computing abstracts infrastructure management by automatically handling deployment, scaling, and maintenance, significantly reducing operational complexity for developers. Containerization provides greater control over deployment environments through encapsulated applications but requires managing orchestration tools like Kubernetes, increasing the complexity of resource allocation and scaling. Choosing between serverless and containerization depends on balancing ease of deployment against the need for fine-grained control over application lifecycle and infrastructure management.

Vendor Lock-In and Ecosystem Support

Serverless computing often results in higher vendor lock-in due to reliance on proprietary APIs, event formats, and platform-specific services, making migration between providers challenging. Containerization builds on open standards such as the Open Container Initiative (OCI) image and runtime specifications and on open-source tools like Docker and Kubernetes, which gives it greater ecosystem flexibility and portability across cloud environments. Ecosystem support for containers includes extensive tooling and community-driven resources, whereas serverless ecosystems are more confined to individual cloud vendors' offerings.
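One common way to limit lock-in is to keep business logic free of provider-specific types and confine each platform's event format to a thin adapter. The sketch below illustrates the idea under assumptions: `compute_total` and the order layout are hypothetical, the Lambda adapter assumes a proxy-style event carrying a JSON string in `event["body"]`, and the container adapter targets the standard WSGI interface.

```python
import json


def compute_total(order: dict) -> dict:
    """Provider-agnostic core logic: sums line items priced in cents."""
    total = sum(item["price_cents"] * item["quantity"]
                for item in order.get("items", []))
    return {"total_cents": total}


def lambda_handler(event, context):
    """Thin adapter for a serverless platform using the (event, context) convention."""
    order = json.loads(event.get("body") or "{}")
    return {"statusCode": 200, "body": json.dumps(compute_total(order))}


def wsgi_app(environ, start_response):
    """Thin adapter for any WSGI server running inside a container."""
    length = int(environ.get("CONTENT_LENGTH") or 0)
    raw = environ["wsgi.input"].read(length) if length else b""
    order = json.loads(raw or b"{}")
    body = json.dumps(compute_total(order)).encode("utf-8")
    start_response("200 OK", [("Content-Type", "application/json"),
                              ("Content-Length", str(len(body)))])
    return [body]
```

With this split, moving between providers or from functions to containers means rewriting only the adapter. Dependencies on managed services such as queues or databases are a separate source of lock-in that this pattern does not address.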

Future Trends in Serverless Computing and Containerization

Future trends in serverless computing include enhanced integration with AI-driven automation, improved multi-cloud portability, and granular cost optimization through per-invocation billing models. Containerization advances are focusing on better orchestration with Kubernetes enhancements, increased security via runtime isolation, and seamless hybrid cloud deployments for scalable microservices architecture. Both technologies are converging toward more efficient, developer-centric platforms that prioritize scalability, reduced operational overhead, and real-time analytics capabilities.


libterm.com
