An in-memory cache stores data directly in the system's RAM, which makes reads far faster than a round trip to a database and reduces database load. This technique matters for applications that need real-time data retrieval and responsive user experiences.

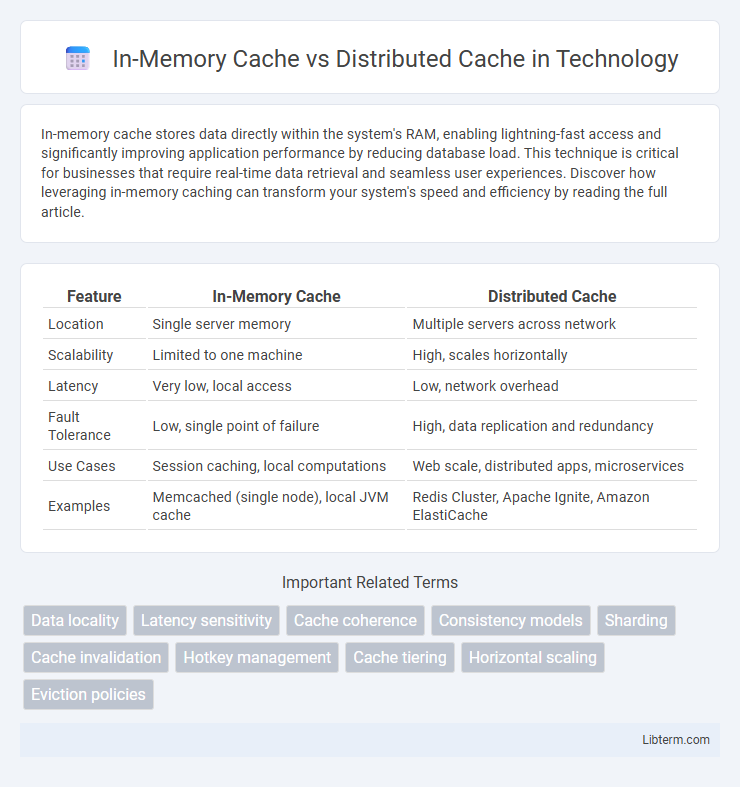

Table of Comparison

| Feature | In-Memory Cache | Distributed Cache |
|---|---|---|
| Location | Single server memory | Multiple servers across network |
| Scalability | Limited to one machine | High, scales horizontally |
| Latency | Very low, local access | Low, network overhead |
| Fault Tolerance | Low, single point of failure | High, data replication and redundancy |
| Use Cases | Session caching, local computations | Web scale, distributed apps, microservices |
| Examples | Memcached (single node), local JVM cache | Redis Cluster, Apache Ignite, Amazon ElastiCache |

Introduction to Caching Mechanisms

In-memory cache stores data directly within the application's RAM, providing ultra-fast access to frequently used information, ideal for single-server environments. Distributed cache systems, such as Redis or Memcached, distribute data across multiple servers to ensure scalability, fault tolerance, and higher availability in large-scale applications. Both caching mechanisms significantly reduce database load and improve application response times by minimizing data retrieval latency.
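The reduction in database load can be illustrated with a small cache-aside sketch. Everything here is hypothetical: `CountingDatabase` is a stand-in for a real backing store, and the plain dictionary stands in for whichever cache is actually used.

```python
class CountingDatabase:
    """Stand-in for a slow backing store; counts how often it is queried."""

    def __init__(self, rows):
        self.rows = dict(rows)
        self.queries = 0

    def fetch(self, key):
        self.queries += 1
        return self.rows.get(key)


class CacheAside:
    """Cache-aside helper: check the cache first, fall back to the database."""

    def __init__(self, database):
        self.database = database
        self.cache = {}

    def get(self, key):
        if key in self.cache:               # cache hit: no database query
            return self.cache[key]
        value = self.database.fetch(key)    # cache miss: query the database once
        self.cache[key] = value
        return value


db = CountingDatabase({"user:1": "Ada", "user:2": "Grace"})
store = CacheAside(db)
names = [store.get("user:1") for _ in range(1000)]
print(db.queries)  # 1
```

A thousand reads of the same key reach the database only once; the remaining reads are served from memory.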

What is In-Memory Cache?

An in-memory cache stores data directly in the RAM of a single server, enabling rapid access and low latency for frequently requested information. It suits applications that need high-speed data retrieval within a localized environment, but it does not scale across multiple nodes. Common examples include a local JVM cache inside the application process and a single-node Memcached or Redis instance, both of which keep cached data in the memory of one machine.
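As a rough illustration of what such a cache does inside one process, the sketch below keeps entries in a dictionary, expires them after a time-to-live, and evicts the least recently used entry when it is full. The class name `LocalCache` and its parameters are assumptions made for this example, not the API of any particular library.

```python
import time
from collections import OrderedDict


class LocalCache:
    """Single-process cache with a size limit (LRU eviction) and a TTL."""

    def __init__(self, max_entries=1024, ttl_seconds=60.0, clock=time.monotonic):
        self.max_entries = max_entries
        self.ttl_seconds = ttl_seconds
        self.clock = clock                  # injectable so expiry can be tested
        self._entries = OrderedDict()       # key -> (expires_at, value)

    def get(self, key, default=None):
        item = self._entries.get(key)
        if item is None:
            return default
        expires_at, value = item
        if self.clock() >= expires_at:      # entry is too old: drop it
            del self._entries[key]
            return default
        self._entries.move_to_end(key)      # mark as most recently used
        return value

    def set(self, key, value):
        self._entries[key] = (self.clock() + self.ttl_seconds, value)
        self._entries.move_to_end(key)
        if len(self._entries) > self.max_entries:
            self._entries.popitem(last=False)   # evict least recently used


cache = LocalCache(max_entries=2, ttl_seconds=30)
cache.set("a", 1)
cache.set("b", 2)
cache.get("a")       # touch "a", so "b" becomes the least recently used entry
cache.set("c", 3)    # the cache is full, so "b" is evicted
```

Because the data lives in the process's own memory, everything in this cache is lost when the process restarts, which is the single-node limitation described above.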

What is Distributed Cache?

Distributed cache is a data storage system that spreads cached data across multiple networked servers, enabling high availability, scalability, and fault tolerance for applications with large or fluctuating workloads. Unlike in-memory caches that store data locally within a single machine's memory, distributed caches allow rapid data access and synchronization across different nodes, reducing latency in distributed computing environments. Key technologies implementing distributed caching include Redis, Memcached, and Hazelcast, which are widely used in cloud-native, microservices, and big data architectures.
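The idea of spreading keys over several servers and keeping a second copy for fault tolerance can be sketched in a few lines. This is a toy model under stated assumptions: each "node" is just a dictionary in the same process, the node names are made up, and real systems such as Redis or Hazelcast use their own partitioning and replication protocols.

```python
import hashlib


class ShardedCache:
    """Toy distributed cache: each key lives on a primary node and one replica."""

    def __init__(self, node_names):
        self.node_names = list(node_names)
        self.nodes = {name: {} for name in self.node_names}   # name -> local store
        self.down = set()

    def _owners(self, key):
        digest = hashlib.sha256(key.encode("utf-8")).digest()
        index = int.from_bytes(digest[:8], "big") % len(self.node_names)
        replica = (index + 1) % len(self.node_names)
        return [self.node_names[index], self.node_names[replica]]

    def set(self, key, value):
        for name in self._owners(key):
            if name not in self.down:
                self.nodes[name][key] = value

    def get(self, key):
        for name in self._owners(key):
            if name not in self.down and key in self.nodes[name]:
                return self.nodes[name][key]
        return None

    def fail(self, name):
        self.down.add(name)
        self.nodes[name].clear()    # whatever that node held in RAM is gone


cluster = ShardedCache(["node-a", "node-b", "node-c"])
cluster.set("session:42", {"user": "ada"})
primary = cluster._owners("session:42")[0]
cluster.fail(primary)
print(cluster.get("session:42"))    # still served, this time by the replica
```

Losing the primary node does not lose the entry because a second node holds a copy; losing both owners would.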

Key Differences Between In-Memory and Distributed Cache

An in-memory cache stores data directly within the RAM of a single application or server, offering extremely low latency and high-speed data access for localized caching needs. A distributed cache spreads cached data across multiple servers or nodes, enabling the scalability and fault tolerance that large-scale, high-availability systems need. The key difference is that an in-memory cache is limited to a single node and loses its data if that node fails, whereas a distributed cache can replicate data across a cluster, supporting horizontal scalability and resilience.

Performance Considerations

In-memory cache offers ultra-low latency and high throughput by storing data directly in the RAM of a single server, making it ideal for applications requiring rapid access to frequently used data with minimal delay. Distributed cache spreads data across multiple nodes, enhancing scalability and fault tolerance but potentially introducing network latency that can impact performance under heavy loads or complex queries. Evaluating data access patterns, consistency requirements, and system architecture is crucial to optimizing cache performance and ensuring efficient resource utilization.

Scalability and Flexibility

In-memory caches offer high-speed data retrieval with low latency, making them ideal for single-node applications but limited in scalability due to constrained memory resources. Distributed caches provide horizontal scalability by spreading data across multiple nodes, ensuring flexibility to handle increasing workloads and fault tolerance. This architecture supports dynamic scaling and improved data availability, making it suitable for large-scale, cloud-based environments.
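One common way to make horizontal scaling cheap is consistent hashing, where adding a node relocates only a fraction of the keys. The sketch below is a minimal version of that technique and is not taken from any specific product; `HashRing`, the node names, and the choice of 100 virtual nodes per server are all assumptions for illustration.

```python
import bisect
import hashlib


def _hash(value):
    digest = hashlib.sha256(value.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big")


class HashRing:
    """Consistent-hash ring with virtual nodes."""

    def __init__(self, nodes, vnodes=100):
        self.vnodes = vnodes
        self._ring = []     # sorted list of (point, node) pairs
        for node in nodes:
            self.add_node(node)

    def add_node(self, node):
        for i in range(self.vnodes):
            bisect.insort(self._ring, (_hash(f"{node}#{i}"), node))

    def node_for(self, key):
        # Walk clockwise to the first ring point at or after the key's hash.
        index = bisect.bisect_left(self._ring, (_hash(key), ""))
        if index == len(self._ring):
            index = 0       # wrap around the ring
        return self._ring[index][1]


keys = [f"key-{i}" for i in range(10_000)]
ring = HashRing(["node-a", "node-b", "node-c"])
before = {k: ring.node_for(k) for k in keys}
ring.add_node("node-d")
after = {k: ring.node_for(k) for k in keys}
moved = sum(1 for k in keys if before[k] != after[k])
print(f"{moved / len(keys):.0%} of keys moved to the new node")
```

With four nodes, roughly a quarter of the keys are expected to move, and every key that moves lands on the new node. A naive `hash(key) % node_count` scheme would instead reshuffle most keys whenever the node count changes.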

Data Consistency and Reliability

An in-memory cache offers low-latency data access by storing data locally within a single application instance, but when several instances each keep their own copy, those copies can drift apart and serve stale data. Distributed cache systems, such as Redis Cluster, improve reliability by replicating data across multiple nodes, and they give every application instance one shared view of the cached data. Replication is often asynchronous, so applications that require high availability and accuracy still need explicit expiration or invalidation policies to limit stale data and data loss.
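The stale-data problem with per-instance caches is easy to reproduce. In this hypothetical sketch, two application instances share one "database" (a dictionary) but each keeps a private cache; `AppInstance` and the `invalidate_peers` flag are invented for the example, and the direct call into a peer's cache stands in for whatever messaging a real system would use.

```python
class AppInstance:
    """One application process with its own private in-memory cache."""

    def __init__(self, database):
        self.database = database
        self.cache = {}
        self.peers = []

    def read(self, key):
        if key not in self.cache:
            self.cache[key] = self.database[key]
        return self.cache[key]

    def write(self, key, value, invalidate_peers=False):
        self.database[key] = value
        self.cache[key] = value
        if invalidate_peers:
            for peer in self.peers:
                peer.cache.pop(key, None)   # peer reloads on its next read


database = {"price": 100}
a = AppInstance(database)
b = AppInstance(database)
a.peers.append(b)

b.read("price")                                 # b caches 100
a.write("price", 120)                           # a updates without telling b
stale = b.read("price")                         # 100: b still serves its old copy
a.write("price", 130, invalidate_peers=True)
fresh = b.read("price")                         # 130: b reloaded from the database
```

A shared distributed cache avoids this particular problem because both instances read the same entry, at the cost of a network hop per lookup.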

Use Cases for In-Memory Cache

In-memory cache excels in scenarios requiring ultra-fast data retrieval and low-latency access, such as real-time analytics, session management, and caching frequently accessed data within a single application instance. Its ability to store data directly in the application's memory allows for quick read/write cycles, making it ideal for high-throughput operations where data consistency is managed locally. Use cases include caching user preferences, temporary data storage during API processing, and accelerating database query performance in microservices architectures.
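For the simplest of these cases, Python's standard library already provides an in-process cache through `functools.lru_cache`. The function `load_preferences` below is a hypothetical placeholder for an expensive lookup, such as a database query for user preferences.

```python
from functools import lru_cache

calls = {"count": 0}


@lru_cache(maxsize=256)
def load_preferences(user_id):
    """Placeholder for an expensive lookup; counts real invocations."""
    calls["count"] += 1
    return {"user_id": user_id, "theme": "dark"}


load_preferences(7)     # miss: the function body runs
load_preferences(7)     # hit: the cached result is returned
info = load_preferences.cache_info()
print(info.hits, info.misses)  # 1 1
```

Note that the cached dictionary is shared between callers, so mutating the returned value changes what later callers see; returning an immutable value or a copy avoids that.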

Use Cases for Distributed Cache

Distributed cache is ideal for applications requiring high scalability, fault tolerance, and data consistency across multiple nodes, such as large-scale e-commerce platforms and real-time analytics systems. It enables faster data retrieval in microservices architectures by sharing cached data across distributed servers, reducing latency and offloading database workloads. Use cases include session management in web applications, leaderboards in gaming, and caching frequently accessed data in cloud-native environments.

Choosing the Right Cache Solution

In-memory cache offers ultra-fast data retrieval by storing data directly within a single application's RAM, ideal for low-latency and high-throughput scenarios with a limited user base. Distributed cache, such as Redis or Memcached clusters, provides scalability and fault tolerance by sharing cached data across multiple nodes, making it suitable for large-scale applications requiring consistency and high availability. Selecting the right cache solution depends on factors including application size, latency requirements, data consistency needs, and infrastructure complexity.

In-Memory Cache Infographic

libterm.com
