Fog nodes enhance computing efficiency by processing data closer to its source, reducing latency and bandwidth usage in IoT and edge computing environments. These nodes play a crucial role in managing data traffic between devices and cloud servers, supporting faster decision-making and improved system reliability. Explore the rest of the article to discover how fog nodes can optimize your network architecture.

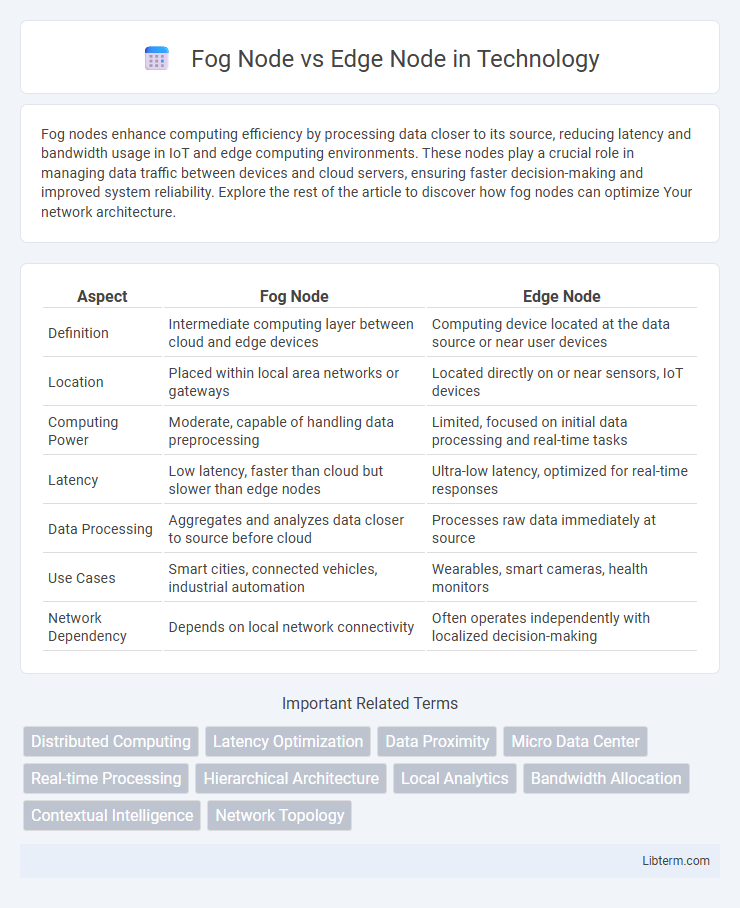

Table of Comparison

| Aspect | Fog Node | Edge Node |
|---|---|---|
| Definition | Intermediate computing layer between cloud and edge devices | Computing device located at the data source or near user devices |
| Location | Placed within local area networks or gateways | Located directly on or near sensors, IoT devices |
| Computing Power | Moderate, capable of handling data preprocessing | Limited, focused on initial data processing and real-time tasks |
| Latency | Low latency, faster than cloud but slower than edge nodes | Ultra-low latency, optimized for real-time responses |
| Data Processing | Aggregates and analyzes data closer to source before cloud | Processes raw data immediately at source |
| Use Cases | Smart cities, connected vehicles, industrial automation | Wearables, smart cameras, health monitors |
| Network Dependency | Depends on local network connectivity | Often operates independently with localized decision-making |

Introduction to Fog Node and Edge Node

Fog nodes extend cloud computing by bringing processing, storage, and networking closer to IoT devices, reducing latency and bandwidth usage. Edge nodes operate directly at the data source, enabling real-time data processing and immediate decision-making for critical applications. Both fog and edge nodes form a hierarchical infrastructure that enhances the efficiency and responsiveness of distributed computing systems.

Key Differences Between Fog Node and Edge Node

Fog nodes operate as intermediate processing units between cloud data centers and edge devices, cutting latency relative to the cloud and filtering data before it travels upstream. Edge nodes are located directly on or near the data-generating devices, enabling real-time processing and immediate response for IoT applications. The primary differences lie in their placement within the network hierarchy, scope of data handling, and specific use cases: fog nodes support broader regional computing, while edge nodes focus on localized, device-level operations.

Architecture Overview: Fog Computing vs Edge Computing

Fog computing architecture distributes computing resources between the cloud and end devices through intermediate fog nodes, facilitating data processing, storage, and networking closer to IoT devices. Edge computing architecture places compute and storage directly on edge nodes such as gateways or sensors, minimizing latency by processing data at the network edge. Fog nodes typically act as local micro data centers with broader geographical distribution, while edge nodes are located at or near the data source for real-time analytics and decision-making.
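
The layering described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a reference design: the class names `EdgeNode` and `FogNode`, the `min_delta` dead-band threshold, and the summary fields are all hypothetical and chosen only to show where each tier does its work.

```python
from dataclasses import dataclass, field
from statistics import mean
from typing import Optional


@dataclass
class EdgeNode:
    """Runs at the data source and drops readings that barely changed."""
    node_id: str
    min_delta: float = 0.5  # hypothetical dead-band threshold
    last_sent: Optional[float] = field(default=None, init=False)

    def process(self, reading: float) -> Optional[float]:
        # Forward a reading only if it moved by at least min_delta
        # since the last forwarded value.
        if self.last_sent is None or abs(reading - self.last_sent) >= self.min_delta:
            self.last_sent = reading
            return reading
        return None


@dataclass
class FogNode:
    """Sits between edge nodes and the cloud and aggregates per edge node."""
    buffers: dict = field(default_factory=dict)

    def ingest(self, node_id: str, value: float) -> None:
        self.buffers.setdefault(node_id, []).append(value)

    def flush(self) -> dict:
        # Summarise each buffer, then clear it. Only this summary
        # would be sent on to the cloud.
        summary = {
            node_id: {"count": len(vals), "mean": mean(vals), "max": max(vals)}
            for node_id, vals in self.buffers.items()
        }
        self.buffers.clear()
        return summary


def run_pipeline(readings):
    """Push raw readings through one edge node and one fog node."""
    edge = EdgeNode("sensor-1")
    fog = FogNode()
    for reading in readings:
        kept = edge.process(reading)
        if kept is not None:
            fog.ingest(edge.node_id, kept)
    return fog.flush()
```

Calling `run_pipeline([20.0, 20.1, 20.2, 21.0, 21.1, 25.0])` forwards only three of the six readings from the edge node, and the fog node reduces those to a single summary record. The split mirrors the text: immediate filtering at the source, aggregation one hop away, and only condensed data going upstream.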

Use Cases for Fog Nodes

Fog nodes enable real-time processing and analytics closer to IoT devices, making them well suited to smart city applications such as traffic management and environmental monitoring. They are deployed in industrial automation to reduce latency and improve decision-making near the network edge. Use cases also include augmented reality, where fog nodes handle high-bandwidth data streams to support seamless user experiences.

Use Cases for Edge Nodes

Edge nodes are essential in scenarios requiring ultra-low latency processing, such as autonomous vehicles, where real-time decision-making is critical. They are widely used in industrial IoT for predictive maintenance and anomaly detection directly at the machinery level. Smart cities leverage edge nodes to handle vast sensor data locally, optimizing traffic management and energy consumption without relying on distant cloud or fog nodes.
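
As an illustration of anomaly detection at the machinery level, the sketch below flags readings that deviate strongly from a rolling baseline using a z-score. It is one simple technique among many and is not drawn from a specific product; the class name `RollingAnomalyDetector`, the window size, the warm-up count of five samples, and the threshold of three standard deviations are assumptions for the example.

```python
from collections import deque
from math import sqrt


class RollingAnomalyDetector:
    """Flags readings far from the mean of a recent window (z-score test)."""

    def __init__(self, window: int = 20, threshold: float = 3.0):
        self.values = deque(maxlen=window)  # recent normal readings
        self.threshold = threshold          # allowed deviation in std units

    def update(self, x: float) -> bool:
        """Return True if x is anomalous relative to the current window."""
        is_anomaly = False
        if len(self.values) >= 5:  # assumed warm-up before judging
            mu = sum(self.values) / len(self.values)
            var = sum((v - mu) ** 2 for v in self.values) / len(self.values)
            std = sqrt(var)
            if std > 0 and abs(x - mu) / std > self.threshold:
                is_anomaly = True
        if not is_anomaly:
            # Keep outliers out of the baseline so one spike
            # does not mask the next.
            self.values.append(x)
        return is_anomaly
```

Because the detector keeps only a fixed-size window and uses plain arithmetic, it fits the limited compute budget that the comparison table attributes to edge nodes. A real deployment would need tuning per sensor and a strategy for slow drift.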

Latency and Performance Comparison

Fog nodes process data closer to the source than a cloud data center does, which reduces latency by shortening the distance data must travel and improves real-time application performance. Edge nodes operate directly on IoT devices or gateways, providing ultra-low latency for time-sensitive tasks by handling computation at the network's periphery. Performance-wise, fog nodes offer greater computational power and storage than edge nodes, supporting complex data analytics, while edge nodes prioritize rapid response and local processing for immediate data handling.
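
The trade-off between proximity and compute power can be made concrete with a toy placement model. Everything numeric here is an assumption for illustration only: the round-trip times, the relative compute capacities, and the rule that one compute unit finishes one work unit per millisecond are invented, and the names `Tier`, `TIERS`, and `choose_tier` are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tier:
    name: str
    round_trip_ms: float  # assumed network round trip to this tier
    compute_units: float  # assumed relative compute capacity


# Illustrative numbers only; measure real values in your own deployment.
TIERS = [
    Tier("edge", round_trip_ms=1.0, compute_units=1.0),
    Tier("fog", round_trip_ms=10.0, compute_units=10.0),
    Tier("cloud", round_trip_ms=100.0, compute_units=100.0),
]


def estimated_latency_ms(tier: Tier, work_units: float) -> float:
    # Network round trip plus processing time, assuming one compute
    # unit handles one work unit per millisecond.
    return tier.round_trip_ms + work_units / tier.compute_units


def choose_tier(work_units: float, deadline_ms: float):
    """Return the fastest tier that meets the deadline, or None."""
    feasible = [
        (estimated_latency_ms(t, work_units), t)
        for t in TIERS
        if estimated_latency_ms(t, work_units) <= deadline_ms
    ]
    if not feasible:
        return None
    return min(feasible, key=lambda pair: pair[0])[1]
```

Under these assumed numbers, a small task with a tight deadline lands on the edge tier, a heavier task moves to the fog tier because the extra compute outweighs the longer round trip, and only very large jobs justify the trip to the cloud.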

Security Considerations in Fog and Edge Nodes

Fog nodes implement security measures such as encryption, authentication, and intrusion detection to protect data transmitted between IoT devices and the cloud, mitigating risks associated with multi-tenant environments. Edge nodes enhance security by processing data locally, which keeps sensitive data on site and minimizes exposure to external cyber threats; they often incorporate hardware-based security modules and real-time anomaly detection. Both fog and edge nodes require robust access control policies and secure communication protocols to safeguard sensitive information across distributed networks.
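
One building block of the authentication mentioned above is message signing between an edge device and a fog node. The sketch below uses HMAC-SHA256 from Python's standard library with a pre-shared key. The function names `sign_message` and `verify_message` and the message layout are hypothetical, and the example covers integrity and origin only: it does not encrypt the payload, protect against replay, or address key distribution.

```python
import hashlib
import hmac
import json


def sign_message(payload: dict, key: bytes) -> dict:
    """Serialise the payload and attach an HMAC-SHA256 tag."""
    # Canonical JSON so sender and receiver hash identical bytes.
    body = json.dumps(payload, sort_keys=True, separators=(",", ":")).encode()
    tag = hmac.new(key, body, hashlib.sha256).hexdigest()
    return {"body": body.decode(), "tag": tag}


def verify_message(message: dict, key: bytes):
    """Return the payload if the tag is valid, otherwise None."""
    body = message["body"].encode()
    expected = hmac.new(key, body, hashlib.sha256).hexdigest()
    # compare_digest avoids leaking information through timing.
    if not hmac.compare_digest(expected, message["tag"]):
        return None
    return json.loads(body)
```

A fog node holding the same key can call `verify_message` on each incoming message and discard anything that returns `None`. In practice this would sit alongside transport encryption such as TLS rather than replace it.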

Scalability and Deployment Challenges

Fog nodes offer enhanced scalability by distributing computing resources closer to data sources, reducing latency and bandwidth usage compared to centralized cloud models. Edge nodes, while providing real-time data processing at the device level, face deployment challenges related to hardware limitations and heterogeneous environments that complicate uniform scaling. Both architectures require robust orchestration and management tools to address their unique constraints and optimize performance in large-scale IoT ecosystems.
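
One small task an orchestration tool has to solve is deciding which fog node serves which device when capacities differ. The following is a minimal greedy sketch, assuming each device has a known load and each fog node a known capacity in the same arbitrary units. The function `assign_devices` and its inputs are hypothetical, and production orchestrators consider far more (locality, failures, rebalancing).

```python
def assign_devices(device_loads: dict, fog_capacity: dict) -> dict:
    """Greedy placement: heaviest devices first, each onto the fog node
    with the most remaining capacity."""
    remaining = dict(fog_capacity)  # copy so the caller's dict is untouched
    assignment = {}
    # Sort by load descending, then by name for a deterministic order.
    ordered = sorted(device_loads.items(), key=lambda kv: (-kv[1], kv[0]))
    for device, load in ordered:
        # Pick the node with the most spare capacity (name breaks ties).
        target = max(remaining, key=lambda node: (remaining[node], node))
        if remaining[target] < load:
            raise ValueError(f"no fog node can host {device}")
        assignment[device] = target
        remaining[target] -= load
    return assignment
```

Placing the heaviest devices first reduces the chance that a large device arrives after capacity has been fragmented by small ones. The approach is a heuristic and does not guarantee an optimal packing.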

Real-World Applications and Industry Adoption

Fog nodes enable real-time processing and analytics closer to IoT devices, enhancing smart city infrastructure by reducing latency in traffic management and environmental monitoring. Edge nodes support immediate data handling within manufacturing environments, improving automation and predictive maintenance through localized computing power. Industries such as transportation, healthcare, and industrial automation increasingly adopt fog and edge computing to optimize performance, ensure data security, and drive efficient decision-making at the source of data generation.

Future Trends in Fog Node and Edge Node Technologies

Future trends in fog node and edge node technologies emphasize tighter integration of AI and machine learning capabilities to enable real-time data processing with minimal latency. Advances in 5G and later-generation connectivity are expected to enhance distributed computing power and support ultra-reliable low-latency communication (URLLC) in fog and edge networks. Security enhancements using blockchain and decentralized trust models are also driving innovation to safeguard data across heterogeneous fog and edge infrastructures.


libterm.com
