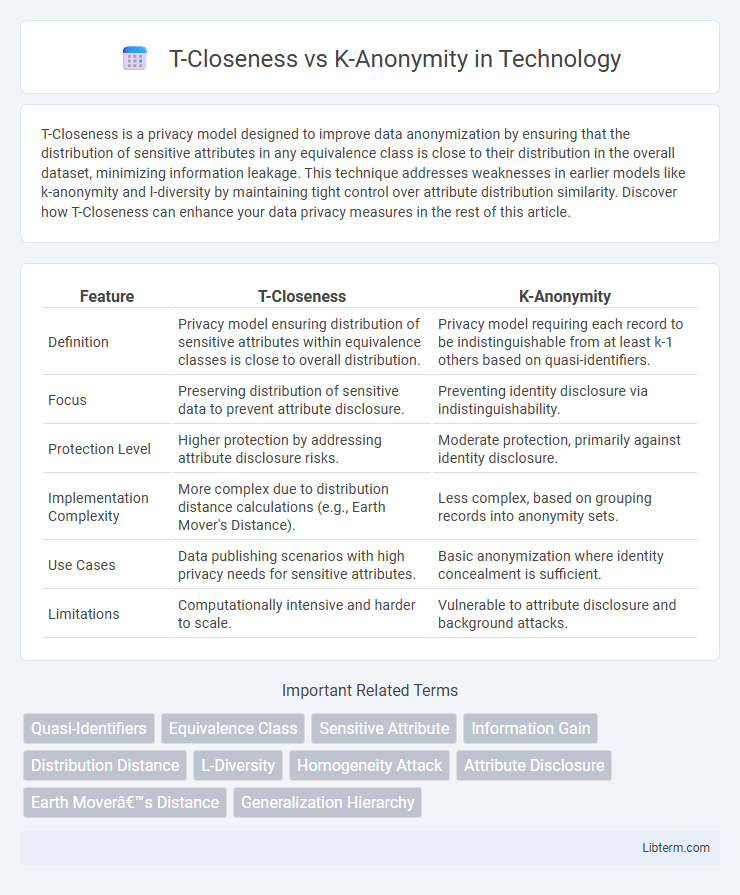

T-Closeness is a privacy model designed to improve data anonymization by ensuring that the distribution of sensitive attributes in any equivalence class is close to their distribution in the overall dataset, minimizing information leakage. This technique addresses weaknesses in earlier models like k-anonymity and l-diversity by maintaining tight control over attribute distribution similarity. Discover how T-Closeness can enhance your data privacy measures in the rest of this article.

Table of Comparison

| Feature | T-Closeness | K-Anonymity |

|---|---|---|

| Definition | Privacy model ensuring distribution of sensitive attributes within equivalence classes is close to overall distribution. | Privacy model requiring each record to be indistinguishable from at least k-1 others based on quasi-identifiers. |

| Focus | Preserving distribution of sensitive data to prevent attribute disclosure. | Preventing identity disclosure via indistinguishability. |

| Protection Level | Higher protection by addressing attribute disclosure risks. | Moderate protection, primarily against identity disclosure. |

| Implementation Complexity | More complex due to distribution distance calculations (e.g., Earth Mover's Distance). | Less complex, based on grouping records into anonymity sets. |

| Use Cases | Data publishing scenarios with high privacy needs for sensitive attributes. | Basic anonymization where identity concealment is sufficient. |

| Limitations | Computationally intensive and harder to scale. | Vulnerable to attribute disclosure and background attacks. |

Introduction to Data Privacy Techniques

T-Closeness enhances data privacy by ensuring that the distribution of sensitive attributes in any anonymized group closely matches the distribution in the entire dataset, addressing limitations of K-Anonymity which only guarantees that each record is indistinguishable among at least k-1 others. K-Anonymity protects against identity disclosure by generalizing or suppressing quasi-identifiers so that individuals cannot be uniquely identified within a group of size k. Both techniques play fundamental roles in data privacy, with T-Closeness providing stronger protection against attribute disclosure by preserving the semantic similarity of sensitive data within anonymized groups.

Understanding K-Anonymity

K-Anonymity ensures data privacy by masking individual records within a dataset so that each person is indistinguishable from at least k-1 others, minimizing re-identification risks. This technique relies on generalizing or suppressing quasi-identifiers, which are attributes that could potentially identify individuals when combined with other external data sources. While effective in protecting privacy, K-Anonymity may fail against attacks that exploit attribute diversity or background knowledge, limitations that T-Closeness aims to address by maintaining the distribution of sensitive attributes within equivalence classes.

Exploring T-Closeness

T-Closeness enhances data privacy by ensuring that the distribution of sensitive attributes in each equivalence class is close to the distribution in the overall dataset, quantifying this closeness using metrics like Earth Mover's Distance. Unlike K-Anonymity, which only requires indistinguishability among k records, T-Closeness addresses attribute disclosure risks by limiting information gain about sensitive attributes. This privacy model is particularly effective against attacks that exploit skewness or background knowledge, providing stronger protection in microdata publishing and data anonymization.

Key Differences Between T-Closeness and K-Anonymity

T-Closeness enhances privacy protection by ensuring the distribution of sensitive attributes in any equivalence class is close to their distribution in the overall dataset, whereas K-Anonymity only requires that each record is indistinguishable from at least k-1 others based on quasi-identifiers. K-Anonymity is vulnerable to homogeneity and background knowledge attacks, while T-Closeness mitigates these risks by preserving the semantic similarity of sensitive data within groups. The key difference lies in T-Closeness's focus on maintaining data utility and privacy through distributional closeness, compared to K-Anonymity's simpler anonymity criterion.

Strengths of K-Anonymity

K-Anonymity effectively protects individual identities by ensuring that each person's data is indistinguishable from at least k-1 others, significantly reducing the risk of re-identification in datasets. It excels in maintaining data utility by allowing generalization and suppression techniques that preserve meaningful information while preventing unique attribute combinations. Its simplicity and widespread adoption make it a practical and scalable privacy model for various data publishing applications.

Advantages of T-Closeness

T-Closeness offers stronger privacy guarantees than K-Anonymity by ensuring that the distribution of sensitive attributes in each equivalence class closely matches the overall dataset, reducing the risk of attribute disclosure. Unlike K-Anonymity, which only hides identity by grouping identical quasi-identifiers, T-Closeness addresses attribute diversity and prevents homogeneity attacks. This makes T-Closeness particularly effective for protecting sensitive data in scenarios requiring both identity and attribute privacy.

Limitations and Vulnerabilities

T-Closeness addresses the limitation of K-Anonymity by ensuring the distribution of sensitive attributes in an equivalence class is close to the distribution in the overall dataset, reducing attribute disclosure risks. However, T-Closeness can be computationally intensive and may result in excessive data distortion, impacting data utility. Both models remain vulnerable to background knowledge attacks and struggle with high-dimensional datasets due to increased complexity and information loss.

Real-World Applications

T-Closeness enhances data privacy by ensuring that the distribution of sensitive attributes in any anonymized group closely mirrors the overall dataset, addressing limitations of K-Anonymity which only guarantees indistinguishability among k individuals. In healthcare data sharing, T-Closeness effectively prevents attribute disclosure, protecting patient confidentiality while maintaining data utility for research. Financial institutions implement T-Closeness to anonymize transaction records, reducing risks of sensitive information leakage beyond what K-Anonymity can achieve.

Choosing the Right Privacy Model

Choosing the right privacy model depends on the data sensitivity and the risk of attribute disclosure; k-anonymity effectively prevents identity re-identification by ensuring each record is indistinguishable among at least k individuals, while t-closeness strengthens privacy by maintaining the distribution of sensitive attributes within each equivalence class close to the overall dataset distribution. Highly sensitive datasets requiring protection against attribute disclosure benefit from t-closeness due to its focus on semantic similarity and information loss minimization. For large-scale anonymization tasks where re-identification is the primary concern, k-anonymity offers computational efficiency and simplicity compared to the more complex t-closeness model.

Conclusion and Future Perspectives

T-Closeness enhances privacy protection by maintaining the distribution of sensitive attributes within equivalence classes, addressing limitations of k-anonymity that only ensures indistinguishability without considering attribute distribution. Future research should focus on developing scalable algorithms for t-closeness that balance data utility and privacy in big data environments, alongside exploring hybrid models combining t-closeness with differential privacy for stronger guarantees. Integrating machine learning techniques to dynamically adjust anonymity parameters could further optimize privacy-preserving data publishing strategies.

T-Closeness Infographic

libterm.com

libterm.com