Remote cache improves application performance by keeping frequently requested data on a shared cache server that the application can reach faster than its primary data store, reducing latency and load on databases and backend servers. Because every application instance reads from the same cache, it also avoids duplicated work and supports scalability. Explore the rest of the article to learn how remote caching compares with local caching and when each one benefits your system's efficiency.

Table of Comparison

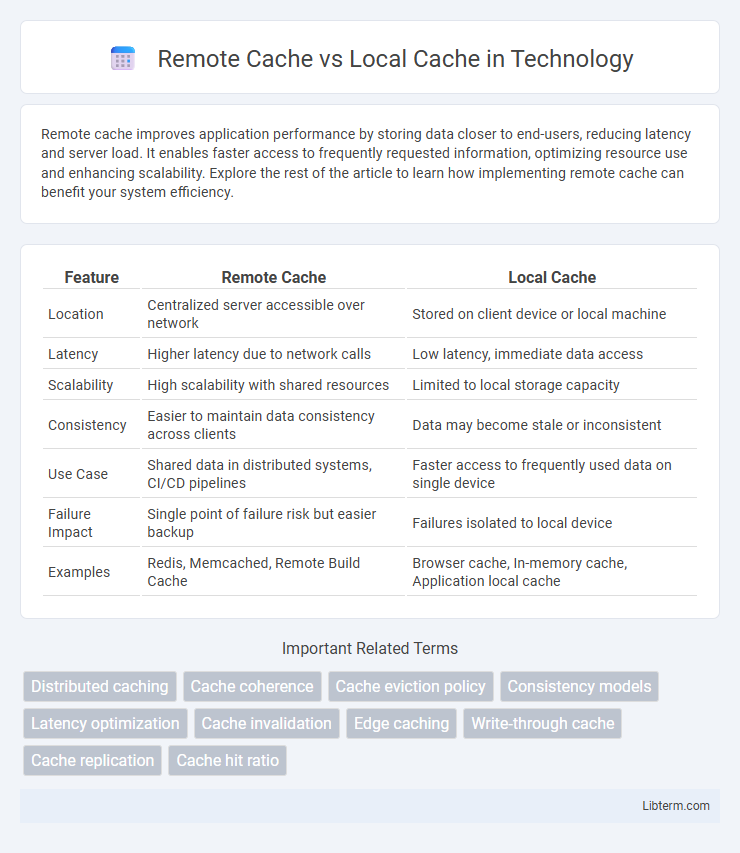

| Feature | Remote Cache | Local Cache |
|---|---|---|
| Location | Centralized server accessible over the network | Stored on the client device or local machine |
| Latency | Higher, because every lookup is a network call | Low, with immediate data access |
| Scalability | High; capacity is shared and can be spread across servers | Limited to each machine's local memory or storage |
| Consistency | Easier to keep data consistent across clients | Local copies may become stale or inconsistent |
| Use Case | Shared data in distributed systems, CI/CD pipelines | Fast access to frequently used data on a single device |
| Failure Impact | Can be a single point of failure unless replicated; easier to back up centrally | Failures are isolated to the local device |
| Examples | Redis, Memcached, remote build caches | Browser cache, in-process memory cache, application local cache |

Introduction to Caching: Remote vs Local

Remote cache stores frequently accessed data on external servers to improve scalability and data consistency across distributed systems, while local cache keeps data within the client or application memory for faster access and lower latency. Local caching enhances performance by minimizing network calls, but it risks serving stale data in dynamic environments where updates happen frequently. Remote caching relies on centralized stores such as Redis or Memcached, so every application instance reads the same cached data, which is important for multi-instance applications and cloud architectures.

How Remote Cache Works

Remote cache operates by storing frequently accessed data on a centralized server that multiple clients connect to over a network. Clients fetch data from the remote cache instead of the original data source, which reduces load on that source and lowers response times whenever a cache lookup is cheaper than the original query. Because all clients share one cache, an entry loaded by one client is immediately available to the others. For high availability, Redis can replicate data across nodes, whereas Memcached typically spreads keys across servers through client-side hashing and does not replicate them.
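The read path described above is commonly called cache-aside. The sketch below assumes a hypothetical `RemoteCacheStub` class standing in for a networked cache such as Redis (a real client would send GET/SET commands over TCP) and a hypothetical `get_product_name` helper with a caller-supplied database loader; the key format and 300-second TTL are illustrative choices.

```python
import time
from typing import Callable, Dict, Optional, Tuple


class RemoteCacheStub:
    """Hypothetical stand-in for a networked cache server such as Redis.

    A real client would send GET/SET commands over the network; this stub
    keeps entries in a dict so the example runs without a server.
    """

    def __init__(self, clock: Callable[[], float] = time.monotonic) -> None:
        self._data: Dict[str, Tuple[str, float]] = {}  # key -> (value, expires_at)
        self._clock = clock

    def get(self, key: str) -> Optional[str]:
        entry = self._data.get(key)
        if entry is None:
            return None
        value, expires_at = entry
        if self._clock() >= expires_at:
            # Expired entries behave like misses.
            del self._data[key]
            return None
        return value

    def set(self, key: str, value: str, ttl_seconds: float) -> None:
        self._data[key] = (value, self._clock() + ttl_seconds)


def get_product_name(cache: RemoteCacheStub, product_id: int,
                     load_from_db: Callable[[int], str]) -> str:
    """Cache-aside read: try the shared cache first, fall back to the database."""
    key = f"product:{product_id}:name"
    cached = cache.get(key)
    if cached is not None:
        return cached                          # hit: no database round trip
    value = load_from_db(product_id)           # miss: query the source of truth
    cache.set(key, value, ttl_seconds=300)     # populate for every other client
    return value
```

Because the cache object is shared, a second client calling `get_product_name` with the same ID gets a hit without touching the database, which is the sharing benefit described above.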

How Local Cache Works

Local cache stores frequently accessed data directly on a user's device or inside the application's own process, reducing latency by eliminating the need to retrieve information over the network. It saves a copy of the data after the first access, so subsequent requests are served quickly from memory or local storage. This improves application performance and reduces server load by avoiding redundant fetches.
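A local cache needs an eviction policy once it fills the memory it is allowed to use. The following is a minimal sketch of one common choice, least-recently-used (LRU) eviction, in a hypothetical `LocalLRUCache` class built on Python's `collections.OrderedDict`; the capacity is an assumption you would size to the process's memory budget.

```python
from collections import OrderedDict
from typing import Generic, Hashable, Optional, TypeVar

K = TypeVar("K", bound=Hashable)
V = TypeVar("V")


class LocalLRUCache(Generic[K, V]):
    """In-process cache that evicts the least recently used entry when full."""

    def __init__(self, capacity: int) -> None:
        if capacity <= 0:
            raise ValueError("capacity must be positive")
        self.capacity = capacity
        self._entries: "OrderedDict[K, V]" = OrderedDict()

    def get(self, key: K) -> Optional[V]:
        if key not in self._entries:
            return None                         # miss: caller fetches from the source
        self._entries.move_to_end(key)          # mark as most recently used
        return self._entries[key]

    def put(self, key: K, value: V) -> None:
        self._entries[key] = value
        self._entries.move_to_end(key)
        if len(self._entries) > self.capacity:
            self._entries.popitem(last=False)   # evict the least recently used entry
```

For caching a pure function's results, Python's standard library already offers `functools.lru_cache`, which applies the same policy as a decorator.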

Performance Comparison: Remote vs Local

Local cache offers the fastest retrieval because a lookup stays on the same machine, often in the same process, with no network traffic. Remote cache adds a network round trip to every lookup, but its centralized management and shared capacity suit distributed systems that need consistent data across many users. Which is faster overall depends on the workload: local cache wins on raw lookup latency, while a remote cache's larger, shared contents can yield a higher hit ratio and therefore fewer slow trips to the original data source.
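The trade-off between lookup speed and hit ratio can be made concrete with the usual average-access-time formula: average = hit ratio × hit cost + (1 − hit ratio) × miss cost, where a miss pays for the cache lookup plus the trip to the original source. The latencies and hit ratios below are illustrative assumptions, not measurements; the point is only that a slower cache with a higher hit ratio can still win on average.

```python
def expected_latency_ms(hit_ratio: float, hit_ms: float, miss_ms: float) -> float:
    """Average access time for one cache level: hits cost hit_ms, misses cost miss_ms."""
    if not 0.0 <= hit_ratio <= 1.0:
        raise ValueError("hit_ratio must be between 0 and 1")
    return hit_ratio * hit_ms + (1.0 - hit_ratio) * miss_ms


# Illustrative assumptions only, not measurements:
LOCAL_HIT_MS = 0.001   # in-process dictionary lookup
REMOTE_HIT_MS = 0.5    # network round trip to a cache server
SOURCE_MS = 10.0       # query against the original data source

# Assume the per-node local cache holds less data, so it hits less often.
local_avg = expected_latency_ms(0.80, LOCAL_HIT_MS, LOCAL_HIT_MS + SOURCE_MS)
remote_avg = expected_latency_ms(0.95, REMOTE_HIT_MS, REMOTE_HIT_MS + SOURCE_MS)
```

Under these assumed numbers the local cache averages about 2.0 ms per read and the remote cache about 1.0 ms, because each of the local cache's extra misses costs a full trip to the source. With a higher local hit ratio the result flips, so measuring real hit ratios matters more than comparing raw lookup times.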

Scalability Considerations

Remote cache enhances scalability by partitioning data across multiple cache servers, spreading load across nodes and letting all application instances share data in real time. Local cache gives each node faster access, but its capacity is bounded by that node's memory, every node holds its own duplicate copy, and those copies become harder to keep consistent as traffic and node counts grow. Hybrid approaches combine both: a small local cache serves the hottest keys, and a remote cache behind it provides shared, scalable capacity.
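The hybrid approach can be sketched as a two-level lookup: a small in-process LRU (level 1) in front of a shared cache (level 2) in front of the original data source. In this hypothetical `TwoLevelCache`, a plain dict stands in for the remote cache so the example runs without a server, and `load_from_source` is a caller-supplied function; both are assumptions for illustration.

```python
from collections import OrderedDict
from typing import Callable, Dict


class TwoLevelCache:
    """Hybrid lookup: small per-process LRU (L1) in front of a shared store (L2).

    `shared` is a plain dict standing in for a remote cache such as Redis;
    a real deployment would replace it with a network client.
    """

    def __init__(self, shared: Dict[str, str], local_capacity: int,
                 load_from_source: Callable[[str], str]) -> None:
        self.shared = shared
        self.local: "OrderedDict[str, str]" = OrderedDict()
        self.local_capacity = local_capacity
        self.load_from_source = load_from_source

    def _remember_locally(self, key: str, value: str) -> None:
        self.local[key] = value
        self.local.move_to_end(key)
        if len(self.local) > self.local_capacity:
            self.local.popitem(last=False)       # evict least recently used

    def get(self, key: str) -> str:
        if key in self.local:                    # L1 hit: no network call
            self.local.move_to_end(key)
            return self.local[key]
        value = self.shared.get(key)             # L2 lookup: one network round trip
        if value is None:
            value = self.load_from_source(key)   # miss in both levels
            self.shared[key] = value             # share with other instances
        self._remember_locally(key, value)
        return value

    def invalidate(self, key: str) -> None:
        """Drop the key from this instance's L1 and from the shared L2."""
        self.local.pop(key, None)
        self.shared.pop(key, None)
```

Invalidating a key clears this instance's local copy and the shared copy, but other instances keep their local copies until they are evicted. That is the consistency gap mentioned above; real hybrid setups usually close it with a short local TTL or by broadcasting invalidation messages to every instance.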

Data Consistency and Synchronization

Remote cache stores data on a centralized server accessible by multiple clients, which makes it easier to keep data consistent across distributed systems at the cost of network latency. Local cache resides on the client, offering faster retrieval but requiring extra mechanisms to stay consistent with the source of truth. Remote-cache systems typically rely on strategies such as explicit cache invalidation or write-through (updating the cache whenever the source is written), whereas local caches often use time-to-live (TTL) expiry or stale-while-revalidate, which serves a slightly old value while fetching a fresh one in the background.
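Stale-while-revalidate (also defined for HTTP as a `Cache-Control` extension in RFC 5861) can be sketched for an in-process cache as below. The class name `StaleWhileRevalidateCache`, the `fresh_for`/`stale_for` windows, and the injectable `clock` are hypothetical choices made for this example; the background refresh runs on a `concurrent.futures.ThreadPoolExecutor`.

```python
import threading
import time
from concurrent.futures import Future, ThreadPoolExecutor
from typing import Callable, Dict, Optional, Tuple


class StaleWhileRevalidateCache:
    """Local cache whose entries go fresh -> stale -> expired.

    fresh_for: seconds an entry is served with no refresh.
    stale_for: extra seconds a stale entry is still served while a
               background refresh fetches a new value.
    """

    def __init__(self, fetch: Callable[[str], str], fresh_for: float,
                 stale_for: float, clock: Callable[[], float] = time.monotonic,
                 executor: Optional[ThreadPoolExecutor] = None) -> None:
        self.fetch = fetch
        self.fresh_for = fresh_for
        self.stale_for = stale_for
        self.clock = clock
        self.executor = executor or ThreadPoolExecutor(max_workers=1)
        self._entries: Dict[str, Tuple[str, float]] = {}  # key -> (value, fetched_at)
        self._refreshing: Dict[str, Future] = {}           # keys with a refresh in flight
        self._lock = threading.Lock()

    def get(self, key: str) -> str:
        with self._lock:
            entry = self._entries.get(key)
            if entry is not None:
                value, fetched_at = entry
                age = self.clock() - fetched_at
                if age < self.fresh_for:
                    return value  # fresh: serve directly
                if age < self.fresh_for + self.stale_for:
                    # Stale: serve the old value now, refresh at most once per key.
                    if key not in self._refreshing:
                        self._refreshing[key] = self.executor.submit(self._refresh, key)
                    return value
        # Missing or past the stale window: the caller waits for a fresh fetch.
        value = self.fetch(key)
        with self._lock:
            self._entries[key] = (value, self.clock())
        return value

    def _refresh(self, key: str) -> None:
        try:
            value = self.fetch(key)
            with self._lock:
                self._entries[key] = (value, self.clock())
        finally:
            with self._lock:
                self._refreshing.pop(key, None)
```

Only values older than `fresh_for + stale_for` block the caller; within the stale window the caller gets the old value immediately, and at most one refresh per key is in flight.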

Security Implications

Remote cache can expose sensitive data to network eavesdropping and unauthorized access if traffic is not encrypted (for example with TLS) and clients are not authenticated, increasing the risk of data breaches. Local cache avoids network exposure by keeping data on the client device, but it can be susceptible to physical access attacks or malware targeting the device. Employing encryption, access controls, and regular audits is critical for securing both caching methods and protecting the integrity of cached data.
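As one example of protecting cached data integrity, values written to a shared remote cache can carry a message authentication code so that a tampered entry is rejected instead of trusted. The sketch below uses Python's standard `hmac` and `hashlib` modules; the `seal`/`unseal` helpers and the hard-coded `SECRET_KEY` are hypothetical, and a real deployment would load the key from a secret manager. It provides integrity only: confidentiality still requires encrypting traffic (for example with TLS) and, if needed, the stored values.

```python
import hashlib
import hmac
from typing import Optional

# Hypothetical secret for illustration only; load a random key from a secret manager instead.
SECRET_KEY = b"replace-with-a-random-32-byte-secret"
TAG_LEN = hashlib.sha256().digest_size  # 32 bytes


def _tag(cache_key: str, value: bytes, secret: bytes) -> bytes:
    key_bytes = cache_key.encode("utf-8")
    # Bind the tag to the cache key (length-prefixed) so a valid entry
    # cannot be copied under a different key without detection.
    message = len(key_bytes).to_bytes(4, "big") + key_bytes + value
    return hmac.new(secret, message, hashlib.sha256).digest()


def seal(cache_key: str, value: bytes, secret: bytes = SECRET_KEY) -> bytes:
    """Prefix the value with an HMAC-SHA256 tag before writing it to the cache."""
    return _tag(cache_key, value, secret) + value


def unseal(cache_key: str, blob: bytes, secret: bytes = SECRET_KEY) -> Optional[bytes]:
    """Return the value if its tag verifies; otherwise None, to be treated as a miss."""
    if len(blob) < TAG_LEN:
        return None
    tag, value = blob[:TAG_LEN], blob[TAG_LEN:]
    if not hmac.compare_digest(tag, _tag(cache_key, value, secret)):  # constant-time
        return None
    return value
```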

Cost-Benefit Analysis

Remote cache reduces local resource consumption and improves data consistency across distributed systems but incurs higher latency and increased network costs. Local cache offers faster data access with minimal network overhead, enhancing application responsiveness but risks stale data and higher memory usage per node. Optimal cost-benefit depends on workload patterns, with remote cache favoring consistency in large-scale systems and local cache benefiting low-latency needs in isolated environments.
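The memory side of this trade-off is simple arithmetic if you assume, as an upper bound, that each local cache eventually holds the whole working set: local caching costs roughly nodes × working-set size, while a remote cache costs roughly replicas × working-set size. The function below, `cache_memory_gb`, is a hypothetical helper for that estimate and ignores per-entry overhead.

```python
from typing import Dict


def cache_memory_gb(nodes: int, working_set_gb: float, replicas: int = 1) -> Dict[str, float]:
    """Rough upper-bound memory footprint of caching one working set locally vs remotely.

    Local: every application node keeps its own full copy.
    Remote: one shared copy per cache replica, regardless of application node count.
    """
    if nodes < 1 or replicas < 1:
        raise ValueError("nodes and replicas must be at least 1")
    return {
        "local_total_gb": nodes * working_set_gb,
        "remote_total_gb": replicas * working_set_gb,
    }
```

For example, 20 application nodes caching a 2 GB working set need up to 40 GB of memory in total with local caches, versus 4 GB for a remote cache kept as two replicas; the remote option trades that memory for a network hop on every lookup.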

Use Cases for Remote Cache

Remote cache is ideal for distributed systems that share data across multiple nodes, such as microservices architectures and cloud applications, where fast access and consistency are essential. It supports scalability and fault tolerance: because cached data lives on the cache servers (for example Redis or Memcached) rather than in client memory, it survives when individual clients fail or restart. Remote caches excel at centralized session management, real-time analytics, and high-throughput data processing across geographically dispersed environments.
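Centralized session management is a typical remote-cache workload: any application instance can serve any request because session data lives in the shared cache, not in one server's memory. The sketch below is a hypothetical `SharedSessionStore` in which a dict stands in for the remote cache; with Redis, for instance, each session could be a key whose expiry is refreshed with `EXPIRE` on every access. The idle timeout and sliding-expiration policy are assumptions.

```python
import secrets
import time
from typing import Callable, Dict, Optional, Tuple


class SharedSessionStore:
    """Sketch of centralized session management on a shared cache.

    `_data` stands in for a remote cache that every application instance
    can reach; sessions expire after `idle_timeout` seconds without access.
    """

    def __init__(self, idle_timeout: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.idle_timeout = idle_timeout
        self.clock = clock
        self._data: Dict[str, Tuple[dict, float]] = {}  # session_id -> (data, expires_at)

    def create(self, user_id: str) -> str:
        session_id = secrets.token_urlsafe(32)  # unguessable identifier
        self._data[session_id] = ({"user_id": user_id}, self.clock() + self.idle_timeout)
        return session_id

    def get(self, session_id: str) -> Optional[dict]:
        entry = self._data.get(session_id)
        if entry is None:
            return None
        data, expires_at = entry
        now = self.clock()
        if now >= expires_at:
            del self._data[session_id]       # idle too long: session is gone
            return None
        # Sliding expiration: each access extends the idle timeout.
        self._data[session_id] = (data, now + self.idle_timeout)
        return data
```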

Use Cases for Local Cache

Local cache is ideal for applications requiring lightning-fast data retrieval and low latency, such as gaming, real-time analytics, and mobile apps where network connectivity is intermittent or slow. It supports storing frequently accessed data close to the client, reducing the need for remote calls and improving performance in latency-sensitive scenarios. Local cache is also beneficial in edge computing environments where data must be processed quickly at the source without relying on centralized servers.
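For apps with intermittent connectivity, a local cache can act as a fallback that keeps the last successfully fetched value and serves it when the network call fails. The hypothetical `OfflineFallbackCache` below assumes the caller's `fetch_remote` function raises Python's built-in `ConnectionError` when offline; a mobile app would usually persist these values to disk rather than keep them only in memory.

```python
from typing import Callable, Dict


class OfflineFallbackCache:
    """Keep the last successfully fetched value so reads survive network outages."""

    def __init__(self, fetch_remote: Callable[[str], str]) -> None:
        self.fetch_remote = fetch_remote
        self._last_known: Dict[str, str] = {}

    def get(self, key: str) -> str:
        try:
            value = self.fetch_remote(key)
        except ConnectionError:
            if key in self._last_known:
                return self._last_known[key]   # serve the possibly stale local copy
            raise                              # nothing cached: surface the outage
        self._last_known[key] = value          # remember the latest good value
        return value
```

This variant tries the network first and falls back to the local copy; a latency-sensitive app might instead read the local copy first and refresh it in the background, trading freshness for speed.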

Remote Cache Infographic

libterm.com
