Dataflow is a programming model designed to handle large-scale data processing by enabling parallel and distributed computation through a series of transformations applied to data streams. It efficiently manages the flow of data between operations, optimizing resource use and reducing latency in complex data pipelines. Explore this article to discover how dataflow can enhance Your data processing capabilities and streamline analytics workflows.

Table of Comparison

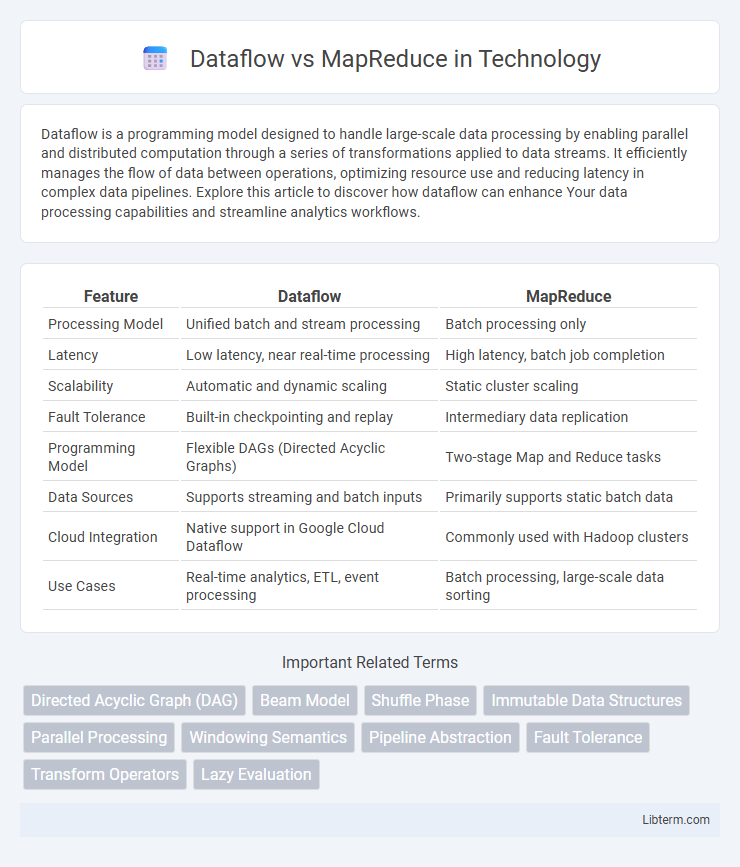

| Feature | Dataflow | MapReduce |

|---|---|---|

| Processing Model | Unified batch and stream processing | Batch processing only |

| Latency | Low latency, near real-time processing | High latency, batch job completion |

| Scalability | Automatic and dynamic scaling | Static cluster scaling |

| Fault Tolerance | Built-in checkpointing and replay | Intermediary data replication |

| Programming Model | Flexible DAGs (Directed Acyclic Graphs) | Two-stage Map and Reduce tasks |

| Data Sources | Supports streaming and batch inputs | Primarily supports static batch data |

| Cloud Integration | Native support in Google Cloud Dataflow | Commonly used with Hadoop clusters |

| Use Cases | Real-time analytics, ETL, event processing | Batch processing, large-scale data sorting |

Introduction to Dataflow and MapReduce

Dataflow is a unified programming model designed for both batch and stream data processing, enabling flexible execution graphs and dynamic work rebalancing. MapReduce is a programming paradigm that processes large-scale data by dividing tasks into map and reduce functions, primarily optimized for batch processing. Dataflow offers improved scalability and fault tolerance through its graph-based model, while MapReduce relies on fixed data shuffling stages for distributed computation.

Core Concepts and Architectures

Dataflow employs a stream processing architecture where data is represented as a continuous, potentially unbounded series of events processed in parallel, enabling low-latency and real-time analytics. MapReduce follows a batch processing paradigm divided into two main phases: the Map function processes input data into intermediate key-value pairs, and the Reduce function aggregates these pairs to produce the final output, optimized for large-scale distributed data processing. Dataflow's architecture supports dynamic work rebalancing and stateful processing, whereas MapReduce relies on rigid task execution stages with no native support for iterative or streaming computations.

Programming Models Compared

Dataflow and MapReduce programming models both process large-scale data sets but differ in their design and execution paradigms. Dataflow uses directed acyclic graphs (DAGs) to represent complex, dynamic pipelines supporting both batch and stream processing, enabling greater flexibility in data transformations and parallelism. MapReduce follows a simpler, rigid two-phase model of map and reduce functions, primarily optimized for batch processing of static data, which may limit adaptability and efficiency compared to Dataflow's more expressive approach.

Data Processing Workflows

Dataflow enables flexible, real-time data processing workflows through unified batch and stream processing, which enhances scalability and efficiency compared to the rigid, batch-only design of MapReduce. It uses directed acyclic graphs (DAGs) to optimize execution pipelines, allowing complex transformations and windowing across diverse data sources. This architectural difference makes Dataflow ideal for dynamic workflows requiring low-latency insights, while MapReduce remains suited for large-scale, offline batch analytics.

Performance and Scalability

Dataflow outperforms MapReduce by enabling low-latency stream processing alongside batch jobs, utilizing dynamic work rebalancing to optimize resource usage. Its autoscaling capabilities adjust compute resources in real-time, ensuring consistent performance under varying workloads, unlike the static node allocation in MapReduce. Dataflow's horizontal scalability efficiently handles massive datasets by breaking tasks into smaller, parallel operations, surpassing the batch-oriented, disk-bound nature of MapReduce that often leads to higher latency and limited flexibility.

Fault Tolerance Mechanisms

Dataflow and MapReduce employ distinct fault tolerance mechanisms tailored to their architectures. MapReduce relies on re-executing failed map or reduce tasks by tracking intermediate outputs through local disk storage, enabling deterministic task recovery. Dataflow frameworks implement checkpointing and state snapshots distributed across nodes, allowing finely-grained recovery and continuous processing with minimal recomputation after failures.

Use Cases and Applications

Dataflow excels in real-time data processing scenarios such as stream analytics, event-driven applications, and continuous ETL pipelines, making it ideal for use cases requiring low latency and dynamic scaling. MapReduce is best suited for batch processing tasks, including large-scale data transformations, offline analytics, and complex computations on massive datasets like log analysis and data warehousing. Organizations often choose Dataflow for interactive, low-latency workloads and MapReduce for straightforward, high-throughput batch jobs in big data ecosystems.

Flexibility and Extensibility

Dataflow offers greater flexibility and extensibility compared to MapReduce by supporting a wide range of programming languages, custom transformations, and advanced windowing techniques suitable for both batch and streaming data processing. Unlike MapReduce's rigid two-stage map and reduce structure, Dataflow enables complex, multi-stage pipelines with dynamic scaling and stateful processing capabilities that adapt to diverse data workloads. This extensibility allows developers to design more intricate workflows and integrate with other cloud-native services for enhanced analytics and real-time insights.

Ecosystem and Tool Support

Dataflow offers a unified programming model that supports batch and stream processing with robust SDKs in Java, Python, and SQL, integrated seamlessly with Google Cloud services like BigQuery and Pub/Sub, enhancing real-time analytics and ETL workflows. MapReduce, pioneered by Hadoop, relies on a well-established ecosystem including HDFS for distributed storage, YARN for resource management, and tools like Hive and Pig for querying and data transformation, making it ideal for large-scale batch processing. Dataflow's compatibility with Apache Beam enables portability across multiple runners such as Flink and Spark, whereas MapReduce's maturity provides extensive community support and integration within traditional Hadoop clusters.

Choosing Between Dataflow and MapReduce

Choosing between Dataflow and MapReduce depends on the specific requirements of your data processing tasks; Dataflow offers real-time stream processing capabilities with rich windowing and event-time features, while MapReduce excels in batch processing with simpler, fault-tolerant design. Dataflow provides dynamic work rebalancing and auto-scaling, making it suitable for complex, large-scale pipelines, whereas MapReduce is better suited for straightforward, batch-oriented workloads on stable infrastructure. Consider the need for low-latency processing, ease of pipeline management, and integration with cloud-native services when deciding which framework to use.

Dataflow Infographic

libterm.com

libterm.com